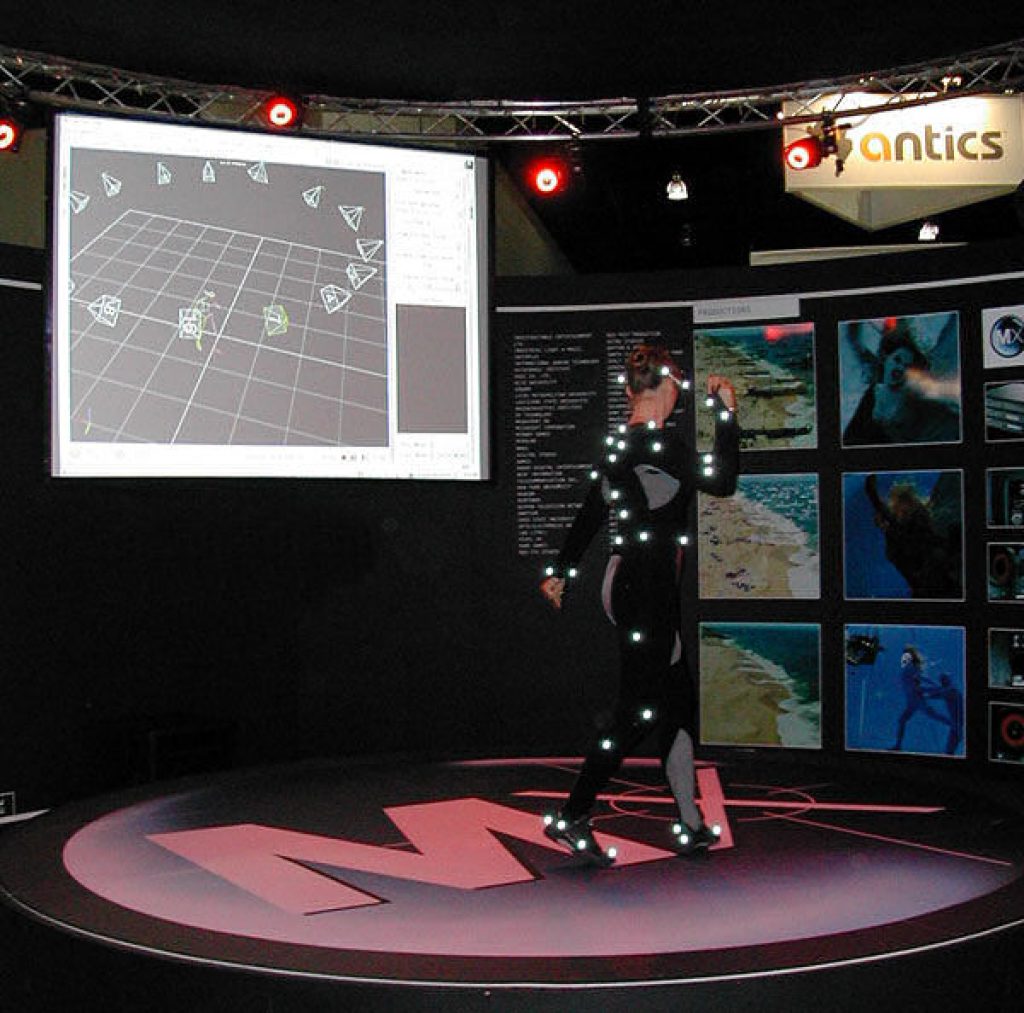

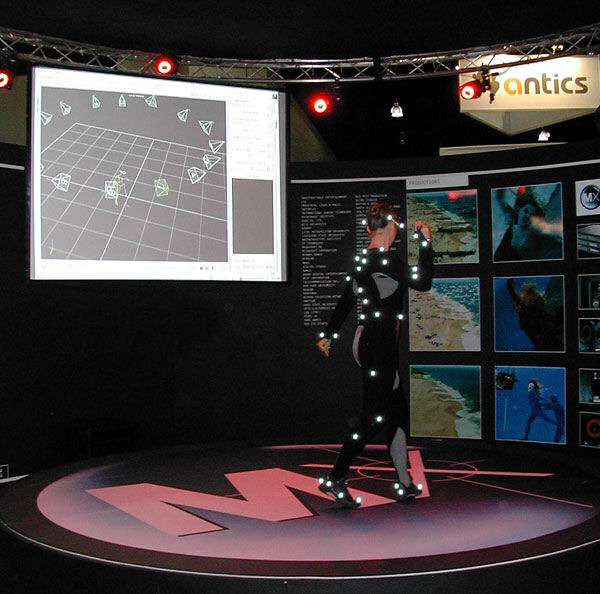

I still remember my first SIGGRAPH, in 1999. At it, I auditioned my first motion capture system, and at first I admittedly wasn't very impressed. After all, the model employed in the demo was clad in a somewhat silly looking skin-tight outfit, complete with what looked like dozens of cotton balls attached at various points on his body.

But as soon as I glanced at the computer monitor to the side of the demo floor, my jaw dropped and I immediately 'got it.' I'd seen plenty of unrealistic computer algorithm-simulated humanoid renderings in computer games, Hollywood movies, and the like. Here was what seemed to be a far superior alternative approach; capture the motion of real-life humanoids, then transform it into a wire-frame model and overlay the polygons and textures of the desired cyber-human's body.

I was so impressed that I devoted an EDN Magazine cover story to the topic that December. Hollywood apparently agrees, and the technology's progression in recent years has been quite stunning. Consider, for example, the realism improvements from 2004's The Polar Express through 2007's Beowulf and culminating with 2009's Avatar. This week marks another SIGGRAPH show, and not surprisingly, motion capture breakthroughs are among the news coming out of Vancouver.

Back in late 1999, I wrote:

The next challenge for motion-capture-system vendors is to dispense with the need for sensors. In this scenario, the image-processing subsystem would directly infer skeletal motion characteristics from the captured video images using sophisticated edge-detection algorithms that, for example, determine where a chin ends and a neck begins. Oxford Metrics is working on such a system but believes that production is approximately four years away.

As with many aspects of technology, the fulfillment of that aspiration took longer than initially expected, but Organic Motion thinks it finally has the problem licked. The company's OpenStage 2.0 system requires neither sensor-clogged outfits nor specialty backdrops, according to the developer; it comprises eight to 24 high-speed cameras, covers regions from four to 30 square feet, and costs $40,000 to $80,000 USD. For more information, check out the following additional coverage:

· Engadget, and

Next, there's Microsoft Research, which according to DailyTech's coverage has developed a system that "is capable of capturing facial performances matching the acquisition speed of motion capture system and the spatial resolution of static face scans." In this particular case, the company employs a marker-based motion capture technique, which it subsequently blends with 3D facial scan data to "reconstruct high-fidelity 3D facial performances." If, as you read these words, you're remembering my Avatar Kinect coverage of two weeks back, I'd agree that you're probably on the right track.

Finally, consider that a conventional motion capture system involves multiple cameras simultaneously capturing, from different angles, a model on a custom set wearing numerous passive reflective sensors. What if, on the other hand, you turned the process around, with the model wearing multiple active image sensor-based camera systems? That's the question that Carnegie Mellon University researchers asked, and in partnership with Disney Research, they've come up with a compelling approach that's usage environment-agnostic. Quoting from the summary of the paper they're presenting this week:

We present the theory and practice of using body-mounted cameras to reconstruct the motion of a subject. Outward-looking cameras are attached to the limbs of the subject, and the joint angles and root pose are estimated through non-linear optimization. The optimization objective function incorporates terms for image matching error and temporal continuity of motion. Structure-from-motion is used to estimate the skeleton structure and to provide initialization for the non-linear optimization procedure. Global motion is estimated and drift is controlled by matching the captured set of videos to reference imagery. We show results in settings where capture would be difficult or impossible with traditional motion capture systems, including walking outside and swinging on monkey bars.

Make sure you hit the above link for more information, including a PDF copy of the paper, along with a compelling demonstration video (QuickTime MOV). And once again, here's some additional coverage for your perusal:

· Gizmodo

· Slashdot, and