Technologies


The listing below showcases the most recently published content associated with various AI and visual intelligence functions.


“Improved Navigation Assistance for the Blind via Real-time Edge AI,” a Presentation from Tesla

Aishwarya Jadhav, Software Engineer in the Autopilot AI Team at Tesla, presents the “Improved Navigation Assistance for the Blind via Real-time Edge AI” tutorial at the May 2024 Embedded Vision Summit. In this talk, Jadhav presents recent work on AI Guide Dog, a groundbreaking research project aimed at providing navigation…

“Introduction to Modern Radar for Machine Perception,” a Presentation from Sensor Cortek

Robert Laganière, Professor at the University of Ottawa and CEO of Sensor Cortek, presents the “Introduction to Modern Radar for Machine Perception” tutorial at the May 2024 Embedded Vision Summit. In this presentation, Laganière provides an introduction to radar (short for radio detection and ranging) for machine perception. Radar is…

2023 Milestone: More than 760,000 LiDAR Systems in Passenger Cars. Which Technology Leads the Market?

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. Hesai, Seyond, RoboSense, and Valeo: Yole Group’s analysts invite you to dive deep into leading LiDAR tech. OUTLINE: LiDAR can achieve an angular resolution of 0.05°, offering more precise object detection and

Orchestrating Innovation at Scale with NVIDIA Maxine and Texel

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. The NVIDIA Maxine AI developer platform is a suite of NVIDIA NIM microservices, cloud-accelerated microservices, and SDKs that offer state-of-the-art features for enhancing real-time video and audio. NVIDIA partners use Maxine features to create better virtual interaction

“Removing Weather-related Image Degradation at the Edge,” a Presentation from Rivian

Ramit Pahwa, Machine Learning Scientist at Rivian, presents the “Removing Weather-related Image Degradation at the Edge” tutorial at the May 2024 Embedded Vision Summit. For machines that operate outdoors, such as autonomous cars and trucks, image quality degradation due to weather conditions presents a significant challenge. For example, snow, rainfall and raindrops…

The Two Long-wave Infrared Innovations Required for a US$500M Market

Since thermal cameras have been an automotive technology for 25 years and are still not prevalent, it’s clear that developments are required for them to become a common choice in ADAS sensor suites. Although over 1.2 million on-road vehicles have thermal LWIR camera technology installed, this is 0.08% of the estimated total of 1.5 billion

“Seeing the Invisible: Unveiling Hidden Details through Advanced Image Acquisition Techniques,” a Presentation from Qualitas Technologies

Raghava Kashyapa, CEO of Qualitas Technologies, presents the “Seeing the Invisible: Unveiling Hidden Details through Advanced Image Acquisition Techniques” tutorial at the May 2024 Embedded Vision Summit. In this presentation, Kashyapa explores how advanced image acquisition techniques reveal previously unseen information, improving the ability of algorithms to provide valuable insights.…

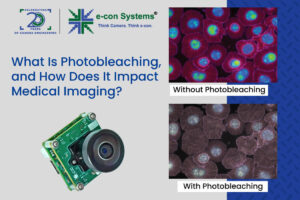

What Is Photobleaching, and How Does It Impact Medical Imaging?

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Photobleaching is a major challenge in medical imaging, as it compromises the accuracy of fluorescent-based techniques. See how photobleaching works and find out how you can overcome this challenge to help medical applications enhance the

“Omnilert Gun Detect: Harnessing Computer Vision to Tackle Gun Violence,” a Presentation from Omnilert

Chad Green, Director of Artificial Intelligence at Omnilert, presents the “Omnilert Gun Detect: Harnessing Computer Vision to Tackle Gun Violence” tutorial at the May 2024 Embedded Vision Summit. In the United States in 2023, there were 658 mass shootings, and 42,996 people lost their lives to gun violence. Detecting and…

Redefining Hybrid Meetings With AI-powered 360° Videoconferencing

This blog post was originally published at Ambarella’s website. It is reprinted here with the permission of Ambarella. The global pandemic catalyzed a boom in videoconferencing that continues to grow as companies embrace hybrid work models and seek more sustainable approaches to business communication with less travel. Now, with videoconferencing becoming a cornerstone of modern