Cameras and Sensors for Embedded Vision

While analog cameras are still used in many vision systems, this section focuses on digital image sensors—usually either a CCD or CMOS sensor array that operates with visible light. However, this definition shouldn’t constrain the technology analysis, since many vision systems can also sense other types of energy (IR, sonar, etc.).

The camera housing has become the entire chassis for a vision system, leading to the emergence of “smart cameras” with all of the electronics integrated. By most definitions, a smart camera supports computer vision, since the camera is capable of extracting application-specific information. However, as both wired and wireless networks get faster and cheaper, there still may be reasons to transmit pixel data to a central location for storage or extra processing.

A classic example is cloud computing using the camera on a smartphone. The smartphone could be considered a “smart camera” as well, but sending data to a cloud-based computer may reduce the processing performance required on the mobile device, lowering cost, power, weight, etc. For a dedicated smart camera, some vendors have created chips that integrate all of the required features.
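To get a rough feel for the trade-off between processing on the camera and transmitting pixel data, the sketch below estimates the raw (uncompressed) data rate of a video stream. The function name and the example resolution, bit depth, and frame rate are illustrative assumptions, not figures from any particular camera; real systems usually compress video, so actual link requirements are often much lower.

```python
def raw_video_bitrate_mbps(width, height, bits_per_pixel, fps):
    """Return the uncompressed video data rate in megabits per second.

    Uses 1 Mbit = 1,000,000 bits. Ignores blanking intervals,
    protocol overhead, and any compression.
    """
    return width * height * bits_per_pixel * fps / 1e6


# Hypothetical stream: 1920 x 1080 pixels, 24 bits per pixel, 30 frames/s
rate = raw_video_bitrate_mbps(1920, 1080, 24, 30)
print(f"Raw data rate: {rate:.1f} Mbit/s")
```

Comparing a number like this against the available network throughput is one simple way to judge whether sending pixels to a central location is practical, or whether the camera should extract the application-specific information locally and transmit only the results.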

Cameras

Until recently, many people would imagine a camera for computer vision as the outdoor security camera shown in this picture. There are countless vendors supplying these products, and many more supplying indoor cameras for industrial applications. Don’t forget about simple USB cameras for PCs. And don’t overlook the billion or so cameras embedded in the mobile phones of the world. These cameras’ speed and quality have risen dramatically, with support for 10+ megapixel sensors and sophisticated image processing hardware.

Consider, too, another important factor for cameras: the rapid adoption of 3D imaging using stereo optics, time-of-flight, and structured light technologies. Trendsetting cell phones now even offer this technology, as do latest-generation game consoles. Look again at the picture of the outdoor camera and consider how much change is about to happen to computer vision markets as new camera technologies become pervasive.
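As a rough illustration of how two of the 3D techniques mentioned above recover distance, the sketch below uses the standard textbook relations. It assumes an idealized, rectified stereo pair with a pinhole camera model, and an ideal direct time-of-flight measurement. The function names and parameters are hypothetical and do not describe any specific product.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def stereo_depth_m(focal_length_px, baseline_m, disparity_px):
    """Depth from stereo disparity: Z = f * B / d.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centers, in meters
    disparity_px:    horizontal pixel shift of a point between the two views
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


def tof_distance_m(round_trip_time_s):
    """Distance from time of flight: d = c * t / 2.

    The light travels to the object and back, hence the division by two.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# Illustrative values only
print(stereo_depth_m(focal_length_px=700, baseline_m=0.1, disparity_px=35))
print(tof_distance_m(round_trip_time_s=10e-9))
```

Structured light systems rely on a similar triangulation idea to stereo, with a projected pattern taking the place of the second camera.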

Sensors

Charge-coupled device (CCD) sensors have some advantages over CMOS image sensors, mainly because the electronic shutter of CCDs traditionally offers better image quality with higher dynamic range and resolution. However, CMOS sensors now account for more than 90% of the market, heavily influenced by camera phones and driven by the technology’s lower cost, better integration, and speed.

Airy3D and Lattice to Showcase Compact, Integrated Humanoid and Robotic 3D Vision Demo at Embedded World 2026

Montreal, Canada — March 4, 2026 — Airy3D today announced a joint demonstration with Lattice Semiconductor highlighting a compact and compute-efficient 3D vision solution for humanoids and advanced robotics, which will be on display at Embedded World 2026. The demo combines Airy3D’s DepthIQ™ technology with a compact, low-power Lattice CrossLink™-NX FPGA to enable high-quality depth

Multi-Sensor IoT architecture: inside the stack and how to scale it

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. What Is a Multi-Sensor Stack, Really? At its core, a multi-sensor stack is a layered system where multiple sensor types (visual, thermal, acoustic, motion, environmental) work in parallel to generate a contextual understanding of the world around

From ADAS to Robotaxi: How to Overcome the Major Vision Challenges

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Key Takeaways Why does robotaxi vision need more than task-driven ADAS sensing? Impact of long-duty operation and changing lighting on perception reliability Challenges faced across vehicles, cities, and operating conditions How visual data continuity affects

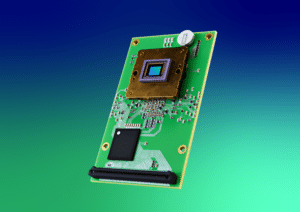

Vision Components unveils all-in-one VC EvoCam with MediaTek processor

Ettlingen, February 18, 2026 — Vision Components is presenting the VCSBC EvoCam for the first time at embedded world, a new generation of all-in-one intelligent board-level cameras featuring the MediaTek Genio 510 processor. Measuring tiny 65 x 40 mm, the camera is equipped with all necessary components for image acquisition and image processing, making the

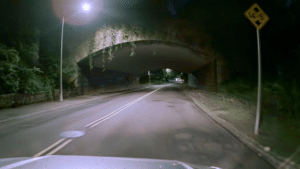

Pushing the Limits of HDR with Ubicept

This blog post was originally published at Ubicept’s website. It is reprinted here with the permission of Ubicept. Executive summary Ubicept’s SPAD-based system offers consistent HDR performance in nighttime driving conditions, preserving shadow and highlight detail where conventional cameras fall short. Unlike traditional HDR techniques which often struggle with motion artifacts, Ubicept Photon Fusion maintains

e-con Systems Launches DepthVista Helix 3D CW iToF Camera for Robotics and Industrial Automation

California & Chennai (February 17, 2026): e-con Systems, a global leader in embedded vision solutions, launches DepthVista Helix 3D CW iToF Camera, a high-performance depth camera engineered to deliver reliable and accurate 3D perception for wide range of industrial robotics applications, including Autonomous Mobile Robots (AMRs), pick-and-place, bin-picking, palletization and depalletization robots, industrial safety and automation, and

Sony Pregius IMX264 vs. IMX568: A Detailed Sensor Comparison Guide

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. The image sensor is an important component in defining the camera’s image quality. Many real-world applications pushed for smaller pixel sizes to increase resolution in compact form factors. To address this demand, Sony has been improving

Upcoming Webinar on CSI-2 over D-PHY & C-PHY

On February 24, 2026, at 9:00 am PST (12:00 pm EST) MIPI Alliance will deliver a webinar “MIPI CSI-2 over D-PHY & C-PHY: Advancing Imaging Conduit Solutions” From the event page: MIPI CSI-2®, together with MIPI D-PHY™ and C-PHY™ physical layers, form the foundation of image sensor solutions across a wide range of markets, including

What’s New in MIPI Security: MIPI CCISE and Security for Debug

This blog post was originally published at MIPI Alliance’s website. It is reprinted here with the permission of MIPI Alliance. As the need for security becomes increasingly more critical, MIPI Alliance has continued to broaden its portfolio of standardized solutions, adding two more specifications in late 2025, and continuing work on significant updates to the MIPI Camera

What Sensor Fusion Architecture Offers for NVIDIA Orin NX-Based Autonomous Vision Systems

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Key Takeaways Why multi-sensor timing drift weakens edge AI perception How GNSS-disciplined clocks align cameras, LiDAR, radar, and IMUs Role of Orin NX as a central timing authority for sensor fusion Operational gains from unified time-stamping