Software for Embedded Vision

Accelerating Product Development in the Era of Physical AI

This video was originally published at Peridio’s website. It is reprinted here with the permission of Peridio. The embedded world is undergoing its biggest transformation in a generation. AI workloads are now moving into the physical world — into cameras, robots, tractors, and drones — and edge devices are evolving into intelligent agents. Yet the

Why On-device AI Matters

This blog post was originally published at ENERZAi’s website. It is reprinted here with the permission of ENERZAi. Hello! I’m Minwoo Son from ENERZAi’s Business Development team. Through several posts so far, we’ve shared ENERZAi’s full-stack software capabilities for delivering high-performance on-device AI — including Optimium, our proprietary AI compiler that encapsulates our optimization expertise;

Upcoming Webinar on LLM-driven Driver Development

On March 19, 2026, at 1:00 pm EDT (10:00 am PDT), Boston.AI will deliver a webinar, “Intelligent Driver Development with LLM Context Engineering.” From the event page: Developing even simple sensor drivers can consume valuable engineering time, requiring manual transcription of registers from datasheets into code—an error-prone and repetitive process. In this webinar, you’ll

Ambarella to Showcase “The Ambarella Edge: From Agentic to Physical AI” at Embedded World 2026

Enabling developers to build, integrate, and deploy edge AI solutions at scale. SANTA CLARA, Calif. — Ambarella, Inc. (NASDAQ: AMBA), an edge AI semiconductor company, today announced that it will exhibit at Embedded World 2026, taking place March 10-12 in Nuremberg, Germany. At the show, Ambarella’s theme, “The Ambarella Edge: From Agentic to Physical AI,”

Production-Ready, Full-Stack Edge AI Solutions Turn Microchip’s MCUs and MPUs Into Catalysts for Intelligent Real-Time Decision-Making

Chandler, Ariz., February 10, 2026 — A major next step for artificial intelligence (AI) and machine learning (ML) innovation is moving ML models from the cloud to the edge for real-time inferencing and decision-making applications in today’s industrial, automotive, data center and consumer Internet of Things (IoT) networks. Microchip Technology (Nasdaq: MCHP) has extended its edge AI offering

Accelerating next-generation automotive designs with the TDA5 Virtualizer™ Development Kit

This blog post was originally published at Texas Instruments’ website. It is reprinted here with the permission of Texas Instruments. Continuous innovation in high-performance, power-efficient systems-on-a-chip (SoCs) is enabling safer, smarter and more autonomous driving experiences in even more vehicles. As another big step forward, Texas Instruments and Synopsys developed a Virtualizer Development Kit™ (VDK) for the

Into the Omniverse: OpenUSD and NVIDIA Halos Accelerate Safety for Robotaxis, Physical AI Systems

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. NVIDIA Editor’s note: This post is part of Into the Omniverse, a series focused on how developers, 3D practitioners and enterprises can transform their workflows using the latest advancements in OpenUSD and NVIDIA Omniverse. New NVIDIA safety

Driving the Future of Automotive AI: Meet RoX AI Studio

This blog post was originally published at Renesas’ website. It is reprinted here with the permission of Renesas. In today’s automotive industry, onboard AI inference engines drive numerous safety-critical Advanced Driver Assistance Systems (ADAS) features, all of which require consistent, high-performance processing. Given that AI model engineering is inherently iterative (numerous cycles of ‘train, validate, and

Production Software Meets Production Hardware: Jetson Provisioning Now Available with Avocado OS

This blog post was originally published at Peridio’s website. It is reprinted here with the permission of Peridio. The gap between robotics prototypes and production deployments has always been an infrastructure problem disguised as a hardware problem. Teams build incredible computer vision models and robotic control systems on NVIDIA Jetson developer kits, only to hit

Robotics Builders Forum offers Hardware, Know-How and Networking to Developers

On February 25, 2026, from 8:30 am to 5:30 pm ET, Advantech, Qualcomm, and Arrow, in partnership with D3 Embedded, Edge Impulse, and the Pittsburgh Robotics Network, will present Robotics Builders Forum, an in-person conference for engineers and product teams. Qualcomm and D3 Embedded are members of the Edge AI and Vision Alliance, while Edge Impulse

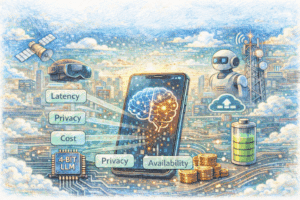

On-Device LLMs in 2026: What Changed, What Matters, What’s Next

In On-Device LLMs: State of the Union, 2026, Vikas Chandra and Raghuraman Krishnamoorthi explain why running LLMs on phones has moved from novelty to practical engineering, and why the biggest breakthroughs came not from faster chips but from rethinking how models are built, trained, compressed, and deployed. Why run LLMs locally? Four reasons: latency (cloud

Voyager SDK v1.5.3 is Live, and That Means Ultralytics YOLO26 Support

Voyager v1.5.3 dropped, and Ultralytics YOLO26 support is the big headline here. If you’ve been following Ultralytics’ releases, you’ll know Ultralytics YOLO26 is specifically engineered for edge devices like Axelera’s Metis hardware. Why Ultralytics YOLO26 matters for your projects: The architecture is designed end-to-end, which means no more NMS (non-maximum suppression) post-processing. That translates to simpler deployment and

Free Webinar Highlights Compelling Advantages of FPGAs

On March 17, 2026, at 9 am PT (noon ET), Efinix’s Mark Oliver, VP of Marketing and Business Development, will present the free one-hour webinar “Why your Next AI Accelerator Should Be an FPGA,” organized by the Edge AI and Vision Alliance. Here’s the description, from the event registration page: Edge AI system developers often

HCLTech Recognized as the ‘Innovation Award’ Winner of the 2025 Ericsson Supplier Awards

LONDON and NOIDA, India, Jan. 19, 2026 — HCLTech, a leading global technology company, today announced that it has been recognized by Ericsson as the ‘Innovation Award’ winner in the 2025 Ericsson Supplier Awards. The award has been given in recognition of HCLTech’s contribution to enhancing Ericsson’s operational efficiency through AI-driven capabilities and automation. HCLTech was selected

Top Python Libraries of 2025

This article was originally published at Tryolabs’ website. It is reprinted here with the permission of Tryolabs. Welcome to the 11th edition of our yearly roundup of the top Python libraries! If 2025 felt like the year of Large Language Models (LLMs) and agents, it’s because it truly was. The ecosystem expanded at incredible speed, with new models,

Quadric’s SDK Selected by TIER IV for AI Processing Evaluation and Optimization, Supporting Autoware Deployment in Next-Generation Autonomous Vehicles

Burlingame, CA, January 14, 2026 – Quadric today announced that TIER IV, Inc., of Japan has signed a license to use the Chimera AI processor SDK to evaluate and optimize future iterations of Autoware, open-source software for autonomous driving pioneered by TIER IV.

Deep Learning Vision Systems for Industrial Image Processing

This blog post was originally published at Basler’s website. It is reprinted here with the permission of Basler. Deep learning vision systems are often already a central component of industrial image processing. They enable precise error detection, intelligent quality control, and automated decisions – wherever conventional image processing methods reach their limits. We show how a

Free Webinar Examines Autonomous Imaging for Environmental Cleanup

On March 3, 2026, at 9 am PT (noon ET), The Ocean Cleanup’s Robin de Vries, ADIS (Autonomous Debris Imaging System) Lead, will present the free one-hour webinar “Cleaning the Oceans with Edge AI: The Ocean Cleanup’s Smart Camera Transformation,” organized by the Edge AI and Vision Alliance. Here’s the description, from the event registration