Processors for Embedded Vision

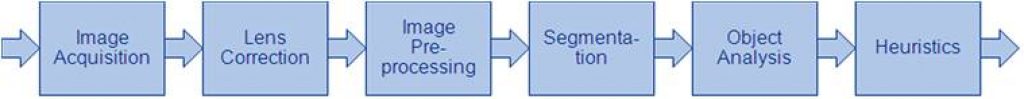

This technology category includes any device that executes vision algorithms or vision system control software. The following diagram shows a typical computer vision pipeline; processors are often optimized for the compute-intensive portions of the software workload.

The following examples represent distinctly different types of processor architectures for embedded vision, and each has advantages and trade-offs that depend on the workload. For this reason, many devices combine multiple processor types into a heterogeneous computing environment, often integrated into a single semiconductor component. In addition, a processor can be accelerated by dedicated hardware that improves performance on computer vision algorithms.

General-purpose CPUs

While computer vision algorithms can run on most general-purpose CPUs, desktop processors may not meet the design constraints of some systems. However, x86 processors and system boards can leverage the PC infrastructure for low-cost hardware and broadly supported software development tools. Several Alliance Member companies also offer devices that integrate a RISC CPU core. A general-purpose CPU is best suited for heuristics, complex decision-making, network access, user interface, storage management, and overall control. A general-purpose CPU may be paired with a vision-specialized device for better performance on pixel-level processing.

Graphics Processing Units

High-performance GPUs deliver massive amounts of parallel computing potential, and graphics processors can be used to accelerate the portions of the computer vision pipeline that perform parallel processing on pixel data. While general-purpose GPUs (GPGPUs) have primarily been used for high-performance computing (HPC), even mobile graphics processors and integrated graphics cores are gaining GPGPU capability, which lets them meet the power constraints of a wider range of vision applications. In designs that require 3D processing in addition to embedded vision, a GPU will already be part of the system and can be used to assist a general-purpose CPU with many computer vision algorithms. Many examples exist of x86-based embedded systems with discrete GPGPUs.
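To make "parallel processing on pixel data" concrete, here is a minimal Python sketch of a per-pixel threshold. Each output pixel depends only on its own input pixel, so rows can be processed independently and in any order. The thread pool is only a CPU stand-in for the many hardware threads a GPU would apply to the same operation; the function names `threshold_row` and `threshold_image` and the sample values are hypothetical and do not come from any GPU API.

```python
from concurrent.futures import ThreadPoolExecutor


def threshold_row(row, cutoff=128):
    """Binarize one row: each output pixel depends only on its own input pixel."""
    return [255 if pixel >= cutoff else 0 for pixel in row]


def threshold_image(image, cutoff=128, workers=4):
    """Apply threshold_row to every row of a grayscale image.

    Rows are independent, so they can be handed to workers in any order.
    The thread pool is an illustrative stand-in for GPU threads, not a
    model of real GPU performance.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in input order, even if rows finish out of order.
        return list(pool.map(lambda row: threshold_row(row, cutoff), image))


# Tiny 3x4 grayscale image (hypothetical values in the range 0-255).
image = [
    [10, 200, 130, 90],
    [128, 127, 255, 0],
    [60, 61, 190, 240],
]
binary = threshold_image(image)
```

Operations with this independence property (thresholding, color conversion, per-pixel arithmetic) are the easiest to offload; stages with data-dependent control flow tend to stay on the CPU.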

Digital Signal Processors

DSPs are very efficient for processing streaming data, since the bus and memory architecture are optimized to process high-speed data as it traverses the system. This architecture makes DSPs an excellent solution for processing image pixel data as it streams from a sensor source. Many DSPs for vision have been enhanced with coprocessors that are optimized for processing video inputs and accelerating computer vision algorithms. The specialized nature of DSPs makes these devices inefficient for processing general-purpose software workloads, so DSPs are usually paired with a RISC processor to create a heterogeneous computing environment that offers the best of both worlds.
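The streaming style described above can be sketched in a few lines of Python. This is an illustration only, assuming a 3-tap smoothing filter with hypothetical coefficients (1, 2, 1): pixels are consumed one at a time, only a short delay line is kept in memory, and each output is a sum of multiply-accumulate (MAC) operations, the primitive that DSP hardware is built around. The function name `stream_fir` and the sample data are assumptions for the example.

```python
from collections import deque


def stream_fir(pixels, taps=(1, 2, 1), shift=2):
    """Filter a pixel stream one sample at a time with a small FIR kernel.

    Only the last len(taps) samples are kept (a delay line), so the whole
    image never has to sit in memory.
    """
    window = deque(maxlen=len(taps))
    for pixel in pixels:
        window.append(pixel)
        if len(window) < len(taps):
            continue  # delay line not yet full
        acc = 0
        for coeff, sample in zip(taps, window):
            acc += coeff * sample  # one MAC per tap
        yield acc >> shift  # divide by 4 (the sum of the taps) using a shift


# Hypothetical sensor row arriving pixel by pixel.
sensor_row = iter([0, 0, 100, 100, 100, 0, 0])
smoothed = list(stream_fir(sensor_row))
```

On a real DSP the inner loop would typically map to hardware MAC units and the delay line to on-chip memory; the Python version only shows the data flow.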

Field Programmable Gate Arrays (FPGAs)

Instead of incurring the high cost and long lead times of a custom ASIC to accelerate computer vision systems, designers can use an FPGA as a reprogrammable solution for hardware acceleration. With millions of programmable gates, hundreds of I/O pins, and compute performance in the trillions of multiply-accumulates per second (tera-MACs), high-end FPGAs offer the potential for the highest performance in a vision system. Unlike a CPU, which has to time-slice or multi-thread tasks as they compete for compute resources, an FPGA can simultaneously accelerate multiple portions of a computer vision pipeline. Because the parallel nature of FPGAs offers such an advantage for accelerating computer vision, many of the algorithms are available as optimized libraries from semiconductor vendors. These computer vision libraries also include preconfigured interface blocks for connecting to other vision devices, such as IP cameras.
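To put the tera-MAC figure in perspective, the following back-of-the-envelope Python sketch estimates the multiply-accumulate rate for a single convolution stage. The workload (a 3x3 filter on 1080p grayscale video at 60 frames per second) and the 1 tera-MAC/s device budget are hypothetical numbers chosen for illustration, and the estimate ignores image-border handling and memory bandwidth.

```python
def conv_macs_per_second(width, height, kernel_size, fps, channels=1):
    """MACs/s needed to run one kernel_size x kernel_size convolution per frame."""
    macs_per_pixel = kernel_size * kernel_size * channels
    return width * height * macs_per_pixel * fps


# Hypothetical workload: 3x3 filter on 1080p grayscale video at 60 frames/s.
required = conv_macs_per_second(1920, 1080, kernel_size=3, fps=60)
giga_macs = required / 1e9  # about 1.12 GMAC/s

# Share of a hypothetical 1 tera-MAC/s device that this one stage would use.
utilization = required / 1e12
```

Under these assumptions a single filter stage uses roughly 0.1% of such a budget, which suggests how a device in this class could run many pipeline stages side by side rather than time-slicing them.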

Vision-Specific Processors and Cores

Application-specific standard products (ASSPs) are specialized, highly integrated chips tailored for specific applications or application sets. ASSPs may incorporate a CPU or use a separate CPU chip. By virtue of their specialization, ASSPs for vision processing typically deliver superior cost- and energy-efficiency compared with other types of processing solutions. Among other techniques, ASSPs deliver this efficiency through the use of specialized coprocessors and accelerators. Because ASSPs are by definition focused on a specific application, they are usually provided with extensive associated software. This same specialization, however, means that an ASSP designed for vision is typically not suitable for other applications. ASSPs’ unique architectures can also make them more difficult to program than other kinds of processors; some ASSPs are not user-programmable.

10xEngineers and Andes Enable High-Performance AI Compilation for RISC-V AX46MPV Cores

Hsinchu, Taiwan – February 26, 2026 – The collaboration between 10xEngineers, a services company specializing in AI compilers, and Andes Technology Corporation, a leading provider of high-performance, low-power 32- and 64-bit RISC-V processor IP and a Founding Premier Member of RISC-V International, delivers first-class AI workload compilation for Andes AX46MPV cores using 10xEngineers’ AI graph compiler, Baltoro. This collaboration enables

Edge AI and Vision on Renesas RA8P1 MCU

Take a look at the Renesas flagship MCU, RA8P1, featuring a 1 GHz Arm Cortex-M85 and Ethos-U55 NPU. Here we showcase a face detection demo using YOLO and a wheat disease detection demo, in partnership with Moschip.

Chips&Media Accelerates WAVE-N Ecosystem: Redefining the Future of Next-Generation Customized NPUs

February 24, 2026, Seoul – As a premier multimedia IP provider, Chips&Media is proud to announce the strategic expansion of the WAVE-N ecosystem, our next-generation customized NPU architecture. Key Objectives: (1) Strategic Partnerships: Cultivating alliances with leading AI-based imaging network providers; (2) IP Integration: Merging our silicon-proven WAVE-N IP with pre-evaluated, high-performance AI networks; (3) Customer-Centric Innovation: Providing clients with access to

CES 2026: Physical AI moves from concept to system architecture

This market analysis was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. The world’s largest consumer electronics conference demonstrated the technical synergies between automotive and robotics. At CES 2026, there was a clear cross-sector message: Physical AI is the common language across the automotive, robotaxi

How Lenovo is scaling Level 4 autonomous robotaxis on Arm

This blog post was originally published at Arm’s website. It is reprinted here with the permission of Arm. As L4 robotaxis shift from pilot to production, Arm offers the compute foundation needed to deliver end-to-end physical AI that scales across vehicle fleets. After years of autonomous driving pilots and controlled trials, the automotive industry is moving toward the production-scale deployment of Level 4 (L4) robotaxis. This marks

What Does a GPU Have to Do With Automotive Security?

This blog post was originally published at Imagination Technologies’ website. It is reprinted here with the permission of Imagination Technologies. The automotive industry is undergoing the most significant transformation since the advent of electronics in cars. Vehicles are becoming software-defined, connected, AI-driven, and continuously updated. This evolution brings extraordinary new capability – but it also brings

Ambarella to Showcase “The Ambarella Edge: From Agentic to Physical AI” at Embedded World 2026

Enabling developers to build, integrate, and deploy edge AI solutions at scale. SANTA CLARA, Calif. – Ambarella, Inc. (NASDAQ: AMBA), an edge AI semiconductor company, today announced that it will exhibit at Embedded World 2026, taking place March 10-12 in Nuremberg, Germany. At the show, Ambarella’s theme, “The Ambarella Edge: From Agentic to Physical AI,”

Right Sizing AI for Embedded Applications

This blog post was originally published at BrainChip’s website. It is reprinted here with the permission of BrainChip. We all know the AI revolution train is heading straight for the Embedded Station. Some of us are already in the driver’s seat, while others are waiting for the first movers to pave the way so we can

Robotics Builders Forum offers Hardware, Know-How and Networking to Developers

On February 25, 2026, from 8:30 am to 5:30 pm ET, Advantech, Qualcomm, and Arrow, in partnership with D3 Embedded, Edge Impulse, and the Pittsburgh Robotics Network, will present Robotics Builders Forum, an in-person conference for engineers and product teams. Qualcomm and D3 Embedded are members of the Edge AI and Vision Alliance, while Edge Impulse

NanoXplore and STMicroelectronics Deliver European FPGA for Space Missions

Key Takeaways: NanoXplore’s NG-ULTRA FPGA becomes the first product qualified to the new European ESCC 9030 standard for space applications. The product leverages a supply chain fully based in the European Union, from design to manufacturing and test, and is delivered by ST. Its advanced digital capability enables European customers to develop higher-performance, more competitive satellites

Voyager SDK v1.5.3 is Live, and That Means Ultralytics YOLO26 Support

Voyager v1.5.3 dropped, and Ultralytics YOLO26 support is the big headline here. If you’ve been following Ultralytics’ releases, you’ll know Ultralytics YOLO26 is specifically engineered for edge devices like Axelera’s Metis hardware. Why Ultralytics YOLO26 matters for your projects: The architecture is designed end-to-end, which means no more NMS (non-maximum suppression) post-processing. That translates to simpler deployment and

Free Webinar Highlights Compelling Advantages of FPGAs

On March 17, 2026, at 9 am PT (noon ET), Efinix’s Mark Oliver, VP of Marketing and Business Development, will present the free one-hour webinar “Why your Next AI Accelerator Should Be an FPGA,” organized by the Edge AI and Vision Alliance. Here’s the description, from the event registration page: Edge AI system developers often

Meet MIPS S8200: Real-Time, On-Device AI for the Physical World

This blog post was originally published at MIPS’s website. It is reprinted here with the permission of MIPS. Physical AI is the ability for machines to sense their environment, think locally, act safely, and communicate quickly without waiting on the cloud. In safety-critical scenarios like driver assistance or industrial robotics, milliseconds matter. That’s why MIPS’

The Next Platform Shift: Physical and Edge AI, Powered by Arm

This blog post was originally published at Arm’s website. It is reprinted here with the permission of Arm. The Arm ecosystem is taking AI beyond the cloud and into the real world. As CES 2026 opens, a common thread quickly emerges across the show floor: most of what people are seeing, touching, and experiencing is already built on Arm. Arm-based

STM32MP21x: It’s Never Been More Cost-effective or More Straightforward to Create Industrial Applications with Cameras

This blog post was originally published at STMicroelectronics’ website. It is reprinted here with the permission of STMicroelectronics. ST is launching today the STM32MP21x product line, the most affordable STM32MP2, comprising a single-core Cortex-A35 running at 1.5 GHz and a Cortex-M33 at 300 MHz. It thus completes the STM32MP2 series announced in 2023, which became our first 64-bit MPUs. After the

Upcoming Webinar on Last Mile Logistics

On January 28, 2026, at 11:00 am PST (2:00 pm EST), Alliance Member company STMicroelectronics will deliver the webinar “Transforming last mile logistics with STMicroelectronics and Point One.” From the event page: Precision navigation is rapidly becoming the standard for last mile delivery vehicles of all types. But what does it truly take to keep

Why Scalable High-Performance SoCs are the Future of Autonomous Vehicles

This blog post was originally published at Texas Instruments’ website. It is reprinted here with the permission of Texas Instruments. Summary: The automotive industry is ascending to higher levels of vehicle autonomy with the help of central computing platforms. SoCs like the TDA5 family offer safe, efficient AI performance through an integrated C7™ NPU and

Getting Started with Edge AI on NVIDIA Jetson: LLMs, VLMs, and Foundation Models for Robotics

This article was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Running advanced AI and computer vision workloads on small, power-efficient devices at the edge is a growing challenge. Robots, smart cameras, and autonomous machines need real-time intelligence to see, understand, and react without depending on the cloud. The NVIDIA

Microchip Expands PolarFire FPGA Smart Embedded Video Ecosystem with New SDI IP Cores and Quad CoaXPress Bridge Kit

Solution stacks deliver broadcast-quality video, SLVS-EC to CoaXPress bridging and ultra-low power operation for next-generation medical, industrial and robotic vision applications. CHANDLER, Ariz., January 19, 2025 – Microchip Technology (Nasdaq: MCHP) has expanded its PolarFire® FPGA smart embedded video ecosystem to support developers who need reliable, low-power, high-bandwidth video connectivity. The embedded vision solution stacks combine hardware evaluation kits, development

Quadric, Inference Engine for On-Device AI Chips, Raises $30M Series C as Design Wins Accelerate Across Edge

Tripling product revenues, comprehensive developer tools, and scalable inference IP for vision and LLM workloads position Quadric as the platform for on-device AI. BURLINGAME, Calif., Jan. 14, 2026 (PRNewswire) – Quadric®, the inference engine that powers on-device AI chips, today announced an oversubscribed $30 million Series C funding round, bringing total capital raised to $72