Processors for Embedded Vision

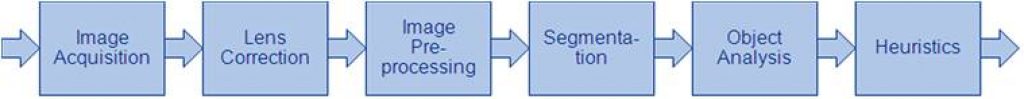

This technology category includes any device that executes vision algorithms or vision system control software. The following diagram shows a typical computer vision pipeline; processors are often optimized for the compute-intensive portions of the software workload.

The following examples represent distinctly different types of processor architectures for embedded vision, and each has advantages and trade-offs that depend on the workload. For this reason, many devices combine multiple processor types into a heterogeneous computing environment, often integrated into a single semiconductor component. In addition, a processor can be accelerated by dedicated hardware that improves performance on computer vision algorithms.

General-purpose CPUs

While computer vision algorithms can run on most general-purpose CPUs, desktop processors may not meet the design constraints of some systems. However, x86 processors and system boards can leverage the PC infrastructure for low-cost hardware and broadly supported software development tools. Several Alliance Member companies also offer devices that integrate a RISC CPU core. A general-purpose CPU is best suited for heuristics, complex decision-making, network access, user interface, storage management, and overall control. A general-purpose CPU may be paired with a vision-specialized device for better performance on pixel-level processing.

Graphics Processing Units

High-performance GPUs deliver massive amounts of parallel computing potential, and graphics processors can be used to accelerate the portions of the computer vision pipeline that perform parallel processing on pixel data. While general-purpose GPUs (GPGPUs) have primarily been used for high-performance computing (HPC), even mobile graphics processors and integrated graphics cores are gaining GPGPU capability, meeting the power constraints of a wider range of vision applications. In designs that require 3D processing in addition to embedded vision, a GPU will already be part of the system and can be used to assist a general-purpose CPU with many computer vision algorithms. Many examples exist of x86-based embedded systems with discrete GPGPUs.
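To illustrate why pixel-level work maps well onto parallel hardware, here is a minimal Python sketch of a grayscale conversion in which every output pixel depends only on its own input pixel. The function names, the integer luma weights, and the row-band split are assumptions chosen for illustration. Python threads stand in for GPU threads here to show that the bands are independent; they are not meant to demonstrate a speedup.

```python
from concurrent.futures import ThreadPoolExecutor

def to_gray(pixel):
    """Convert one (R, G, B) pixel to an 8-bit luma value (integer weights)."""
    r, g, b = pixel
    return (299 * r + 587 * g + 114 * b) // 1000

def gray_rows(rows):
    """Process a band of rows; no pixel depends on any other pixel."""
    return [[to_gray(p) for p in row] for row in rows]

def gray_parallel(image, workers=4):
    """Split the image into row bands and process the bands concurrently."""
    band = max(1, (len(image) + workers - 1) // workers)
    bands = [image[i:i + band] for i in range(0, len(image), band)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(gray_rows, bands)  # results come back in band order
    return [row for part in results for row in part]

# Hypothetical 8x6 test image with simple colour ramps.
image = [[(x * 16 % 256, y * 16 % 256, 128) for x in range(8)] for y in range(6)]

# The banded version produces exactly the same image as the sequential one.
assert gray_parallel(image) == gray_rows(image)
```

Because the result does not depend on how the image is divided, the same operation could in principle be assigned one pixel per thread on a GPU.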

Digital Signal Processors

DSPs are very efficient for processing streaming data, since the bus and memory architecture are optimized to process high-speed data as it traverses the system. This architecture makes DSPs an excellent solution for processing image pixel data as it streams from a sensor source. Many DSPs for vision have been enhanced with coprocessors that are optimized for processing video inputs and accelerating computer vision algorithms. The specialized nature of DSPs makes these devices inefficient for processing general-purpose software workloads, so DSPs are usually paired with a RISC processor to create a heterogeneous computing environment that offers the best of both worlds.
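The streaming style described above can be sketched in Python as a filter that consumes one sensor line at a time and keeps only a small line buffer rather than a whole frame. This is an illustrative model, not DSP code: `stream_box3x3` and `sensor_lines` are hypothetical names, and the 3x3 box filter is just one example of a neighbourhood operation.

```python
from collections import deque

def stream_box3x3(rows):
    """Yield 3x3 box-filtered rows while holding only three input rows.

    `rows` is any iterable of equal-length lists of integers, standing in
    for lines arriving from a sensor. Border pixels are skipped, so the
    output is two pixels narrower and two rows shorter than the input.
    """
    window = deque(maxlen=3)  # line buffer: the three most recent rows
    for row in rows:
        window.append(row)
        if len(window) == 3:
            top, mid, bot = window
            yield [
                (sum(top[x - 1:x + 2])
                 + sum(mid[x - 1:x + 2])
                 + sum(bot[x - 1:x + 2])) // 9
                for x in range(1, len(row) - 1)
            ]

def sensor_lines(width, height):
    """Hypothetical sensor: emits a horizontal ramp, one line at a time."""
    for _ in range(height):
        yield list(range(width))

# Output rows become available as soon as three input lines have arrived.
filtered = list(stream_box3x3(sensor_lines(6, 5)))
```

The memory footprint depends on the line width and the filter height, not on the frame height, which is the property that makes this pattern attractive for data arriving directly from a sensor.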

Field Programmable Gate Arrays (FPGAs)

Instead of incurring the high cost and long lead times of a custom ASIC to accelerate computer vision systems, designers can use an FPGA as a reprogrammable solution for hardware acceleration. With millions of programmable gates, hundreds of I/O pins, and compute performance in the trillions of multiply-accumulates/sec (tera-MACs), high-end FPGAs offer the potential for the highest performance in a vision system. Unlike a CPU, which has to time-slice or multi-thread tasks as they compete for compute resources, an FPGA has the advantage of being able to simultaneously accelerate multiple portions of a computer vision pipeline. Since the parallel nature of FPGAs is such an advantage for accelerating computer vision, many of the algorithms are available as optimized libraries from semiconductor vendors. These computer vision libraries also include preconfigured interface blocks for connecting to other vision devices, such as IP cameras.
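To put the tera-MAC figure in perspective, the following Python sketch estimates the multiply-accumulate rate of a single dense 2D convolution. The function name, the 1080p/30 fps workload, and the budget of exactly one tera-MAC per second are assumptions chosen for illustration, and the estimate is an idealized bound that ignores memory bandwidth and utilization.

```python
def conv_macs_per_second(width, height, fps, kernel_w, kernel_h, channels=1):
    """Multiply-accumulates per second for one dense 2D convolution pass.

    Each output pixel needs kernel_w * kernel_h MACs per channel, and
    there are width * height output pixels per frame.
    """
    return width * height * channels * kernel_w * kernel_h * fps

# Assumed workload: one 3x3 filter over single-channel 1920x1080 video at 30 fps.
macs = conv_macs_per_second(1920, 1080, 30, 3, 3)

# Assumed budget: 1 tera-MAC per second, fully utilized (an idealization).
budget = 10**12
filters_in_budget = budget // macs

print(f"{macs:,} MAC/s per filter; about {filters_in_budget} such filters fit the budget")
```

Under these assumptions one filter needs roughly 0.56 giga-MACs per second, which suggests why a device with tera-MAC throughput can run many pipeline stages at once.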

Vision-Specific Processors and Cores

Application-specific standard products (ASSPs) are specialized, highly integrated chips tailored for specific applications or application sets. ASSPs may incorporate a CPU, or use a separate CPU chip. By virtue of their specialization, ASSPs for vision processing typically deliver superior cost- and energy-efficiency compared with other types of processing solutions. Among other techniques, ASSPs deliver this efficiency through the use of specialized coprocessors and accelerators. And, because ASSPs are by definition focused on a specific application, they are usually provided with extensive associated software. This same specialization, however, means that an ASSP designed for vision is typically not suitable for other applications. ASSPs’ unique architectures can also make them more difficult to program than other kinds of processors; some ASSPs are not user-programmable.

BrainChip Demonstrates Human Behavior Detection at IFS Direct Connect 2024

Laguna Hills, Calif. – February 15, 2024 – BrainChip Holdings Ltd (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY), the world’s first commercial producer of ultra-low power, fully digital, event-based, neuromorphic AI, will host an exhibit demonstrating human behavior AI at IFS Direct Connect 2024 at the San Jose McEnery Convention Center in San Jose, California February 21.

Generative AI in 2024: The 6 Most Important Consumer Tech Trends

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Qualcomm executives reveal key trends in AI, consumer technology and more for the future. Not that long ago, the banana-peel-and-beer-fueled DeLorean in “Back to the Future” was presented as comedy. Yet today, 10% of cars are electric-powered.

Adapting Strategies: Industrial Machine Vision’s Response to Short-term Challenges

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. In 2023, the total machine vision market generated $6.9 billion in revenue. When will growth return? OUTLINE Industrial machine vision: a $7.8 billion market in 2029 compared to $6.9 billion in 2023.

Varjo Selects Inuitive’s NU4100 Vision Processor for Its Next Generation XR-4 Series Virtual and Mixed Reality Products

The XR-4 Series leverages the unparalleled capabilities of the NU4100 vision processor to achieve high-resolution, low-latency video streaming from multiple cameras, bridging the gap towards human-like visual perception. Ra’anana, Israel, February 05, 2024 – Varjo, the technology leader in industrial-grade VR/XR hardware and software, recently announced its next-generation XR-4 Series headsets. The XR-4 Series deploys

Generative AI, High Performance Computing to Fuel High Bandwidth Memory Market Growth

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. The market for high bandwidth memory (HBM) is poised for rapid growth over the next five years, announces Yole Group in its latest analyses. According to the analysts, it is led by

NVIDIA RTX 2000 Ada Generation GPU Brings Performance, Versatility for Next Era of AI-accelerated Design and Visualization

Latest RTX technology delivers cost-effective package for designers, developers, engineers, and embedded and edge applications. Generative AI is driving change across industries — and to take advantage of its benefits, businesses must select the right hardware to power their workflows. The new NVIDIA RTX 2000 Ada Generation GPU delivers the latest AI, graphics and compute

DRAM: An Industry in Full Flight

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. Generative AI and High Bandwidth Memory (HBM) fuel DRAM market growth. OUTLINE The HBM market has the potential to grow to US$14 billion in 2024. Yole Group expects HBM revenue growth to

Qualcomm at NeurIPS 2023: Cutting-edge Research in Generative and Embodied AI, Model Efficiency, and More

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Learn about Qualcomm Technologies’ accepted AI demos and papers. Neural Information Processing Systems (NeurIPS) is the marquee machine learning (ML) conference of the year. This year the paper acceptance rate was 26%, making it one of the

AMD Unveils Embedded+ Architecture; Combines Embedded Processors with Adaptive SoCs to Accelerate Time-to-Market for Edge AI Applications

AMD-validated solutions provide a simplified path to AI inferencing, sensor fusion, industrial networking, control, and visualization for ODM partners. SANTA CLARA, Calif., Feb. 06, 2024 (GLOBE NEWSWIRE) – AMD (NASDAQ: AMD) today announced the launch of AMD Embedded+, a new architectural solution that combines AMD Ryzen™ Embedded processors with Versal™ adaptive SoCs onto a single integrated

Smartphone Memory: Generational AI Upgrades Will Drive a Spike in DRAM Demand

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. AI requirements to hasten small NAND phase-out. Artificial intelligence (AI) has been incorporated into smartphone features for several years, but the implementation of adapted Large Language Models (LLM) in high-end devices could

MIPS Launches New Strategic Focus and ReBrand: The Freedom to Innovate Compute

Today is an exciting day at MIPS as we launch a rebrand and strategic focus on giving our customers the Freedom to Innovate Compute. For years, when customers have needed more processing power and compute density, we’ve always gone back to the evolution of process nodes. Every few years, a new process node comes along

Free Webinar Explores How to Accelerate Edge AI Development With Microservices For NVIDIA Jetson

On March 5, 2024 at 8 am PT (11 am ET), NVIDIA senior product manager Chintan Shah will present the free one-hour webinar “Accelerate Edge AI Development With Microservices For NVIDIA Jetson,” organized by the Edge AI and Vision Alliance. Here’s the description, from the event registration page: Building vision AI applications for the edge

The Generative AI Economy: Worth Up to $7.9T

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. The potential for generative AI is unlimited. Generative artificial intelligence (AI) is so powerful, it’s impacting aspects of the human condition, driving innovation across industries, creating new consumer and enterprise applications, and stimulating revenue growth. And as

Robotic Vehicles: Steering Towards a $1B Market Value

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. What strategies will Baidu-Apollo, Cruise, Waymo, AutoX, and others adopt in response to this revolution? OUTLINE The robotic vehicle processor market is expected to reach US$1 billion in 2034, with an estimated

The Age of Artificial Intelligence: AI Chips to 2034

Artificial Intelligence is transforming the world as we know it; from the success of DeepMind over Go world champion Lee Sedol in 2016 to the robust predictive abilities of OpenAI’s ChatGPT, the complexity of AI training algorithms is growing at a startlingly fast pace, where the amount of compute necessary to run newly-developed training algorithms

The State of AI and Where It’s Heading in 2024

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. An interview with Qualcomm Technologies’ SVP, Durga Malladi, about AI benefits, challenges, use cases and regulations. It’s an exciting and dynamic time as the adoption of on-device generative artificial intelligence (AI) grows, driving the democratization of AI

Announcing NVIDIA Metropolis Microservices for Jetson for Rapid Edge AI Development

Building vision AI applications for the edge often comes with notoriously long and costly development cycles. At the same time, quickly developing edge AI applications that are cloud-native, flexible, and secure has never been more important. Now, a powerful yet simple API-driven edge AI development workflow is available with the new NVIDIA Metropolis microservices. NVIDIA

Build Vision AI Applications at the Edge with NVIDIA Metropolis Microservices and APIs

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. NVIDIA Metropolis microservices provide powerful, customizable, cloud-native APIs and microservices to develop vision AI applications and solutions. The framework now includes NVIDIA Jetson, enabling developers to quickly build and productize performant and mature vision AI applications at

BrainChip and MYWAI Partner to Deliver Next-Generation Edge AI Solutions

Laguna Hills, Calif. – January 16, 2023 – BrainChip Holdings Ltd (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY), the world’s first commercial producer of ultra-low power, fully digital, event-based, neuromorphic AI IP, and MYWAI, the leading AIoT solution provider for Edge intelligence in the European Union (EU), today announced a strategic partnership to deliver next-generation Edge AI

Advancing Perception Across Modalities with State-of-the-art AI

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Qualcomm AI Research papers at ICCV, Interspeech and more. The last few months have been exciting for the Qualcomm AI Research team, with the opportunity to present our latest papers and artificial intelligence (AI) demos at conferences