Processors for Embedded Vision


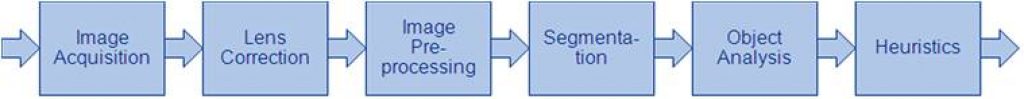

This technology category includes any device that executes vision algorithms or vision system control software. The following diagram shows a typical computer vision pipeline; processors are often optimized for the compute-intensive portions of the software workload.

The following examples represent distinctly different types of processor architectures for embedded vision, and each has advantages and trade-offs that depend on the workload. For this reason, many devices combine multiple processor types into a heterogeneous computing environment, often integrated into a single semiconductor component. In addition, a processor can be accelerated by dedicated hardware that improves performance on computer vision algorithms.

General-purpose CPUs

While computer vision algorithms can run on most general-purpose CPUs, desktop processors may not meet the design constraints of some systems. However, x86 processors and system boards can leverage the PC infrastructure for low-cost hardware and broadly supported software development tools. Several Alliance Member companies also offer devices that integrate a RISC CPU core. A general-purpose CPU is best suited for heuristics, complex decision-making, network access, user interface, storage management, and overall control. A general-purpose CPU may be paired with a vision-specialized device for better performance on pixel-level processing.

Graphics Processing Units

High-performance GPUs deliver massive amounts of parallel computing potential, and graphics processors can be used to accelerate the portions of the computer vision pipeline that perform parallel processing on pixel data. While general-purpose GPUs (GPGPUs) have primarily been used for high-performance computing (HPC), even mobile graphics processors and integrated graphics cores are gaining GPGPU capability, which lets them meet the power constraints of a wider range of vision applications. In designs that require 3D processing in addition to embedded vision, a GPU will already be part of the system and can be used to assist a general-purpose CPU with many computer vision algorithms. Many examples exist of x86-based embedded systems with discrete GPGPUs.
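To make "parallel processing on pixel data" concrete, here is a minimal Python sketch of a per-pixel threshold. Each output pixel depends only on the matching input pixel, which is the property that lets a GPU assign one thread per pixel. The function names, the `Image` type, and the cutoff value are illustrative assumptions. The code runs sequentially on a CPU, so it shows only the data independence, not actual GPU execution.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of 0-255 values (illustrative type)

def threshold_pixel(value: int, cutoff: int = 128) -> int:
    """Per-pixel kernel: the output depends only on this one input pixel."""
    return 255 if value >= cutoff else 0

def threshold_image(image: Image, cutoff: int = 128) -> Image:
    """Apply the kernel to every pixel.

    No iteration reads another iteration's result, so the pixels could be
    processed in any order, or all at once on parallel hardware.
    """
    return [[threshold_pixel(v, cutoff) for v in row] for row in image]

# Hypothetical 2x3 test frame.
frame = [
    [10, 200, 128],
    [127, 255, 0],
]
binary = threshold_image(frame)
```

Operations with this shape (thresholding, color conversion, per-pixel arithmetic) are usually the easiest candidates to offload, because they need no communication between pixels.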

Digital Signal Processors

DSPs are very efficient for processing streaming data, since the bus and memory architecture are optimized to process high-speed data as it traverses the system. This architecture makes DSPs an excellent solution for processing image pixel data as it streams from a sensor source. Many DSPs for vision have been enhanced with coprocessors that are optimized for processing video inputs and accelerating computer vision algorithms. The specialized nature of DSPs makes these devices inefficient for processing general-purpose software workloads, so DSPs are usually paired with a RISC processor to create a heterogeneous computing environment that offers the best of both worlds.
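The streaming style of processing described above can be sketched in Python as a filter that consumes pixels one at a time and keeps only a small window in memory. This is a simplified illustration, not DSP code: the function name, the three-tap box filter, and the sample values are assumptions chosen for the example.

```python
from collections import deque
from typing import Iterable, Iterator

def stream_box_filter(pixels: Iterable[int], taps: int = 3) -> Iterator[int]:
    """Yield the integer mean of the last `taps` pixels as each pixel arrives.

    Only `taps` samples are held at any time, so memory use does not grow
    with the length of the stream.
    """
    window = deque(maxlen=taps)  # oldest sample is dropped automatically
    total = 0
    for p in pixels:
        if len(window) == taps:
            total -= window[0]  # subtract the sample about to fall out
        window.append(p)
        total += p
        if len(window) == taps:
            yield total // taps

scanline = [10, 20, 30, 40, 50]  # hypothetical pixel values from one sensor row
smoothed = list(stream_box_filter(scanline))
```

Because the filter never needs the whole frame, it can start producing output before the sensor has finished sending the row, which is the behavior a streaming-optimized memory architecture is built to support.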

Field Programmable Gate Arrays (FPGAs)

Instead of incurring the high cost and long lead times of a custom ASIC to accelerate computer vision systems, designers can implement an FPGA to offer a reprogrammable solution for hardware acceleration. With millions of programmable gates, hundreds of I/O pins, and compute performance in the trillions of multiply-accumulates/sec (tera-MACs), high-end FPGAs offer the potential for the highest performance in a vision system. Unlike a CPU, which has to time-slice or multi-thread tasks as they compete for compute resources, an FPGA has the advantage of being able to simultaneously accelerate multiple portions of a computer vision pipeline. Since the parallel nature of FPGAs offers so much advantage for accelerating computer vision, many of the algorithms are available as optimized libraries from semiconductor vendors. These computer vision libraries also include preconfigured interface blocks for connecting to other vision devices, such as IP cameras.
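To put the tera-MAC figure in perspective, the following Python sketch estimates the multiply-accumulate rate of a single convolution stage. The resolution, kernel size, and frame rate are hypothetical values picked for illustration, and the function assumes a single-channel image with one output per input pixel.

```python
def conv_macs_per_second(width: int, height: int, kernel: int, fps: int) -> int:
    """MACs per second for one kernel x kernel convolution over a single-channel frame.

    Assumes one output per input pixel (stride 1, padded borders).
    """
    macs_per_frame = width * height * kernel * kernel
    return macs_per_frame * fps

# Hypothetical workload: 3x3 filter on 1920x1080 grayscale video at 60 frames/s.
rate = conv_macs_per_second(1920, 1080, 3, 60)
print(f"{rate:,} MAC/s = {rate / 1e12:.6f} tera-MAC/s")
```

Under these assumptions one small filter needs roughly 1.1 billion MACs per second, a small fraction of a tera-MAC budget. The headroom becomes relevant when many such stages, larger kernels, or multi-channel data have to run at the same time.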

Vision-Specific Processors and Cores

Application-specific standard products (ASSPs) are specialized, highly integrated chips tailored for specific applications or application sets. ASSPs may incorporate a CPU, or use a separate CPU chip. By virtue of their specialization, ASSPs for vision processing typically deliver superior cost- and energy-efficiency compared with other types of processing solutions. Among other techniques, ASSPs deliver this efficiency through the use of specialized coprocessors and accelerators. And, because ASSPs are by definition focused on a specific application, they are usually provided with extensive associated software. This same specialization, however, means that an ASSP designed for vision is typically not suitable for other applications. ASSPs’ unique architectures can also make them more difficult to program than other kinds of processors; some ASSPs are not user-programmable.

Unleashing the Potential for Assisted and Automated Driving Experiences Through Scalability

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Working within an ecosystem of innovators and suppliers is paramount to addressing the challenge of building a scalable ADAS solution. While the recent sentiment around fully autonomous vehicles is not overly positive, more and more vehicles on

The Building Blocks of AI: Decoding the Role and Significance of Foundation Models

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. These neural networks, trained on large volumes of data, power the applications driving the generative AI revolution. Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more accessible,

NIST Advanced Packaging Summit

Semiconductor packaging can be incredibly boring. That said, advanced packaging is a key to every high-complexity semiconductor – both on and off the planet. Nvidia, AMD, and Intel have all utilized chiplets and advanced packages for their latest AI/ML accelerators and processors. Without 2.5D and 3D design and assembly techniques, these and many other essential

Turbocharging AI During CES

This blog post was originally published at Ambarella’s website. It is reprinted here with the permission of Ambarella. Innovation was the focus of our exhibition during this year’s CES, with the theme of Turbocharged AI. Over the course of four days, we held more than 300 customer meetings that showcased nearly 40 demonstrations and customer

DEEPX Expands First-generation AI Chips into Intelligent Security and Video Analytics Markets

Award-winning on-device AI chipmaker will pursue key industry partnerships to bolster this expansion; following a strong showing at ISC West, it will continue this momentum at Secutech Taipei, Embedded Vision Summit, and COMPUTEX 2024. TAIPEI and SEOUL, South Korea, April 23, 2024 /PRNewswire/ — DEEPX, an on-device AI semiconductor company, is announcing plans to expand

Oriented FAST and Rotated BRIEF (ORB) Feature Detection Speeds Up Visual SLAM

This blog post was originally published at Ceva’s website. It is reprinted here with the permission of Ceva. In the realm of smart edge devices, signal processing and AI inferencing are intertwined. Sensing can require intense computation to filter out the most significant data for inferencing. Algorithms for simultaneous localization and mapping (SLAM), a type

Democratizing AI: Top 5 Insights from Axios, Meta, Black Magic Design, and Our Panel of Industry Titans

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. In a panel discussion at our annual Snapdragon Summit in the breathtaking setting of Maui, Hawaii, we had the privilege of engaging in a dynamic conversation with four esteemed experts about the democratization of artificial intelligence (AI).

AI Decoded: Demystifying Large Language Models, the Brains Behind Chatbots

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Explore what LLMs are, why they matter and how to use them. Editor’s note: This post is part of our AI Decoded series, which aims to demystify AI by making the technology more accessible, while showcasing new

Intel Builds World’s Largest Neuromorphic System to Enable More Sustainable AI

Hala Point, the industry’s first 1.15 billion neuron neuromorphic system, builds a path toward more efficient and scalable AI. What’s New: Today, Intel announced that it has built the world’s largest neuromorphic system. Code-named Hala Point, this large-scale neuromorphic system, initially deployed at Sandia National Laboratories, utilizes Intel’s Loihi 2 processor, aims at supporting research for future brain-inspired artificial

Arm’s Mission to Help Tackle AI’s Insatiable Energy Needs

This blog post was originally published at Arm’s website. It is reprinted here with the permission of Arm. The challenge (and opportunity) of powering workloads in the AI datacenter. AI has the potential to exceed all the transformative innovations created in the past century. The benefits to society around health care, productivity, education and many

How Embedded Vision is Helping Build Smart Buses of the Future

This blog post was originally published at TechNexion’s website. It is reprinted here with the permission of TechNexion. The transportation sector has always been at the forefront of technological advancements, and the emergence of the “Smart Bus” system is a testament to this evolution. This innovative system is not just about enhancing the passenger experience

Edge AI and Vision Alliance Conversation with GenAI Nerds on Generative AI At the Edge

Kerry Shih of GenAI Nerds interviews Jeff Bier, Founder of the Edge AI and Vision Alliance, and Phil Lapsley, the Alliance’s Vice President of Business Development, about the opportunities and trends for generative AI at the edge. Shih, Bier and Lapsley discuss topics such as: Where we are in the generative AI hype cycle What

AMD Expands Commercial AI PC Portfolio to Deliver Leadership Performance Across Professional Mobile and Desktop Systems

AMD Ryzen™ PRO 8040 Series and AMD Ryzen™ PRO 8000 Series provide businesses with the most advanced x86 processors for AI PCs. SANTA CLARA, Calif., April 16, 2024 (GLOBE NEWSWIRE) - Today, AMD (NASDAQ: AMD) announced new products that will expand its commercial mobile and desktop AI PC portfolio, delivering exceptional productivity and premium AI

Microchip Technology Acquires Neuronix AI Labs

Innovative technology enhances AI-enabled intelligent edge solutions and increases neural networking capabilities CHANDLER, Ariz., April 15, 2024 — Microchip Technology (Nasdaq: MCHP) has acquired Neuronix AI Labs to expand its capabilities for power-efficient, AI-enabled edge solutions deployed on field programmable gate arrays (FPGAs). Neuronix AI Labs provides neural network sparsity optimization technology that enables a

Partitioning Strategies to Optimize AI Inference for Multi-core Platforms

This blog post was originally published at Ceva’s website. It is reprinted here with the permission of Ceva. Not so long ago, AI inference at the edge was a novelty easily supported by a single NPU IP accelerator embedded in the edge device. Expectations have accelerated rapidly since then. Now we want embedded AI inference

Exploring the Impact of Generative AI: Identifying the Winners

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. Datacenter GPU and AI ASIC revenue could reach $156 billion by 2025 and $233 billion by 2029. OUTLINE The massive growth that the data center GPU and AI ASIC market experienced in

AI Chips and Chat GPT: Exploring AI and Robotics

AI chips can empower the intelligence of robotics, with future potential for smarter and more independent cars and robots. Alongside the uses of Chat GPT and chatting with robots at home, the potential for this technology to enhance working environments and reinvent socializing is promising. Cars that can judge the difference between people and signposts

Efficiently Packing Neural Network AI Model for the Edge

This blog post was originally published at Ceva’s website. It is reprinted here with the permission of Ceva. Packing applications into constrained on-chip memory is a familiar problem in embedded design, and is now equally important in compacting neural network AI models into a constrained storage. In some ways this problem is even more challenging

AI Decoded From GTC: The Latest Developer Tools and Apps Accelerating AI on PC and Workstation

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Next Chat with RTX features showcased, TensorRT-LLM ecosystem grows, AI Workbench general availability, and NVIDIA NIM microservices launched. Editor’s note: This post is part of the AI Decoded series, which demystifies AI by making the technology more

The Rise of Generative AI: A Timeline of Breakthrough Innovations

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Explore the most pivotal advancements that shaped the landscape of generative AI. From Alan Turing’s pioneering work to the cutting-edge transformers of the present, the field of generative artificial intelligence (AI) has witnessed remarkable breakthroughs, and