This blog post was originally published at Ceva’s website. It is reprinted here with the permission of Ceva.

In the realm of smart edge devices, signal processing and AI inferencing are intertwined. Sensing can require intense computation to filter out the most significant data for inferencing. Algorithms for simultaneous localization and mapping (SLAM), a type of navigation, entail complex image processing at the sensing stage to detect features. Executing in real time, these algorithms must quickly perform feature detection. Introduced in 2011, Oriented Fast and Rotated Brief is one of the faster feature-detection algorithms. Nonetheless, cost and power constraints mean the underlying hardware must also be efficient, and software must be optimized and, for the sake of developer productivity, easy to use.

SLAM assesses where a moving agent is, while also mapping the environment. Self-driving cars employ the technique and so can other devices, such as autonomous mobile robots (AMR), which are warehouse vehicles that move materials. The AMR is a successor to the autonomous guided vehicle (AGV), an older technology that followed a fixed path outlined, for example, by a painted stripe. Like a self-driving car, an AMR can employ cameras and other sensors that feed a SLAM system.

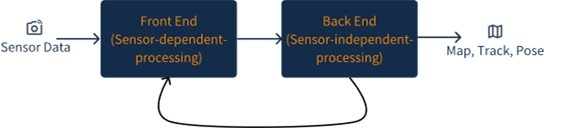

Figure 1. SLAM pipeline

Image processing in the front end identifies features or interest points in frames captured by the camera. Unlike algorithmic or neural-network face or object detection techniques, which try to find things belonging to a defined set of items, visual SLAM has no such predefined set. Moreover, SLAM tracks objects from frame to frame. This is complicated because the agent—and potentially also the objects—are moving, and the objects’ appearance varies in each frame, becoming occluded, changing illumination, or appearing to scale, translate, or rotate. At the same time, front-end processing must keep up with the frame rate.

Feature Detection is the Key to Visual SLAM

The three-step challenge at the core of visual SLAM’s front end is to find correspondences between two frames. The first step is feature detection. Also called a key or interest point, a feature is the part of an image that’s distinctive enough to identify a specific object among many alternatives. Approaches to feature detection include identifying maxima/minima image locations using a difference of Gaussians, employing a corner detector, and applying region detectors.

To be effective, feature detection must find the same interest points in different images. Likewise, the second step of deriving a feature vector representing related features must produce a distinctive descriptor that’s robust to frame-to-frame appearance changes. The third step is to match descriptors between images by selecting the vector in the second image that has the least mathematical difference from each one in the first.

The seminal Scale Invariant Feature Transform (SIFT) method essentially merges feature detection and descriptor-vector creation into a single SIFT-key derivation step. To create the keys, SIFT employs the difference of Gaussians, convolving an image multiple times, which entails floating-point multiplication and addition operations for each pixel. Further operations compute gradients and rotation to provide robustness to illumination and orientation changes. To achieve scale invariance, SIFT copies and resizes an image a few times to build a pyramid, repeating image processing on each pyramid level.

For the matching step, SIFT compares each key to hundreds of neighbors in the second image, clustering comparisons with similar location, orientation, and scale predictions. SIFT then processes each cluster containing at least three entries, using linear algebra—more floating-point arithmetic—to verify keys in a cluster indeed match despite apparent rotation, scaling, or stretching of objects in the second image.

The Speeded Up Robust Features (SURF) method attempts to be faster than SIFT but equally repeatable and robust. For feature detection, it replaces the difference-of-Gaussians technique with a simpler box filter. This approach also obviates building an image pyramid, applying different-size filters instead of copying and resizing the image, thereby reducing computational complexity. To produce a feature vector, SURF divides the image into regions centered on each interest point and computes four sums of differences for each region, clustering those with similar values for the sums. SURF further reduces complexity in the matching step by addressing rotation by examining pixels along a radius from the initial interest point.

Oriented Fast and Rotated Brief Improves Feature Detection

Overall, SURF is less computationally demanding than SIFT. An even more efficient method is Oriented FAST and Rotated BRIEF (ORB). Its developer reports that it’s 14 times faster than SURF, which in turn is 24 times faster than SIFT; the newer algorithm also performs about as well, or in some cases, much better. For feature detection, ORB applies a corner-detection algorithm, FAST (Features from Accelerated Segment Test).

FAST is simple and quick, comparing a pixel to those on a Bresenham circle around it, first checking the pixel to see if it is brighter or darker from N consecutive pixels on the circle. If it is, it could be a corner. To refine the selection of key points, the algorithm employs non-maximal suppression (NMS) to identify and retain the most distinctive key points. Executing the intensity comparisons requires far fewer operations than computing the sum of Gaussians or applying a box filter. The second step of NMS needs to process only the feature list, and not all pixels in the image, which reduces computation as well. To achieve scale invariance, ORB builds an image pyramid and applies FAST to each level. To account for orientation, ORB adds the intensity of pixels above and below the pixel and that of those to the left and right and compares the two values.

ORB uses Rotated BRIEF to compute feature descriptors. In ORB, BRIEF descriptors are vectors of 256 bits. Each bit represents the result of a comparison between the average intensity of two 5×5-pixel windows in the same 31×31-pixel patch. There are 676 of these windows in a patch and therefore more than 200,000 possible pairwise comparisons. To maximize a descriptor’s discriminative power, ORB selects from among the 200,000 the 256 comparison results that are furthest from the average and the least correlated with each other. As with FAST, ORB modifies the original BRIEF approach to be orientation-invariant. Simplifying this task, having determined the orientation of the features belonging to each descriptor, ORB uses those values to normalize a descriptor’s orientation.

For matching, ORB employs a simple hashing function based on a subset of descriptor bits. The algorithm assigns each descriptor in an image to several hash buckets. To match a descriptor from a new frame, ORB computes which buckets it would map to and compares it to the descriptors contained in each bucket.

ORB Feature Detection Runs on Ceva

Although it sounds complex, ORB is fast because it entails integer and logical operations in place of floating-point ones. Many operations can be vectorized, such as the comparison of 5×5-pixel windows and 256-bit descriptors. A developer seeking to incorporate ORB in software running on a PC or similar system will find the algorithm included in OpenCV and available in Python.

A production system such as an AMR or other vehicle, however, will use a custom chip to reduce power and cost compared with a PC. ORB implemented in C compiled to run on the chip’s CPU cores will be functional but impractical, either necessitating brute hardware capabilities similar to the PC or failing to meet real-time requirements. An alternative approach is to employ a vector DSP. Designed specifically to move data and run computationally intense algorithms like ORB, a vector DSP is much more efficient. Achieving this advantage requires tuning the software while being careful to maintain the algorithm’s correctness.

Fortunately, Ceva’s Vision AI DSP and SLAM SDK includes an ORB library for its vector DSPs. Presenting an API similar to the OpenCV implementation, it’s easy to use. Moreover, it’s fast. We built the library in our Ceva Vec-C language, employing standard C code and intrinsics for performance and maintainability. Running on a Ceva SensPro DSP integrated in an SoC, an ORB-based SLAM implementation runs 30× faster than executing solely on the CPU integrated in the same chip.

We demonstrated a visual SLAM solution from Van Gogh Imaging enhanced with our ORB library and running on SensPro at CES 2024. For more information on how to employ our ORB library, the Ceva SLAM SDK, or the SensPro line of DSPs in your computer vision project, please contact us.

Yael Newman

Team Leader, Vision Business Unit, Ceva