This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

Working within an ecosystem of innovators and suppliers is paramount to addressing the challenge of building a scalable ADAS solution

Although recent sentiment toward fully autonomous vehicles has not been especially positive, a growing number of vehicles on the road now include assisted and automated driving features that consumers value. Much work remains before human drivers can truly "tune out" from the driving task, and shifting public perception will be essential as the industry introduces new assisted and automated driving features.

The National Highway Traffic Safety Administration (NHTSA) continues to educate consumers on the benefits of two kinds of technology: driver assistance technologies, which warn drivers or may intervene in a potentially hazardous scenario, and automated safety technologies, in which the system is designed to take certain actions, such as automatic emergency braking. NHTSA has also established the 5-Star Safety Ratings program to help consumers understand what to expect from a safety perspective when purchasing a vehicle.

The capability is already in place

New features are continually being evaluated for inclusion in vehicles in the hope of reducing the 3,000 road fatalities that occur in the U.S. each month [1]. The most basic Advanced Driver Assistance System (ADAS) functionality, found in approximately 90% of new vehicles on the road today, assists with steering and/or speed control [2]. These ADAS functions often support automatic emergency braking and lane departure warning features, which have been shown to reduce crashes by more than 50% and 23%, respectively [3].

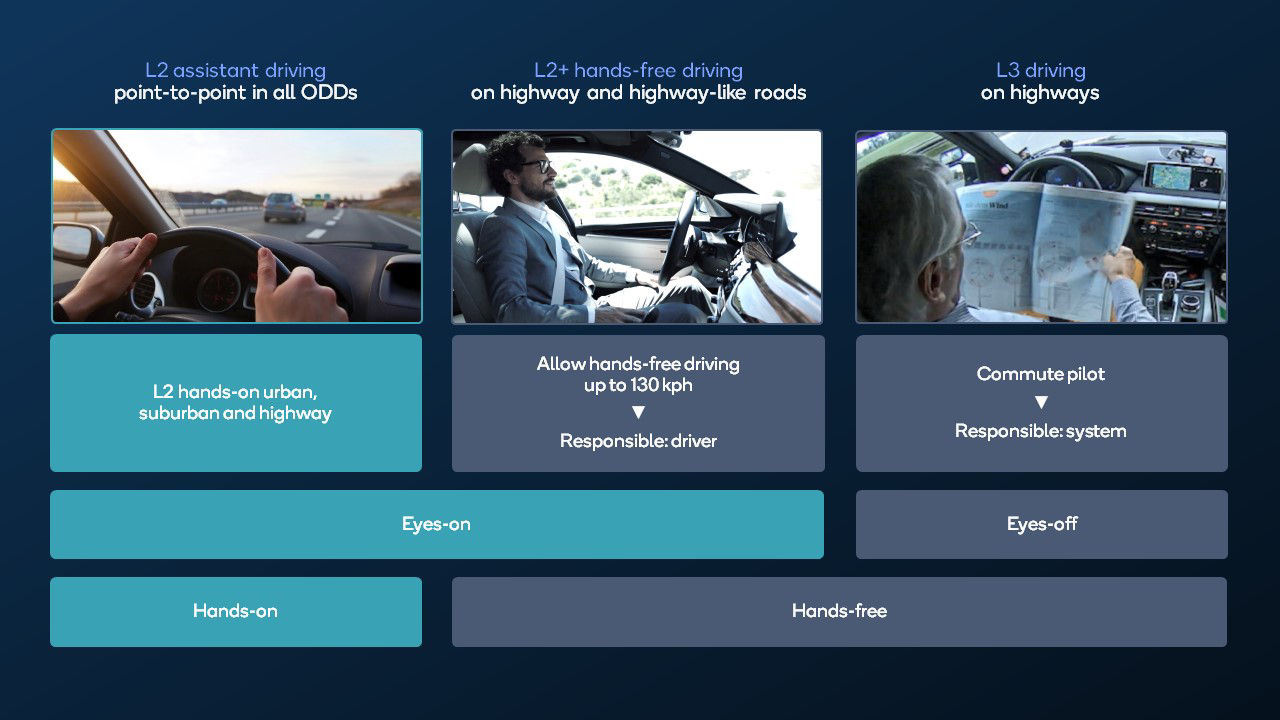

The effectiveness of these initial automated driving features has given automakers a solid foundation to build on as they develop more advanced levels of driving automation: L2, L2+ and L3. Beyond a growing set of features that consumers already use and a roadmap toward full autonomy, automakers also need the right system architecture and access to the cloud to scale ADAS.

Architecture to scale the experience

To meet growing demand for technology at every tier and over the vehicle's life span, automakers will need a system architecture flexible enough to scale from meeting basic safety requirements to controlling the vehicle. Today, most ADAS architectures are made up of smart sensors with isolated processing and decision-making, but we are starting to see them evolve toward a more centralized compute approach that can process data from multiple sensors throughout the vehicle, which makes the system easier to scale. The industry is moving toward equipping all vehicles for Level 2 automation as a baseline system architecture. Building for Level 2 gives automakers the flexibility to add or remove system components to create a variety of automated driving experiences across different trim levels.

Because automated driving (AD) technology requires a great deal of verification and validation, centralized compute resources that can reuse software and toolchains will be essential to scaling automated driving features cost-effectively. For example, today's L2 systems may need less than 10 TOPS (trillion operations per second) to detect and classify objects on the road, but extending a feature's operational design domain would require more processing power for new software stack components. A new feature might, for instance, require the camera to detect more in its field of view, driving additional data into the system. As a result, the platform's compute footprint and I/O must be able to scale.
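To make the scaling argument concrete, here is a back-of-the-envelope sketch that estimates sustained perception compute as inferences per second multiplied by operations per inference. The function name `required_tops` and every input value are hypothetical placeholders, not figures from Qualcomm or any real system. The model also ignores hardware utilization and the rest of the software stack, so a real platform's rated peak TOPS would need to sit well above this estimate.

```python
"""Back-of-the-envelope estimate of perception compute demand.

Hypothetical model: sustained compute ~= inferences per second
multiplied by operations per inference. All inputs are illustrative
placeholders, not measured values from any real ADAS system.
"""


def required_tops(num_cameras: int, frames_per_second: float,
                  gigaops_per_frame: float) -> float:
    """Return sustained compute in TOPS (10**12 operations per second).

    gigaops_per_frame is the (hypothetical) cost of running the
    perception network once on one camera frame, in 10**9 operations.
    """
    ops_per_second = num_cameras * frames_per_second * gigaops_per_frame * 1e9
    return ops_per_second / 1e12


# Hypothetical baseline feature: one front camera, modest network.
baseline = required_tops(num_cameras=1, frames_per_second=30, gigaops_per_frame=100)

# Hypothetical extended feature: more cameras and a heavier network.
extended = required_tops(num_cameras=5, frames_per_second=30, gigaops_per_frame=200)

print(f"baseline: {baseline:.1f} TOPS")
print(f"extended: {extended:.1f} TOPS ({extended / baseline:.0f}x baseline)")
```

With these made-up inputs the baseline stays under 10 TOPS while the extended configuration needs ten times as much. Because demand grows multiplicatively with sensor count, frame rate and network size, even a modest feature extension can outgrow a fixed compute budget.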

Ongoing development in the cloud

Integrating a cloud-based environment that supports data collection and reprocessing, development and testing, simulation, and machine learning is essential to harnessing value from the massive amounts of data that vehicles generate. Today, the number of simulations that can run is limited by the hardware available in local server farms; moving reprocessing to the cloud eases that constraint and can accelerate the development and delivery of new automated driving functions.

Having a cloud-based workbench allows automakers to build new features, analyze their performance, and train machine learning models to improve the vehicle’s functionality throughout its lifetime.

Using data from all of the vehicle's sensors, automakers can model the vehicle and its environment as a digital twin in the cloud. This lets them extend the areas in which ADAS features can operate, helping them scale. Data sent to the cloud can be processed and analyzed in parallel, further automating tasks such as establishing ground truth and annotating objects on the road for classification. Contextual data from the vehicle's surroundings can also be used to train artificial intelligence models, enabling the vehicle's sensors to better detect objects in its field of view.
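Parallel reprocessing works because recorded drives can be split into independent segments that are labeled separately. The minimal sketch below assumes that split. `Segment`, `LabeledSegment`, `auto_label` and `reprocess` are hypothetical names, and `auto_label` merely counts object classes as a stand-in for a real auto-labeling model. A local thread pool from Python's standard `concurrent.futures` module plays the role of the cloud workers a production pipeline would dispatch jobs to.

```python
"""Sketch: reprocessing recorded drive segments in parallel.

Hypothetical assumptions: each drive is split into independent
segments, and auto_label stands in for a model that proposes
ground-truth labels. A thread pool stands in for cloud workers.
"""
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    drive_id: str
    index: int
    detections: tuple[str, ...]  # placeholder for raw sensor data


@dataclass(frozen=True)
class LabeledSegment:
    drive_id: str
    index: int
    labels: dict[str, int]  # object class -> count in this segment


def auto_label(segment: Segment) -> LabeledSegment:
    """Hypothetical auto-labeler: counts objects per class in a segment."""
    counts: dict[str, int] = {}
    for obj in segment.detections:
        counts[obj] = counts.get(obj, 0) + 1
    return LabeledSegment(segment.drive_id, segment.index, counts)


def reprocess(segments: list[Segment], workers: int = 4) -> list[LabeledSegment]:
    """Label segments concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(auto_label, segments))


if __name__ == "__main__":
    drive = [
        Segment("drive-001", 0, ("car", "car", "pedestrian")),
        Segment("drive-001", 1, ("cyclist",)),
        Segment("drive-001", 2, ("car", "traffic_sign")),
    ]
    for result in reprocess(drive):
        print(result.index, result.labels)
```

`pool.map` returns results in input order, so labels stay aligned with their segments even if segments finish out of order. Threads suffice for this lightweight stand-in; CPU-heavy reprocessing in Python would typically move to separate processes or, as the post describes, to many machines in the cloud.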

Unlike the largely hardware-based vehicle advancements of the past, software-driven system design is continuous. New features can be developed in the cloud and deployed at the edge, delivering an in-vehicle experience that keeps improving throughout the vehicle's lifecycle. Consumers can upgrade their vehicles on demand, moving to higher levels of autonomy over time.

Technology partner of choice

Working closely with automakers over the last decade or so, we've learned the importance of providing platforms that are open and highly scalable. We help automakers put the right infrastructure and ecosystem in place for optimal performance throughout the vehicle's lifecycle, an ecosystem spanning wireless network operators, cloud service providers, road infrastructure operators, map providers and more.

Building the infrastructure and technology partnerships required for autonomous vehicles is a time-consuming and investment-intensive process. However, with ongoing collaboration and advancements, we are steadily moving towards a future where autonomous vehicles will revolutionize transportation.

Anshuman Saxena

VP, Product Management, Qualcomm Technologies, Inc.

References

- National Highway Traffic Safety Administration. (March 3, 2022). NHTSA Proposes Significant Updates to Five-Star Safety Ratings Program. Retrieved on March 4, 2024 from: https://www.nhtsa.gov/press-releases/five-star-safety-ratings-program-updates-proposed

- Bartlett, Jeff S. (November 4, 2021). How Much Automation Does Your Car Really Have? Consumer Reports. Retrieved on March 4, 2024 from: https://www.consumerreports.org/cars/automotive-technology/how-much-automation-does-your-car-really-have-level-2-a3543419955/

- Insurance Institute for Highway Safety, Highway Loss Data Institute. (July 2023). Real-world benefits of crash avoidance technologies. Retrieved on March 4, 2024 from: https://www.iihs.org/media/290e24fd-a8ab-4f07-9d92-737b909a4b5e/oOlxAw/Topics/ADVANCED%20DRIVER%20ASSISTANCE/IIHS-HLDI-CA-benefits.pdf