Processors for Embedded Vision

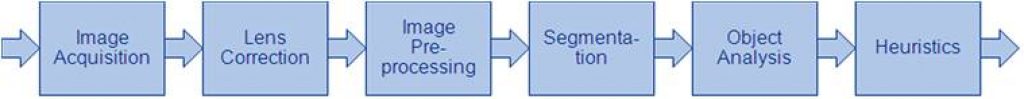

This technology category includes any device that executes vision algorithms or vision system control software. The following diagram shows a typical computer vision pipeline; processors are often optimized for the compute-intensive portions of the software workload.

The following examples represent distinctly different types of processor architectures for embedded vision, and each has advantages and trade-offs that depend on the workload. For this reason, many devices combine multiple processor types into a heterogeneous computing environment, often integrated into a single semiconductor component. In addition, a processor can be accelerated by dedicated hardware that improves performance on computer vision algorithms.

General-purpose CPUs

While computer vision algorithms can run on most general-purpose CPUs, desktop processors may not meet the design constraints of some systems. However, x86 processors and system boards can leverage the PC infrastructure for low-cost hardware and broadly supported software development tools. Several Alliance Member companies also offer devices that integrate a RISC CPU core. A general-purpose CPU is best suited for heuristics, complex decision-making, network access, user interface, storage management, and overall control. A general-purpose CPU may be paired with a vision-specialized device for better performance on pixel-level processing.

Graphics Processing Units

High-performance GPUs deliver massive amounts of parallel computing potential, and graphics processors can be used to accelerate the portions of the computer vision pipeline that perform parallel processing on pixel data. While general-purpose GPUs (GPGPUs) have primarily been used for high-performance computing (HPC), even mobile graphics processors and integrated graphics cores are gaining GPGPU capability, which brings GPU acceleration within the power constraints of a wider range of vision applications. In designs that require 3D processing in addition to embedded vision, a GPU will already be part of the system and can be used to assist a general-purpose CPU with many computer vision algorithms. Many examples exist of x86-based embedded systems with discrete GPGPUs.
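To illustrate what "parallel processing on pixel data" means, here is a minimal sketch in plain Python. It assumes an image stored as rows of (R, G, B) tuples; the function names `to_gray`, `gray_serial`, and `gray_parallel` are hypothetical and not taken from any vendor library. The point is only that each output pixel depends on a single input pixel, so the work can be split across many workers in any order. On a GPU, each call to the per-pixel function would correspond roughly to one thread. A Python thread pool is used here purely to show that independence, not to gain speed.

```python
from concurrent.futures import ThreadPoolExecutor


def to_gray(pixel):
    """Per-pixel kernel: integer luma approximation of an (R, G, B) tuple.

    The weights 77, 150, 29 sum to 256, so the result stays in 0..255.
    """
    r, g, b = pixel
    return (77 * r + 150 * g + 29 * b) >> 8


def gray_row(row):
    """Apply the per-pixel kernel to one image row."""
    return [to_gray(p) for p in row]


def gray_serial(image):
    """Reference version: visit rows one after another."""
    return [gray_row(row) for row in image]


def gray_parallel(image, workers=4):
    """Same result, but rows are handed to a pool of workers.

    No row needs data from any other row, which is the property that
    lets a GPU process thousands of pixels at once.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(gray_row, image))


if __name__ == "__main__":
    image = [
        [(255, 255, 255), (0, 0, 0)],
        [(255, 0, 0), (0, 255, 0)],
    ]
    print(gray_serial(image))
    print(gray_parallel(image) == gray_serial(image))
```

Operations that need neighboring pixels (filters, for example) are still parallel, but each worker then reads a small window of input instead of a single pixel.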

Digital Signal Processors

DSPs are very efficient for processing streaming data, since the bus and memory architecture are optimized to process high-speed data as it traverses the system. This architecture makes DSPs an excellent solution for processing image pixel data as it streams from a sensor source. Many DSPs for vision have been enhanced with coprocessors that are optimized for processing video inputs and accelerating computer vision algorithms. The specialized nature of DSPs makes these devices inefficient for processing general-purpose software workloads, so DSPs are usually paired with a RISC processor to create a heterogeneous computing environment that offers the best of both worlds.
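The streaming style of processing described above can be sketched as a small filter that consumes samples one at a time and never holds the whole image in memory. This is an illustrative sketch only: `stream_fir` is a hypothetical name, the taps are arbitrary, and a real DSP would run the multiply-accumulate loop in dedicated hardware and not in Python.

```python
from collections import deque


def stream_fir(samples, taps):
    """Yield one filtered value per input sample once the window is full.

    Only the last len(taps) samples are kept, mimicking data that is
    processed as it arrives from a sensor. Taps are applied to the
    window oldest sample first.
    """
    window = deque(maxlen=len(taps))
    for sample in samples:
        window.append(sample)
        if len(window) == len(taps):
            # One multiply-accumulate (MAC) per tap.
            yield sum(c * x for c, x in zip(taps, window))


if __name__ == "__main__":
    # A scan line arriving pixel by pixel (here, from an iterator).
    scan_line = iter([1, 2, 3, 4, 5])
    print(list(stream_fir(scan_line, [1, 1, 1])))
```

Because the function is a generator, its output can feed the next stage directly, so intermediate results also flow through without being buffered as full frames.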

Field Programmable Gate Arrays (FPGAs)

Instead of incurring the high cost and long lead times of a custom ASIC to accelerate computer vision systems, designers can use an FPGA as a reprogrammable solution for hardware acceleration. With millions of programmable gates, hundreds of I/O pins, and compute performance in the trillions of multiply-accumulates per second (tera-MACs), high-end FPGAs offer the potential for the highest performance in a vision system. Unlike a CPU, which has to time-slice or multi-thread tasks as they compete for compute resources, an FPGA can accelerate multiple portions of a computer vision pipeline simultaneously. Because the parallel nature of FPGAs offers so much advantage for accelerating computer vision, many of the algorithms are available as optimized libraries from semiconductor vendors. These computer vision libraries also include preconfigured interface blocks for connecting to other vision devices, such as IP cameras.
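Two back-of-the-envelope calculations help put the figures above in context. The sketch below assumes a simple model: a square convolution kernel applied once per pixel, and pipeline stages with fixed per-frame times. The function names and the example stage times are hypothetical, chosen only for illustration. The first function estimates the MAC rate a filter demands. The other two contrast a processor that runs stages one after another with hardware that runs all stages concurrently.

```python
def conv_macs_per_second(width, height, kernel_size, fps, channels=1):
    """MACs per second for a kernel_size x kernel_size convolution
    applied to every pixel of every frame."""
    return width * height * kernel_size * kernel_size * channels * fps


def time_sliced_frame_time(stage_times_ms):
    """One processor runs the stages back to back, so a new frame can
    start only after all stages of the previous frame have finished."""
    return sum(stage_times_ms)


def pipelined_frame_interval(stage_times_ms):
    """Each stage has its own hardware, so in steady state a new frame
    completes every time the slowest stage finishes. Transfer overhead
    between stages is ignored in this simplified model."""
    return max(stage_times_ms)


if __name__ == "__main__":
    # 3x3 filter on 1920x1080 video at 60 frames per second.
    print(conv_macs_per_second(1920, 1080, 3, 60))

    # Hypothetical stage times in milliseconds.
    stages = [4, 10, 6]
    print(time_sliced_frame_time(stages))
    print(pipelined_frame_interval(stages))
```

Under these assumptions, a single 3x3 filter on 1080p video at 60 frames per second needs roughly 1.1 billion MACs per second. With the example stage times, the pipelined arrangement delivers a frame every 10 ms versus every 20 ms for the time-sliced one. The latency of any individual frame is still the sum of the stage times in both cases.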

Vision-Specific Processors and Cores

Application-specific standard products (ASSPs) are specialized, highly integrated chips tailored for specific applications or application sets. ASSPs may incorporate a CPU, or use a separate CPU chip. By virtue of their specialization, ASSPs for vision processing typically deliver superior cost- and energy-efficiency compared with other types of processing solutions. Among other techniques, ASSPs deliver this efficiency through the use of specialized coprocessors and accelerators. Because ASSPs are by definition focused on a specific application, they are usually provided with extensive associated software. This same specialization, however, means that an ASSP designed for vision is typically not suitable for other applications. ASSPs’ unique architectures can also make them more difficult to program than other kinds of processors; some ASSPs are not user-programmable.

Intel Announces New Program for AI PC Software Developers and Hardware Vendors

Intel expands its AI PC Acceleration Program to better equip software developers and independent hardware vendors. What’s New: Intel Corporation today announced the creation of two new artificial intelligence (AI) initiatives as part of the AI PC Acceleration Program: the AI PC Developer Program and the addition of independent hardware vendors to the program. These are

Efinix Rolls Out Line of FPGAs to Accelerate and Adapt Automotive Designs and Applications

Designed to deliver the power behind the promise of the software defined vehicle, Efinix FPGAs uniquely positioned to drive modern vehicles forward March 25, 2024 12:01 AM Eastern Daylight Time–CUPERTINO, Calif.–(BUSINESS WIRE)–Efinix®, an innovator in programmable logic solutions, today announced a line of FPGA solutions designed specifically for the automotive industry to drive forward the

Enabling the Generative AI Revolution with Intelligent Computing Everywhere

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. This post originally appeared on World Economic Forum on January 15, 2024. Generative artificial intelligence is era-defining and could benefit the global economy to the tune of $2.6 to $4.4 trillion annually. To realize the full potential of

The Semiconductor Industry: Mobile and Consumer are Its Beating Heart

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. These market segments generated $296 billion in semiconductor revenue in 2023, with an expected CAGR (2023-2030) of 2.1%. Semiconductor content will remain stable at ~35% for the mobile and computing subsegments while

Blaize Releases Picasso Analytics

A new easy to use framework and toolkit to empower developers and accelerate advanced analytics for edge AI applications El Dorado Hills, CA, March 20, 2024 — Blaize, a leading provider of full-stack artificial intelligence (AI) solutions for automotive and edge computing in multiple large and rapidly growing markets, announced today the availability of a

Qualcomm Champions the Most Powerful Snapdragon 7 Series Yet, Snapdragon 7+ Gen 3, Featuring Exceptional On-device AI Capabilities

Highlights: Snapdragon 7+ Gen 3, showcases new-in-series on-device generative AI capabilities as well as a 15% boost in CPU performance and a 45% GPU improvement. This Mobile Platform is the first in the 7-series to support Wi-Fi 7 with HBS (High Band Simultaneous) Multi-Link for unrivaled connectivity. Snapdragon 7+ Gen 3 will be initially adopted

indie Semiconductor Announces Strategic Investment in AI Processor Leader Expedera

Partnership Capitalizes on Expedera’s Breakthrough AI Capabilities in Support of indie’s ADAS Portfolio Leverages indie’s Multi-modal Sensing Technology and Expedera’s Scalable NPU IP Yields Class-leading Edge AI Performance at Ultra-low Power and Low Latency March 20, 2024 04:05 PM Eastern Daylight Time–ALISO VIEJO, Calif. & SANTA CLARA, Calif.–(BUSINESS WIRE)–indie Semiconductor, Inc. (Nasdaq: INDI), an Autotech

Calculating Video Quality Using NVIDIA GPUs and VMAF-CUDA

This blog post was originally published at NVIDIA’s website. It is reprinted here with the permission of NVIDIA. Video quality metrics are used to evaluate the fidelity of video content. They provide a consistent quantitative measurement to assess the performance of the encoder. Peak signal-to-noise ratio (PSNR): A long-established quality metric that compares the pixel

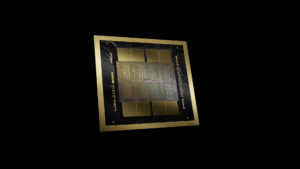

NVIDIA Blackwell Platform Arrives to Power a New Era of Computing

NVIDIA Blackwell powers a new era of computing, enabling organizations everywhere to build and run real-time generative AI on trillion-parameter large language models. New Blackwell GPU, NVLink and Resilience Technologies Enable Trillion-Parameter-Scale AI Models New Tensor Cores and TensorRT-LLM Compiler Reduce LLM Inference Operating Cost and Energy by up to 25x New Accelerators Enable

Qualcomm Brings the Best of On-device AI to More Smartphones with Snapdragon 8s Gen 3

Highlights: Snapdragon 8s Gen 3 Mobile Platform leverages specially selected premium capabilities for next-level on-device generative AI features, photography and gaming experiences. Snapdragon 8s Gen 3 will be adopted by major OEMs including Honor, iQOO, realme, Redmi and Xiaomi, with commercial devices expected to be announced in the coming months. Qualcomm Technologies, Inc. today announced

AI Accelerators and HPC: Latest Innovations in the Advanced IC Substrates Market

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. The demands and complex requirements of the semiconductor industry were a key driver, transformation enabler, and innovation-inducer of the advanced packaging industry, including the market of advanced IC substrates. While the advanced

The Power to Transform Retail with On-device Generative AI

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. NRF 2024 — Retail’s Big Show — is just a few days away as I write these words, and Qualcomm Technologies, Inc., alongside our customers, are excited to demonstrate how we are bringing to life digital retail

A One-stop Solution for Digital Skin Analyzers

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. In this blog, we are exploring types of skin analyzers prevalent in the industry and giving insights on the kind of cameras that should be used to get the best results. In the skincare industry,

University of Western Australia Latest to Join the BrainChip University AI Accelerator Program

Laguna Hills, Calif. – March 12, – BrainChip Holdings Ltd (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY), the world’s first commercial producer of ultra-low power, fully digital, event-based, neuromorphic AI IP, today announced that The University of Western Australia has joined the BrainChip University AI Accelerator Program, ensuring that UWA students have the tools and resources

ProHawk AI Unveils AI Computer Vision Solutions at NVIDIA GTC

Sponsoring the largest technology and AI events, to be held March 18-21 in San Jose, California Unveiling ProHawk’s edge to cloud solutions embedded across the suite of NVIDIA platforms Also demonstrating integrated solutions with BCD, Dedicated Computing, Network Optix, and Onyx Healthcare LAKE MARY, FLORIDA, March 12, 2024 – ProHawk Technology Group (ProHawk AI), a

Visidon AI-powered Low-light Video Enhancement Selected for Hailo-15 AI Vision Processor

The CNN-based technology developed by Visidon allows for significant improvement to video analytics accuracy in low-light environments, marking a new era for intelligent cameras deployed in public spaces, smart cities, factories, buildings, retail locations, and more. March 12th 2024 – The leading AI chipmaker Hailo has selected Visidon CNN-powered low-light video enhancement for their Hailo-15

AI on the Edge: Generative AI Technology Impacts, Insights and Predictions

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. Explore topics about on-device generative AI in 2023 with our subject matter experts Welcome back to AI on the Edge. In our last roundup of posts, we introduced our new series with topics relating to the ever-growing

BrainChip Boosts Space Heritage with Launch of Akida into Low Earth Orbit

Laguna Hills, Calif. – March 4, 2024 – BrainChip Holdings Ltd (ASX: BRN, OTCQX: BRCHF, ADR: BCHPY), the world’s first commercial producer of ultra-low power, fully digital, event-based, neuromorphic AI, today saw its Akida™ AI technology launched into low earth orbit aboard the Optimus-1 spacecraft built by the Space Machines Company. The successful launch

AMD Extends Market-leading FPGA Portfolio with AMD Spartan UltraScale+ Family Built for Cost-sensitive Edge Applications

New FPGAs offer high I/O counts, power efficiency, and state-of-the-art security features for embedded vision, healthcare, industrial networking, robotics, and video applications SANTA CLARA, Calif., — March 5, 2024 — AMD (NASDAQ: AMD) today announced the AMD Spartan™ UltraScale+™ FPGA family, the newest addition to the extensive portfolio of AMD Cost-Optimized FPGAs and adaptive SoCs. Delivering cost

How is AI Transforming the Semiconductor Industry?

This blog post was originally published at Digica’s website. It is reprinted here with the permission of Digica. The semiconductor industry stands as a driving force behind technological advancements, powering the devices that have become integral to modern life. As the demand for faster, smaller and more energy-efficient chips continues to grow, the industry faces