Kudos to EDN Magazine's Margery Conner for "tweeting" me a heads-up about a Samsung announcement at the recent ISSCC (International Solid-State Circuits Conference), originally covered by Nikkei Business Publications' Tech-On! and later also picked up by Gizmodo, The Verge and TechCrunch. At first glance, the image sensor above may look like a conventional Bayer-patterned CMOS IC, with twice as many green pixels as either red or blue, and with interpolation used to approximate each pixel's missing color data.
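For readers unfamiliar with the Bayer layout, here is a minimal Python sketch of an RGGB tile and of the simplest demosaicing step: bilinear interpolation of the missing green samples. The RGGB ordering, the function names `bayer_color` and `interpolate_green`, and the bilinear scheme are illustrative assumptions; production image pipelines typically use more sophisticated, edge-aware interpolation.

```python
# Illustrative sketch only: RGGB tile order and bilinear interpolation are
# assumptions chosen for clarity, not a description of any specific sensor.

def bayer_color(row: int, col: int) -> str:
    """Return the color filter over pixel (row, col) in an RGGB Bayer tile."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def interpolate_green(mosaic: list[list[float]]) -> list[list[float]]:
    """Estimate a full green plane from a raw Bayer mosaic.

    Green sites keep their measured value. Red and blue sites get the mean of
    their in-bounds up/down/left/right neighbours, all of which are green.
    """
    h, w = len(mosaic), len(mosaic[0])
    green = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            if bayer_color(r, c) == "G":
                green[r][c] = mosaic[r][c]
                continue
            neighbours = [
                mosaic[rr][cc]
                for rr, cc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                if 0 <= rr < h and 0 <= cc < w
            ]
            green[r][c] = sum(neighbours) / len(neighbours)
    return green


# Count filter colors on a 4x4 patch to show the 2:1:1 green:red:blue ratio.
counts = {"R": 0, "G": 0, "B": 0}
for r in range(4):
    for c in range(4):
        counts[bayer_color(r, c)] += 1
print(counts)
```

On a 4×4 patch this yields 8 green, 4 red and 4 blue sites, the 2:1:1 ratio described above; red and blue planes would be interpolated in the same spirit from their sparser neighbours.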

But peer closer and, underneath each eight-pixel cluster, you'll find a four-pixel-sized "Z" element. Its purpose is to capture depth data by means of a time-of-flight technique. Here are a few relevant excerpts from the Wikipedia description (of the entire camera, not just the sensor subsystem):

A time-of-flight camera (ToF camera) is a range imaging camera system that resolves distance based on the known speed of light, measuring the time-of-flight of a light signal between the camera and the subject for each point of the image… A time-of-flight camera consists of the following components:

- Illumination unit: It illuminates the scene. As the light has to be modulated with high speeds up to 100 MHz, only LEDs or laser diodes are feasible. The illumination normally uses infrared light to make the illumination unobtrusive.

- Optics: A lens gathers the reflected light and images the environment onto the image sensor. An optical band pass filter only passes the light with the same wavelength as the illumination unit. This helps suppress background light.

- Image sensor: This is the heart of the TOF camera. Each pixel measures the time the light has taken to travel from the illumination unit to the object and back. Several different approaches are used for timing.

- Driver electronics: Both the illumination unit and the image sensor have to be controlled by high speed signals. These signals have to be very accurate to obtain a high resolution. For example, if the signals between the illumination unit and the sensor shift by only 10 picoseconds, the distance changes by 1.5 mm. For comparison: current CPUs reach frequencies of up to 3 GHz, corresponding to clock cycles of about 300 ps – the corresponding 'resolution' is only 45 mm.

- Computation/Interface: The distance is calculated directly in the camera. To obtain good performance, some calibration data is also used. The camera then provides a distance image over a USB or Ethernet interface.
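The driver-electronics figures in the excerpt follow from the round trip: light covers the camera-to-object distance twice, so a timing error Δt shifts the measured distance by c·Δt/2. A short Python check, using the excerpt's rounded c = 3×10^8 m/s (the helper names are hypothetical):

```python
# Back-of-the-envelope check of the driver-electronics figures quoted above.
SPEED_OF_LIGHT_M_PER_S = 3.0e8  # rounded value used in the excerpt


def distance_error_m(timing_skew_s: float) -> float:
    """Range error caused by a timing skew between illuminator and sensor.

    The light travels out and back, so a timing error dt maps to a
    distance error of c * dt / 2.
    """
    return SPEED_OF_LIGHT_M_PER_S * timing_skew_s / 2


def clock_limited_resolution_m(clock_hz: float) -> float:
    """Distance 'resolution' if timing were quantised to one clock period."""
    return distance_error_m(1.0 / clock_hz)


print(f"10 ps skew   -> {distance_error_m(10e-12) * 1e3:.1f} mm")
print(f"300 ps cycle -> {distance_error_m(300e-12) * 1e3:.1f} mm")
print(f"3 GHz clock  -> {clock_limited_resolution_m(3e9) * 1e3:.1f} mm")
```

The first two lines reproduce the excerpt's 1.5 mm and 45 mm. The exact period of a 3 GHz clock is about 333 ps, which gives 50 mm; the excerpt's 45 mm comes from rounding the period down to 300 ps.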

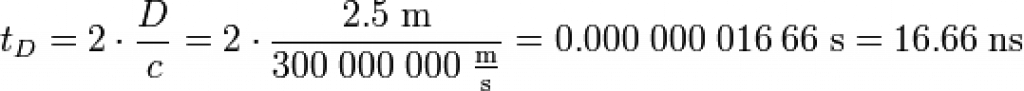

The simplest version of a time-of-flight camera uses light pulses. The illumination is switched on for a very short time, the resulting light pulse illuminates the scene and is reflected by the objects. The camera lens gathers the reflected light and images it onto the sensor plane. Depending on the distance, the incoming light experiences a delay. As light has a speed of approximately c = 300,000,000 meters per second, this delay is very short: an object 2.5 m away will delay the light by:

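$$t_D = 2 \cdot \frac{D}{c} = 2 \cdot \frac{2.5\ \text{m}}{300{,}000{,}000\ \text{m/s}} \approx 0.00000001667\ \text{s} = 16.67\ \text{ns}$$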
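The same round-trip relation gives the delay for any distance. A minimal Python sketch (the function name is hypothetical) that reproduces the 2.5 m example and shows how short these delays are across a few room-scale distances:

```python
SPEED_OF_LIGHT_M_PER_S = 3.0e8  # rounded value used in the excerpt


def round_trip_delay_s(distance_m: float) -> float:
    """Delay between emitting a pulse and receiving its echo: t_D = 2 * D / c."""
    return 2 * distance_m / SPEED_OF_LIGHT_M_PER_S


for d in (0.5, 2.5, 7.5):
    print(f"{d:4.1f} m -> {round_trip_delay_s(d) * 1e9:6.2f} ns")
```

The 2.5 m case prints 16.67 ns, matching the equation above, and even a 7.5 m target returns its echo within 50 ns.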

The pulse width of the illumination determines the maximum range the camera can handle. With a pulse width of, e.g., 50 ns, the range is limited to

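$$D_\text{max} = \frac{1}{2} \cdot c \cdot t_0 = \frac{1}{2} \cdot 300{,}000{,}000\ \text{m/s} \cdot 0.00000005\ \text{s} = 7.5\ \text{m} \qquad (t_0 = 50\ \text{ns})$$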
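Why the pulse width caps the range becomes clearer with the two-window (gated) readout commonly described for pulsed ToF pixels: one integration window is open while the pulse is emitted, a second opens immediately afterwards, and the fraction of echo charge landing in the second window is proportional to the delay. Once the delay reaches the pulse width t_0, the whole echo falls in the second window, so any distance beyond ½·c·t_0 produces the same reading. The Python sketch below assumes ideal rectangular pulses, no ambient light and noise-free charge; it illustrates the general technique, not the circuit in Samsung's sensor, and all names are hypothetical.

```python
SPEED_OF_LIGHT_M_PER_S = 3.0e8  # rounded value used in the excerpt


def max_range_m(pulse_width_s: float) -> float:
    """Largest distance the two-window scheme can resolve: D_max = c * t0 / 2."""
    return SPEED_OF_LIGHT_M_PER_S * pulse_width_s / 2


def simulate_windows(distance_m: float, pulse_width_s: float) -> tuple[float, float]:
    """Charge (arbitrary units) collected by two back-to-back windows.

    Window 1 is open during [0, t0) while the pulse is emitted; window 2 is
    open during [t0, 2*t0). An ideal rectangular echo delayed by t_d arrives
    during [t_d, t_d + t0), so with unit intensity it deposits t0 - t_d in
    window 1 and t_d in window 2.
    """
    delay_s = 2 * distance_m / SPEED_OF_LIGHT_M_PER_S
    if not 0.0 <= delay_s <= pulse_width_s:
        raise ValueError("object is beyond the maximum range for this pulse width")
    return pulse_width_s - delay_s, delay_s


def distance_from_windows(s1: float, s2: float, pulse_width_s: float) -> float:
    """Recover distance from the charge split: D = (c * t0 / 2) * S2 / (S1 + S2)."""
    return max_range_m(pulse_width_s) * s2 / (s1 + s2)


t0 = 50e-9  # 50 ns pulse, as in the excerpt
s1, s2 = simulate_windows(2.5, t0)
print(f"max range: {max_range_m(t0):.1f} m")
print(f"recovered distance: {distance_from_windows(s1, s2, t0):.2f} m")
```

Because distance is computed from a ratio of the two charges, the (unknown) reflectivity of the object cancels out, which is one reason this gated arrangement is a popular textbook example.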

These short times show that the illumination unit is a critical part of the system. Only with some special LEDs or lasers is it possible to generate such short pulses.

Specifically, this sensor was developed by Samsung’s Advanced Institute of Technology and should therefore be considered an R&D prototype, not a production-planned device. It time-multiplexes visible-spectrum and depth capture within each frame, delivering 1920×720-pixel color images (spatially interpolated to compensate for the silicon area consumed by the "Z" pixels) and 480×360-pixel depth images. Note the differing aspect ratios: 8:3 for the color images versus 4:3 for the depth images.
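The resolution difference is easy to quantify. A trivial Python check (names hypothetical) reduces each output resolution to its simplest ratio and compares pixel counts; it says nothing about how the prototype actually maps depth samples onto color pixels.

```python
from math import gcd


def aspect_ratio(width: int, height: int) -> tuple[int, int]:
    """Reduce a width x height resolution to its simplest integer ratio."""
    g = gcd(width, height)
    return width // g, height // g


color = (1920, 720)  # visible-light output resolution reported for the prototype
depth = (480, 360)   # depth-map output resolution reported for the prototype

print("color aspect:", aspect_ratio(*color))  # (8, 3)
print("depth aspect:", aspect_ratio(*depth))  # (4, 3)
print("pixel-count ratio:", (color[0] * color[1]) / (depth[0] * depth[1]))
```

The color images are 4× wider and 2× taller than the depth maps (an 8:1 pixel-count ratio). Assuming both outputs span the same field of view, each depth sample corresponds to a 4×2 block of color output pixels, which is at least consistent with one "Z" element per eight-pixel cluster.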

Although this particular sensor's pixel count falls well short of that of leading-edge production ICs, its potential to reduce cost and complexity is significant. "Stereo" depth schemes require two visible-light image sensors. Kinect's structured-light scheme requires two sensors, too, although one of them is an infrared sensor. Samsung's scheme, on the other hand, does it all with a single piece of silicon…one whose visible-light resolution will presumably increase substantially should Samsung decide to fabricate it on a leading-edge lithography process.