This blog post was originally published in the July 2015 edition of BDTI's InsideDSP newsletter. It is reprinted here with the permission of BDTI.

Lately, neural network algorithms have been gaining prominence in computer vision and other fields where there's a need to extract insights based on ambiguous data.

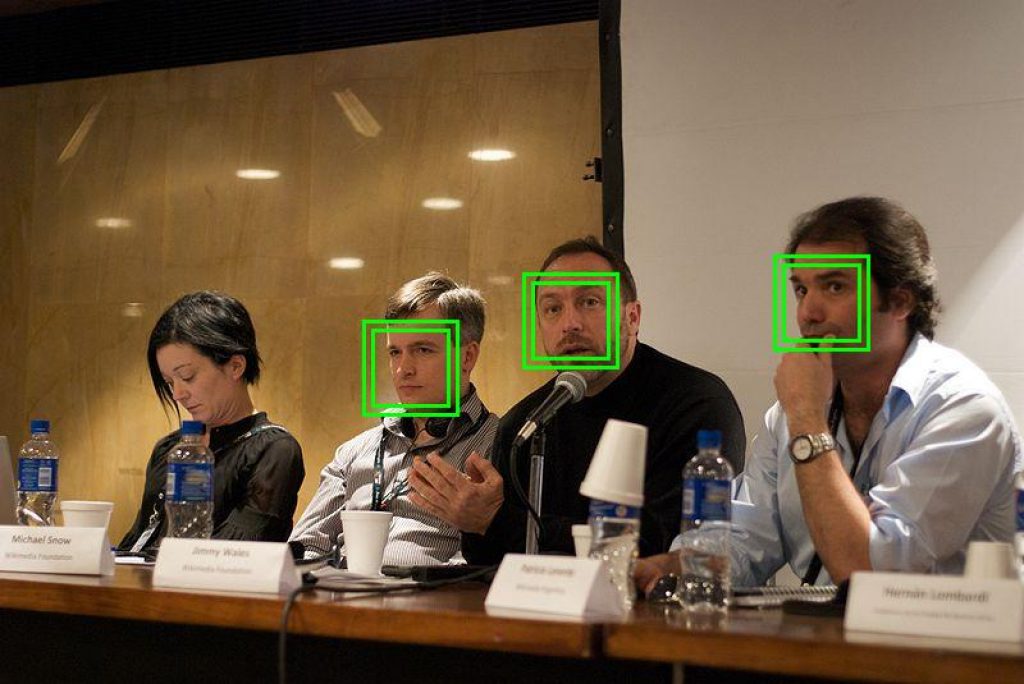

As I wrote last year, classical computer vision algorithms typically attempt to identify objects by first detecting small features, then finding collections of these small features to identify larger features, and then reasoning about these larger features to deduce the presence and location of an object of interest (such as a face). These approaches can work well when the objects of interest are fairly uniform and the imaging conditions are favorable, but they often struggle when conditions are more challenging. An alternative approach, convolutional neural networks ("CNNs") – massively parallel algorithms made up of layers of computation nodes – has been showing impressive results on these more challenging problems.

The algorithms embodied in CNNs are fairly simple, composed of operations like convolution and decimation. CNNs gain their powers of discrimination through a combination of exhaustive training on sample images and massive scale – often amounting to millions of compute nodes (or "neurons"), requiring billions of compute operations per image. This creates challenges when using CNNs for real-time or high-throughput applications.
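To make the two operations named above concrete, here is a minimal Python sketch of a single convolution followed by decimation, plus a rough count of the multiply-accumulate operations in one convolutional layer. The function names and the layer dimensions are illustrative assumptions of mine, not taken from any particular CNN or processor, and real networks add further steps (nonlinearities, many stacked layers) that are omitted here.

```python
def convolve2d(image, kernel):
    """'Valid' 2D convolution in the sliding-window form commonly used in CNNs
    (the kernel is not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def decimate(feature_map, step=2):
    """Keep every `step`-th sample in each dimension."""
    return [row[::step] for row in feature_map[::step]]


def conv_layer_macs(out_h, out_w, kh, kw, in_channels, out_channels):
    """Multiply-accumulates needed to compute one convolutional layer."""
    return out_h * out_w * kh * kw * in_channels * out_channels


# Tiny 4x4 "image" and 2x2 kernel, chosen only for illustration.
image = [[4 * r + c for c in range(4)] for r in range(4)]
kernel = [[1, 0],
          [0, 1]]

features = convolve2d(image, kernel)   # 3x3 feature map
reduced = decimate(features)           # 2x2 after keeping every other sample

# Hypothetical layer: 224x224 outputs, 3x3 kernels, 64 input and 64 output channels.
macs = conv_layer_macs(224, 224, 3, 3, 64, 64)
print(reduced)   # [[5, 9], [21, 25]]
print(macs)      # 1849688064
```

Under these assumed dimensions, a single layer already needs roughly 1.8 billion multiply-accumulates, which gives a feel for how a multi-layer network reaches billions of operations per image.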

Due to the massive computation and memory bandwidth requirements of sophisticated CNNs, CNN implementations often use highly parallel processors. General-purpose graphics processing units (GPGPUs) are popular for this purpose, and FPGAs are also used. But the structure of CNN algorithms is very regular and the types of computation operations used are very uniform, raising the question: how much can be gained by using a processor specifically designed for CNNs?

This is not a new question. Pioneers in neural network research have been developing specialized neural network chips for over 20 years, often with impressive results. But so far, none have been deployed at scale.

It seems possible, though, that this is about to change, for three reasons. First, CNNs are emerging as the clear approach of choice for a range of computer vision tasks. Second, the massive libraries of images required to train CNNs are increasingly available. And third, modern chip manufacturing processes make it practical to build chips with billions of transistors that fit in the price, size and power envelopes of mass-market devices.

One interesting recent development in this space is Synopsys' introduction of the DesignWare EV licensable silicon IP processor family. DesignWare EV processors consist of a combination of RISC CPU cores and an "object detection engine" containing two to four processing elements. The object detection engine is intended to efficiently implement CNNs. Note that Synopsys itself doesn't make chips; the DesignWare EV processors are offered as licensable silicon IP blocks, for inclusion into other companies' chip designs.

The cost of designing a complex chip means that new chip designs can usually be justified only with the expectation of selling chips in very large volumes – typically tens or hundreds of millions. Clearly Synopsys believes that the time has come for deployment of CNNs in mass-market applications. Is Synopsys right about this? I think they are. I'm reminded of advice from technology forecaster Paul Saffo: Since new technologies take 20 years to become "overnight successes," when seeking technologies that are ready to break out, look for something that has been failing for 20 years and build on that. Based on this criterion, the time may be ripe for neural network processors.

I'm very excited to report that my colleagues and I at BDTI will soon be starting our first evaluations of neural network processors. I look forward to sharing the results with you in the coming months. Stay tuned!

(In the meantime, to learn more about what Synopsys is doing with its neural network engine, check out the videos of presentations by Pierre Paulin and Bruno Lavigueur from the recent Embedded Vision Summit.)

By Jeff Bier

Founder: Embedded Vision Alliance

President and Co-Founder: BDTI