This blog post was originally published at CEVA's website. It is reprinted here with the permission of CEVA.

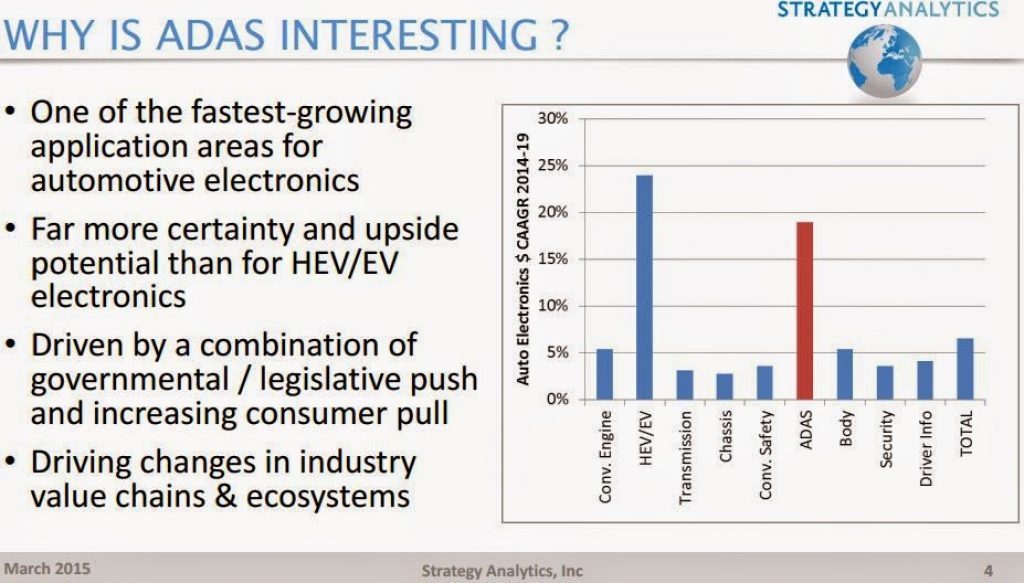

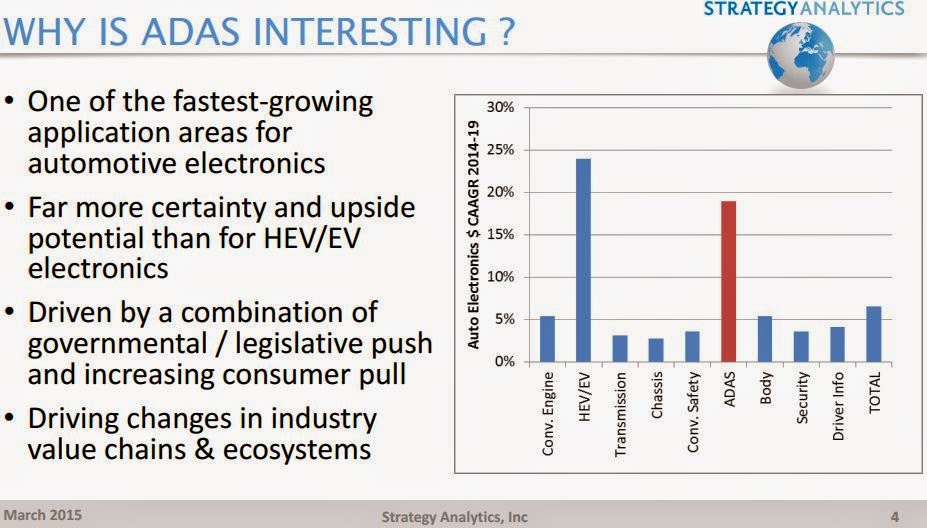

With an estimated 2020 market of $60bn and a CAGR of almost 23% between now and then, ADAS (advanced driver assistance systems) is becoming the new sweetheart of the automotive industry, driving most of the innovation within this enormous ecosystem. It is no surprise that announcements and future product introductions arrive almost daily, including the recent news about Google opening its very own car company, Uber making bold moves towards the robot-taxi future, and GM sponsoring the newly opened Mcity facility in Ann Arbor, Michigan.

Strategy Analytics recently published a presentation by Ian Riches, “Vision-Based ADAS: Seeing the Way Forward” (PDF). It makes a few interesting statements, and I encourage you to have a look at it. See the accompanying slide showing the importance of ADAS.

Two Conflicting Trends

The magnitude of the challenges that these and many other automotive-related companies face today has pushed engineering teams to master artificial intelligence and deep learning algorithms at the highest levels seen to date. What once would have been considered state-of-the-art technology developed by NASA, DARPA and various military and defense institutes is now being created by these automotive engineering teams, and will in the future trickle down to consumer electronic devices such as smartphones, wearables and drones.

The challenges faced by the automotive industry are unique because it blends two sometimes conflicting trends. On the one hand, there is the drive towards autonomous driving and the resulting requirement to meet the most demanding standards for safety and fault coverage. On the other, vehicles are (still) consumer devices in terms of cost sensitivity, so these ADAS systems must be cheap add-ons, especially as standards bodies such as Euro NCAP and NHTSA incorporate them into their safety rating systems. This requires out-of-the-box thinking on everyone’s part (algorithm developers, system manufacturers, silicon vendors and processor designers) and close cooperation across the whole ecosystem.

Take a relatively simple ADAS task such as lane departure warning. Running lane departure warning in “normal” driving conditions has become fairly mundane; the challenges appear when environmental conditions aren’t perfect: rainy weather, thick fog, direct light reflections, or a high-contrast scene such as exiting a tunnel. This is when you need to apply specialized filters and imaging algorithms to clean up the image, fuse multiple exposures (sometimes from different sensors), and use other similar techniques.
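To make the idea of fusing multiple exposures a little more concrete, here is a minimal sketch in plain Python. It is not taken from any production ADAS pipeline: the function names, the `sigma` parameter and the "closeness to mid-gray" weighting are all illustrative assumptions, and a real system would work on full-resolution frames with far more sophisticated weighting and alignment.

```python
import math


def well_exposedness(value, sigma=0.2):
    """Weight a pixel intensity in [0, 1] by its closeness to mid-gray (0.5).

    Hypothetical weighting chosen for illustration only.
    """
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))


def fuse_exposures(exposures, sigma=0.2):
    """Fuse same-sized grayscale images into one image.

    Each image is a list of rows, with pixel values in [0, 1].
    Each output pixel is a weighted average of the input pixels,
    favoring whichever exposure is neither too dark nor saturated.
    """
    height, width = len(exposures[0]), len(exposures[0][0])
    fused = []
    for y in range(height):
        row = []
        for x in range(width):
            values = [image[y][x] for image in exposures]
            weights = [well_exposedness(v, sigma) for v in values]
            total = sum(weights)
            row.append(sum(w * v for w, v in zip(weights, values)) / total)
        fused.append(row)
    return fused


# Toy "tunnel exit" scene: one dark interior pixel, one bright exit pixel.
short_exposure = [[0.05, 0.5]]  # interior underexposed, exit well exposed
long_exposure = [[0.5, 1.0]]    # interior well exposed, exit saturated
fused = fuse_exposures([short_exposure, long_exposure])
```

In this toy scene, each fused pixel is dominated by whichever exposure captured that region best, which is the property that matters for a high-contrast situation such as leaving a tunnel.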

It gets much more challenging when road signs and lanes aren’t clearly marked, when lanes converge, when there’s road construction (and lane markings are overlaid with different colors or simply disappear), or when there are no lane markings at all. Also, consider that there are multiple ways to mark lanes, some of which are geography dependent, such as continuous lines, dotted lines, double lines, or even Botts’ dots instead of painted markings. The system now has to imitate the human brain (did I mention artificial intelligence?) and collect many indications, including road markings, the edge of the road, construction signals, the location of cars ahead of us, the location of cars driving towards us, traffic signs, etc., in order to infer the “lane”.
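One very simplified way to picture this kind of inference is a confidence-weighted combination of independent cues. The sketch below is purely illustrative: the `Cue` type, the `infer_lane_center` function, the confidence values and the threshold are all hypothetical, and real systems rely on far richer models than a weighted average.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cue:
    """One hypothetical indication of where the lane is."""
    source: str           # e.g. "road_edge", "lead_vehicle", "markings"
    lane_center_m: float  # estimated lateral offset of the lane center, in meters
    confidence: float     # 0.0 (no trust) to 1.0 (full trust)


def infer_lane_center(cues: List[Cue],
                      min_total_confidence: float = 0.5) -> Optional[float]:
    """Combine cues into a single lane-center estimate.

    Returns the confidence-weighted average offset, or None when the
    combined evidence is too weak to commit to an estimate.
    """
    total = sum(cue.confidence for cue in cues)
    if total < min_total_confidence:
        return None
    return sum(cue.lane_center_m * cue.confidence for cue in cues) / total


# Construction zone: markings are unreliable, so other cues carry the estimate.
cues = [
    Cue("markings", lane_center_m=0.9, confidence=0.0),
    Cue("road_edge", lane_center_m=0.4, confidence=0.5),
    Cue("lead_vehicle", lane_center_m=0.2, confidence=0.5),
]
estimate = infer_lane_center(cues)
```

Returning `None` when the evidence is weak is a deliberate choice in this sketch: a warning system can stay silent, whereas acting on a poorly supported estimate would be worse than not acting at all.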

Harnessing the Power of Energy-Efficient Processors

Now take such a lane departure warning system and try to convert it into a lane keeping system, one that actively steers the car to keep it in lane in an autonomous driving environment. The accuracy of such a system must match, and sometimes surpass, that of human vision and intelligence. Implementing the advanced algorithms needed to process the vast array of visual information, while meeting aggressive power and cost budgets, is only possible by harnessing the power of energy-efficient vision processors.

Detecting (and keeping) the driver’s lane is really just the tip of the iceberg. In order to truly drive autonomously, a car would need to continuously identify every object around it (cars, traffic signs, road markings, lanes, pedestrians, bumps, bicyclists, traffic lights, road debris, etc.), understand the relationships between the different objects (for example, the behavior of the car in front of you is correlated with the traffic light) and make sound decisions in an instant.

In the future, we can foresee such safety-critical, life-saving AI systems running into moral questions as well. For example, should the car crash into an animal that has jumped onto the road, or swerve and hit a pedestrian on the sidewalk instead? And how should the system prioritize the driver’s safety versus the safety of other people on the road? With such a broad scope of artificial intelligence required, we should not expect to see fully autonomous cars on the road in the next 10 years, but we will certainly see significant steps in the form of advanced vision-based algorithms and the associated energy-efficient embedded processors.

Eran Briman

Vice President of Marketing, CEVA