This blog post was originally published at CEVA's website. It is reprinted here with the permission of CEVA.

The trade media is abuzz with speculation that the iPhone 7 will incorporate 3D vision using dual rear-facing cameras, adding depth-sensing capability for mapping out 3D environments and tracking body movements and facial expressions. The speculation is based on multiple 3D technology acquisitions that Apple has made during the past couple of years.

In April 2015, Apple snapped up multi-sensor camera technology firm LinX Imaging for an estimated $20 million. LinX built small cameras with multiple sensors that capture several images with a single push of the button and blend them into one image. Because each sensor sees the scene from a slightly different angle, the differences between the images can be used to generate depth information. The end result for the user is the ability to refocus the image on different areas or objects in the picture. Given enough processing power, this technology could work well on video too.
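To make the depth idea concrete, here is a minimal sketch of the textbook relation for two parallel cameras: depth equals focal length times baseline divided by disparity, where disparity is how far a point shifts between the two images. This is the generic pinhole stereo model, not a description of LinX's actual algorithm, and the function name, focal length and baseline below are hypothetical values chosen only for illustration.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Return depth in metres for a point seen by two parallel cameras.

    disparity_px    -- horizontal shift of the point between the two images
    focal_length_px -- focal length expressed in pixels (assumed value)
    baseline_m      -- distance between the two camera centres (assumed value)
    """
    if disparity_px <= 0:
        # Zero disparity would place the point infinitely far away.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera parameters, for illustration only.
FOCAL_LENGTH_PX = 1000.0
BASELINE_M = 0.01  # sensors 1 cm apart

for disparity in (20.0, 10.0, 5.0):
    depth = depth_from_disparity(disparity, FOCAL_LENGTH_PX, BASELINE_M)
    print(f"disparity {disparity:4.1f} px -> depth {depth:.2f} m")
```

Under these assumptions, halving the disparity doubles the estimated depth: nearby objects shift a lot between views, distant ones barely move. A per-pixel map of such depths is the kind of information a camera would need in order to separate foreground from background when refocusing.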

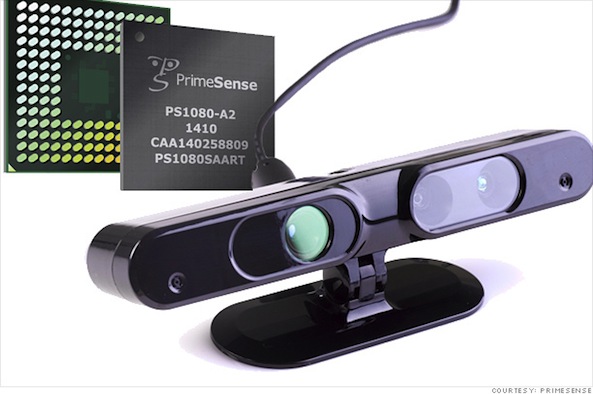

PrimeSense Chip Allowed Kinect to Perceive the World in Three Dimensions and Translate These Perceptions into a Synchronized Image

Earlier, in November 2013, Apple had announced the purchase of another Israel-based 3D imaging firm, PrimeSense, which provided the chips for 3D motion and gesture recognition in Microsoft’s original Kinect sensor system for the Xbox 360 game console. PrimeSense had created a system-on-chip (SoC) solution that merged a standard color video camera with a depth camera, encoding a 3D scene, the objects within it and their movements using near-infrared light invisible to the human eye.

The Evolution of 3D Vision

The chipset from PrimeSense carried out sophisticated analysis of the camera data, enabling the sensor system to map out walls and furniture in a room, capture 3D object shapes, and sense bodies along with their positions, movements and gestures. However, when Apple acquired PrimeSense for $360 million, industry observers reckoned that the Cupertino firm was going to use the 3D motion-sensing control technology in its much-talked-about iTV.

At that time, 3D sensing and machine vision inside smartphones and tablets seemed a far-fetched idea simply because of the power it consumed, usually over 1 watt. Kinect and other early 3D vision use cases had one thing in common: a power consumption level that smartphones and tablets simply couldn’t afford. So 3D vision technology remained the preserve of high-end imaging products for quite some time.

Matterport Also Used PrimeSense’s Carmine Sensor Chip in Its Immersive 3D Models

Take, for instance, upstart Matterport, which combined multiple sensors into a single camera unit to capture interiors in their entirety, mapping out objects and creating accurate representations quickly and cleanly.

Nevertheless, by the early 2010s, smartphones were starting to employ sensors such as accelerometers and gyroscopes to gather contextual information about the device itself. Next, 3D vision promised to give smartphones the ability to sense contextual signals from the world outside the device. However, unlike the 3D use cases above, smartphones required chips that would operate in the range of a couple of hundred milliwatts.

Smartphone Sensing Outside

Fast forward to 2015, and technology stalwarts like Amazon, Apple and Google are experimenting with the idea of using multiple cameras for facial tracking, gesture recognition and other 3D machine vision applications in smartphones, tablets and wearable devices. Computer vision technology using multi-camera 3D systems promises to let these portable devices see their surroundings more like a human does.

In hindsight, PrimeSense, which launched the Carmine camera sensor chip for the Kinect motion-sensing camera, eventually set the trend with the release of an embedded version, “Capri,” that came in a much smaller form factor. The Capri sensor chip was also cheaper, consumed far less power and was more viable for running alongside a mobile processor. PrimeSense’s design goals were a harbinger of phone makers’ dream of using computer vision technology to detect and map 3D spaces in real time.

Beyond 2015, 3D and Augmented Reality

Apple’s latest and possibly most interesting acquisition came in May 2015, when it bought German technology company Metaio for an undisclosed amount. Metaio has demonstrated how depth information can be combined with augmented reality to improve the augmentation of objects in a scene.

For years, Metaio has been one of the leading software companies for augmented reality. However, a closer look shows that Metaio only started using 3D data to improve the quality of its technology just before the Apple acquisition. Although the technology still has a long way to go and probably won’t be ready for the iPhone 7 launch, this is clearly the missing link with the previous Apple acquisitions.

Intelligent Vision Enables Smartphones and Tablets to Read and Interpret the Real World with Multiple Cameras

Presently, the existing hardware building blocks in mobile chipsets, such as the CPU and GPU, are not designed to handle 3D imaging tasks, which employ processing-intensive algorithms for image analytics and enhancement, computational photography, and machine vision. Here, companies like CEVA provide specialized vision processing cores that offload the processing of vision data from the CPU and avoid the resulting battery drain.

CEVA’s new XM4 intelligent image processor has been developed from the ground up to accelerate the most demanding computer vision and 3D image processing algorithms in a power-efficient manner. The CEVA-XM4, which targets smartphones, tablets, wearables and other mobile embedded devices, is a DSP and memory subsystem IP core that connects to hardware accelerators via dedicated ports and comes with an extensive set of computer vision software libraries to give algorithm developers a jump-start.

Find out more about the multiple facets of 3D vision technology and how intelligent vision processors can facilitate 3D vision in a white paper from CEVA Inc.

Yair Siegel

Director of Product Marketing, Imaging & Vision, CEVA