Computer vision applications, unlike traditional image processing, employ a heterogeneous mix of algorithms with a great diversity of control flows and memory access patterns. Therefore, vision processors are naturally evolving towards complex architectures that include, besides the CPU and GPU-like arrays of processing units, dedicated hardware blocks that specialize in certain portions of computer vision processing. Since vision is inherently a continuous, dynamic process, vision processors are frequently targeted at the mobile embedded domain, in devices such as wearables, smartphones, drones and robots, which impose stringent size and power constraints. For example, a vision processor might have a single texture-sampling unit shared by multiple processing elements, as opposed to a GPU, where the ratio of texture units to processing elements is higher.

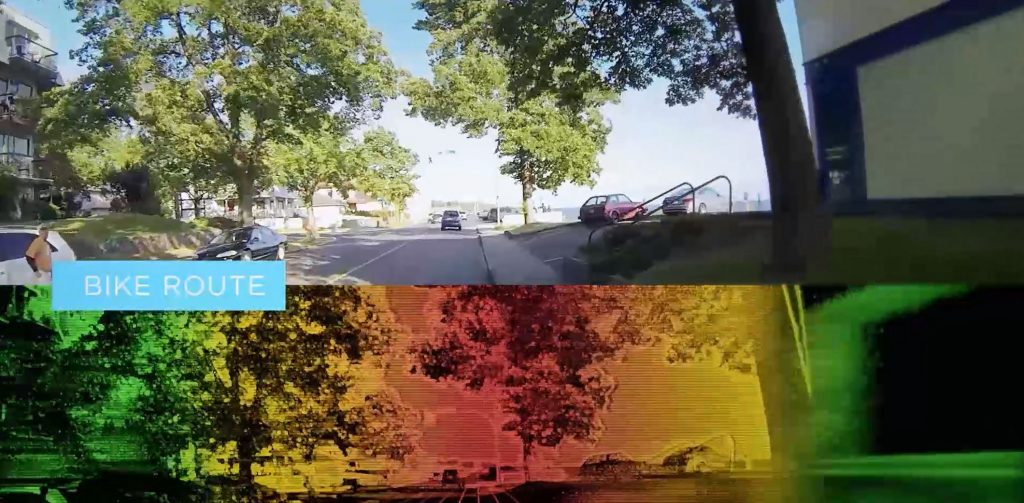

Considering these particularities of vision processors, we can imagine an OpenCL mapping of a dense depth mapping application onto such a device. A generic application could have the following steps:

- Acquire a stereo image pair

- Run a series of preprocessing filters (deBayer, denoise, contrast enhancement, lens distortion correction, etc.)

- Run the depth extraction algorithm

- Run an object detection algorithm

The first step is straightforward and done by the OpenCL host, which is the CPU or another processing core that has access to peripheral blocks for signal acquisition. The second step could be offloaded to an ISP block if available, through API calls that launch built-in kernels encapsulating access to the low-level hardware interface. The third step would be executed by the general processing cores and could make use of a vendor-specific extension that enables increased hardware-supported precision. The fourth step might benefit from an already available domain-specific library, easily accessed and used via a native kernel.
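The mapping described above can be written down as a small dispatch table. The sketch below is plain C rather than OpenCL host code, so it runs without an OpenCL runtime. The stage names, the `device_caps` flags and the fallback choices are hypothetical and introduced only for illustration. On a real platform the two flags would typically come from `clGetDeviceInfo` queries of `CL_DEVICE_BUILT_IN_KERNELS` and `CL_DEVICE_EXECUTION_CAPABILITIES`.

```c
/* How a pipeline stage reaches the hardware, following the mapping in the text. */
typedef enum {
    EXEC_HOST,            /* ordinary host code, outside any kernel          */
    EXEC_BUILTIN_KERNEL,  /* built-in kernel wrapping a fixed-function block */
    EXEC_OPENCL_KERNEL,   /* OpenCL C kernel on the general processing cores */
    EXEC_NATIVE_KERNEL    /* native kernel wrapping an existing library      */
} exec_path;

/* One pipeline stage with its preferred path and a portable fallback. */
typedef struct {
    const char *stage;      /* hypothetical stage name             */
    exec_path   preferred;  /* path suggested by the text          */
    exec_path   fallback;   /* assumed fallback, not from the text */
} stage_plan;

/* Hypothetical capability flags for the target device. */
typedef struct {
    int has_isp_builtin_kernels;  /* ISP exposed through built-in kernels */
    int supports_native_kernels;  /* device can execute native kernels    */
} device_caps;

/* The four steps of the dense depth mapping application. */
static const stage_plan depth_pipeline[] = {
    { "acquire_stereo_pair", EXEC_HOST,           EXEC_HOST          },
    { "preprocess",          EXEC_BUILTIN_KERNEL, EXEC_OPENCL_KERNEL },
    { "depth_extraction",    EXEC_OPENCL_KERNEL,  EXEC_OPENCL_KERNEL },
    { "object_detection",    EXEC_NATIVE_KERNEL,  EXEC_OPENCL_KERNEL },
};

/* Pick the preferred path when the device supports it, else the fallback. */
exec_path resolve_path(const stage_plan *s, const device_caps *caps)
{
    if (s->preferred == EXEC_BUILTIN_KERNEL && !caps->has_isp_builtin_kernels)
        return s->fallback;
    if (s->preferred == EXEC_NATIVE_KERNEL && !caps->supports_native_kernels)
        return s->fallback;
    return s->preferred;
}
```

On a device that reports both capabilities, `resolve_path` returns the preferred path for every stage. On a device with neither, preprocessing and object detection fall back to ordinary OpenCL C kernels, which keeps the application portable at some cost in performance.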

OpenCL's execution model covers a wide range of combinations of custom and general-purpose hardware resources, giving vendors freedom to trade between portability and performance. Vendor-specific extensions can become key features of a platform, as they enable it to differentiate at the algorithm core, where differentiation matters the most. Another way that OpenCL allows vendors to squeeze the last bit of performance from their hardware is to optimize the built-in function library, which also preserves portability. Furthermore, exposing particular hardware instructions is possible by implementing native built-in functions, a significant facility for embedded cores that can trade accuracy for speed. Lastly, for the most speed-critical parts of an algorithm, vendors can choose to offer the tools for writing hand-optimized assembly code and integrating it into OpenCL kernels.
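The accuracy-for-speed trade behind native built-in functions can be illustrated with a software analogy. The sketch below is plain C, not OpenCL C, and is not how any particular vendor implements its native functions: it approximates a reciprocal square root with the well-known bit-level seed followed by Newton-Raphson refinement, where each extra step costs more arithmetic and buys more accuracy. The function names are hypothetical. In an OpenCL C kernel the corresponding choice would be between `rsqrt` and `native_rsqrt`, the latter having implementation-defined accuracy.

```c
#include <stdint.h>
#include <string.h>

/* One Newton-Raphson refinement step for y ~ 1/sqrt(x). */
static float rsqrt_newton_step(float x, float y)
{
    return y * (1.5f - 0.5f * x * y * y);
}

/* Fast path: bit-level initial guess plus a single refinement step.
 * Valid for positive, normal x; relative error stays below about 0.2%. */
float rsqrt_fast(float x)
{
    uint32_t bits;
    float y;
    memcpy(&bits, &x, sizeof bits);        /* reinterpret the float bits safely */
    bits = 0x5f3759dfu - (bits >> 1);      /* widely known magic-constant seed  */
    memcpy(&y, &bits, sizeof y);
    return rsqrt_newton_step(x, y);
}

/* Slower path: one more refinement step, noticeably more accurate. */
float rsqrt_refined(float x)
{
    return rsqrt_newton_step(x, rsqrt_fast(x));
}

/* Error measure that needs no math library: |x * y^2 - 1| is zero
 * exactly when y equals 1/sqrt(x). */
float rsqrt_residual(float x, float y)
{
    float r = x * y * y - 1.0f;
    return r < 0.0f ? -r : r;
}
```

Calling `rsqrt_residual(x, rsqrt_fast(x))` and `rsqrt_residual(x, rsqrt_refined(x))` for a few positive inputs shows the cheaper variant leaving a visibly larger residual, which is the kind of trade an embedded core can make when the algorithm tolerates it.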

By Ovidiu Vesa

Software Architect, Movidius