In this edition of Embedded Vision Insights:

- Embedded Vision Summit Program

- Heterogeneous Recognition Algorithms

- OpenVX For Portable, Efficient Vision Software

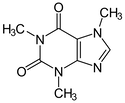

- Deep Learning from a Mobile Perspective

- Embedded Vision Community Conversations

- Embedded Vision in the News

- Upcoming Industry Events

| LETTER FROM THE EDITOR |

The Embedded Vision Summit is just a month and a half away, and the published conference program is rapidly nearing completion. If you haven't visited the Summit area of the Alliance website lately, I've no doubt you'll be impressed with the breadth of presentations and workshops already listed there, with the remainder to follow shortly. Highlights include a Deep Learning Day on May 2, keynotes from Google and NASA on May 2 and 3, respectively, and multiple workshops on May 4. I encourage you to register now for the Summit, as space is limited and seats are filling up!

While you're on the Alliance website, make sure to check out all the other great new content there. It includes the latest in a series of technical articles from Imagination Technologies on heterogeneous computing for computer vision, along with a video tutorial from Basler on image quality and a Mobile World Congress show report from videantis. Equally notable are the ten new demonstration videos of various embedded vision technologies, products and applications from Alliance members.

Finally, I encourage you to check out a new section of this newsletter, which lists upcoming vision-related industry events from the Alliance and other organizations. If you know of an event that should be added to the list, please email me the details.

Thanks as always for your support of the Embedded Vision Alliance, and for your interest in and contributions to embedded vision technologies, products and applications. Please don't hesitate to let me know how the Alliance can better serve your needs.

Brian Dipert

| FEATURED VIDEOS |

"Programming Novel Recognition Algorithms on Heterogeneous Architectures," a Presentation from Xilinx

BDTI Demonstrations of OpenCV- and OpenCL-based Vision Algorithms

| FEATURED ARTICLES |

OpenVX Enables Portable, Efficient Vision Software

Computer Vision Empowers Autonomous Drones

| FEATURED DOWNLOADS |

Deep Learning from a Mobile Perspective

| FEATURED COMMUNITY DISCUSSIONS |

FPGA Design Engineer (Job Posting)

| FEATURED NEWS |

iCatch Technology Selects CEVA Imaging and Vision DSP for Digital Video and Image Product Line

FotoNation to Deliver Next Generation Multimedia Experiences on Smartphones

ON Semiconductor Expands Optical Image Stabilization Portfolio, Bringing Superior Picture Quality to Built-In Camera Applications

Texas Instruments "Jacinto" Processors Power Volkswagen's MIB II Infotainment Systems

| UPCOMING INDUSTRY EVENTS |

NVIDIA GPU Technology Conference (GTC): April 4-7, 2016, San Jose, California

Embedded Vision Summit: May 2-4, 2016, Santa Clara, California

NXP FTF Technology Forum: May 16-19, 2016, Austin, Texas

Augmented World Expo: June 1-2, 2016, Santa Clara, California

Low-Power Image Recognition Challenge (LPIRC): June 5, 2016, Austin, Texas

Sensors Expo: June 21-23, 2016, San Jose, California

IEEE Computer Vision and Pattern Recognition (CVPR) Conference: June 26-July 1, 2016, Las Vegas, Nevada

IEEE International Conference on Image Processing (ICIP): September 25-28, 2016, Phoenix, Arizona