This blog post was originally published in the mid-April 2017 edition of BDTI's InsideDSP newsletter. It is reprinted here with the permission of BDTI.

GPS has proven to be an extraordinarily valuable and versatile technology. Originally developed for the military, today GPS is used in a vast and diverse range of applications. Millions of people use it daily for navigation and fitness. Farmers use it to manage their crops. Drones use it to automatically return to their starting location. Railroads use it to track train cars and other equipment.

In the 40 years since the first GPS satellite was launched, GPS receivers have shrunk dramatically in size, cost and power consumption. This means that GPS can now be deployed in almost any type of system. Today, GPS receivers are integrated into wristwatches and pet collars – to say nothing of the billion-plus smartphones shipped last year. GPS hardware, software and services are expected to become a $100 billion market in the next few years.

Nevertheless, GPS has its limitations. It doesn’t work well indoors, or even in outdoor locations that lack an unobstructed view of the sky. (You know, like big cities.) And, in typical applications, it’s only accurate to within a few meters – more than sufficient to enable driving directions, for example, but not nearly accurate enough for autonomous vehicles.

What if we were to develop a new technology that was better than GPS? A technology that worked in urban environments, as well as indoors, and that was accurate to the centimeter level? That would be awesome, right? With this technology, our mobile phones would be able to guide us through train stations and shopping malls, and autonomous vehicles could know their locations with high accuracy.

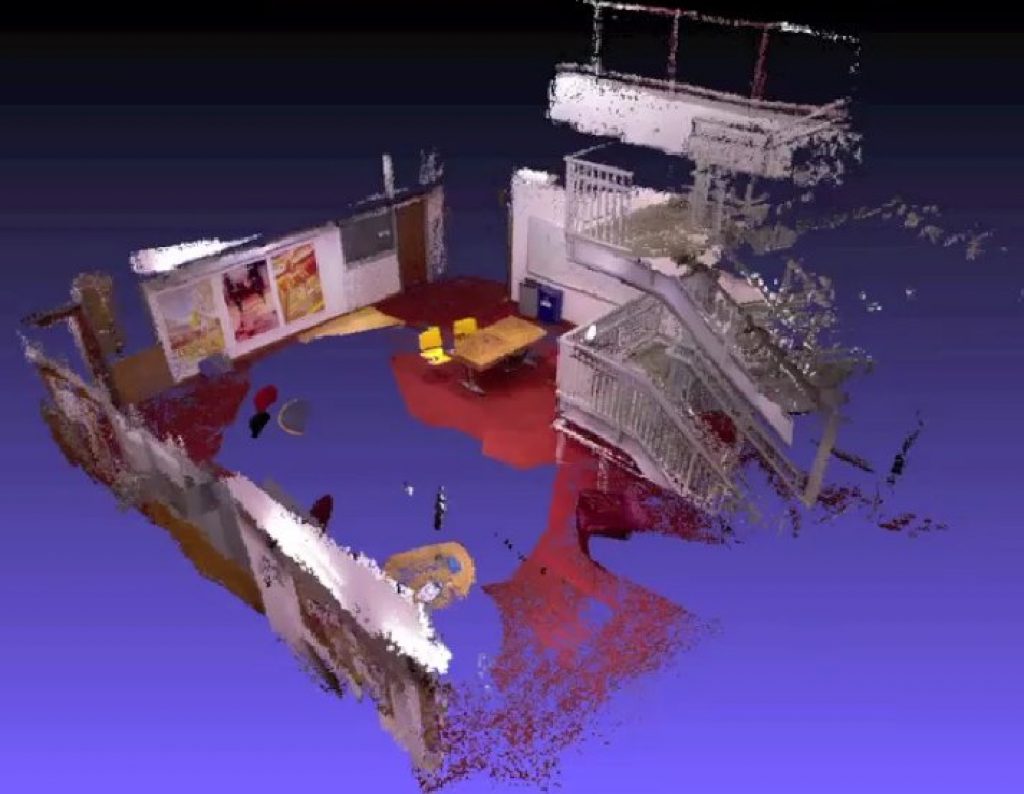

It turns out that this technology already exists. It’s often referred to by the acronym SLAM, which stands for simultaneous localization and mapping. SLAM refers to a large family of techniques and technologies that can be used to create a map of a space and determine the location of a device within that space. SLAM can use many different types of sensors, from LIDAR to tactile sensors. When SLAM uses image sensors, it is often referred to as "VSLAM" – or "visual SLAM."

Besides working indoors and providing increased accuracy, VSLAM has some other distinct advantages over GPS. For example, it allows devices to navigate without depending on any external technology such as satellites. It enables devices to construct 3D maps on the fly. And it facilitates mapping not only of spaces (rooms, roads, and the like), but also of the objects in those spaces (vehicles, furniture, people).

Thanks to rapid improvements in computer vision algorithms and embedded processors, it’s now feasible to integrate VSLAM into cost-, size- and power-constrained devices. For example, last year the first mobile phones debuted with Google’s "Tango" technology, based on VSLAM.

As I’ve mentioned in earlier columns, we live in a 3D world, and enabling our devices to understand this world is key to enabling them to be safer, more autonomous, and more capable. SLAM is still in its infancy, practically speaking, but long-term, I believe that 3D perception will be one of the most important capabilities required for creating "machines that see."

That’s why I’ve made 3D perception one of the main themes of this year’s Embedded Vision Summit, taking place May 1-3, 2017, in Santa Clara, California. Marc Pollefeys, Director of Science for Microsoft HoloLens and a professor at ETH Zurich, has been pioneering 3D computer vision techniques (including VSLAM) for over 20 years, and will give the opening keynote on May 1st. His talk will be followed by an outstanding line-up of presentations on 3D sensors, algorithms, and applications – including some, like a tiny robot, that may surprise you.

All told, the Summit program will feature more than 90 speakers and 100 demonstrations, with emphasis on deep learning, 3D perception, and energy-efficient computer vision implementation. If you’re interested in implementing visual intelligence in real-world devices, mark your calendar and plan to be there. I look forward to seeing you in Santa Clara!

Jeff Bier

Co-Founder and President, BDTI

Founder, Embedded Vision Alliance