This blog post was originally published at videantis' website. It is reprinted here with the permission of videantis.

It’s been several weeks since AutoSens was held in Detroit and there are only a few weeks left until AutoSens will again land at the beautiful AutoWorld car museum in Brussels in September. Seems like this is the right time to again write about what’s going on in automotive sensing and perception at AutoSens.

We really like AutoSens, and writing show reports has become a bit of a habit. You can read and compare our previous show reports from 2016, 2017, and 2018 on our website.

One key thing to note: just before the Detroit event, the organization announced that a third annual location will be added to the AutoSens calendar. November 2020 will see the first Hong Kong-based event, bringing AutoSens to Asia as well.

AutoSens Detroit by the numbers

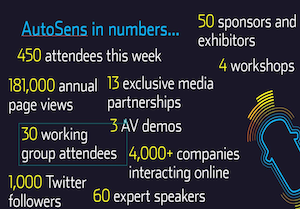

If you haven’t attended an AutoSens show yet, and to give you a good feel for its size, here’s the conference in numbers:

- 450 attendees (550 in Brussels 2018)

- 2 days of conference, 1 day of workshops

- 60 expert speakers (70 in Brussels)

- 50 exhibitors (same as in Brussels)

- 30 IEEE P2020 work group attendees (60 in Brussels)

- 2 parallel tracks of talks (versus 3 in Brussels)

So, the event is only slightly smaller than the main Brussels event. Robert Stead, the AutoSens managing director, has mentioned that he doesn’t want AutoSens to grow much further, and 400-600 attendees does feel like about the perfect size. Since many people attend this show every year, and many members are active as speakers, exhibitors, or workshop attendees, the event has a real community feel to it. This makes it easy to meet new industry colleagues and have interesting discussions. AutoSens is very different from a large show like CES, where the 170K+ people in attendance mostly move by in an anonymous crowd.

Workshops

The week started with a day of workshops. There were different half-day sessions addressing topics such as infrared camera sensing, enabling technologies for automated driving, the Robot Operating System, material interactions for autonomous sensor applications, and concluding with sponsored round table discussions.

The P2020 Standards Group also met again for two days. This workgroup specifies ways to measure and test automotive image capture quality. The best resource on what the group has been working on is probably still the 32-page whitepaper, which was published in September 2018. P2020’s importance seems to be growing, with OEMs also picking up techniques and methods that have been developed inside P2020.

The talks

While AutoSens is all about the community, over the course of two days there are many great talks to attend, covering the whole spectrum of everything ADAS and autonomous. All the presentation slides can be found online, although the very latest presentations from Detroit are only available for a small fee. AutoSens also has a YouTube channel, where the talks from previous events are made available.

Here’s an overview of Detroit’s presentations, split into the following categories: sensing, image sensors, Lidar, unique sensors, image processing, perception and AI, applications, development support, supporting technologies, and business aspects.

Sensing

These intelligent automotive applications all start with the sensor. The data is only as good as the sensor that captures it, so the more powerful the sensor, the more powerful the use case can be. The focus at AutoSens was on image sensors and Lidar, with very little on radar. There were also a few unique use cases highlighted with non-typical sensors.

Image sensors

Sony spoke about RGGB vs RCCB pixel configurations and explained that the ISP pipeline is key to getting good performance out of these. OmniVision discussed their RGB-IR sensor, specifically targeting the rapidly growing field of interior driver and passenger monitoring systems. Besides detection and monitoring of passengers, OmniVision showed detection of unattended children, object detection, and enhanced viewing at night. They mentioned that a rolling shutter is fine for most in-cabin applications.

Lidar

The University of Wisconsin-Madison described a new way to code the emitted light using Hamiltonian coding, resulting in much higher accuracies. AEye spoke about changing the sampling, making it scene-aware instead of using a regular grid, again resulting in higher accuracies. Trioptics spoke about alignment and testing of Lidar systems for mass production, a key step to bring such systems to consumer vehicles. Imec described their 3D imaging system consisting of Lidar, mm-wave Radar, and gated imagers with sensor fusion and perception algorithms, modeling and simulation tools. On Semi gave an overview of their SiPMs and SPAD arrays and how they’re used in Lidar systems.

Unique sensors

Then there were a couple of talks that presented unique ways to use sensors. One interesting example came from WaveSense, which uses radar to capture a signature of the ground underneath the car. By storing and comparing these fingerprints between vehicles, the precise location of a vehicle can be deduced. This can be a key datapoint since GPS is not accurate enough for many applications. Also, using cameras for this localization task isn’t always possible due to difficult weather conditions, or when there are very few landmarks that can be used for identification. In addition, other vehicles surrounding the car may block the camera’s view. The WaveSense radar solution covers all these use cases in a robust manner. Seek Thermal proposed to make thermal imaging sensors a first-class citizen among the other commonly used sensors. Since many accidents happen in the dark, thermal imaging can play a major role in detecting situations that normal cameras can’t, and so help prevent such accidents.
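To give a feel for the fingerprint-matching idea, here is a minimal Python sketch. It is not WaveSense's actual method, which the talk summary above does not detail. It assumes, purely for illustration, that the stored ground signature is a 1-D list of numbers along a road and that the vehicle's live scan is a short, noisy excerpt of it. The names `normalized_correlation` and `locate`, as well as the synthetic data, are hypothetical.

```python
import math
import random


def normalized_correlation(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a flat signal carries no usable fingerprint
    return cov / math.sqrt(var_a * var_b)


def locate(stored_map, scan):
    """Slide the scan along the stored map; return (best_offset, best_score)."""
    best_offset, best_score = 0, -1.0
    for offset in range(len(stored_map) - len(scan) + 1):
        window = stored_map[offset:offset + len(scan)]
        score = normalized_correlation(window, scan)
        if score > best_score:
            best_offset, best_score = offset, score
    return best_offset, best_score


# Synthetic stand-in for a stored ground signature (assumption, not real data).
rng = random.Random(0)
stored_map = [rng.gauss(0.0, 1.0) for _ in range(500)]

# The "live" scan: a 40-sample excerpt of the map with added measurement noise.
true_offset = 230
scan = [v + rng.gauss(0.0, 0.2)
        for v in stored_map[true_offset:true_offset + 40]]

estimated_offset, score = locate(stored_map, scan)
print(f"true offset: {true_offset}, estimated: {estimated_offset}, score: {score:.2f}")
```

A real system would work with multi-dimensional radar returns, a coarse prior position from GPS to limit the search range, and sub-sample interpolation, but the core step of comparing a live signature against a stored one is the same in spirit.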

Image processing

After the image and data capture phase is complete, the next step is to process this data into more meaningful images that are ready for further processing downstream by intelligent algorithms or the human eye.

ARM spoke about optimizing the ISP that translates raw image sensor data into pictures. They highlighted that ISPs have two functions: making pretty pictures for humans and providing images for the computer vision / deep learning and SLAM algorithms downstream. An interesting note was that any ISP could also be replaced by a neural network. So why do we still need ISPs then? ARM stated that ISPs are, above all, more efficient than neural nets; they do need to be tuned, but can otherwise take the place of neural nets. In addition, a tunable ISP enables switching to new sensors with different characteristics without having to retrain the neural networks. GEO Semiconductor’s talk focused purely on ISP tuning for automotive, showing many unique challenges that show up in automotive only and not so much in typical consumer applications. Valeo focused on mitigating another effect that shows up primarily in automotive: LED flicker. MIT gave a talk that focused on how to see through fog using a camera, a laser, and a SPAD camera.

Perception and AI

After the data is captured and processed, a key next step is to intelligently analyze that data and extract meaning from it. Typical use cases are to detect vehicles in view, pedestrians, lanes, traffic signs, where the road begins and ends, and many more.

Brodmann17 gave an interesting talk focusing on running such neural networks in real time on small systems. At videantis we see quite a few designs that need to run such algorithms at low power and with low compute requirements such that they can be embedded in small cameras or ECUs in the vehicle. While we can provide many operations per Watt, limiting compute requirements always remains an important aspect. Brodmann17 gave an overview of some of the unique tricks they use, like sharing of weights and computations in the networks. Voxel51 focused on another aspect of AI: the immense amount of video that algorithm designers capture and store to train the neural nets with. They showed their video management tool, which includes AI aspects such that algorithm designers can quickly access and extract the videos that are most meaningful for their areas of concern. Perceptive Automata focused on another aspect where automotive needs AI: predicting human behavior. Their algorithms address questions like “Is she going to cross the road or not?” or “Does he know our car is here?” Answering these questions about intent and awareness means having to predict human behavior, something we do all the time when driving a car.

Applications

Applications tie all three steps together: sensing, processing, and intelligently analyzing, and then take action. There are many imaging-centric applications possible in the vehicle, but AutoSens mostly focuses on the underlying technologies that drive these applications, not the end applications themselves. One vendor showing an end application was Mercedes-Benz, which highlighted their MBUX interior assistant. This system uses cameras and depth sensors to detect hand movements and gestures and uses this information to highlight icons on the dashboard, distinguish between driver and passenger, activate individual seat adjustments, reading lights, and more.

Development support

Besides the base technologies of sensing, processing and the AI that make up the applications, there’s a broad set of additional supporting tools that are used during the development of these complex systems.

One task is to ensure the system behaves correctly under all circumstances. Since you can’t simply test everything on the road and drive millions of miles for every line of code you change, simulation is key. Siemens focused their presentation on validation using digital twins in combination with formal verification. Dell EMC’s CTO Florian Baumann showed the challenges and requirements of synthetically generating sensor data for the development of ADAS systems and related aspects. Parallel Domain’s talk focused more specifically on automating the creation of accurate virtual worlds, which can then function as a simulated environment for the sensors.

Codeplay gave a talk about how to develop safety-critical, high-performance software for automotive applications. They distributed an extensive slide deck containing a wealth of information touching on many aspects of the development flow and new C++ standards.

Supporting technologies

The Changan US R&D Center spoke about vehicle-to-everything communications and Synopsys gave an overview of MIPI and how it addresses the automotive requirements. MIPI is a leading open interfacing standard used for sensor chip connections.

Maps are another key aspect of autonomous driving, and interestingly, both leading map makers, Here and TomTom, also presented at AutoSens. Here discussed their HD Maps based on camera and Lidar sensing, and TomTom focused on combining Lidar, camera, GPS, IMUs, and crowdsourced data.

Business aspects

Finally, Rudy Burger from Woodside Capital presented an overview of where most of the investments in intelligent vehicle technologies have been made.

Rudy showed that most of the investment has gone into companies that develop “full stack autonomous software” and LiDAR technology. Rudy showed that 80 LiDAR companies have been founded over the last 12 years with an aggregate funding of $1B. Another interesting data point was that 3 of the top 5 best funded private companies are Chinese.

Rudy mentioned that when he speaks to the public relations division of an autonomous vehicle company, they happily talk about launching robotaxis this year. Yet when he speaks to engineers at the same company, they tell him they’re merely at the research stage.

Conclusion

Robert Stead summed it up as follows in the AutoSens video where he highlights his three takeaways from the conference:

- According to the Gartner hype curve, autonomous driving is in the trough of disillusionment, but there were no disillusioned people at the show.

- Focus on the wins: there’s no mass deployment of autonomous vehicles yet, but there are lots of advanced driver assistance systems being deployed in our vehicles, making them safer and easier to drive.

- Collaboration: there are many joint ventures and partnerships being formed. Making all our vehicles perfectly safe and able to drive themselves is a big task that no single company can take on alone.

Conferences such as these show how big an engineering problem it is to make our vehicles safe and ultimately able to drive themselves under any circumstance. It touches optics, sensors, intelligent processing and perception, and mapping.

The conference also made it clear that there’s no “one sensor to rule them all”. On the contrary: different types of sensors are being introduced, and even “standard image sensors” are changing and becoming more application specific.

One thing is clear: it’s very hard to make vehicles fully autonomous. We’re still at the beginning of this massive change that’s coming. But besides making vehicles fully autonomous, there are many active safety systems and in-cabin applications being worked on. While progress sometimes seems slow, the OEMs’ appetite to bring better vehicles to consumers is not getting any smaller. Many attendees at the show aren’t working on technology that’s a pipe dream. The ones we spoke to were primarily working on developing systems that will actually make it into consumers’ hands in a few years.

At videantis we remain very excited to work in this field and see such a strong demand for efficient and flexible deep learning acceleration and visual computing. At videantis we focus on delivering the required complete, high-performance processing solutions at very low power. After all, you can have the best sensor in the world, but if you don’t have the required processing resources to analyze its data, it’s pretty useless.

AutoSens Brussels is scheduled to take place 17-19 September 2019 in Brussels. We’re looking forward to meeting everyone there, or preferably sooner!

Please don’t hesitate to contact us to learn more about our unified deep learning/vision/video processor and software solutions.

Marco Jacobs

Vice President of Marketing, videantis