This blog post was originally published at Synaptics’ website. It is reprinted here with the permission of Synaptics.

While the number and variety of devices we use at home, work and play continue to grow, we are also seeing an ongoing evolution in how we interact with them to derive more value, entertainment or convenience. Consumers are no longer content to turn a knob, push a button or flick a switch to engage with their televisions, appliances or home security gear. More and more, we want these devices to recognize our voices and gestures, our faces and movements. Video, camera, voice and audio sensing are quickly becoming must-have features in many consumer electronics products.

And our ‘smart’ devices are becoming increasingly intelligent, moving beyond simply recognizing intent to interpreting preference and presence, which allows them to operate even more efficiently. Perceptive intelligence is the watchword in the new era of IoT.

At the same time, consumers are demanding faster response times and more secure, private operation from their electronics. This is driving the adoption of edge computing, which reduces dependence on cloud processing and the security risks that come with it. Embedding machine learning-based intelligence, in the form of neural networks, locally on the device itself holds the promise of enabling a new generation of IoT possibilities.

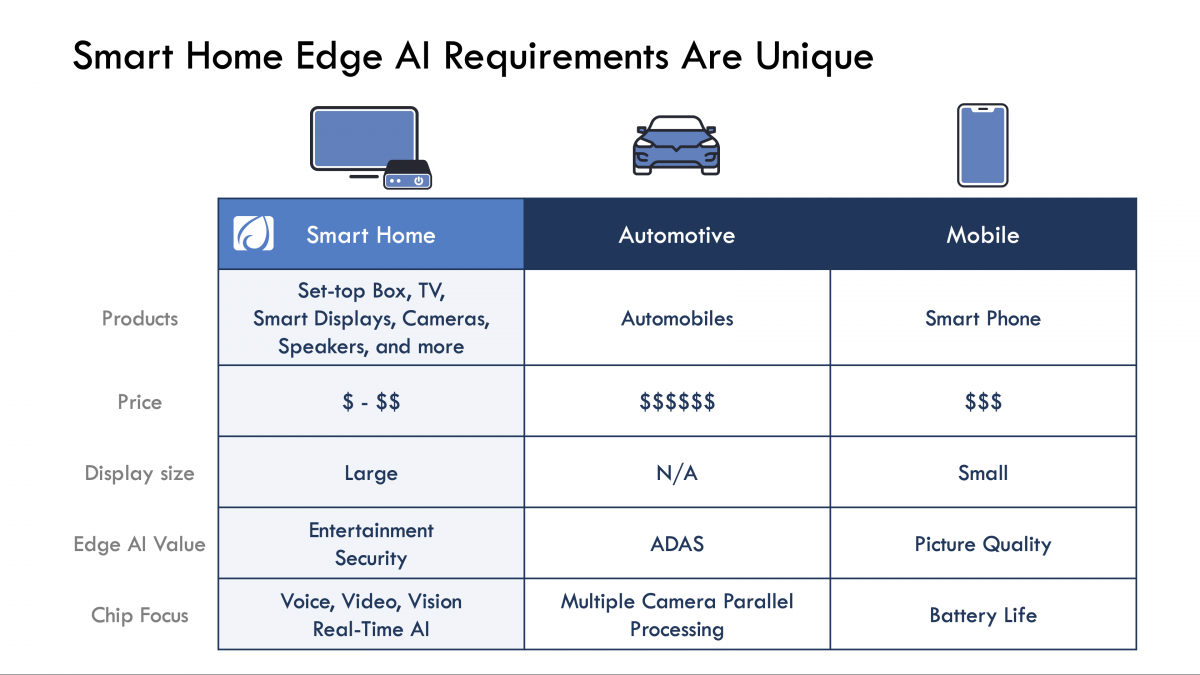

Moving smarter processing into edge devices also introduces new challenges and requires highly advanced chip and software solutions. While this type of Edge AI has seen adoption in a variety of initial uses, it has largely been limited to expensive products such as smartphones and cars. Synaptics is working toward enabling greater proliferation of edge-based AI devices, with the security, performance and cost required for broader consumer applications.

We offer a series of SoCs (both audio- and video-centric) that support Edge AI applications and are targeted at a range of consumer devices. Each SoC in the family combines the required processing cores with the level of AI performance appropriate for its application. Our SoC platform integrates multiple types of processing engines (CPU, NPU, GPU and ISP) along with interfaces to high-performance cameras and displays. This architecture delivers the desired combination of highly secure, low-cost inferencing and real-time, multi-modal performance.

In the competitive consumer electronics sector, time to market and differentiation are also essential. To address the challenges of broader Edge AI proliferation, a full-stack approach is required, one that includes the development tools needed to bring AI innovations to an Edge AI SoC. Most importantly, the toolset should be compatible with the tools already used by the large and growing community of AI developers. For example, such a toolkit should let developers import models created with industry-standard frameworks such as TensorFlow, TensorFlow Lite, Caffe and ONNX. This lets developers leverage existing AI innovations and get them running on the target SoC quickly and painlessly.

In a recent contribution to EE Times, we take a closer look at how machines can use voice, video and visual data to understand, and predictively respond to, what we do, say or touch. Such technology is revolutionizing how IoT can deliver unprecedented levels of security, convenience and productivity in our lives.

Read more in EE Times.

Vineet Ganju

VP of Marketing, Smart Edge AI, Synaptics