This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

Qualcomm AI Research’s latest research and techniques for power and compute efficient AI, including Neural Architecture Search (NAS)

AI, specifically deep learning, is revolutionizing industries, products, and core capabilities by delivering dramatically enhanced experiences. However, the deep neural networks of today use too much memory, compute, and energy. To make AI truly ubiquitous, it needs to run on end devices within tight power and thermal budgets.

In this blog post, we will focus on Qualcomm AI Research’s latest model efficiency research, particularly neural architecture search (NAS). In addition, we’ll highlight how the AI community can take advantage of our open-source model efficiency projects, which provide state-of-the-art quantization and compression techniques.

A holistic approach to AI model efficiency

At Qualcomm AI Research, we put a lot of effort into AI model efficiency research for improved power efficiency and performance. We try to squeeze every bit of efficiency out of AI models, even those that have already been optimized for mobile devices by the industry. Qualcomm AI Research is taking a holistic approach to model efficiency research since there are multiple axes to shrink AI models and efficiently run them on hardware. We have research efforts in quantization, compression, NAS, and compilation. These techniques can be complementary, which is why it is important to attack the model efficiency challenge from multiple angles.

Qualcomm AI Research takes a holistic approach to AI model efficiency research.

Over the past few years, we’ve shared our leading AI research on quantization, including post-training techniques like Data Free Quantization and AdaRound, and joint quantization and pruning techniques, like Bayesian Bits, through blog posts and webinars. We would now like to introduce our NAS research, which helps find optimal neural networks for real-life deployments.

NAS to automate the design of efficient neural networks

Optimizing and deploying state-of-the-art AI models for diverse scenarios at scale is challenging. State-of-the-art neural networks are generally too complex to run efficiently on target hardware, and hand designing networks is not scalable due to neural network diversity, device diversity, and cost — both compute and engineering resources.

NAS research was initiated to help address these challenges by creating an automated way to learn a network topology that can achieve the best performance on a certain task. NAS methods generally consist of four components. A search space, which defines what types of networks and components can be searched over. An accuracy predictor, which tells how accurate a given network is expected to be. A latency predictor, which predicts how fast the network is going to run. And a search algorithm, which brings all three together to find the best architecture for a specific use-case.

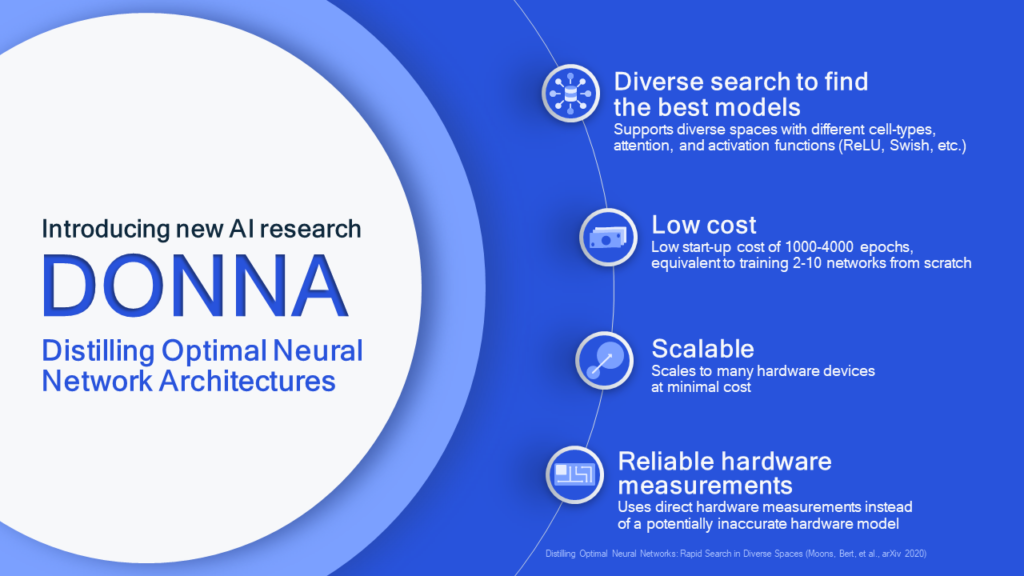

While NAS research has made good progress, existing solutions still fail to address all challenges, notably lacking diverse search spaces, requiring high compute cost, not scaling efficiently, or not providing reliable hardware performance estimates. Our latest NAS research addresses these challenges. We call it DONNA, Distilling Optimal Neural Network Architectures. DONNA is an efficient NAS with hardware-in-the-loop optimization. It is a scalable method that finds optimal network architectures in terms of accuracy and latency for any hardware platform at low cost. Most importantly, it addresses the challenges of deploying models in real scenarios since it includes diverse search, has low compute cost, is scalable, and uses direct hardware measurements that are more reliable than potentially inaccurate hardware models.

DONNA is an efficient NAS method that addresses the challenges for AI deployment at scale.

At a high level, a user would start with an oversized pretrained reference architecture, feed it through the DONNA steps, and receive a set of optimal network architectures for the scenarios they care about. Here’s a few noteworthy aspects about DONNA to highlight:

- Donna’s diverse search space includes the usual variable kernel-size, expansion-rate, depth, number of channels, and cell-type, but can also search over activations and attention, which is key to finding optimal architectures.

- The accuracy predictor is built only once via blockwise knowledge distillation and is hardware agnostic. Its low startup-cost of 1000 to 4000 epochs of training (equivalent to training 2 to 10 networks from scratch) enables NAS to then scale to many hardware devices and various scenarios at minimal additional cost.

- The latency of each model is calculated by running on the actual target device. By running the evolutionary search on the real hardware, DONNA captures all intricacies of the hardware platform and software, such as the run-time version and hardware architecture. For example, if you care most about latency, you will be able to find the real latency rather than a simulated latency that may be inaccurate.

- The search method finds a set of optimal models, so you can pick models at any accuracy or latency you like.

- DONNA pre-trained blocks allow for quick finetuning of the neural network to reach full accuracy, taking 15-50 epochs of training.

- DONNA is a single scalable solution. For example, DONNA applies directly to downstream tasks and non-CNN neural architectures without conceptual code changes.

High-level user perspective of DONNA’s 4-step process to find optimal network architectures for diverse scenarios.

Below are graphs that show that DONNA finds state-of-the-art networks for on-device scenarios. The y-axis is accuracy for all four graphs, while x-axis varies for each graph. The fourth graph shows DONNA results on a mobile SOC, in this case running on the Qualcomm Hexagon 780 Processor in the Qualcomm Snapdragon 888 powering the Samsung Galaxy S21. These are real hardware results rather than simulated, providing accurate measurements of inferences per second. The DONNA results are leading over existing state-of-the-art networks — for example, the architectures found are 20% faster at similar accuracy than MobileNetV2-1.4x on a S21 smartphone. It is also worth noting the performance benefits of running 8-bit inference on hardware with dedicated 8-bit AI acceleration.

DONNA provides state-of-the-art results in terms of accuracy for different scenarios on both simulated and real hardware.

For a much deep dive on DONNA, please tune into our webinar or read the paper.

Open-source projects to scale model-efficient AI to the masses

A key way to share knowledge and scale model-efficient AI to the masses is through open-source projects. Two open-source GitHub projects that leverage the state-of-the-art research from Qualcomm AI Research are the AI Model Efficiency Toolkit (AIMET) and AIMET Model Zoo.

AIMET provides state-of-the-art quantization and compression techniques. AIMET Model Zoo provides accurate pre-trained 8-bit quantized models. By creating these GitHub projects and making it easy for developers to use them, we hope to accelerate the movement to fixed-point quantized and compressed models. This should dramatically improve applications, especially those being run on hardware with 8-bit AI acceleration, for improved performance, lower latency, and reduced power consumption.

Our GitHub open source projects for AI model efficiency.

What’s next for model efficiency

Looking forward, our future research in AI model efficiency will continue to advance our existing techniques in quantization, compression, compilation, and NAS — and explore new areas. We are always focused on shrinking models while maintaining or even improving model accuracy. In addition, we strive to develop tools and techniques that make it easier for researchers and developers to deploy AI and solve real-world problems. Please do check out our research papers and join our open-source projects – we’re excited to see model-efficient AI proliferate and ultimately enhance our everyday lives!

Chirag Patel

Engineer, Principal/Manager, Qualcomm Technologies

Tijmen Blankevoort

Engineer, Senior Staff Manager, Qualcomm Technologies