This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

AI-based compression has compelling benefits for both video and speech.

The world is going digital. With increased demand for multimedia along with the rising trends of AI, IOT, and 5G, a tremendous amount of data is being produced that needs to be compressed for efficient communications. For instance, the scale of video and speech data being created and consumed is massive. A total of 15 billion minutes of talking per day are spent on WhatsApp calls, and it is predicted that 82% of all consumer internet traffic will be online video by 20221. To make this possible, data compression techniques have increased by leaps and bounds over the years due to technical innovation, such as the approximate 1000x reduction in video file size with VVC compression versus a raw file. However, the demand for more data is not stopping any time soon, so the need to advance compression technology is as important today as it has ever been. This blog post explores some of our latest AI-based compression research for video and speech.

Why AI for compression

You may be asking yourself how AI fits into compression. We’ve been doing deep generative model research for unsupervised learning, which is a powerful AI technique that takes unlabeled training data and generates new samples from the same distribution. This technique is broadly applicable to many use cases but can be used for compression and decompression applications since the model itself extracts and learns a low-dimension feature representation of the input data. We’ve found that AI-based compression has many compelling benefits over conventional codecs.

AI-based compression has many compelling benefits over traditional techniques.

For example, AI-based compression can offer a better rate-distortion tradeoff, meaning that for video it can provide the same level of visual quality with fewer bits. This is a key metric that codecs are evaluated on since the ultimate goal is to shrink the data down as much as possible while being able to decode it back to its original state. Another benefit is that it is easier to upgrade, standardize, and deploy new AI codecs since the latest and greatest learned model is trained in a relatively short amount of time and does not require special-purpose hardware other than AI acceleration for deployment. Plus, for new modalities like point clouds, omnidirectional video, and multi-camera setups, neural codecs are easier to develop.

Our latest AI compression research for speech

We’ve applied deep generative models to achieve state-of-the-art speech compression. In our research, we’ve used a feedback recurrent variational auto encoder for end-to-end speech compression to achieve a lower bit rate than conventional codecs. A result that we are really proud of is that using our AI solution we achieve 2.6x improvement in bit-rate relative to the EVS speech codec, which already compresses speech significantly.

We achieve 2.6x the bit-rate compression at the same speech quality with AI versus EVS speech compression.

Our latest AI compression research for video

We’ve applied deep generative models to achieve state-of-the-art video compression as well. Rather than using human-designed algorithms that attempt to compress the significant amounts of spatial and temporal redundancy found in nearby still image frames of a video, we use end-to-end deep learning. We’ve made several advancements in AI compression for both images and video, such as:

- Neural B-frame coding: A B-frame, or bi-directional frame, codes changes in the video based on previous and next frames. This requires more complex computation and coordination but improves the compression rate. Existing AI research methods have flaws when trying to implement a B-frame codec, but our novel solution allows the codec to share weights and be more efficient while providing state-of-the-art rate-distortion results.

- Overfitting through instance-adaptive video compression: There are scenarios where the type of images in a video that are expected to be seen are quite narrow, so it is possible to overfit the AI codec and provide an even more compressed encoded bitstream. Imagine the benefit of having a neural codec for a popular Netflix series that can be streamed at significantly lower bitrate. Our research achieved state-of-the art results, including a 24% BD-rate savings over the leading neural codec by Google. Importantly, our solution is mobile friendly since decoding complexity can be reduced by 72% while still maintaining SOTA results.

- Variable bitrate image compression: Due to limitations in hardware or network conditions, videos and images are often encoded at a variety of bitrates. Variable bitrate image compression offers simpler deployment. There are a variety of solutions to achieve variable bitrates, but ultimately, we’d like a single model to produce a single bitstream that embeds all bitrates. Our variable-bitrate progressive neural image compression solution achieves comparable performance to HEVC Intra but uses only a single model and a single bitstream.

- Semantic-aware image compression: For regions of interest in an image, we would like to allocate more bits to increase the visual quality. Semantic-aware image compression improves the image quality by doing exactly that. Our solution provides state-of-the-art results for rate-distortion tradeoff for images, and our next step is to extend this technique to video.

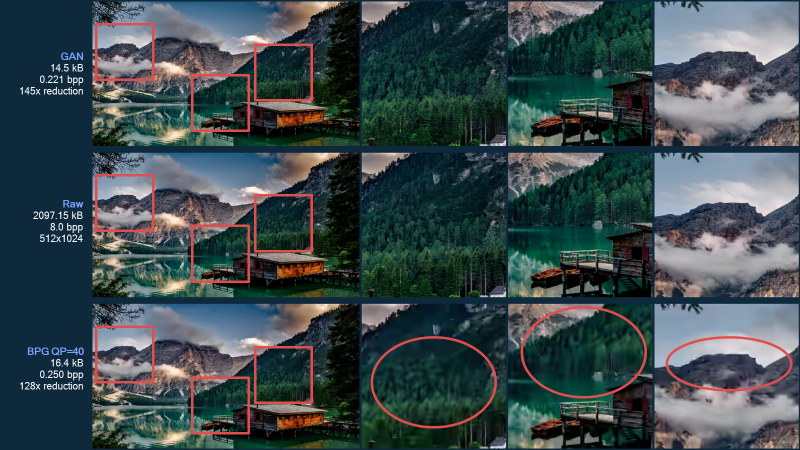

- GAN-based codecs: To create a good image codec, we optimize for the lowest bit rate while reducing image distortion and increasing perceptual quality. Although this often leads to many tradeoffs, generative adversarial networks (GANs) can produce better images compared to traditional codecs since the GAN will create something visually appealing even when there is very little information. Our results are quite compelling, as can be seen in the image below.

GAN-based codec (top row) provides higher compression with less distortion that is much more visually pleasing than the BPG codec (bottom row).

Please watch my webinar where I go into much more detail on our research for these AI-based image and video compression techniques.

Real-time on-device neural decoder demo

Taking AI research from the lab to real-life scenarios is often not easy, and in this case practical deployment of neural video codecs is challenging. At CVPR 2021, Qualcomm AI Research demonstrated the world’s first HD neural video decoder running real-time on a commercial smartphone, leveraging also other key innovations such as the AI Model Efficiency Toolkit (AIMET) to quantize models for low latency and high power-efficiency. Although there is still more research ahead, the fact that we can achieve this feat gives us great confidence in the future of neural codecs and our ability to overcome challenges for mass deployment. And as we continue to blaze a trail in this space, we look to contribute to the leadership that Qualcomm Technologies has long established in both video and speech compression, including significant IP and technology contributions across several generations. We’re very excited about the prospects of AI-based codecs and how they will address the growing demand for increased data compression.

1: Cisco Annual Internet Report, 2018–2023, and WhatsApp blog 4/28/20

Taco Cohen

Staff Engineer, Qualcomm Technologies