This blog post was originally published at Edge Impulse’s website. It is reprinted here with the permission of Edge Impulse.

All great events include exciting announcements, and through a full first day of incredible speaker presentations and demos, followed by two days of virtual workshops and tech talks, this year’s Imagine gathering brought bountiful news to our in-person and online attendees. We’re grateful that numerous partners chose our event to share updates on their new features, releases, developments, partnerships, and more.

Here is everything that kicked off at Imagine, starting with our own extensive list of freshly launched Edge Impulse Studio features. We’ve hyperlinked to time-stamped locations in the video so you can listen along as the presenters explain what’s what.

Edge Impulse

Edge Impulse co-founders Zach Shelby and Jan Jongboom mentioned many new releases and tech updates in their presentations.

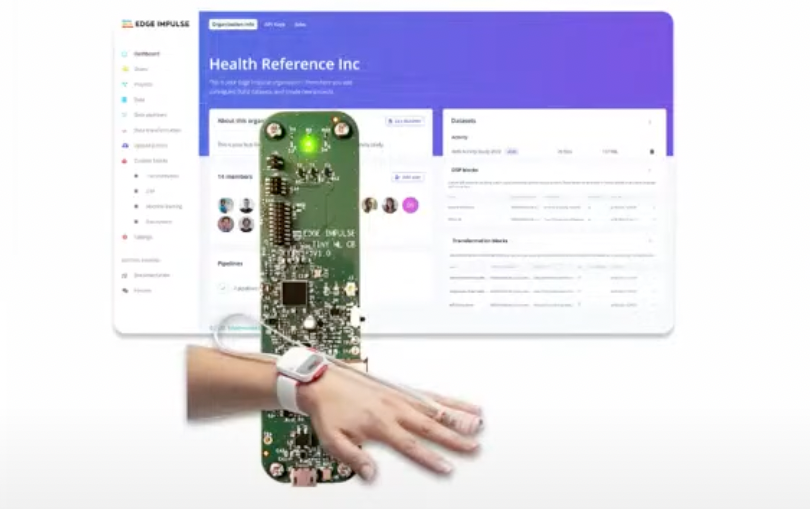

Health Reference Design (First, Zach’s overview, then more tech specifics from Jan.)

This is an end-to-end health solution design, spanning from clinical data collection to on-device ML.

Edge Impulse health reference design, using Nordic, Syntiant, and Tessolve tech

Drawing on our experience, Edge Impulse is providing a comprehensive Health Reference Design that lets users take advantage of the full infrastructure already available: scaling clinical studies to thousands of subjects and using existing reference data, a validation pipeline, and pre-built algorithms for several health- and wellness-related use cases. This reference solution can be seamlessly deployed onto any health monitoring device.

Machine Reference Design

Edge Impulse is providing an all-encompassing Machine Reference Design that offers end-to-end edge ML development functionality, such as an active learning pipeline, on-device tuning, and pre-built algorithms, along with practical Industry 4.0 use cases in condition monitoring and predictive maintenance.

New reLoc- and Renesas-powered machine reference design from Edge Impulse

Assisted Labeling

Now in Studio, users are able to label new data — “the interesting samples that come in, stuff that falls outside of a cluster or falls in the middle,” Jan says. “And that reduces labeling time by about 90% for projects that we’ve seen.”

Anomaly Detection 2.0: kNN + GMM and FOMO AD

Jan explains: “Visual anomaly detection. The user gives examples of normal data only and then we tell you when we see something that’s not like the normal data.”

This additional clip provides even more details. (“Here normal data is in dark (background+Jenga blocks), then anomalies are shown lighter” — Jan.)
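To make the "normal data only" idea concrete, here is a minimal sketch of a kNN-style anomaly score in plain Python. It is not Edge Impulse's implementation, and it does not cover the GMM or FOMO AD parts. The feature vectors, the function names, and the `margin` heuristic for picking a threshold are all assumptions made for illustration.

```python
import math

def knn_anomaly_score(sample, normal_data, k=3):
    """Mean Euclidean distance from `sample` to its k nearest normal points."""
    distances = sorted(math.dist(sample, point) for point in normal_data)
    nearest = distances[:k]
    return sum(nearest) / len(nearest)

def fit_threshold(normal_data, k=3, margin=1.5):
    """Pick a threshold from normal data alone (assumed heuristic).

    Each normal point is scored against the remaining points (leave-one-out),
    and the largest score is scaled by `margin` for headroom.
    """
    scores = []
    for i, point in enumerate(normal_data):
        others = normal_data[:i] + normal_data[i + 1:]
        scores.append(knn_anomaly_score(point, others, k))
    return margin * max(scores)

# Hypothetical 2-D feature vectors extracted from normal samples only.
normal_data = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (0.05, 0.05)]
threshold = fit_threshold(normal_data)

def is_anomaly(sample):
    """Flag a sample whose kNN score exceeds the fitted threshold."""
    return knn_anomaly_score(sample, normal_data) > threshold
```

A point close to the normal cluster, such as `(0.05, 0.02)`, scores below the threshold, while a distant point such as `(2.0, 2.0)` is flagged. No anomalous examples are needed at training time.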

Bring Your Own Model Python SDK for TF and PyTorch

Jan: “End-to-end tooling to train your own models locally, or use state-of-the-art ML models (like YOLOv5 or EfficientNet) and use them in Edge Impulse. We then host the training pipeline etc. like any built-in model.”

More details here.

On-Device Calibration

Jan: “Add-on to anomaly detection. Refine the anomaly detection model while running on-device, so you learn what is normal for this device. For example: Bridge vibration monitoring, can calibrate to that specific bridge (e.g. filter out ‘train running over bridge’ events if there’s no trains on this bridge). Always requires a normal anomaly detection model.”
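One simplified way to picture on-device calibration is as a threshold that adapts to the scores a particular device sees during a known-normal period. The sketch below is an assumption for illustration, not how Edge Impulse refines the model: it only tracks a running mean and variance of anomaly scores (Welford's algorithm) and derives a per-device threshold. The class name and the `n_sigma` parameter are hypothetical.

```python
import math

class ThresholdCalibrator:
    """Track running statistics of anomaly scores seen on one device."""

    def __init__(self, n_sigma=3.0):
        self.n_sigma = n_sigma  # how many standard deviations count as abnormal
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0           # running sum of squared deviations from the mean

    def update(self, score):
        """Fold one score from the calibration period into the statistics."""
        self.count += 1
        delta = score - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (score - self.mean)

    @property
    def threshold(self):
        """Device-specific threshold: mean + n_sigma * sample std deviation."""
        if self.count < 2:
            raise ValueError("need at least two calibration scores")
        std = math.sqrt(self.m2 / (self.count - 1))
        return self.mean + self.n_sigma * std

# Hypothetical anomaly scores observed while this device runs normally.
calibrator = ThresholdCalibrator()
for score in [0.9, 1.1, 1.0, 1.2, 0.8]:
    calibrator.update(score)
```

Because the statistics are updated one score at a time with constant memory, this style of calibration fits a microcontroller budget. It still depends on a base anomaly detection model to produce the scores.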

New EON Tuner Release

Two notes from Jan: “1. Custom search space (freedom to decide which params to check etc.) 2. Support for custom DSP and ML blocks (very useful for eng. teams that have their own code). Limited availability for free users.”
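As a rough picture of what a custom search space means, the sketch below enumerates every combination of a few candidate parameters. It is a generic illustration, not the EON Tuner's configuration format or API. The parameter names and values are hypothetical.

```python
import itertools

# Hypothetical search space: each key lists the values we want checked.
search_space = {
    "window_size_ms": [500, 1000],
    "dsp_block": ["mfcc", "spectrogram"],
    "learning_rate": [0.001, 0.0005],
}

def enumerate_candidates(space):
    """Yield one configuration dict per combination of parameter values."""
    keys = list(space)
    for values in itertools.product(*(space[key] for key in keys)):
        yield dict(zip(keys, values))

candidates = list(enumerate_candidates(search_space))
```

Here three parameters with two options each give eight candidate configurations. Restricting the lists is what keeps a search tractable when each candidate means a full training run.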

New EON Compiler JAX to Hardware

Jan: “EON Compiler models now use 5K–8K less flash (so saves money). EON Compiler now supports classical ML — you write your inference method in Python, compile to JAX then compile to C++ using EON. This lets you use scikit-learn models on device easily.”

Performance Calibration Release

Is 95% accuracy on your test set enough to deploy in a performance-critical situation? Performance calibration gives you honest insight into how your model actually performs by letting you upload a long, annotated sample. Processing parameters are then run against that sample to show what their real impact will be. Available now for audio projects for all users.
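The idea can be sketched in a few lines: take per-window model scores from a long recording, compare them with the annotations, and count errors under different processing parameters. This is an illustrative simplification, not Edge Impulse's algorithm. The function name, the `min_consecutive` parameter, and the sample data are assumptions.

```python
def evaluate(scores, truth, threshold, min_consecutive):
    """Count per-window false acceptances and false rejections.

    A window counts as a detection only when it and the preceding windows
    form a run of at least `min_consecutive` scores at or above `threshold`.
    """
    run = 0
    false_accepts = 0
    false_rejects = 0
    for score, is_event in zip(scores, truth):
        run = run + 1 if score >= threshold else 0
        detected = run >= min_consecutive
        if detected and not is_event:
            false_accepts += 1
        elif is_event and not detected:
            false_rejects += 1
    return false_accepts, false_rejects

# Hypothetical per-window scores and annotations from one long recording.
scores = [0.1, 0.7, 0.2, 0.8, 0.9, 0.9, 0.3, 0.6, 0.1, 0.2]
truth = [False, False, False, True, True, True, False, False, False, False]

loose = evaluate(scores, truth, threshold=0.5, min_consecutive=1)
strict = evaluate(scores, truth, threshold=0.5, min_consecutive=2)
```

With this data, the loose setting produces two false acceptances and no false rejections, while the strict setting removes both false acceptances at the cost of one missed window. A single accuracy number on a test set hides exactly this kind of trade-off.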

More details available here.

Robotics case: detecting anomalies from only 100 images

Partners

Arduino White Label

Zach and Arduino co-founder Massimo Banzi conversed on stage to announce Arduino as the inaugural Edge Impulse “White Label” partner.

The White Label program offers Edge Impulse Studio to partners as a fully customizable experience. With Arduino, it is integrated directly into Arduino Cloud and designed to help scale industrial ML development using Arduino Pro solutions. Experience it at mltools.arduino.cc.

Conservation X Nature Camera

With our commitment to tech for good, we’re helping bring a new product to market alongside Conservation X: an AI-powered camera for field research that uses the latest silicon and FOMO technology so it can stay in the field for 10 years between battery replacements, with machine learning available to all of its sensors.

CXL Nature Camera

Nowatch

Nowatch is a new Dutch wearables startup working with Edge Impulse to use AI to monitor mental well-being. Their device uses a novel combination of sensors to measure cortisol, which can then be used to gauge stress levels.

Nowatch internals

Know Labs

Know Labs is another startup working with Edge Impulse, focused on non-invasive blood glucose monitoring using RF emitters and receivers to detect specific molecules. A game changer.

Know Labs’ wristpad blood-glucose monitor

Polyn.AI

A new silicon partner of Edge Impulse, doing configurable, programmable AI analog tooling — designing on-demand analog AI chips.

Weights & Biases

Seann Gardiner and Karan Nisar joined us on stage to share W&B and Edge Impulse’s new integration, and show how our community can use it to streamline ML development.

Jan explains: “Build your ML models locally using your favorite tooling e.g. W&B. Edge Impulse handles data for you (CLI tool to get new data, DSP blocks), and on-device deployment (EON Compiler etc.). Use W&B Sweeps to do hyperparam optimization, or let your ML engineers track progress like they always do. When ready: push to EI (see Bring Your Own Model) and can then retrain and deploy from there.”

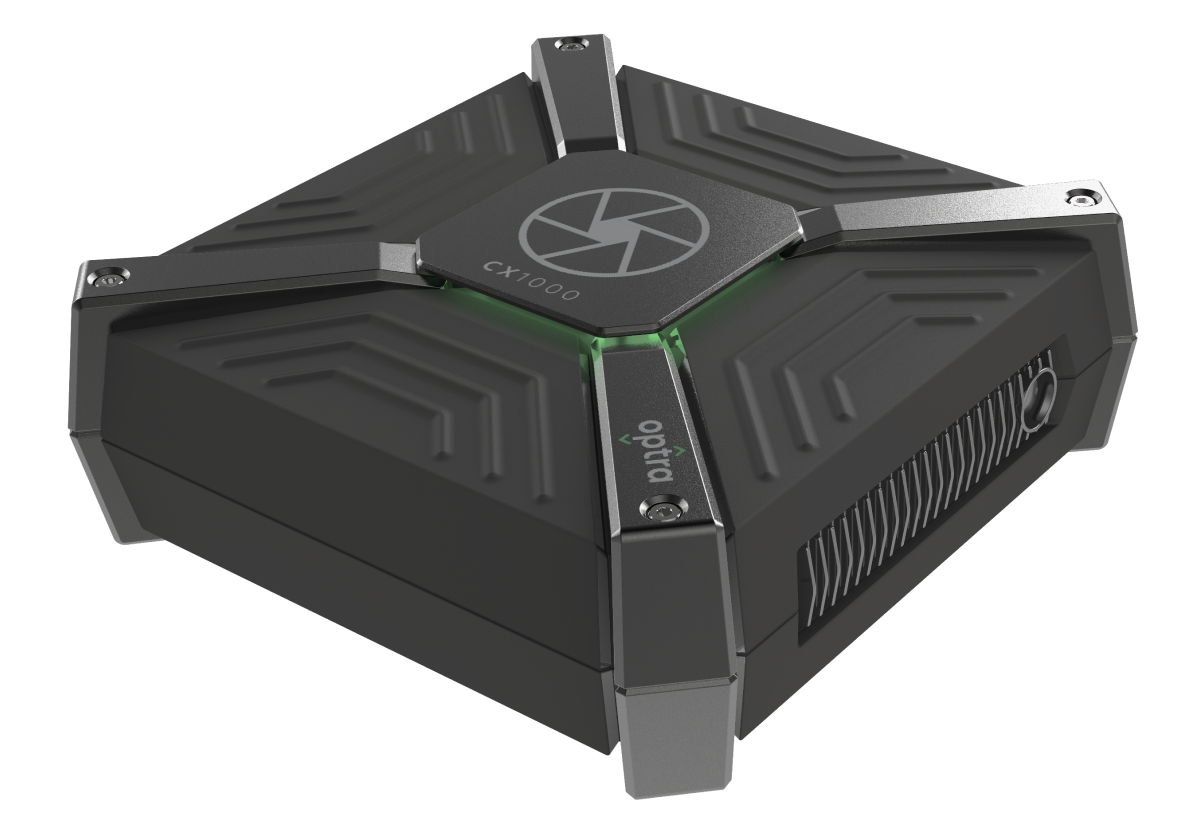

Lexmark

Lexmark took the stage to discuss their new Optra platform, with Edge Impulse integration.

Lexmark Optra Edge

This global provider of printer and imaging solutions has 6.8 million active machines and 1.2 million under managed services contracts. Because printers are complex electromechanical systems laden with sensors to control mechanisms, Lexmark wants to find their failures before the customer notices, using predictive maintenance.

In one example, Lexmark has trained models to look at motor torques and internal temperatures to anticipate the life of the printer fuser and proactively dispatch replacement.

In another, Lexmark monitors the timing from paper picking to staging to proactively call for service. These, plus others, have resulted in a 10% reduction in service costs and a 30% reduction in help desk calls.

Lexmark is extending these experiences to a vertically integrated Edge platform called Optra.

Lexmark has partnered with Edge Impulse to deliver a unique pipeline to ingest, train, build, and then deploy and manage a single device or thousands. The pipeline draws on Lexmark’s proven experience with millions of print assets to enable cost-effective, scalable business outcomes.

More from the lightning talks

Mid-day at Imagine, we took an extended lunch break and let various partners present their latest products and features. Synaptics’ Omar Oreifej provided a quick glimpse into the Katana SoC, now fully supported by Edge Impulse (more details here), while BrainChip’s Rob Telson demonstrated how to leverage the company’s Akida neuromorphic AI processor with Edge Impulse (details here). Other lightning talk presenters included Syntiant, Renesas, Alif, Sony, and Silicon Labs; watch them all here.

Here is the full agenda with links to each talk.

CEO Keynote — Zach Shelby, Edge Impulse

Industry Welcome — Simon Segars

Application of Edge ML in the Supply Chain Logistics Industry — Sandeep Bandil, Brambles

Machine Learning on the Edge: An Industrial Perspective — Hubertus Breier, Balluff

Empathy at the Edge: Preparing for the Next Generation of Smart Home Living — Mark Benson, Samsung SmartThings

Conservation/AI Ethics Panel — Stephanie O’Donnell, Tim van Dam, Sam Kelly, Kate Kallot, Fran Baker, Tom Quigley

Digital Health and Edge ML — Katelijn Vleugels, HealthTech Entrepreneur, Klue, Medtronic

Industrial Automation: The Rise of ML in Manufacturing — Ben Gibbs, Ready Robotics

Enabling Deep Learning on the Edge with Neural Native Processors — Mallik P. Moturi, Syntiant

Now Ready for AI Solutions That Enable Social Implementation, Renesas RZ Family — Mitsuo Baba, Renesas

Computer Vision Techniques for Reducing the Data Collection Pain — Omar Oreifej, Synaptics

Hardware Accelerated ML on Alif Semiconductor’s Ensemble Devices Takes Edge Processing From Watts to Milliwatts — Henrik Flodell, Alif

Sony’s Spresense Edge Computing with Low-Power Consumption — Armaghan Ebrahimi, Sony

Silicon Labs Introduces Its MG24 with an Integrated ML Accelerator — Isaac Sanchez, Silicon Labs

Essential AI — Rob Telson, BrainChip

Optra Edge: Build, Deploy and Scale AI at the Edge — Scott Castle, Lexmark

Edge Impulse Innovation, Demos, and Features — Jan Jongboom, Edge Impulse

Building a System of Record for Edge ML — Seann Gardiner and Karan Nisar, Weights & Biases

Modern Aviation — Chris Anderson, Kittyhawk

We could not be happier with the show, with the amazing presentations, and with how fun and inspiring it was to be around so many impressive partners and audience members for the day.

There were also two great days of online workshops; watch the community discussions and project deep dives here. We’ll be posting more shortly.

And lastly, be sure to save your spot for Imagine 2023. We hope to see you there!