This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems.

Time-of-flight cameras have revolutionized depth mapping and enabled autonomous navigation in agricultural vehicles, autonomous mobile robots (AMRs), automated guided vehicles (AGVs), and similar systems. Learn what a time-of-flight sensor is and what the different components of a time-of-flight camera are.

The term Time-of-Flight refers to the time an object, particle, or wave takes to travel a particular distance through a medium. In projectile motion, it is the total time from when the object is launched to when it lands on the ground or touches a surface. In imaging technology, the Time-of-Flight technique is used to calculate the depth of a target object or the distance between two objects.

In this article, we will learn how Time-of-Flight (ToF) is used in cameras for depth mapping in applications such as autonomous mobile robots, agricultural vehicles, and automated dimensioning.

What is a ToF sensor, and how does it work?

A ToF sensor is a sensor that uses Time-of-Flight to measure depth and distance. A camera equipped with a ToF sensor measures distance by actively illuminating an object with a modulated light source (such as a laser or an LED). It uses a sensor that is sensitive to the wavelength of the light source (typically 850nm or 940nm) to capture the reflected light. The sensor measures the time delay ΔT between the moment the light is emitted and the moment the reflected light is received by the camera. Because the light travels to the object and back, this time delay is proportional to twice the distance between the camera and the object. Therefore, depth can be estimated as follows:

d = cΔT/2, where d is the depth, c is the speed of light, and ΔT is the measured round-trip time delay.

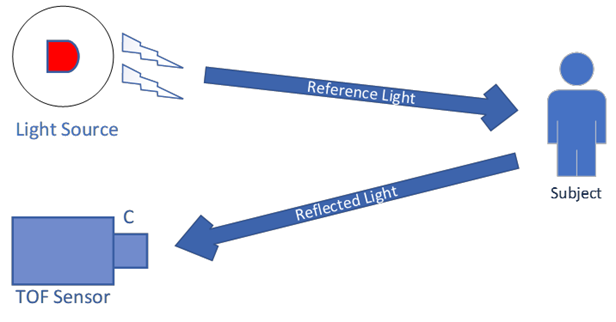

The below image illustrates the working of a ToF camera to measure the distance to a target object.

Figure 1: Illustration of a ToF camera measuring depth
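To make the relation d = cΔT/2 concrete, here is a minimal Python sketch that converts a round-trip time delay into a depth value. The function name `depth_from_time_delay` and the sample delays are illustrative assumptions, not part of any e-con Systems API.

```python
# Speed of light in vacuum, in metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def depth_from_time_delay(delta_t_seconds: float) -> float:
    """Return the depth d = c * delta_t / 2 for a round-trip time delay.

    The division by 2 accounts for the light travelling to the object
    and back. The function name is hypothetical, chosen for illustration.
    """
    if delta_t_seconds < 0:
        raise ValueError("time delay must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * delta_t_seconds / 2.0


if __name__ == "__main__":
    # Illustrative round-trip delays, in nanoseconds (assumed values).
    for delay_ns in (1.0, 10.0, 20.0):
        depth_m = depth_from_time_delay(delay_ns * 1e-9)
        print(f"{delay_ns:5.1f} ns round trip -> {depth_m:.3f} m")
```

A round-trip delay of 10 ns corresponds to roughly 1.5 m, and every nanosecond of timing error shifts the estimate by about 15 cm, which gives a sense of the timing precision such a sensor needs.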

Key components of a Time-of-Flight camera system

A Time-of-Flight camera system comprises three main parts:

- ToF sensor and sensor module

- Light source

- Depth processor

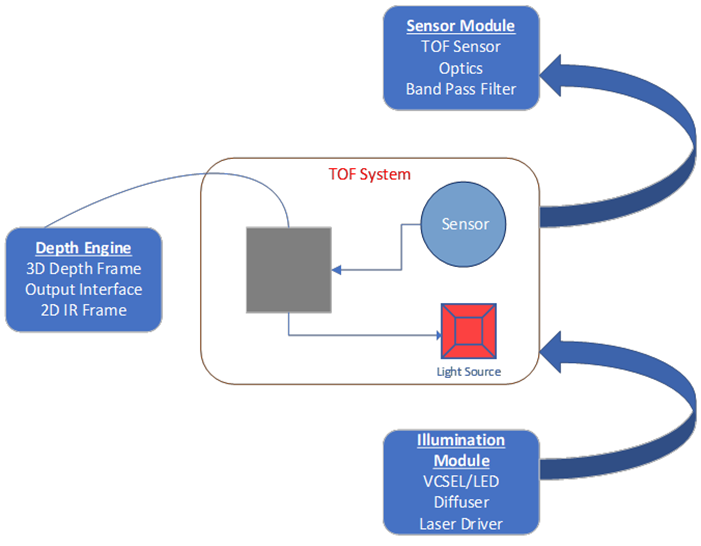

The below image is a good representation of these components in a Time-of-Flight camera system:

Figure 2: Components of a Time-of-Flight camera system

Let us now look at each of them in detail.

ToF sensor and sensor module

The sensor is the key component of a ToF camera system. It collects the reflected light from the scene and converts it into depth data for each pixel of the array. The higher the resolution of the sensor, the better the quality of the depth map it generates. The sensor module in a ToF camera also carries the optics, which typically have a large aperture for better light collection efficiency. In addition, a band-pass filter (designed to pass light at the 850nm or 940nm illumination wavelength) helps to maximize the VCSEL throughput efficiency.

Light source

In a ToF camera, light is generated by either a VCSEL (vertical-cavity surface-emitting laser) or an LED. Typically, NIR (Near Infra-Red) light with a wavelength of 850nm or 940nm is used. The VCSEL comes with a diffuser that spreads the illumination across the scene in front of the image sensor to match the Field of View (FOV) of the optics. This module also contains a laser driver that controls the rise and fall times of the optical waveform so that it has clean edges.

Depth processor

A depth processor converts the raw pixel data from the image sensor, which carries the phase information, into depth information. It also performs noise filtering and provides passive 2D IR (Infra-Red) images that can be used in other end applications.
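As a rough illustration of the phase-to-depth step, the sketch below assumes a continuous-wave ToF scheme in which each pixel delivers four correlation samples taken at 0°, 90°, 180°, and 270° offsets. The sample model, the function names, and the 100 MHz modulation frequency are all assumptions made for this example; actual sensors differ in sign conventions, calibration, and filtering, and the article does not specify the method used in any particular camera.

```python
import math

# Speed of light in vacuum, in metres per second.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def phase_from_samples(a0: float, a90: float, a180: float, a270: float) -> float:
    """Recover the phase shift (radians, in [0, 2*pi)) from four samples.

    Assumed model: a_k = offset + amplitude * cos(phase + k), where k is
    the sampling offset. Other sensors may use a different convention.
    """
    return math.atan2(a270 - a90, a0 - a180) % (2.0 * math.pi)


def depth_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Convert a phase shift to depth: d = c * phase / (4 * pi * f)."""
    return SPEED_OF_LIGHT_M_PER_S * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range_m(mod_freq_hz: float) -> float:
    """Largest depth measurable before the phase wraps: c / (2 * f)."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * mod_freq_hz)


if __name__ == "__main__":
    mod_freq_hz = 100e6   # hypothetical modulation frequency
    true_phase = 1.0      # radians, used only to synthesize test samples
    amplitude, offset = 0.5, 1.0

    # Synthesize the four samples from the assumed model.
    samples = [
        offset + amplitude * math.cos(true_phase + k)
        for k in (0.0, math.pi / 2, math.pi, 3 * math.pi / 2)
    ]
    phase = phase_from_samples(*samples)
    print(f"recovered phase: {phase:.6f} rad")
    print(f"depth: {depth_from_phase(phase, mod_freq_hz):.4f} m")
    print(f"unambiguous range: {unambiguous_range_m(mod_freq_hz):.4f} m")
```

Taking differences of opposite samples cancels the constant offset (for example, ambient light) before the phase is computed. The unambiguous range shows the trade-off in choosing a modulation frequency: a higher frequency gives finer depth resolution per unit of phase but wraps around at a shorter distance.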

How e-con Systems is leveraging the Time-of-Flight technology

Designing a ToF-based depth sensing camera is often complex owing to factors such as optical calibration, temperature drift, and VCSEL pulse timing patterns. All of these affect depth accuracy.

e-con Systems has developed a ToF camera with a key focus on accuracy and consistency of output. Building a ToF camera system to perfection would typically mean very long design cycles. However, with more than a decade of experience in stereo vision based 3D camera technologies, and having helped several customers integrate its depth cameras into their products, e-con Systems has been on a journey of accelerated product development.

Prabu Kumar

Chief Technology Officer and Head of Camera Products, e-con Systems