This blog post was originally published at Namuga Vision Connectivity’s website. It is reprinted here with the permission of Namuga Vision Connectivity.

In the rapidly evolving field of 3D imaging, three primary technologies stand out: Structured Light, Time-of-Flight (ToF) and Stereo Vision. Each offers unique advantages and is suited for specific applications. Let’s explore each technology in detail, examine its applications and see how NAMUGA integrates all three into its innovative products.

Stereo Vision

How it works

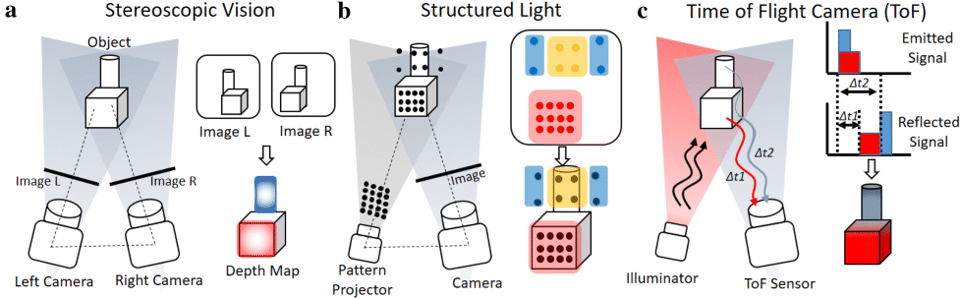

Stereo Vision works by placing two or more cameras at slightly different viewpoints to capture the same scene. The system then analyzes the disparity between the images, meaning the shift in an object's apparent position from one view to the other, to calculate depth information, mimicking the way human binocular vision works.
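To make the disparity-to-depth step concrete, here is a minimal sketch of the standard relation for a rectified stereo pair, Z = f × B / d. The function name and the rig values (700 px focal length, 6 cm baseline) are assumptions chosen for illustration, not the parameters of any specific product.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, 6 cm baseline.
near = depth_from_disparity(42.0, focal_length_px=700.0, baseline_m=0.06)
far = depth_from_disparity(21.0, focal_length_px=700.0, baseline_m=0.06)
print(f"42 px -> {near:.2f} m, 21 px -> {far:.2f} m")
```

Halving the disparity doubles the estimated depth, which is why a fixed matching error of one pixel costs more accuracy at long range than at short range.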

Strengths

Stereo Vision is known for its simple architecture and cost-efficiency. According to ScienceDirect, these systems can operate under ambient light without the need for a laser line or projection pattern, making them less susceptible to interference from sunlight.

In fact, stereo vision has even been used in space exploration, such as NASA’s Mars Pathfinder mission, to generate highly accurate 3D terrain maps.

Limitations

However, stereo vision systems may suffer in low-light conditions. Miniaturization is more difficult because depth accuracy depends on the distance (baseline) between the cameras, and complex algorithms are required to match image features precisely between views, which makes processing more computationally intensive.
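The matching cost mentioned above can be illustrated with a deliberately simple sketch: block matching along one scanline using the sum of absolute differences (SAD). This is one basic technique among many, not the method used by any particular product, and the function name, window size and sample rows are assumptions made for the example.

```python
def match_disparity(left_row, right_row, x, half_window=1, max_disparity=8):
    """Find the disparity at column x of the left scanline using SAD block matching.

    Assumes a rectified pair with the left image as reference, so a feature
    at column x in the left row appears at column x - d in the right row.
    """
    if x - half_window < 0 or x + half_window >= len(left_row):
        raise ValueError("window around x falls outside the row")
    offsets = range(-half_window, half_window + 1)
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        xr = x - d
        if xr - half_window < 0:
            break  # candidate window would leave the right image
        cost = sum(abs(left_row[x + k] - right_row[xr + k]) for k in offsets)
        if cost < best_cost:
            best_cost, best_d = cost, d
    return best_d


# Toy scanlines: the pattern 9, 5, 7 is shifted 3 pixels between views.
left_row = [0, 0, 0, 0, 0, 9, 5, 7, 0, 0]
right_row = [0, 0, 9, 5, 7, 0, 0, 0, 0, 0]
print(match_disparity(left_row, right_row, x=6))
```

Even this toy version evaluates every candidate shift for a single pixel. Repeating that for every pixel of a full-resolution image is what drives the computational cost.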

Structured Light

How it works

Structured Light systems project a known pattern (e.g., grids or dots) onto a surface. A camera captures the deformation of this pattern as it hits the object, and depth is calculated via triangulation.
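The triangulation step can be sketched with basic trigonometry. Assuming an idealized setup in which the projector and camera sit a known baseline apart, and each sees the same projected dot at a known angle measured from that baseline, the law of sines gives the dot's distance from the baseline. The function name and example values below are illustrative assumptions.

```python
import math


def triangulate_depth(baseline_m, projector_angle_rad, camera_angle_rad):
    """Perpendicular distance from the baseline to the illuminated point.

    Both angles are measured between the baseline and the ray to the point.
    Z = B * sin(a) * sin(b) / sin(a + b), from the law of sines.
    """
    total = projector_angle_rad + camera_angle_rad
    if not 0.0 < total < math.pi:
        raise ValueError("rays do not intersect in front of the baseline")
    return (baseline_m * math.sin(projector_angle_rad)
            * math.sin(camera_angle_rad) / math.sin(total))


# Hypothetical setup: 10 cm baseline, both rays at 45 degrees to it.
depth = triangulate_depth(0.10, math.radians(45), math.radians(45))
print(f"{depth:.3f} m")
```

In a real system the camera angle is recovered from where the pattern element lands on the sensor, so a deformation of the pattern translates directly into a change in depth.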

Strengths

Structured Light is highly precise and offers excellent resolution. A study published on ScienceDirect highlights its capability for “accurate, rapid measurement and active control,” making it ideal for both industrial and research settings.

It performs particularly well in controlled lighting environments and can capture fine detail even on textureless surfaces.

Limitations

Performance can degrade in bright outdoor settings due to light interference, and highly reflective or transparent surfaces can distort the projected pattern.

Time-of-Flight (ToF)

How it works

ToF cameras emit infrared (IR) light and measure the time it takes for the light to bounce back from objects. This time delay is converted into depth information to generate a 3D depth map of the scene.
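The conversion from time delay to depth is a one-line calculation: the light travels to the object and back, so distance is half the round-trip time multiplied by the speed of light. The sketch below assumes direct measurement of the round-trip time, and the function names are made up for this example.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(time_s):
    """Distance in meters to the object: d = c * t / 2."""
    if time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * time_s / 2.0


def round_trip_for_distance(distance_m):
    """Round-trip time in seconds for a given distance: t = 2 * d / c."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# A 10 ns round trip corresponds to roughly 1.5 m.
print(f"{distance_from_round_trip(10e-9):.3f} m")
# Resolving 1 cm of depth means resolving about 67 ps of delay.
print(f"{round_trip_for_distance(0.01) * 1e12:.1f} ps")
```

The second figure shows why ToF sensors need very fine timing resolution: centimeter-level depth differences correspond to delays of only tens of picoseconds.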

Strengths

ToF systems can capture high-speed 3D images in real time while simultaneously providing intensity data. This makes them ideal for robotics, industrial automation, and other applications requiring fast response times.

Recent research has shown that ToF systems can record up to 75 depth frames per second, allowing for smooth and accurate tracking of moving objects.

Limitations

Compared to structured light systems, ToF may offer lower depth resolution. Surface reflectivity and color can also affect measurement accuracy.

NAMUGA’s development milestone in 3D camera module technology

A well-known example of stereo-based 3D sensing is Intel’s RealSense camera. It captures depth by comparing two infrared images taken from slightly different viewpoints. Some models also include an IR dot projector to improve performance on low-texture surfaces, making it a hybrid approach that combines stereo vision and structured light.

Intel RealSense

At NAMUGA, we bring extensive development experience across all three 3D sensing technologies.

Our Titan series ToF camera modules are optimized for AR/VR glasses and robot vacuum cleaners, offering compact size and accurate depth perception for spatial understanding and gesture control. In particular, Titan is currently in mass production for Samsung’s robot vacuum cleaner, providing reliable and precise navigation through real-time 3D sensing. Meanwhile, our Pinocchio series is designed for homecare robots and portable projectors, enabling smart environmental awareness and automated functionality in compact consumer electronics.

Titan 100 & Titan 120 for wearable glasses, robot vacuum

Pinocchio for homecare robot and portable projector product specification

In addition to short-range ToF modules, NAMUGA also develops LiDAR solutions based on ToF technology.

Our Stella series is engineered for automotive and security applications, offering robust 3D perception for autonomous navigation and perimeter detection. Notably, Stella modules are designed to support both short-range and long-range sensing, making them highly adaptable for indoor and outdoor environments across industries such as smart logistics, factory automation, industrial robotics, and spatial monitoring.

Stella series for automotive and security applications

As the demand for 3D vision continues to rise across industries like augmented reality, robotics, and autonomous mobility, the importance of choosing the right sensing technology grows as well. Whether it’s the precision of structured light, the flexibility of stereo vision, or the real-time performance of ToF, each solution offers distinct value.

With a comprehensive portfolio of 3D camera modules and a track record of successful integration in mass-production environments, NAMUGA is your trusted partner for next-generation depth-sensing solutions. We remain committed to advancing imaging technology that powers smarter, safer, and more immersive experiences.