Object Identification Functions

Capgemini Leverages Qualcomm Dragonwing Portfolio to Enhance Railway Monitoring with Edge AI

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. An AI device powered by Qualcomm Dragonwing boosts productivity and reduces cloud dependence in Capgemini’s monitoring application for grade crossings. Capgemini moved from their previous hardware solution to an edge AI device powered by the Qualcomm® Dragonwing™ QCS6490

Why Is 4K HDR Imaging Required in Front-view Cameras?

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Mobility systems require front-view cameras that depend on visual intelligence. Hence, the camera’s input is the starting point for every decision. Learn why 4K HDR imaging is critical in front-view cameras and explore five major

Snapdragon Ride: A Foundational Platform for Automakers to Scale with the ADAS Market

This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm. The automotive industry is well into the transformation of vehicle architectures and consumer-driven experiences. As the demand for advanced driver assistance systems (ADAS) technologies continues to soar, Qualcomm Technologies’ cutting-edge Snapdragon Ride Platforms are setting a new standard for automotive

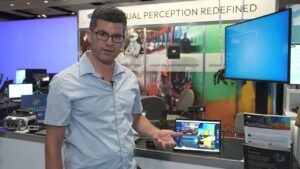

“Visual Search: Fine-grained Recognition with Embedding Models for the Edge,” a Presentation from Gimlet Labs

Omid Azizi, Co-Founder of Gimlet Labs, presents the “Visual Search: Fine-grained Recognition with Embedding Models for the Edge” tutorial at the May 2025 Embedded Vision Summit. In the domain of AI vision, we have seen an explosion of models that can reliably detect objects of various types, from people to…

How High-resolution Cameras Are Transforming Traffic Enforcement and Monitoring

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Smart traffic systems leverage embedded camera solutions to help manage roadways, record violations, and detect traffic anomalies. Get expert insights on how cameras work in these systems, their top use cases and applications, and key imaging

Texas Instruments Demonstration of Edge AI Inference and Video Streaming Over Wi-Fi

The demonstration shows how to use Texas Instruments’ AM6xA to capture live video, perform machine learning, and stream video over Wi-Fi. The video is encoded with H.264/H.265, and streamed via UDP over Wi-Fi using the CC33xx. At the receiver side, the video is decoded and displayed on a screen. The receiver side could be a

The Role of Embedded Cameras in Ensuring Perimeter Security

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. At any site, from industrial plants to data centers, a breach along the perimeter poses a major threat. That’s why embedded vision is so important. Discover how cameras work in these systems, their must-have features, as well as

Machine Vision Defect Detection: Edge AI Processing with Texas Instruments AM6xA Arm-based Processors

Texas Instruments’ portfolio of AM6xA Arm-based processors is designed to advance intelligence at the edge using high-resolution camera support, an integrated image signal processor, and a deep learning accelerator. This video demonstrates using the AM62A to run a vision-based artificial intelligence model for defect detection in manufacturing applications. Watch the model test the produced units as

“Introduction to Radar and Its Use for Machine Perception,” a Presentation from Cadence

Amol Borkar, Product Marketing Director, and Vencatesh Subramanian, Design Engineering Architect, both of Cadence, co-present the “Introduction to Radar and Its Use for Machine Perception” tutorial at the May 2025 Embedded Vision Summit. Radar is a proven technology with a long history in various market segments and continues to play an increasingly important role in

Alif Semiconductor Demonstration of Face Detection and Driver Monitoring On a Battery, at the Edge

Alexandra Kazerounian, Senior Product Marketing Manager at Alif Semiconductor, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Kazerounian demonstrates how AI/ML workloads can run directly on her company’s ultra-low-power Ensemble and Balletto 32-bit microcontrollers. Watch as the AI/ML AppKit runs real-time face detection using an

Inuitive Demonstration of On-camera SLAM, Depth and AI Using a NU4X00-based Sensor Module

Shay Harel, Field Application Engineer at Inuitive, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Harel demonstrates one of several examples his company presented at the Summit, highlighting the capabilities of its latest vision-on-chip technology. In this demo, the NU4X00 processor performs depth sensing, object

STMicroelectronics Demonstration of Real-time Object Detection and Tracking

Therese Mbock, Product Marketing Engineer at STMicroelectronics, and Sylvain Bernard, Founder and Solutions Architect at Siana Systems, demonstrate the companies’ latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Mbock and Bernard demonstrate using STMicroelectronics’ VD66GY and STM32N6 for real-time object tracking, ideal for surveillance and automation.

STMicroelectronics Demonstration of Real-time Multi-pose Detection

Therese Mbock, Product Marketing Engineer at STMicroelectronics, and Sylvain Bernard, Founder and Solutions Architect at Siana Systems, demonstrate the companies’ latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Mbock and Bernard demonstrate using STMicroelectronics’ VD55G1 and STM32N6 to detect human poses in real time, ideal for fitness, gestures, and gaming.

Robot-based Shelf Monitoring Cameras for Retail Operation Efficiency

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Robot-based shelf monitoring systems integrate cameras with autonomous robots. They help ensure seamless inventory tracking, planogram compliance, and shelf organization. Discover how these camera-based systems work and their must-have imaging features. Retail management has

Nextchip Demonstration of Various Computing Applications Using the Company’s ADAS SoC

Jonathan Lee, Manager of the Global Strategy Team at Nextchip, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Lee demonstrates various computing applications using his company’s ADAS SoC. Lee showcases how Nextchip’s ADAS SoC can be used for applications such as: Sensor fusion of iToF

Microchip Technology Demonstration of Real-time Object and Facial Recognition with Edge AI Platforms

Swapna Guramani, Applications Engineer for Microchip Technology, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Guramani demonstrates her company’s latest AI/ML capabilities in action: real-time object recognition using the SAMA7G54 32-bit MPU running Edge Impulse’s FOMO model, and facial recognition powered by TensorFlow Lite’s Mobile

3LC Demonstration of Debugging YOLO with 3LC’s Training-time Truth Detector

Paul Endresen, CEO of 3LC, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Endresen demonstrates how to uncover hidden treasures in the COCO dataset, like unlabeled forks and phantom objects, using his platform’s training-time introspection tools. In this demo, 3LC eavesdrops on a

VeriSilicon Demonstration of a Partner Application In the iEVCam

Halim Theny, VP of Product Engineering at VeriSilicon, demonstrates the company’s latest edge AI and vision technologies and products at the 2025 Embedded Vision Summit. Specifically, Theny demonstrates a customer’s SoC, developed in collaboration with a premier camera vendor, that uses an event-based sensor to detect motion, with processing handled by VeriSilicon’s NPU and vision DSP. This