This blog post was originally published by the Association for Unmanned Vehicle Systems International. It is reprinted here with the permission of AUVSI.

Autonomous devices rely on vision processing to move safely about their environments and interact meaningfully with the objects around them. An industry alliance assists product creators in incorporating practical computer vision capabilities into their autonomous device designs.

Vision technology is now enabling a wide range of products that are more intelligent and responsive than before, and thus more valuable to users. In particular, vision processing is a critical characteristic for robust device autonomy, and diverse types of autonomous devices are therefore collectively becoming a significant market for vision technology. An analogy with biological vision can help us understand why this is the case. In a recent lecture, U.C. Berkeley professor Jitendra Malik pointed out that in biological evolution, “perception arises with locomotion.” In other words, organisms that spend their lives in one spot have little use for vision. But when an organism can move, vision becomes very valuable.

In the technological world, by analogy, while it’s theoretically possible to build autonomous mobile devices without vision, it rarely makes sense to do so. Just as in the biological world, vision becomes essential when we create devices that move about. In order for autonomous devices to meaningfully interact with objects around them as well as safely move about their environments, they must be able to discern and interpret their surroundings. A few real-life examples, described in the following sections, will serve to tangibly illustrate this key concept.

Such image perception, understanding, and decision-making processes have historically been achievable only using large, heavy, expensive, and power-draining computers and cameras. Thus, computer vision has long been restricted to academic research and relatively low-volume production designs. However, thanks to the emergence of increasingly capable and cost-effective processors, image sensors, memories and other semiconductor devices, along with robust algorithms, it's now practical to incorporate computer vision into a wide range of systems. The Embedded Vision Alliance uses the term “embedded vision” to refer to this growing use of practical computer vision technology in embedded systems, mobile devices, PCs, and the cloud.

Autonomous Vehicles and Driver Assistance

We've been hearing a lot about autonomous cars lately – and for good reasons. Driverless cars offer enormous opportunities for improved safety, convenience, and efficiency. Their proliferation may have as profound an impact on our society as conventional automobiles have had over the past century. Widespread deployment of autonomous cars is going to take a while, given the complexity of the application and the associated technological and regulatory challenges. However, ADAS (advanced driver assistance systems) implementations are becoming increasingly widespread and increasingly robust today. And vision-based ADAS systems are a common element in current production designs.

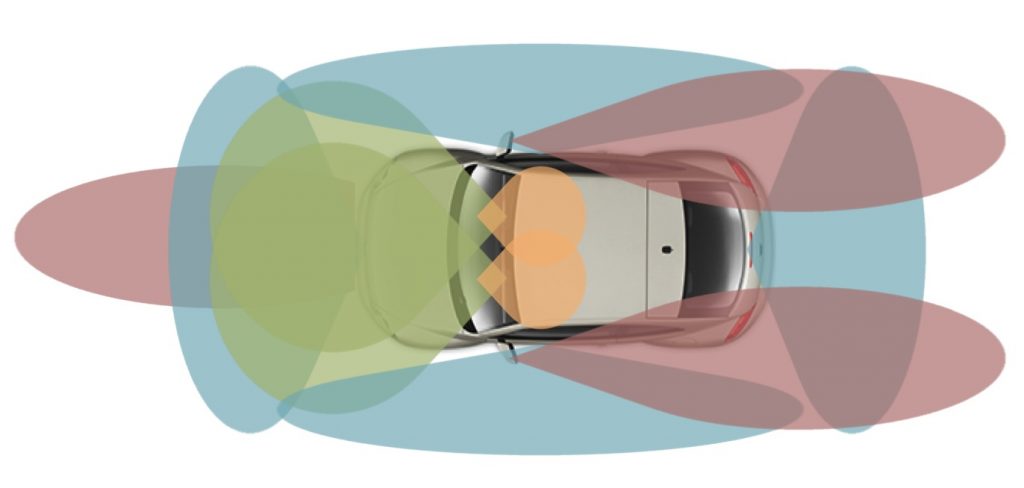

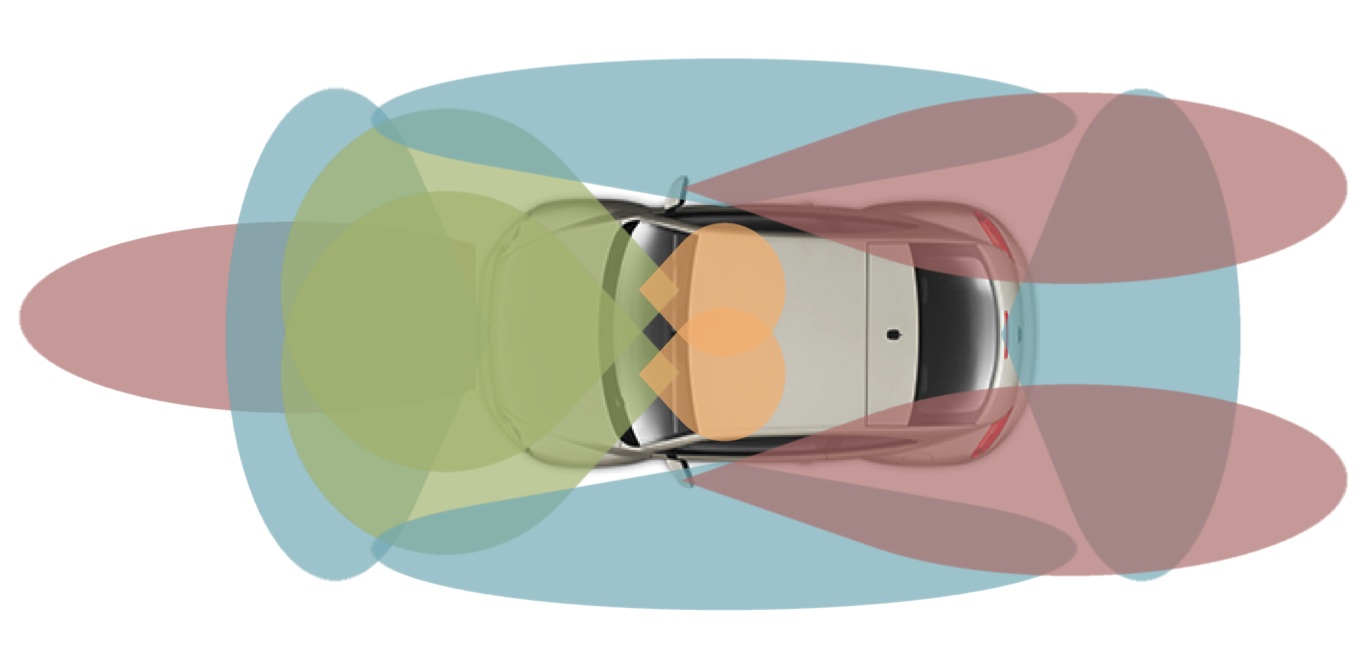

From its humble origins as a passive rear-view camera, visually alerting a driver to objects behind the vehicle, vision-based ADAS has become more active (e.g. applying brakes to prevent collisions), has extended to cameras mounted on other areas outside a vehicle (for front collision avoidance purposes, for example), and has even expanded to encompass the vehicle interior (sensing driver drowsiness and distraction, implementing gesture interfaces, etc.) (Figure 1).

Figure 1. Cameras mounted both in the vehicle interior and around its exterior enable robust driver assistance and fully autonomous capabilities (courtesy videantis).

Other sensor technologies such as radar or LIDAR (light detection and ranging) will likely continue to be included along with cameras in future vehicles, since computer vision doesn't perform as well after dark or in poor weather. But only vision enables a vehicle to discern the content of a traffic sign, for example, or to uniquely identify the person approaching a door or sitting behind the wheel. Vision also greatly simplifies the task of distinguishing between, say, a pedestrian and a tree trunk.

Drones

UAVs (unmanned aerial vehicles), i.e. drones, are a key future growth market for embedded vision. A drone's price point, and therefore its cost, is always a key consideration, so adding vision processing capabilities must not significantly increase the bill of materials. Maximizing flight time is equally important, so vision processing hardware and software must also add little weight and power consumption. Fortunately, the high performance, cost effectiveness, low power consumption, and compact form factor of various vision processing technologies have now made it possible to incorporate practical computer vision capabilities into drones. A rapid proliferation of the technology is therefore already well underway, exemplified by latest-generation drones such as DJI's Phantom 4, Inspire 2 and Mavic Pro (Figure 2).

Figure 2. Modern consumer and professional drones such as DJI's Phantom 4 (top), Inspire 2 (middle) and Mavic Pro (bottom) harness computer vision for image stabilization, object tracking, collision avoidance, terrain mapping and other capabilities (courtesy DJI).

Digital video stabilization and other computational photography techniques, for example, can improve the quality of the images captured by a drone's camera(s) without the need for expensive and bulky optical image stabilization hardware. And once the improved-quality footage is captured, it can be used not only for conventional archiving, viewing and sharing (on YouTube, for example), but can also be processed by the drone to autonomously avoid collisions with other objects, to automatically follow a person being filmed, and to analyze the drone's surroundings. Vision processing can thereby also make efficient use of available battery charge, by autonomously selecting the most efficient route from Point A to Point B.

Vision Resources for Autonomous System Developers

The Embedded Vision Alliance, a worldwide organization of technology providers, provides product creators with practical education, information and insights to help them incorporate vision capabilities into products. The Alliance’s annual technical conference and trade show, the Embedded Vision Summit, will be held May 1-3, 2017 in Santa Clara, California. Designed for product creators interested in incorporating visual intelligence into electronic systems and software, the Summit provides how-to presentations, inspiring keynote talks, demonstrations, and opportunities to interact with technical experts from Alliance member companies. Online registration and additional information on the 2017 Embedded Vision Summit are now available.

The Embedded Vision Alliance website provides tutorial articles, videos, and a discussion forum. The Alliance also offers a free online training facility for vision-based product creators: the Embedded Vision Academy. This area of the Alliance website provides in-depth technical training and other resources to help product creators integrate visual intelligence into next-generation software and systems. Course material in the Academy spans a wide range of vision-related subjects, from basic vision algorithms to image pre-processing, image sensor interfaces, and software development techniques and tools. Access is free to all through a simple registration process.