The brand-new iPhone X has an integrated neural engine for face recognition, but this is just the beginning. Embedded neural engines and dedicated intelligence processors are bringing artificial intelligence (AI) to edge devices, breaking their dependence on the cloud. The benefits of processing on the edge include reduced latency, availability even without network coverage, increased privacy and security, and less communication with the cloud, which lowers cost. With these benefits, mobile devices can harness the power of AI to do things that would have been considered science fiction not too long ago.

Machines of the past are now real-time data centers

I just got back from our annual symposium, where we saw firsthand how deeply AI is being adopted in the embedded world. Machines that were once purely mechanical, like cars, drones and robots, are now becoming intelligent, with the ability to see, sense, track, classify, detect, recognize, and much more. Today, these devices use computer vision and sensor fusion to collect and process data and to make real-time decisions. In some cases, as in autonomous cars and drones, the decisions are so critical that the latency of cloud processing would lead to unacceptable response times. With on-board intelligence, these machines are more accurately described as real-time data centers.

AI at the edge must process vast amounts of information in real time and with low power (Source: CEVA)

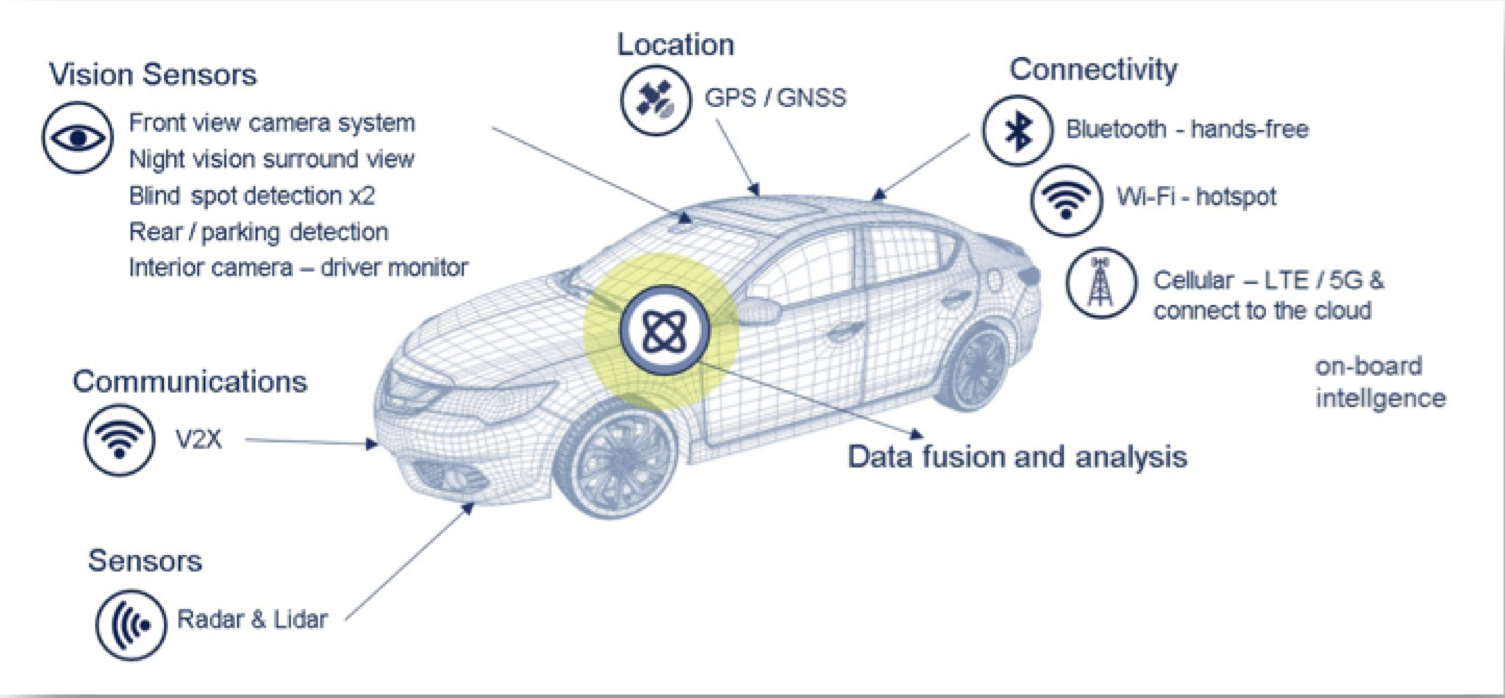

Take self-driving vehicles, for instance. They require a multitude of vision and other sensors, as well as satellite positioning and various connectivity solutions. There must also be a “brain” for data fusion and analysis. While cloud-based processing and information will also play a role in self-driving functionality, there must be on-board processors that can make split-second decisions, so that the car cannot malfunction even where network coverage is spotty. In these cases, a processor that can handle intense deep learning computations is a necessity, not an optional feature.

AI at the edge for automotive requires a high level of on-board intelligence (Source: CEVA)

Neural Network Processing at the Edge is Going Mainstream

In the field of smartphones, Apple is the touchstone that determines whether features are considered mainstream must-haves or niche-market gadgets. So, the announcement that its new flagship, the iPhone X, has a dedicated neural engine for processing AI at the edge is a big deal. It means, as my colleague predicted before the latest iPhones were announced, that soon every camera-enabled device will include a vision DSP or other dedicated neural network processor. The neural engine in the iPhone X enables the Face ID technology that lets users unlock their iPhones with a glance. The need for ultra-fast response, together with privacy and security concerns, dictates that the recognition processing be done on the phone. Now that the power of AI is in the device, many more exciting AI features are sure to follow.

Google also added capabilities to its latest flagship, the Pixel 2, with a processor the company calls the Pixel Visual Core. In the competitive smartphone arena, Google must differentiate itself from the herd, and one way it has done so is with superior camera software in its Pixel smartphones. However, the intensive computations needed to enhance images, create a single-lens bokeh effect, and improve the dynamic range of photos are not handled efficiently enough by the standard application processors found in most leading smartphones. Google’s decision to add a secondary chip for these features could therefore become a major differentiator down the road, if the chip is also used to add AI-based features. Huawei also recently announced a neural engine inside the Kirin 970, and many others are joining the race.

How do Vision DSP-based engines enable on-board intelligence?

While the benefits of edge processing are clear, it also comes with challenges. The first is how to perform the kind of data crunching normally done on huge servers on a tiny device in the palm of your hand, running on a battery that is already handling many other tasks. This is why vision DSPs are vital to the success of edge AI: their compact, power-efficient, yet powerful vector math capabilities make them the best candidate to handle neural engine workloads.

Another challenge is porting existing neural networks to an embedded DSP environment, which can consume a lot of development time and be very costly. However, automatic tools can enable a push-button, hassle-free transition that analyzes and optimizes networks for the embedded environment. The important thing for such a tool is to cover a large number of the most advanced networks, so that any network can easily be optimized to run on the embedded device at the edge.
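One common step when moving a network to an embedded processor is converting its 32-bit floating-point weights to 8-bit fixed-point values, which shrinks the data that must be moved around. This post does not say how CEVA's tools handle this, so the snippet below is only a generic, hypothetical sketch of symmetric per-tensor int8 quantization; the function names `quantize_int8` and `dequantize` are our own, not part of any CEVA API.

```python
import struct


def quantize_int8(weights):
    """Hypothetical sketch: symmetric per-tensor quantization to int8.

    Returns the int8 values plus the scale needed to map them back to floats.
    """
    max_abs = max(abs(w) for w in weights)
    # Map the largest magnitude to 127; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from int8 values."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.003, 1.0, -0.25]
q, scale = quantize_int8(weights)
approx = dequantize(q, scale)

# Storage footprint: 4 bytes per float32 weight vs. 1 byte per int8 weight.
float_bytes = len(struct.pack(f"{len(weights)}f", *weights))
int8_bytes = len(struct.pack(f"{len(q)}b", *q))
# Rounding error per weight is bounded by half a quantization step.
max_err = max(abs(a - b) for a, b in zip(weights, approx))
print(q, float_bytes, int8_bytes, max_err)
```

In this toy case the weights take a quarter of the storage, at the cost of a rounding error of at most half a step (`scale / 2`) per weight. Production tools typically add refinements such as per-channel scales, which this sketch leaves out.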

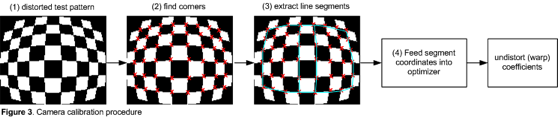

Faster RCNN – CEVA’s fully automatic Network Generator can significantly reduce bandwidth and keep bit accuracy (Source: CEVA)

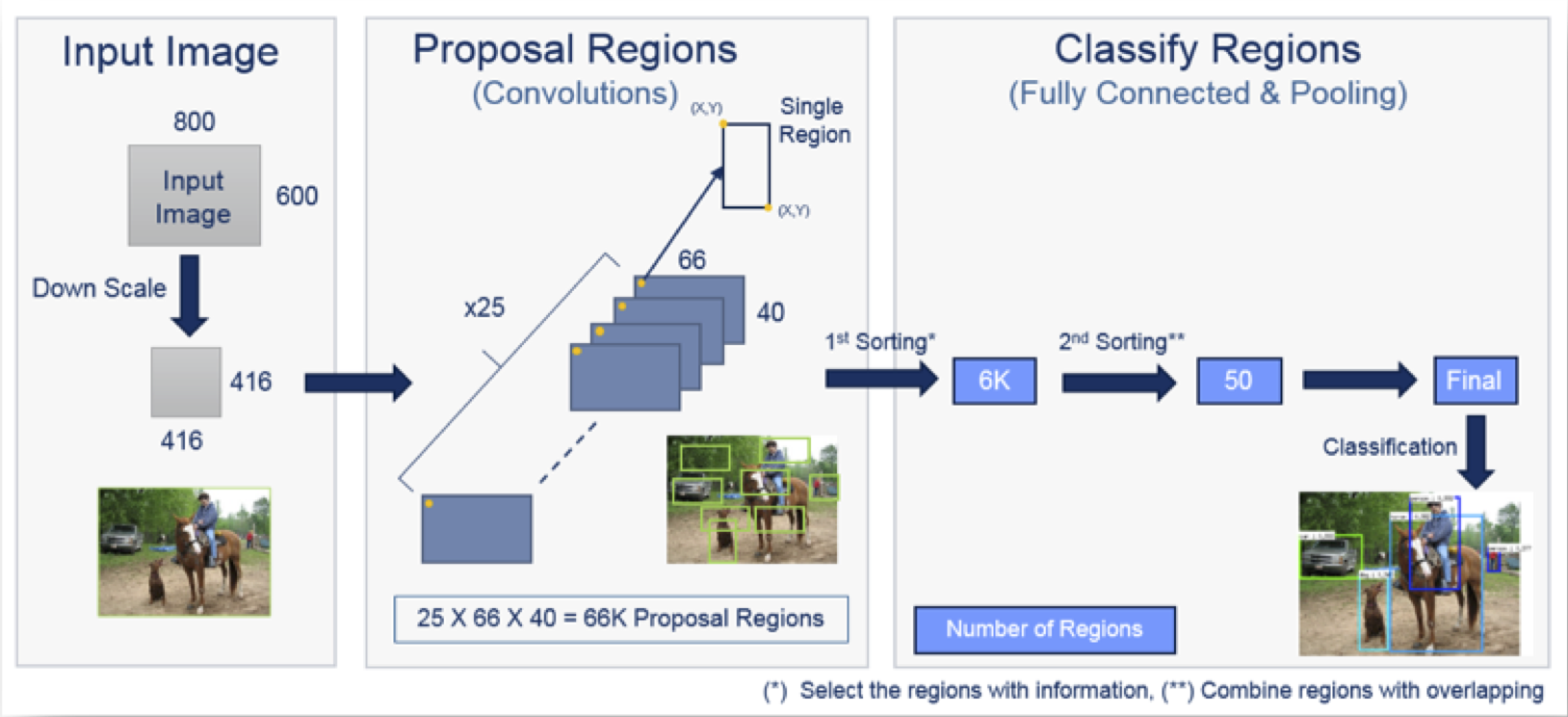

Once the porting and optimization process is complete, the input data is typically downscaled to enable faster processing with minimal loss of information. For example, the Faster RCNN flow has two phases: region proposal and region classification.
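The post does not specify the downscaling method. As a hypothetical illustration, the sketch below halves a grayscale image's width and height by averaging each 2×2 block of pixels, which cuts the pixel count (and the work for later stages) by a factor of four. The function name `downscale_2x` is our own.

```python
def downscale_2x(image):
    """Hypothetical sketch: halve width and height by 2x2 block averaging.

    `image` is a list of rows of grayscale values. An odd trailing row or
    column is dropped for simplicity.
    """
    h = len(image) // 2 * 2
    w = len(image[0]) // 2 * 2
    out = []
    for y in range(0, h, 2):
        row = []
        for x in range(0, w, 2):
            # Average the four pixels of this block into one output pixel.
            total = (image[y][x] + image[y][x + 1]
                     + image[y + 1][x] + image[y + 1][x + 1])
            row.append(total / 4)
        out.append(row)
    return out


image = [
    [10, 20, 30, 40],
    [30, 40, 50, 60],
    [0, 0, 100, 100],
    [0, 0, 100, 100],
]
small = downscale_2x(image)
print(small)
```

Block averaging is one simple choice. It smooths away detail finer than the new pixel size, which is acceptable when that detail is not needed for the proposal or classification phases.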

Example: Faster RCNN flow (Source: CEVA)

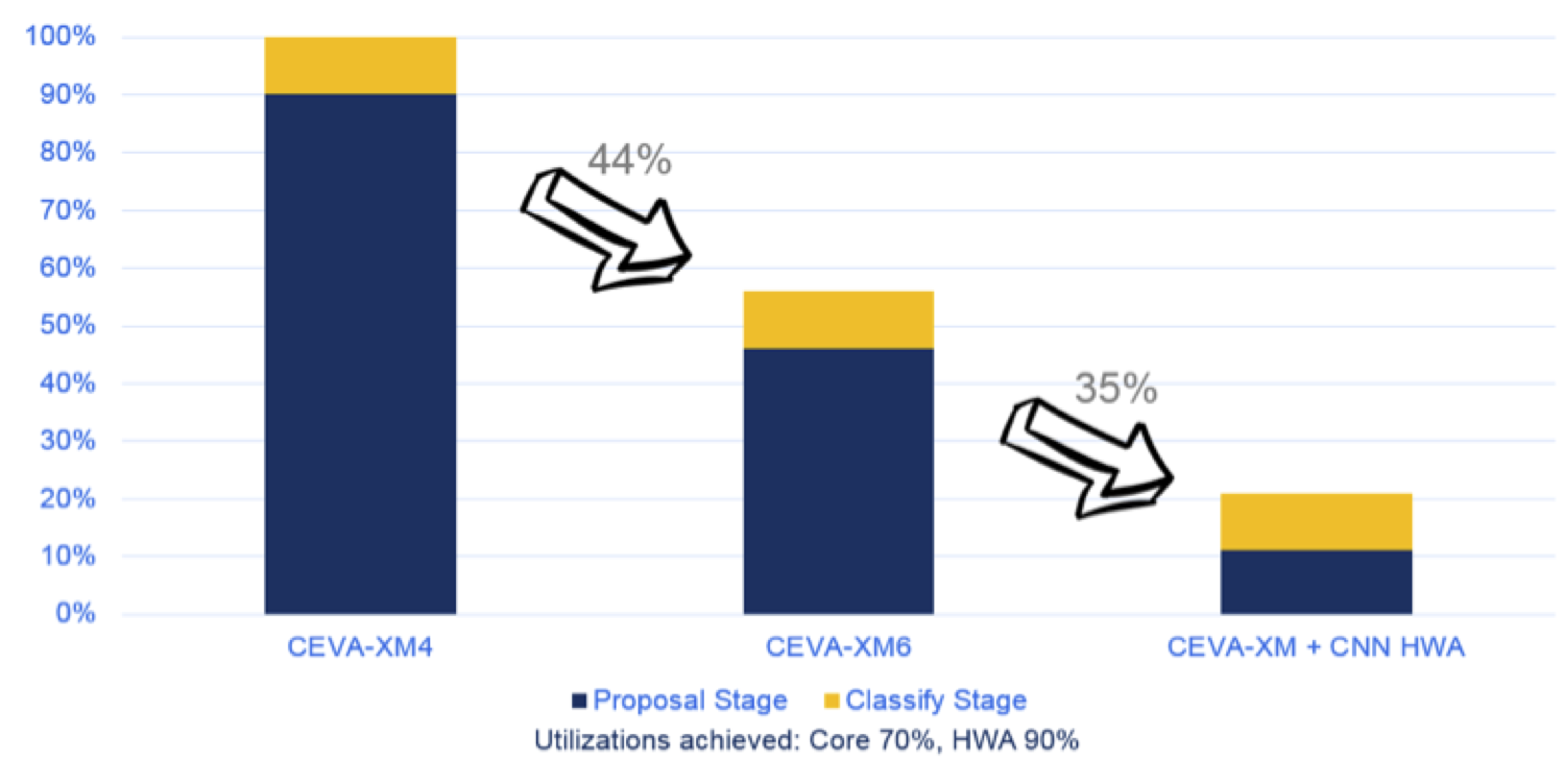

The CEVA-XM family cores are ultra-low-power vision DSPs that are ideal for this type of workload. Performance can be improved further by adding the CEVA-CNN Hardware Accelerator (HWA), which boosts neural network (NN) processing such as Faster RCNN. As the graph below shows, our fifth-generation vision processor, the CEVA-XM6, significantly improves performance compared to its award-winning predecessor, the CEVA-XM4. Adding the CEVA-CNN HWA takes performance another huge step forward.

Faster RCNN performance using CEVA-XM vision DSP family (Source: CEVA)

Deep-learning-based AI opens up endless opportunities for handheld devices: image enhancement yielding DSLR-quality photographs, augmented and virtual reality applications, sense-and-avoid navigation, detection, tracking, recognition, classification, segmentation, mapping, positioning, video enhancement and beyond. With that kind of power in the palm of your hand, the phone in your smartphone may start to seem like a side feature.

AI Fuels Visually Intelligent Applications (Source: CEVA)


By Liran Bar

Director of Product Marketing, Imaging & Vision DSP Core Product Line, CEVA