This blog post was originally published at Cadence's website. It is reprinted here with the permission of Cadence.

This week it is the Embedded Vision Summit in Santa Clara. Over the last few years, vision has gone from something esoteric to a mainstream part of many systems, with ADAS in automobiles, smart security cameras that analyze the video stream, drones that can avoid obstacles, and more. What is especially important is embedded vision, typically using cloud datacenters to do the training, but then doing all the recognition on-device, meaning on the SoC.

This week it is the Embedded Vision Summit in Santa Clara. Over the last few years, vision has gone from something esoteric to a mainstream part of many systems, with ADAS in automobiles, smart security cameras that analyze the video stream, drones that can avoid obstacles, and more. What is especially important is embedded vision, typically using cloud datacenters to do the training, but then doing all the recognition on-device, meaning on the SoC.

I have been writing about vision since I started the Breakfast Bytes blog. Indeed, one of the earliest posts I wrote was How Is Google So Good at Recognizing Cats? That was my first post on neural networks, artificial intelligence, deep learning, convolutional neural nets, and the other scaffolding that gets put together to make effective vision-based systems. As Arm's Tim Ramsdale memorably said at last year's Embedded Vision Summit:

It's a visual world. If I had a colleague come and drop a stack of plates at the back of the room, you'd all look round to see what happened, not listen harder.

The two keynotes were by Kateo Tanada, who has been in computer vision for as long as computer vision has existed, and Dean Kamen, who is most famous for the Segway, but has a long track record of inventing the first accurate insulin pump, the first home dialiysis machine, and a wheelchair that can climb stairs, and then sit up on two wheels so he could talk to us over the top of the podium. I'll cover the keynotes in a separate post.

Jeff Bier

Jeff talked about The Four Key Trends Driving the Proliferation of Visual Perception. He said that he wanted to add "and number 3 will amaze you" to the end of his title, but the organizers of the conference were too old-fashioned to go for such a click-bait title. The joke being the Jeff is the founder of the Embedded Vision Alliance and so is the head of the organization running the summit.

Jeff started with a couple of slides that he'd pulled from other people's presentations the day before, showing the exploding growth in investments in vision companies in both China and the US, and another graph showing how revenue in the segment is expected to increase by 25X from 2016 to 2025.

Computer vision has reached the point that it increasingly works well enough to be useful in real-world applications. Further, it can be deployed at low cost and low power consumption. Importantly, it is increasingly usable by non-specialists. This change in the market is underlaid by the four trends.

The first trend is that deep learning has, in many cases, surpassed human performance. Jeff had a chart showing ImageNet performance over the years (despite the confusing name, ImageNet is actually a tagged database of millions of images, not a network) with the error rate coming down until it is better than humans can do. This means that most vision no longer requires specialized vision algorithms honed for years for each application, and so it is much more scalable. It is not so much about the algorithm as the training data.

In some hard tasks, like images recorded in very low light, humans are not even that good. But vision algorithms can "learn to see in the dark."

The next trend is processors are improving. Jeff showed a similar calculation last year, but the numbers have gone up. Vision algorithms will improve by 2-37X (average 18X) in the next 3 years or so. The processors themselves will improve by 15X-300X (average 157X). Middleware, tools and frameworks will improve 3-14X (average 8X). But these are multiplicative so in total the improvement comes to 26,000X. This is a revolution, an improvement of 3 or 4 orders of magnitude.

There are roughly 50 companies working on processors for deep learning or for inference (mostly inference). With the recent announcement of the Tensilica Q6 processor (see my post A New Era Needs a New Architecture: The Tensilica Vision Q6 DSP) Cadence is one of the leaders in the space. More information coming up later in this post. In the recent computer vision survey that Jeff had run (see my post Tools and Processors for Computer Vision) about 1/3 of people surveyed were using specialized vision processors, a category that didn't even exist a few years ago.

The final trend is democratization. It is getting easier to do computer vision without heing a computer vision specialist, which is just as well since there is a very limited supply of them. It is not just getting easier to create solutions, but also to deploy them at scale. Often you can just select a deep neural network from the literature, train it on your own data. With ubiquitous cloud computing, this reduces the dime to deployment. As Jeff put it "in the time it takes you to get an embedded board set up and booted, you can have it all working in the cloud". Obviously, you also have as much compute power as you need for the big peak of doing the training.

Jeff finished with some fun videos. Google Clips, which is an AI-based camera that automatically takes pictures of "things that are interesting". Keynote speaker Dean Kemen's iBot 2 will have autonomous navigation. Of course self-driving cars are coming…whenever. But there are lots and lots of other autonomous machines that are being deployed in the meantime. Even the latest Roomba's have vision.

And the ProPilot Nissan auto parking:

Megha Daga

Cadence's Megha Daga talked about Enabling Rapid Deployment of DNNs on Low-cost Low-power Processors. She started talking about the trend towards doing on-device inference, which has the challenges that it has to be low power, low area (cost), programmable, and with both low latency and efficient memory management.

Cadence's Megha Daga talked about Enabling Rapid Deployment of DNNs on Low-cost Low-power Processors. She started talking about the trend towards doing on-device inference, which has the challenges that it has to be low power, low area (cost), programmable, and with both low latency and efficient memory management.

The big challenge is that once you have the hardware, how do you program it? You want an optimal implementation, but market needs change, the DNNs are changing, new research is being published, so you need to not just make it optimal once, but keep up with changes.

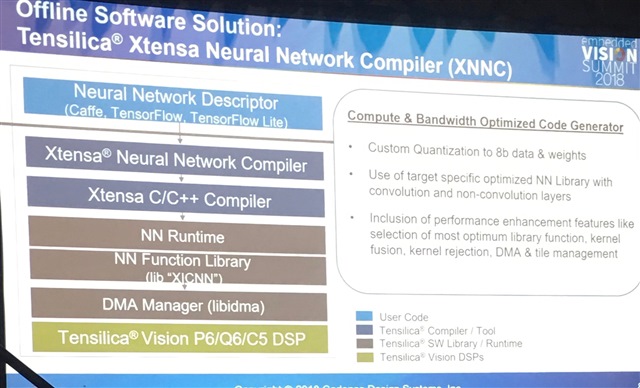

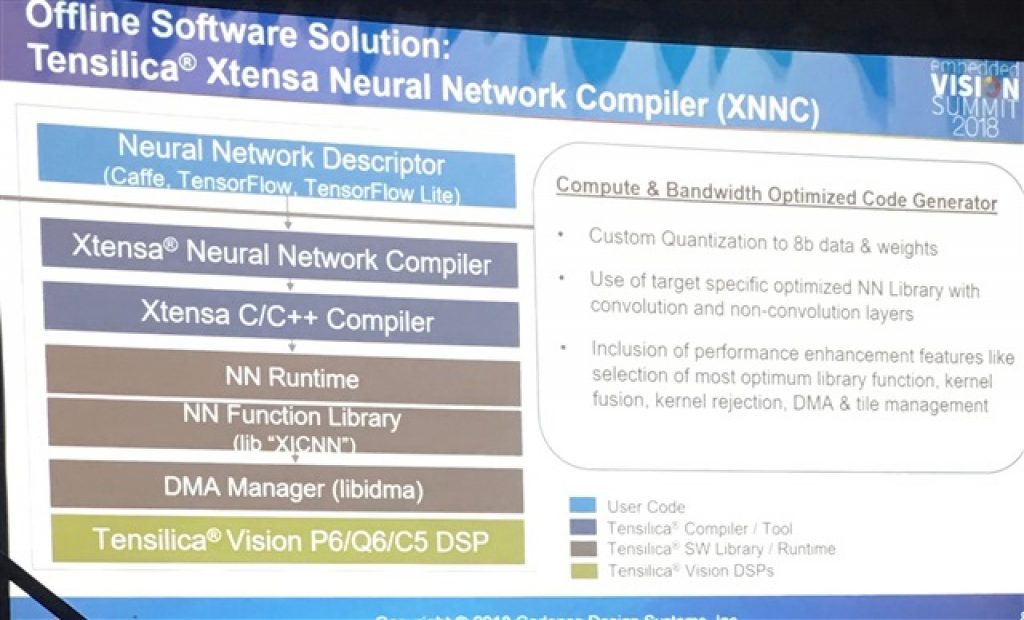

One of the things that I don't think most of us outside of the field anticipated was the massive amount of quantization that can be done with negligible accuracy impact. Most nets are trained in the cloud using GPUs which use 32-bit floating point. So it seems "obvious" that inference is going to require a lot of 32-bit floating point. But it turns out that with care, the network can be automatically reduced to 8-bit (and often even lower). Cadence has our own custom quantizaton approaches which are built into the Tensilica Xtensa Neural Network Compiler (XNNC). The error is about 1% for the top 1 error, and 0.5% for the top 5 errors. It goes without saying that doing all the computations in 8-bit requires a lot less power and can be done a lot faster than 32-bit floating point (or even 16 bit fixed point). The Vision Q6 DSP is optimized for doing huge numbers of 8-bit MACs in parallel and efficiently feeding the data through DMA interfaces.

The demo that we have been using this year (at Embedded World, Mobile World Congress, and now the Embedded Vision Summit) has taken two different neural nets trained on ImageNet and run them through XNNC and the rest of the Tensilica tool flow and run on a DreamChip SoC. This actually contains 4 Vision P6 processors, but the demo just uses one of them, and you can see it in action recognizing images.

So the overall trend is to "see, hear, and speak more clearly with on-device AI".