| LETTER FROM THE EDITOR |


Dear Colleague,

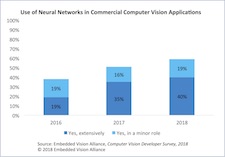

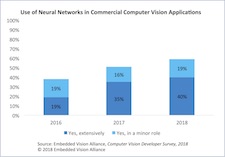

Every year the Embedded Vision Alliance surveys computer vision developers to understand what chips and tools they use to build visual AI systems. This is our sixth year conducting the survey, and we want to make sure we have your input, since many technology suppliers use the survey results to guide their product roadmaps. We share the results from the Computer Vision Developer Survey at Embedded Vision Alliance events, in white papers (last year's results are available on the Alliance website), and in presentations made available throughout the year on the Embedded Vision Alliance website. I'd really appreciate it if you'd take a few minutes to complete this year's survey. As a thank-you, we will send you a coupon for $50 off the price of a two-day Embedded Vision Summit ticket (to be sent when registration opens in December). In addition, after completing the survey you will be entered into a drawing for one of two $100 Amazon gift cards. Thank you in advance for your perspective. Start the survey.

Deep Learning for Computer Vision with TensorFlow 2.0 is the Embedded Vision Alliance's in-person, hands-on technical training class. The next session will take place November 1 in Fremont, California, hosted by Alliance Member company Mentor. This one-day overview will give you the critical knowledge you need to develop deep learning computer vision applications with TensorFlow. Details, including online registration, are available on the class's event page.

Are you interested in learning more about the trends driving the proliferation of visual AI? The Embedded Vision Alliance will deliver a free webinar on this topic on October 16. Jeff Bier, founder of the Alliance and co-founder and President of BDTI, will examine the four most important trends fueling the development of vision applications and influencing the future of the industry. He will explain what's driving each trend and highlight its implications for technology suppliers, solution developers and end users. He will also provide technology and application examples illustrating each trend, including the winners of the Alliance's yearly Vision Product of the Year Awards (see below for more information on the awards). Two webinar sessions will be offered: the first, at 9 am Pacific Time (noon Eastern Time), is timed for attendees in Europe and the Americas, while the second, at 6 pm Pacific Time (9 am China Standard Time on October 17), is intended for attendees in Asia. To register, please see the event page for the session you're interested in.

Brian Dipert

Editor-In-Chief, Embedded Vision Alliance


| INTELLIGENT CAMERA DEVELOPMENT |


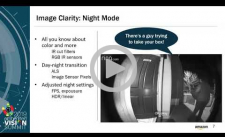

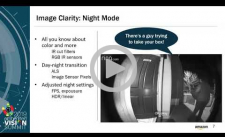

Designing Home Monitoring Cameras for Scale

In this talk, Ilya Brailovskiy, Principal Engineer, and Changsoo Jeong, Head of Algorithm, both of Ring, discuss how Ring designs smart home video cameras to make neighborhoods safer. In particular, they focus on three elements of the system that are critical to the overall quality of the product. First, they discuss motion detection, which is used to detect security events and alert users. The central challenge here is making the algorithm reliable and robust under all environmental conditions, such as indoor or outdoor, day or night, sun or rain. Next, they examine a related challenge: rendering video that people can usefully view across this wide range of imaging conditions. Finally, they explore how to make video streaming work reliably under varying network conditions. For each of these challenges, they present techniques that scale to mass-production devices.

Accelerating Smart Camera Time to Market Using a System-on-module Approach

Bringing a vision system product to market can be costly and time-consuming. Teknique's Oclea System-on-Module (SoM) is an off-the-shelf, integrated camera module that enables device makers to streamline the development process, reducing development risk, cost and time. In this presentation, Ian Billing, the company's Quality Assurance Manager, demonstrates how the Oclea SoM enables product developers to use modular components to quickly integrate a camera into their next product. This approach allows developers to focus on their product's unique capabilities without having to spend time on details such as image tuning and camera sensor drivers. Using an Oclea SoM, a camera can be integrated and running in a new system within weeks, in contrast to a custom-camera approach, which can take up to 12 months. Billing presents the capabilities of the Oclea SoM and shows how this groundbreaking platform is being used in a variety of smart camera applications, enabling companies to bring vision-enabled products to market much faster, and with much lower development cost and risk than traditional approaches.


| TEMPORAL IMAGE UNDERSTANDING TECHNIQUES |


Beyond CNNs for Video: The Chicken vs. the Datacenter

The recent revolution in computer vision derives much of its success from neural networks for image processing. These networks run predominantly in datacenters, where the training data consists mostly of photographs. Because of this history, the networks used for image processing fail to exploit temporal information. In fact, convolutional neural networks are unaware that time exists, leading to overly complex networks with strange artifacts. Remarkably, even the lowly chicken knows better, bobbing its head while walking to integrate information over time in modeling the world. Isn’t it time we learned from the chicken? In this presentation, Steve Teig, Chief Technology Officer at Xperi, explores how we can.

Neuromorphic Event-based Vision: From Disruption to Adoption at Scale

Neuromorphic event-based vision is a new paradigm in imaging technology, inspired by human biology. It promises to dramatically improve machines' ability to sense their environments and make intelligent decisions about what they see. Like human vision, Prophesee's event-based vision technology dynamically captures only the most useful and relevant events in a scene. Compared with traditional frame-based systems, this greatly reduces power consumption, latency and data-processing requirements. In this talk, Luca Verre, the company's co-founder and CEO, explains the underlying operation of Prophesee's event-based sensors and vision systems. He also demonstrates how these products open up vast new potential in applications such as autonomous vehicles, industrial automation, IoT, security and surveillance, and AR/VR, improving safety, reliability, efficiency and user experiences across a broad range of use cases.
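The talk itself does not describe Prophesee's sensor internals. As a rough illustration of the general event-based principle, the widely used idealized event-camera model has each pixel report an event only when its log intensity changes by more than a contrast threshold. The sketch below approximates that model from two conventional frames. The function name `frames_to_events`, the 0.2 threshold and the `eps` offset are illustrative assumptions, not Prophesee parameters, and real event sensors respond asynchronously per pixel with fine-grained timestamps rather than comparing whole frames.

```python
import numpy as np


def frames_to_events(prev_frame, curr_frame, threshold=0.2, eps=1e-3):
    """Approximate event-camera output between two grayscale frames.

    Hypothetical helper illustrating the idealized contrast-threshold model:
    a pixel emits an ON event (+1) if its log intensity rose by more than
    `threshold`, an OFF event (-1) if it fell by more than `threshold`,
    and nothing otherwise.
    """
    # Work in log intensity; eps avoids log(0) for black pixels.
    prev_log = np.log(prev_frame.astype(np.float64) + eps)
    curr_log = np.log(curr_frame.astype(np.float64) + eps)
    delta = curr_log - prev_log

    polarity = np.zeros(delta.shape, dtype=np.int8)
    polarity[delta > threshold] = 1
    polarity[delta < -threshold] = -1

    # Only changed pixels produce output, as (x, y, polarity) tuples
    # in row-major order.
    ys, xs = np.nonzero(polarity)
    return [(int(x), int(y), int(polarity[y, x])) for y, x in zip(ys, xs)]


prev = np.full((4, 4), 100, dtype=np.uint8)
curr = prev.copy()
curr[1, 2] = 200  # brighter pixel -> ON event
curr[3, 0] = 40   # darker pixel -> OFF event
print(frames_to_events(prev, curr))
```

In this example only two of the 16 pixels change, so the function returns two events instead of 16 pixel values; a static scene produces no output at all. That data reduction is the source of the power, latency and processing savings the abstract describes.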


| UPCOMING INDUSTRY EVENTS |


Embedded Vision Alliance Webinar – Key Trends in the Deployment of Visual AI: October 16, 2019, 9:00 am PT and 6:00 pm PT

Technical Training Class – Deep Learning for Computer Vision with TensorFlow 2.0: November 1, 2019, Fremont, California

Renesas Webinar – Renesas' Dynamically Reconfigurable Processor (DRP) Technology Enables a Hybrid Approach for Embedded Vision Solutions: November 13, 2019, 10:00 am PT

Embedded AI Summit: December 6-8, 2019, Shenzhen, China



| VISION PRODUCT OF THE YEAR SHOWCASE |


Intel OpenVINO Toolkit (Best Developer Tools)

Intel's OpenVINO Toolkit is the 2019 Vision Product of the Year Award Winner in the Developer Tools category. The OpenVINO toolkit (available as either the Intel Distribution or the Open Source version) is a free software developer toolkit for prototyping and deploying high-performance computer vision and deep learning (DL) applications from device to cloud across a broad range of Intel platforms. The toolkit helps speed up computer vision workloads, streamline DL deployments, and enable easy heterogeneous execution across Intel processors and accelerators. This flexibility gives developers multiple options for choosing the right combination of performance, power and cost efficiency for their solution's needs.

Please see here for more information on Intel and its OpenVINO Toolkit product. The Vision Product of the Year Awards are open to Member companies of the Embedded Vision Alliance and celebrate the innovation of the industry's leading companies that are developing and enabling the next generation of computer vision products. Winning a Vision Product of the Year award recognizes leadership in computer vision as evaluated by independent industry experts.


| FEATURED NEWS |


CEVA Introduces New AI Inference Processor Architecture for Edge Devices with Co-processing Support for Custom Neural Network Engines

Upcoming Infineon Conference Explores Advanced Technologies That Link the Real and Digital Worlds

Morpho Surpasses 3 Billion Mark in Image Processing Software Licenses

Huawei Unveils Next-Generation Intelligent Product Strategy and New +AI Products

ON Semiconductor Demonstrates Fully Integrated In-Cabin Monitoring System with Ambarella and Eyeris

