How an Automotive Tier 1 Supplier Used Atlas to Improve Computer Vision Accuracy By Up to 48% mAP Within Days

This blog post was originally published at Algolux’s website. It is reprinted here with the permission of Algolux.

Computer vision is a fundamental technology in automotive Advanced Driver Assistance Systems (ADAS) for pedestrian, vehicle, or road sign detection. However, today’s vision architectures (optics, sensor, and Image Signal Processor) are usually designed and tuned manually for pleasing visual image quality (IQ). ISP tuning must also be done for each new lens-sensor configuration.

Image processing that produces visually pleasing images for the human eye is not optimal for computer vision. Capturing and annotating a new training dataset with that specific camera configuration is also hugely time-consuming, costly, and impractical. Instead, an ISP optimization approach that automatically maximizes computer vision metrics is needed.

In this case study, we applied the Atlas Camera Optimization Suite to automatically maximize computer vision accuracy for a front-facing vision system from a leading automotive Tier 1. Atlas was used to optimize the image processing functions of the system’s Renesas V3H ISP paired with the Sony IMX490 High Dynamic Range (HDR) image sensor to determine an optimal ISP configuration for two pre-trained object detection models.

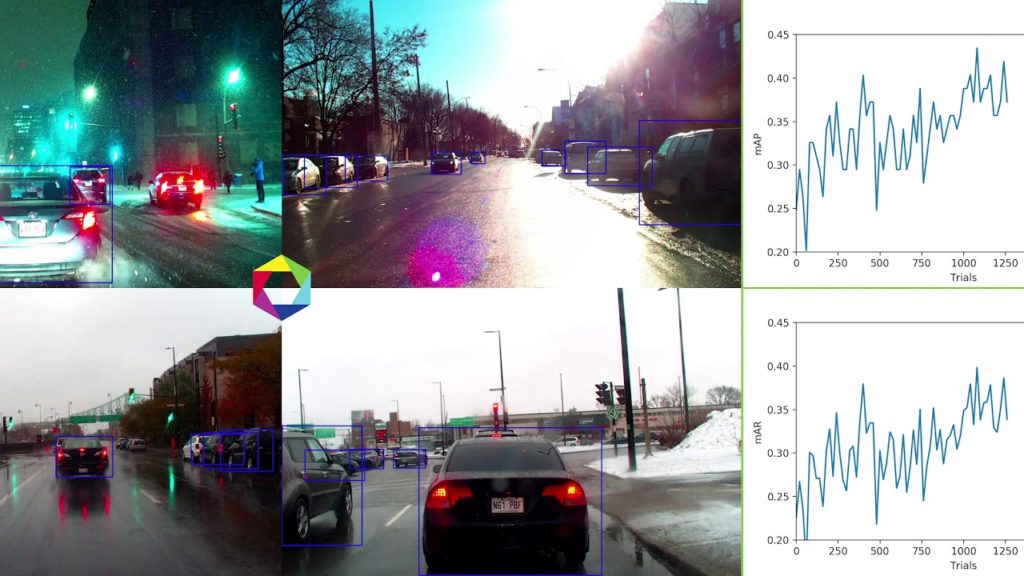

The Atlas-optimized ISP configuration improved the accuracy of the customer’s YOLOv4 model by up to 28% Mean Average Precision (mAP) points compared to the original configuration. Optimizing the ISP with another application-specific embedded vision model improved the detection accuracy further by up to 48% mAP points.

In addition to improved accuracy, the automated workflow optimized the system in a few days compared to several months of expert ISP tuning effort typically seen when using today’s manual tuning workflows.

KEY TAKEAWAYS

- Computer vision accuracy can be improved by optimizing – rather than tuning – camera ISPs for either AI or classical computer vision models.

- Any lens, sensor, and ISP combination can be supported to optimize visual image quality or maximize computer vision results.

- Atlas optimization is highly scalable and can be done in days vs. today’s costly and months-long manually intensive ISP tuning process.

Today’s Camera ISP Tuning Process

Cameras must be tuned every time a new lens or sensor is integrated with the ISP. The goal is typically subjectively pleasing image quality (IQ) for viewing, even though cameras are increasingly used for computer vision in many safety-critical applications.

The camera’s ISP has hundreds of parameters that control each pipeline block.

Typical Automotive Image Signal Processor

Image quality tuning is a highly iterative and complex manual process requiring a team of imaging experts over many weeks or months to determine the best parameter settings for each ISP block within the program schedule.

Typical ISP tuning process

Tuning Challenges for Computer Vision

For human viewing, manual tuning can still achieve good visual IQ, but it requires scarce experts with specific ISP domain knowledge. Outsourcing can address some gaps, but those teams are also resource-limited. Because visual IQ is ultimately subjective, it is difficult to know when tuning is complete, and tuning must be repeated every time a camera component is changed. Costs may exceed $50,000 per camera program, and the work can run for many months. This approach is neither scalable nor predictable.

This process also does not provide the optimal output for computer vision.

- Imaging teams cannot subjectively see, and manually tune for, the best image quality for specific vision algorithms

- “Rules of thumb”, such as increasing contrast and sharpness, do not generalize across model architectures and training sets

- The very large ISP parameter space can’t be evaluated within a practical timeframe

An alternative optimization-based approach is required to maximize computer vision results.

Atlas Camera Optimization for Computer Vision

Accurate and robust detection in all conditions is the objective for computer vision, and it is paramount for safety-critical applications such as automotive. As we’ve seen, today’s subjective and manually intensive ISP tuning does not deliver this. What is required is a move away from traditional tuning workflows toward the automated, metric-driven ISP parameter optimization provided by the Atlas Camera Optimization Suite.

Atlas Camera Optimization Suite to Maximize Computer Vision Results

Atlas is the only commercially available camera/ISP optimization solution that addresses this challenge. It applies novel solvers to handle the massive and very rugged parameter space and maximize computer vision metrics for any camera and vision task. Learn more about Atlas.

The Atlas Workflow

A small dataset of field RAW images is captured with the target camera module and annotated. It must contain a distribution of images that exercise the operational range of the camera and use case scenarios. The distribution covers the exposure times and gains the sensor is capable of in the expected low to bright light scenes, including High Dynamic Range (HDR), and low to high contrast. The dataset also needs to have good coverage of the target detector model’s classes and object sizes. If image quality metrics are to be evaluated or optimized, a small set of RAW lab chart images would also be captured.
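As a rough illustration of the coverage requirement above, the sketch below bins frames by sensor gain and reports bins with too few images. The metadata layout (a dict with a `gain` key), the bin edges, and the function name are hypothetical and are not part of Atlas; the same idea would apply to exposure time, object class, or object size.

```python
from collections import Counter

def coverage_gaps(frames, gain_edges, min_per_bin=1):
    """Return the gain bins that hold fewer than min_per_bin frames.

    frames:     iterable of dicts with a 'gain' key (hypothetical layout)
    gain_edges: ascending bin edges; bins are half-open [lo, hi)
    """
    bins = list(zip(gain_edges, gain_edges[1:]))
    counts = Counter()
    for frame in frames:
        for lo, hi in bins:
            if lo <= frame["gain"] < hi:
                counts[(lo, hi)] += 1
                break  # each frame lands in at most one bin
    return [b for b in bins if counts[b] < min_per_bin]

# Example: no frame was captured between 4x and 16x gain.
frames = [{"gain": 1.0}, {"gain": 2.0}, {"gain": 20.0}]
gaps = coverage_gaps(frames, gain_edges=[1, 4, 16, 64])
```

Any bin returned here would suggest capturing more images under those conditions before starting an optimization run.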

Since the objective is to optimize an ISP and not to train a neural network, this dataset can be orders of magnitude smaller than a training dataset: several hundred to a few thousand images, rather than the hundreds of thousands or millions of annotated images typically used to train a network.

Each frame is tagged with exposure and gain information from the sensor module so the sensor state is known for each image. That metadata is also used in the optimization process so the ISP parameter modulation functions can be built to control the image quality for the particular capture conditions.
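One simple way to picture a parameter modulation function is as a piecewise-linear curve over sensor gain. The sketch below assumes that form; the knot values, the `denoise` parameter, and the function name are hypothetical, and the actual modulation functions built by Atlas may look quite different.

```python
from bisect import bisect_right

def modulate(gain, knots):
    """Piecewise-linear interpolation of one ISP parameter over sensor gain.

    knots: list of (gain, value) pairs sorted by gain. Values are clamped
    to the first/last knot outside the covered gain range.
    """
    gains = [g for g, _ in knots]
    if gain <= gains[0]:
        return knots[0][1]
    if gain >= gains[-1]:
        return knots[-1][1]
    i = bisect_right(gains, gain)          # index of the first knot above gain
    (g0, v0), (g1, v1) = knots[i - 1], knots[i]
    t = (gain - g0) / (g1 - g0)
    return v0 + t * (v1 - v0)

# Hypothetical denoise-strength knots: stronger denoising at higher gain.
DENOISE_KNOTS = [(1.0, 0.1), (4.0, 0.3), (16.0, 0.8)]
denoise_at_2_5x = modulate(2.5, DENOISE_KNOTS)   # halfway between the first two knots
```

Under this assumption, the optimizer would search over the knot values rather than a single fixed setting, so the ISP can behave differently in low-gain and high-gain captures.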

This small RAW image dataset is run through the ISP and into the detector model. Computer vision accuracy metrics, such as average precision and recall of the detected object classes, are then evaluated for each trial ISP configuration.
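To make the accuracy metric concrete, here is a minimal sketch of average precision for one class, computed as the area under the interpolated precision-recall curve, and mAP as the mean across classes. It assumes each detection has already been matched against ground truth (for example by an IoU threshold) and flagged as a true or false positive; the function names and data layout are illustrative and are not the evaluation code used in the case study.

```python
def average_precision(detections, num_ground_truth):
    """Area under the interpolated precision-recall curve for one class.

    detections: list of (confidence, is_true_positive) pairs
    num_ground_truth: number of annotated objects of this class
    """
    if num_ground_truth == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    precisions, recalls = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Replace each precision by the best precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap

def mean_average_precision(per_class):
    """per_class: dict mapping class name -> (detections, num_ground_truth)."""
    aps = [average_precision(d, n) for d, n in per_class.values()]
    return sum(aps) / len(aps) if aps else 0.0

# One trial ISP configuration, two ground-truth objects, three detections.
ap = average_precision([(0.9, True), (0.8, False), (0.7, True)], 2)
```

In an optimization loop, a score like this would be recomputed for every trial ISP configuration, with the detector weights held fixed.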

Algolux Atlas Camera Optimization Suite Workflow for Computer Vision

Visual image quality KPIs can be directly measured on the image, but for computer vision-only systems, how visually pleasing the images look is unimportant so long as the final ISP parameter configuration enables the vision model to produce more accurate detections.

This novel optimization framework is applied to find the best ISP parameters for a specific pre-trained deep-learned or classical computer vision model by minimizing a loss function defined on those accuracy KPIs. One key breakthrough of the Atlas optimizer is its ability to optimize over this very rugged, non-convex ISP parameter space.

These ISP pipelines are not differentiable and do not necessarily optimize well with typical gradient-based approaches. There can be null spaces in registers or non-linear stepwise behaviors as an ISP parameter crosses a threshold that is internal to an algorithm, so the optimizer needs to be robust and be able to split up and explore the solution space to escape the massive number of local minima.
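The toy example below illustrates why a derivative-free search with restarts suits this kind of landscape. It is not the Atlas solver, which is not described in this post: `toy_loss` is a made-up stand-in for "1 - mAP" with register-style quantization (flat steps, so gradients are zero almost everywhere) and several local minima, and `random_restart_search` is a plain random-restart stochastic hill climb.

```python
import math
import random

def toy_loss(params):
    """Made-up stand-in for 1 - mAP: stepwise and multi-modal."""
    total = 0.0
    for p in params:
        q = math.floor(p * 16) / 16   # quantization creates flat steps
        total += (q - 0.5) ** 2 + 0.05 * math.cos(12 * math.pi * q)
    return total

def random_restart_search(loss, dim, restarts=8, steps=200, seed=0):
    """Derivative-free search over [0, 1]^dim from several random starts."""
    rng = random.Random(seed)
    best_x, best_f = None, float("inf")
    for _ in range(restarts):
        x = [rng.random() for _ in range(dim)]
        f = loss(x)
        sigma = 0.25
        for _ in range(steps):
            cand = [min(1.0, max(0.0, xi + rng.gauss(0, sigma))) for xi in x]
            fc = loss(cand)
            if fc <= f:               # accept ties to walk across plateaus
                x, f = cand, fc
            else:
                sigma = max(0.01, sigma * 0.98)   # shrink step after a miss
        if f < best_f:
            best_x, best_f = x, f
    return best_x, best_f

best_params, best_loss = random_restart_search(toy_loss, dim=3)
```

Each restart explores a different region of the space, which is one simple way to avoid settling in the first local minimum found. A real ISP has hundreds of parameters and each trial requires running the dataset through the ISP and the detector, so a production solver needs to be far more sample-efficient than this sketch.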

Atlas projects require some initial optimizations to ensure proper ISP configuration and convergence, and then a final optimization to maximize results. This typically takes anywhere from 4000 to 8000 trials (or iterations) over a few days to explore the parameter space and converge on an optimized result, depending on such factors as the number of parameters and KPI objectives that are being optimized.

This optimization process can also be applied to automate and significantly accelerate visual image quality tuning or optimize for both computer vision and visual image quality goals.

See here to download the case study.