This market research report was originally published at Yole Développement’s website. It is reprinted here with the permission of Yole Développement.

Deep neural networks are playing an increasingly important role in machine vision applications.

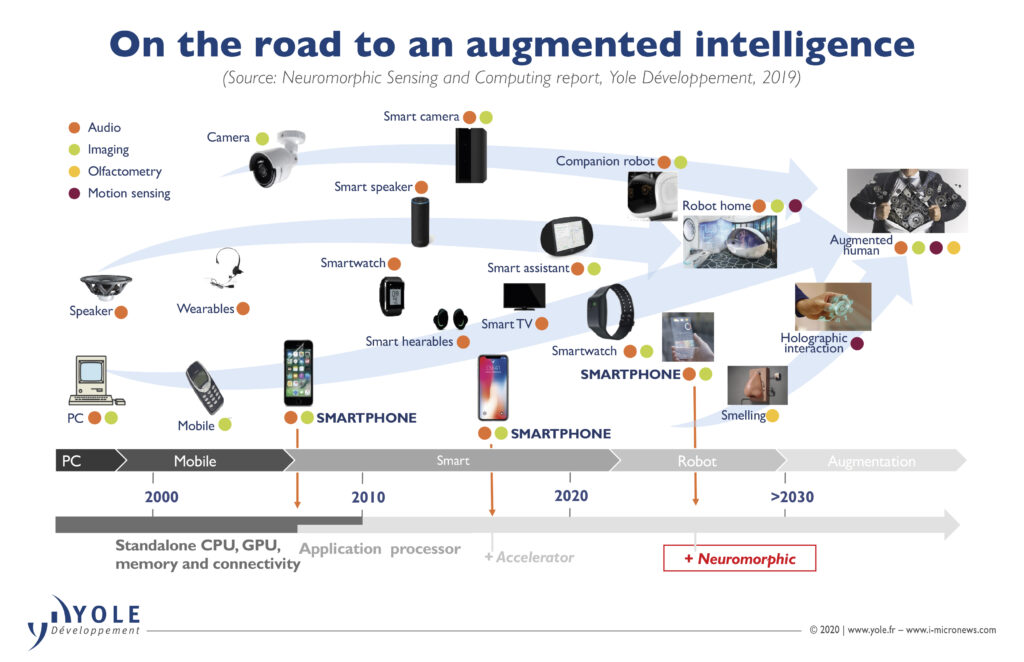

An article written by Adrien Sanchez, Technology and Market Analyst within the Computing & Software division at Yole Développement (Yole), for Vision Spectra Magazine – The increasing volumes of data required for smart devices are prompting a reevaluation of computing performance, inspired by the human brain’s processing capabilities and efficiency. Smart devices must respond quickly yet efficiently, and so they rely on processor cores that optimize energy consumption, memory access, and thermal performance.

Central processing units (CPUs) address computing tasks sequentially, whereas the artificial intelligence (AI) algorithms used for deep learning are based on neural networks, whose matrix operations are best handled in parallel. Graphics processing units (GPUs), which have hundreds of specialized cores working in parallel, are better suited to this workload.
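To make the contrast concrete, here is a minimal sketch of one neural network layer computed as a matrix-vector product, first one neuron at a time and then with the rows dispatched to a pool of workers. The function names, the weights, and the use of a thread pool are illustrative assumptions rather than anything taken from the article. Threads in Python will not reproduce a GPU's speedup; the point is only that each output neuron can be computed independently of the others.

```python
from concurrent.futures import ThreadPoolExecutor


def neuron_output(weights_row, inputs):
    """Weighted sum for one neuron: a single row of the matrix-vector product."""
    return sum(w * x for w, x in zip(weights_row, inputs))


def layer_sequential(weights, inputs):
    """CPU-style evaluation: compute one neuron after another."""
    return [neuron_output(row, inputs) for row in weights]


def layer_parallel(weights, inputs, workers=4):
    """Illustrative parallel evaluation: every row is an independent task.

    A GPU exploits the same independence across many cores; the thread pool
    here is only a stand-in to show the structure of the computation.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: neuron_output(row, inputs), weights))


# Hypothetical 3-neuron layer with 3 inputs (values chosen for illustration).
weights = [
    [1.0, 2.0, 3.0],
    [0.5, -1.0, 0.0],
    [2.0, 0.0, -2.0],
]
inputs = [1.0, 2.0, 3.0]

sequential_result = layer_sequential(weights, inputs)
parallel_result = layer_parallel(weights, inputs)
print(sequential_result)
print(parallel_result == sequential_result)
```

Because no row depends on the result of another, the work can be split across as many cores as there are output neurons, which is why hardware with many simple cores suits this kind of computation.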

The deep neural networks powering the explosive growth in AI are energy hungry. As a point of comparison, a neural network in 1988 had ~20,000 neurons; today that figure is 130 billion, and energy consumption has escalated accordingly. This requires a new type of computing hardware that can process AI algorithms efficiently and overcome the obstacles that limit continued growth: the physical constraints associated with Moore’s law, memory limitations, and the challenge of thermal performance in densely populated devices.

Inspired by human physiology, neuromorphic computing has the potential to be a disruptor.

Chips based on graph processing and manufactured by companies such as Graphcore and Cerebras are dedicated to neural networks. These GPUs, accelerators, neural engines, tensor processing units (TPUs), neural network processors (NNPs), intelligent processing units (IPUs), and vision processing units (VPUs) process multiple computational vertices and points simultaneously.

Mimicking the brain

In comparison, the human brain has 100 billion neurons and 100 to 1,000 synapses per neuron, which by that count comes to as many as 100 trillion synapses (some estimates run as high as a quadrillion). Yet its volume is equivalent to a 2-L bottle of water. The brain performs 1 billion calculations per second, using just 20 W of power. To achieve this level of computation using today’s silicon chips would require 1.5 million processors and 1.6 PB of high-speed memory. The hardware needed would also occupy two buildings, and it would take 4.68 years to simulate the equivalent of one day’s brain activity, while consuming 10 MWh of energy.
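The scale of that gap is easier to see with a little arithmetic. The sketch below uses only the figures quoted above (20 W, 4.68 years, 10 MWh) and rests on two assumptions that are not stated in the article: that the 10 MWh figure covers the whole simulation of one day of brain activity, and that a year has 365 days.

```python
# Figures quoted in the article.
BRAIN_POWER_W = 20.0      # power drawn by the brain
SIM_YEARS = 4.68          # time to simulate one day of brain activity
SIM_ENERGY_MWH = 10.0     # energy attributed to the simulation

# Assumptions made for this sketch only.
HOURS_PER_DAY = 24.0
DAYS_PER_YEAR = 365.0     # ignores leap years

# Energy the brain itself uses over one day, in kWh.
brain_energy_kwh = BRAIN_POWER_W * HOURS_PER_DAY / 1000.0

# Simulation energy in the same unit.
sim_energy_kwh = SIM_ENERGY_MWH * 1000.0

# How many times more energy the simulation needs than the brain.
energy_ratio = sim_energy_kwh / brain_energy_kwh

# How many times slower than real time the simulation runs.
slowdown = SIM_YEARS * DAYS_PER_YEAR

print(f"Brain energy for one day: {brain_energy_kwh:.2f} kWh")
print(f"Simulation uses about {energy_ratio:,.0f} times more energy")
print(f"Simulation runs about {slowdown:,.0f} times slower than real time")
```

Under these assumptions the brain uses roughly half a kilowatt-hour per day, so the silicon simulation is on the order of twenty thousand times less energy efficient and well over a thousand times slower than the biology it imitates.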

See here for the remainder of the article.