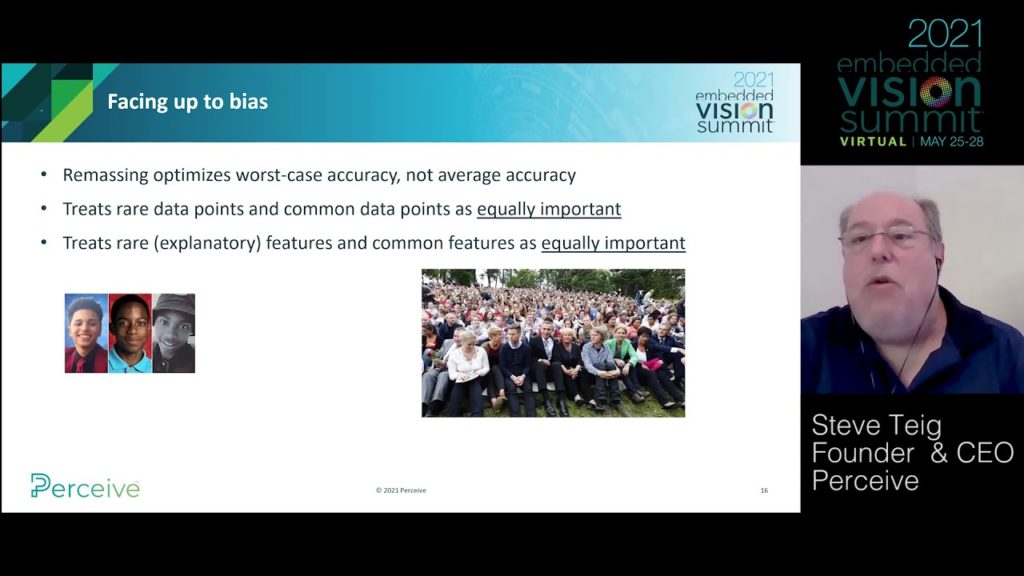

Steve Teig, CEO of Perceive, presents the “Facing Up to Bias” tutorial at the May 2021 Embedded Vision Summit.

Today’s face recognition networks identify white men correctly more often than white women or non-white people. Deploying these models can manifest racism, sexism, and other troubling forms of discrimination. Some publications also suggest that compressed models exhibit greater bias than uncompressed ones.

Remarkably, poor statistical reasoning bears as much responsibility for the underlying biases as social pathology does. Further, compression per se is not the source of bias; it just magnifies bias already produced by mainstream training methodologies. By illuminating the sources of statistical bias, we can train models in a more principled way, rather than just throwing more data at them, so that they are more discriminating rather than discriminatory.

See here for a PDF of the slides.