This blog post was originally published at Imagination Technologies’ and Visidon’s websites. It is reprinted here with the permission of Imagination Technologies and Visidon.

This blog post is the result of a collaboration between Visidon, headquartered in Finland, and Imagination, based in the UK. Visidon is recognised as an expert in algorithms for camera image enhancement and analysis, and Imagination has a series of world-beating neural network accelerators (NNAs) with performance up to 100 TOPS per core.

The problem tackled in this blog post is denoising images from conventional colour cameras. The solution is in two parts:

- Algorithms that remove the noise without damaging image detail.

- A high-performance convolution engine capable of running a trained neural network that takes a colour image as input and outputs a denoised colour image.

An example of a Visidon deep neural network

The process of denoising images has a long history. The way modern CMOS imagers work can be thought of as an array of photon counters. Photons arrive at the sensor at an average rate: fewer in dark regions with relatively high fluctuations, but more in brighter regions with relatively lower fluctuations, i.e., better signal-to-noise ratio. The fluctuations are the noise (with Poisson statistics) due to the physics of light and cannot, in general, be avoided. We can, however, remove the noise with further processing. The key thing is to do this without damaging the picture content.
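The relationship between brightness and signal-to-noise ratio described above can be checked with a small simulation. This is a minimal sketch using only the Python standard library; the photon counts (4 and 64) and the function names are illustrative assumptions, not figures from a real sensor.

```python
import math
import random


def poisson_sample(mean: float, rng: random.Random) -> int:
    """Draw one Poisson-distributed photon count (Knuth's method, fine for small means)."""
    threshold = math.exp(-mean)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def theoretical_snr(mean_photons: float) -> float:
    """Poisson noise has standard deviation sqrt(mean), so SNR = mean / sqrt(mean)."""
    return math.sqrt(mean_photons)


def measured_snr(mean_photons: float, n_pixels: int = 5000, seed: int = 0) -> float:
    """Estimate SNR (mean / standard deviation) from simulated pixel counts."""
    rng = random.Random(seed)
    counts = [poisson_sample(mean_photons, rng) for _ in range(n_pixels)]
    mu = sum(counts) / n_pixels
    variance = sum((c - mu) ** 2 for c in counts) / n_pixels
    return mu / math.sqrt(variance)


# Hypothetical mean photon counts for a dark and a bright region.
dark_snr = measured_snr(4.0)     # theory: sqrt(4) = 2
bright_snr = measured_snr(64.0)  # theory: sqrt(64) = 8
```

Under these assumptions the bright region collects sixteen times more photons but is only four times cleaner, which is why low-light images remain the hard case.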

Over the years many solutions have been proposed. These include simply blurring a picture slightly, sophisticated approaches with a bilateral filter, Beltrami filters based on manifold theory, scale-space Kalman filters, etc.

Two points make denoising interesting. First, noise is most obvious (to us) in flat regions of an image and less visible near edges. Second, edges are the features most likely to be blurred or otherwise damaged by many denoising algorithms. Damaged edges are as bad perceptually as noise!
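To make the edge problem concrete, the sketch below compares a simple blur with a bilateral filter on a one-dimensional noisy step. It illustrates the classical filters mentioned earlier, not Visidon's network; the signal values and the parameters (`radius`, `sigma_space`, `sigma_range`) are assumptions chosen for the example.

```python
import math


def box_blur(signal, radius):
    """Plain moving average: reduces noise but smears edges."""
    out = []
    for i in range(len(signal)):
        lo, hi = max(0, i - radius), min(len(signal), i + radius + 1)
        window = signal[lo:hi]
        out.append(sum(window) / len(window))
    return out


def bilateral_filter(signal, radius, sigma_space, sigma_range):
    """Average neighbours weighted by distance AND by similarity in value."""
    out = []
    for i, centre in enumerate(signal):
        total, weight_sum = 0.0, 0.0
        for j in range(max(0, i - radius), min(len(signal), i + radius + 1)):
            w_space = math.exp(-((i - j) ** 2) / (2 * sigma_space ** 2))
            w_range = math.exp(-((signal[j] - centre) ** 2) / (2 * sigma_range ** 2))
            total += w_space * w_range * signal[j]
            weight_sum += w_space * w_range
        out.append(total / weight_sum)
    return out


# A noisy step edge: a dark flat region followed by a bright flat region.
noisy_step = [10, 12, 9, 11, 10, 12, 200, 198, 201, 199, 202, 200]

blurred = box_blur(noisy_step, radius=2)
filtered = bilateral_filter(noisy_step, radius=2, sigma_space=2.0, sigma_range=10.0)

# Contrast across the edge (between samples 5 and 6) after each filter.
blur_edge_contrast = blurred[6] - blurred[5]
bilateral_edge_contrast = filtered[6] - filtered[5]
```

The moving average collapses the step of roughly 190 levels to about 38, while the bilateral filter keeps it almost intact because pixels on the far side of the edge receive a negligible range weight.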

Visidon has created a convolutional neural network model (shown at the top of the post) that achieves exactly the required objectives: removing noise while simultaneously preserving edges in colour images.

Imagination’s IMG Series3NX and IMG Series4 multi-core NNAs provide a high-performance compute solution when executing Visidon’s denoising network and, at the same time, are best-in-class for low power and low area.

An example set of results is shown in Figure 1. All images used in this work were 4,096 by 3,072 pixels. This image has bright white and blue lights on a dark night-time background; the original is shown in Figure 1a and the RMS error for each pixel (×100) in Figure 1b. The error is taken between a floating-point result from the network and a result generated by quantising the network to 16 bits and running it on Imagination’s Series3NX NNA.

Figure 1 (below) shows a particularly difficult image; nevertheless, the maximum difference in any 8-bit colour channel is +/-1. We also show crops of (c) the original noisy image, (d) the output from the floating-point network, (e) the output from the quantised network and (f) a result provided by Visidon’s floating-point implementation of the network. There is no colour distortion, the noise is effectively eliminated, and the edges remain undamaged.
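The two error measures used here are simple to compute. The sketch below is one plausible reading of them, assuming images are stored as lists of 8-bit (R, G, B) tuples and that the "x100" is a display scale factor applied to the per-pixel RMS error; the pixel values and function names are hypothetical.

```python
import math


def max_abs_channel_difference(reference, candidate):
    """Largest absolute difference over every pixel and colour channel."""
    return max(
        abs(ref_value - cand_value)
        for ref_pixel, cand_pixel in zip(reference, candidate)
        for ref_value, cand_value in zip(ref_pixel, cand_pixel)
    )


def per_pixel_rms_error(reference, candidate, scale=100.0):
    """RMS error across the colour channels of each pixel, multiplied by `scale`."""
    errors = []
    for ref_pixel, cand_pixel in zip(reference, candidate):
        squared = [(r - c) ** 2 for r, c in zip(ref_pixel, cand_pixel)]
        errors.append(scale * math.sqrt(sum(squared) / len(squared)))
    return errors


# Hypothetical 8-bit RGB pixels: floating-point output vs. 16-bit quantised output.
float_output = [(12, 40, 200), (0, 0, 0), (255, 128, 64)]
quantised_output = [(12, 41, 200), (0, 0, 1), (254, 128, 65)]

worst_case = max_abs_channel_difference(float_output, quantised_output)
error_map = per_pixel_rms_error(float_output, quantised_output)
```

A `worst_case` of 1 corresponds to the "+/-1 in any 8-bit colour channel" result, and `error_map` is the kind of per-pixel data that would be rendered as an error image.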

In the ten example images we have looked at, all the results follow a similar pattern. In the daylight scene shown in Figure 2, no errors beyond +/- 1 per pixel per colour channel occurred. Noise in this image is less obvious but we have chosen crops to demonstrate that the denoising does not create artefacts at edges of any colour.

In the next image (Figure 3), we show a brightly lit test chart. Again, there were no errors larger than +/-1, and the detailed crops show the absence of edge artefacts that is characteristic of Visidon’s algorithm.

The final image result (Figure 4) shows a test chart taken in low light, another very difficult image. Here, we clearly see the noise (the signal-to-noise ratio for data with Poisson statistics gets worse as the mean level decreases). In the detail of the original image (Fig. 4c) the severe colour noise is obvious in both the brighter and darker regions. This is a consequence of the uncorrelated fluctuations in the separate red, green, and blue channels producing random variations in colour (approximately Rician statistics in three dimensions). Visidon’s denoising algorithm eliminates the noise in the luma (brightness) and chroma (colour) whilst simultaneously preserving the edges. Remarkably, there is no discernible difference between the floating-point results from the network and the 16-bit quantised results from the network, and again the maximum difference between these two results is +/-1 at any pixel and any colour channel.

IMG Series3NX and IMG Series4 multi-core

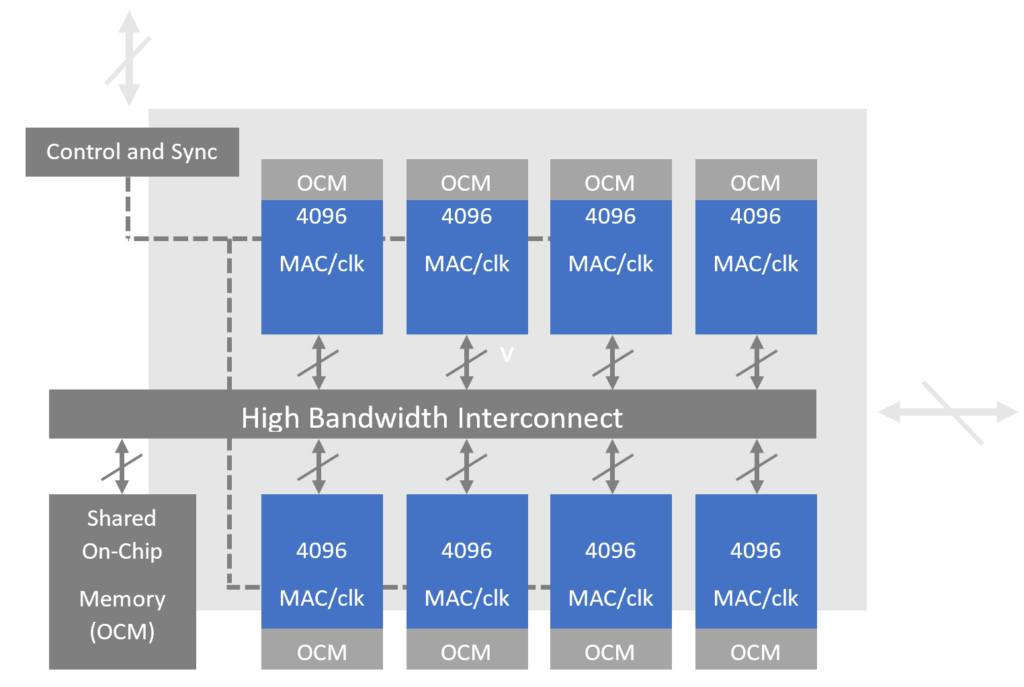

The IMG Series3NX NNA in Figure 5 has sixteen convolution engines, each of which can be configured as a 128-wide MAC (multiply-accumulate) engine at 16 bits or as a 256-wide MAC engine at 8 bits, giving a total of 4,096 MACs per clock cycle in the 8-bit configuration (2,048 at 16 bits).

The IMG 4NX-MC8 in Figure 6 is similar, with advanced architecture features, and comes in a range of multi-core versions, each core being an enhanced Series3NX. The 4NX-MC1 has a single core with 16 convolution engines capable of ~12 TOPS (1 TOPS = one million million operations per second). A 4NX-MC2 has two of these cores, etc.
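The MAC counts and the ~12 TOPS figure can be reproduced with a few lines of arithmetic. This sketch assumes the common convention that one MAC counts as two operations (a multiply and an add), takes the 1,500 MHz clock from the Table 1 caption, and uses the 8-bit configuration; the function names are illustrative.

```python
def macs_per_clock(engines: int, mac_width: int) -> int:
    """MACs completed per clock cycle across all convolution engines."""
    return engines * mac_width


def peak_tops(macs_per_cycle: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Peak tera-operations per second; ops_per_mac=2 is an assumed convention."""
    return macs_per_cycle * ops_per_mac * clock_hz / 1e12


macs_16bit = macs_per_clock(engines=16, mac_width=128)  # 2,048 MACs per clock
macs_8bit = macs_per_clock(engines=16, mac_width=256)   # 4,096 MACs per clock

# One core at 1,500 MHz in the 8-bit configuration.
single_core_tops = peak_tops(macs_8bit, clock_hz=1.5e9)  # about 12.3 TOPS
```

Under these assumptions a single core peaks at roughly 12.3 TOPS, consistent with the ~12 TOPS quoted for the 4NX-MC1.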

The Visidon denoising network, shown schematically at the top of the post, requires ~5 × 10¹¹ MACs per 4,096 by 3,072 image that is to be denoised. The performance of Imagination’s Series3NX, 4NX-MC1, 4NX-MC4 and 4NX-MC6 cores is shown in Table 1 below.

| Core | Inferences/s | Bandwidth/inference (MB) | Local OCM + Shared OCM (MB) |
| --- | --- | --- | --- |
| 3NX | 1.3 | 11000 | 1 + 0 |
| 4NX-MC1 | 1.7 | 11200 | 1 + 0 |
| 4NX-MC4 | 5.2 | 10600 | 2 + 4 |
| 4NX-MC6 | 9.5 | 9000 | 3 + 6 |

Table 1. Performance and configuration of Imagination NNA cores on the Visidon denoising network (3NX at 1,200 MHz, 4NX-MC at 1,500 MHz)
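The figures in Table 1 can be turned into per-second rates with some simple arithmetic. The sketch below is illustrative only: it assumes the approximate 5 × 10¹¹ MACs per image quoted above, reads "Bandwidth/inference (MB)" as the data moved per inference, and takes 1 GB = 1,000 MB.

```python
IMAGE_WIDTH, IMAGE_HEIGHT = 4096, 3072
MACS_PER_INFERENCE = 5e11  # approximate figure quoted in the text

# (inferences per second, MB transferred per inference), copied from Table 1.
TABLE_1 = {
    "3NX": (1.3, 11000),
    "4NX-MC1": (1.7, 11200),
    "4NX-MC4": (5.2, 10600),
    "4NX-MC6": (9.5, 9000),
}


def macs_per_pixel() -> float:
    """Average MACs the network spends on each pixel."""
    return MACS_PER_INFERENCE / (IMAGE_WIDTH * IMAGE_HEIGHT)


def sustained_tmacs(inferences_per_s: float) -> float:
    """Sustained tera-MACs per second implied by the inference rate."""
    return inferences_per_s * MACS_PER_INFERENCE / 1e12


def traffic_gb_per_s(inferences_per_s: float, mb_per_inference: float) -> float:
    """Memory traffic per second, assuming 1 GB = 1,000 MB."""
    return inferences_per_s * mb_per_inference / 1000


summary = {
    name: (sustained_tmacs(rate), traffic_gb_per_s(rate, mb))
    for name, (rate, mb) in TABLE_1.items()
}
```

On these assumptions the network spends close to 40,000 MACs on every pixel, and the 4NX-MC6 sustains about 4.75 tera-MACs per second.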

The performance results in Table 1 were generated with Imagination’s standard (available) toolchain. We avoided using prototype tools or tools available only in research. The 4NX-MC6 approaches 10 inferences per second at 1,500 MHz and is available at 16nm, 7nm and 5nm feature sizes.

The memory configurations are also shown in Table 1. For multi-core versions of 4NX, each core of sixteen convolution engines has its own local on-chip memory (OCM), and a shared OCM is available to all cores. This network is compute-bound and the results show a nearly linear speed-up with increasing cores. 4NX-MC6 achieves about 93% of the speed-up predicted by considering 4NX-MC1 scaled up by a factor of six (clock frequency and OCM also have an impact).
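The 93% figure follows directly from the inference rates in Table 1. A minimal sketch of the calculation, with a hypothetical helper name:

```python
def scaling_efficiency(multi_core_rate: float, single_core_rate: float, cores: int) -> float:
    """Measured rate divided by the rate expected from perfect linear scaling."""
    return multi_core_rate / (single_core_rate * cores)


# Inference rates from Table 1, with 4NX-MC1 as the single-core baseline.
mc6_efficiency = scaling_efficiency(9.5, 1.7, cores=6)  # about 0.93
mc4_efficiency = scaling_efficiency(5.2, 1.7, cores=4)  # about 0.76
```

The same calculation for the 4NX-MC4 gives a lower figure, which fits the note above that factors other than core count, such as the OCM configuration, also affect the measured rate.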

Conclusion

To summarise, this is a very interesting example of a deep-convolutional neural network that produces an image as output from an image as input.

The performance in terms of image quality is remarkable because denoising is a difficult problem when dealing with high-quality images. The fidelity of the results reflects the quality of Visidon’s algorithm and in particular their choice of network architecture and the way it has been trained. It also demonstrates very clearly that a quantised network, in this case at 16 bits, can give results near-identical to a floating-point network.

It is worth mentioning here that we did look at lower bit depths. The errors in most neural networks will increase a little when executed at 8 bits. Depending on the application, a small increase in error might be acceptable. In the context of high-quality image enhancement, however, even a very small error becomes visible to a human observer. Banding in flat regions was evident with 8-bit data (activations) and colour distortion was evident with 8-bit weights. Both artefacts are exquisitely visible to our sensitive eyes.
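Why 8-bit data produces banding can be seen by quantising a gentle gradient. The sketch below uses plain uniform quantisation over the range [0, 1] as an assumption; it is not the quantisation scheme of Imagination's toolchain, and the ramp values are made up for illustration.

```python
def quantise(value: float, bits: int) -> float:
    """Uniformly quantise a value in [0, 1] to the given bit depth."""
    levels = (1 << bits) - 1
    return round(value * levels) / levels


# A smooth, nearly flat ramp: 1,000 samples rising from 0.20 to 0.22.
ramp = [0.2 + 0.02 * i / 999 for i in range(1000)]

ramp_8bit = [quantise(v, 8) for v in ramp]
ramp_16bit = [quantise(v, 16) for v in ramp]

# Number of distinct output levels: few levels means visible bands.
distinct_8bit = len(set(ramp_8bit))
distinct_16bit = len(set(ramp_16bit))

# Worst-case rounding error introduced by each bit depth.
max_error_8bit = max(abs(a - b) for a, b in zip(ramp, ramp_8bit))
max_error_16bit = max(abs(a - b) for a, b in zip(ramp, ramp_16bit))
```

With 8 bits the thousand samples collapse onto only six levels, so the ramp turns into visible steps; with 16 bits every sample keeps its own level.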

Running the network with 16-bit data and 16-bit weights leaves no visible artefacts at all and differences are +/- 1 in any pixel and colour in the output images.

At up to 10 inferences per second on 4,096 by 3,072 RGB images, running Visidon’s denoising network, the performance of Imagination’s NNA demonstrates its capability beyond conventional AI applications and its applicability to image enhancement.

Tim Atherton

Director of Research in AI, Imagination Technologies

James Imber

Research Manager, IMG Labs, Imagination Technologies