Erik Chelstad, CTO and co-founder of Observa, presents the “Maintaining DNN Accuracy When the Real World is Changing” tutorial at the May 2021 Embedded Vision Summit.

We commonly train deep neural networks (DNNs) on existing data and then use the trained model to make predictions on new data. Once trained, these predictive models approximate a static mapping from their inputs to their predicted outputs. In many applications, however, the trained model is applied to data that changes over time, and in these cases its predictive performance degrades as the incoming data drifts away from what the model was trained on.
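One simple way to notice this degradation in a deployed model is to track accuracy over a sliding window of recent predictions and compare it to the accuracy measured at deployment time. The sketch below assumes that ground-truth labels eventually become available for at least some predictions. The class name `RollingAccuracyMonitor` and its `window` and `tolerance` parameters are hypothetical illustrations, not something taken from the talk.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Hypothetical helper: tracks accuracy over the most recent `window`
    labeled predictions and flags a drop below the deployment baseline.

    Assumes delayed ground-truth labels are available; `window` and
    `tolerance` are illustrative defaults, not values from the talk.
    """

    def __init__(self, baseline_accuracy: float, window: int = 500, tolerance: float = 0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # deque with maxlen silently discards the oldest entry when full.
        self.hits = deque(maxlen=window)

    def update(self, predicted, actual) -> None:
        # Record 1 for a correct prediction, 0 for an error.
        self.hits.append(1 if predicted == actual else 0)

    def accuracy(self) -> float:
        return sum(self.hits) / len(self.hits) if self.hits else float("nan")

    def degraded(self) -> bool:
        # Only decide once the window is full, so a handful of early
        # errors cannot trigger an alarm on their own.
        if len(self.hits) < self.hits.maxlen:
            return False
        return self.accuracy() < self.baseline - self.tolerance
```

For example, `RollingAccuracyMonitor(0.92, window=1000, tolerance=0.05)` would raise a flag once accuracy over the last 1,000 labeled predictions falls below 0.87. A windowed metric is used rather than a cumulative one because a cumulative average is dominated by old data and reacts slowly to recent change.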

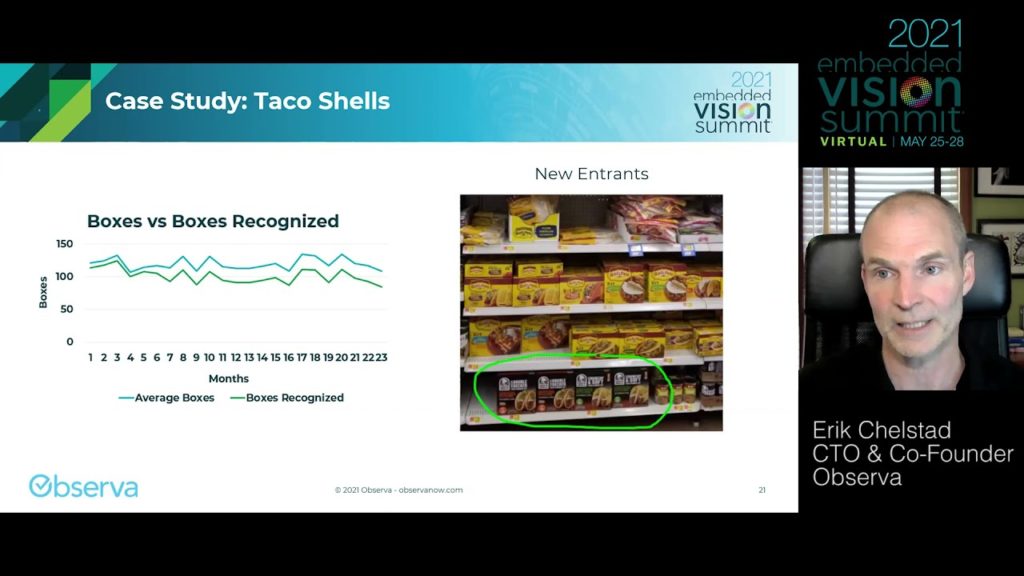

In this talk, Chelstad introduces the problem of concept drift in deployed DNNs. He discusses the types of concept drift that occur in the real world, from small variations within the predicted classes all the way to the appearance of a new, previously unseen class. He also discusses approaches to recognizing these changes and to identifying the point at which it becomes necessary to update the training dataset and train a new model. The talk concludes with a real-world case study.
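As a rough illustration of label-free drift checks (not necessarily the approaches presented in the talk), the sketch below combines two common heuristics. The first compares the distribution of predicted classes in a recent window against a reference window using the Population Stability Index (PSI), which targets shifts among known classes. The second measures the share of low-confidence predictions, a crude hint that inputs from an unseen class may be arriving. All function names, the 0.5 confidence threshold, and the 0.2 limits are assumptions chosen for illustration.

```python
import math
from collections import Counter
from typing import Iterable, List, Sequence


def class_distribution(labels: Iterable[str], classes: Sequence[str], eps: float = 1e-6) -> List[float]:
    """Fraction of predictions per known class, smoothed by `eps` so no bin is zero.
    Labels outside `classes` are ignored."""
    counts = Counter(labels)
    total = sum(counts[c] for c in classes)
    return [(counts[c] + eps) / (total + eps * len(classes)) for c in classes]


def population_stability_index(reference: Sequence[float], current: Sequence[float]) -> float:
    """PSI = sum over bins of (cur - ref) * ln(cur / ref); 0 means identical distributions."""
    return sum((c - r) * math.log(c / r) for r, c in zip(reference, current))


def low_confidence_rate(max_scores: Sequence[float], threshold: float = 0.5) -> float:
    """Share of predictions whose top class score is below `threshold` (assumed value)."""
    return sum(s < threshold for s in max_scores) / len(max_scores) if max_scores else 0.0


def should_retrain(ref_labels: Sequence[str], cur_labels: Sequence[str],
                   cur_max_scores: Sequence[float], classes: Sequence[str],
                   psi_limit: float = 0.2, low_conf_limit: float = 0.2) -> bool:
    """Hypothetical trigger: flag retraining if the predicted-class mix has shifted
    (PSI above `psi_limit`) or too many predictions are low-confidence."""
    psi = population_stability_index(
        class_distribution(ref_labels, classes),
        class_distribution(cur_labels, classes),
    )
    return psi > psi_limit or low_confidence_rate(cur_max_scores) > low_conf_limit
```

The PSI limit of 0.2 follows a widely used rule of thumb rather than anything stated in the talk; in practice both limits would be tuned on historical data, and a flag would typically prompt collecting and labeling new samples before retraining.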
