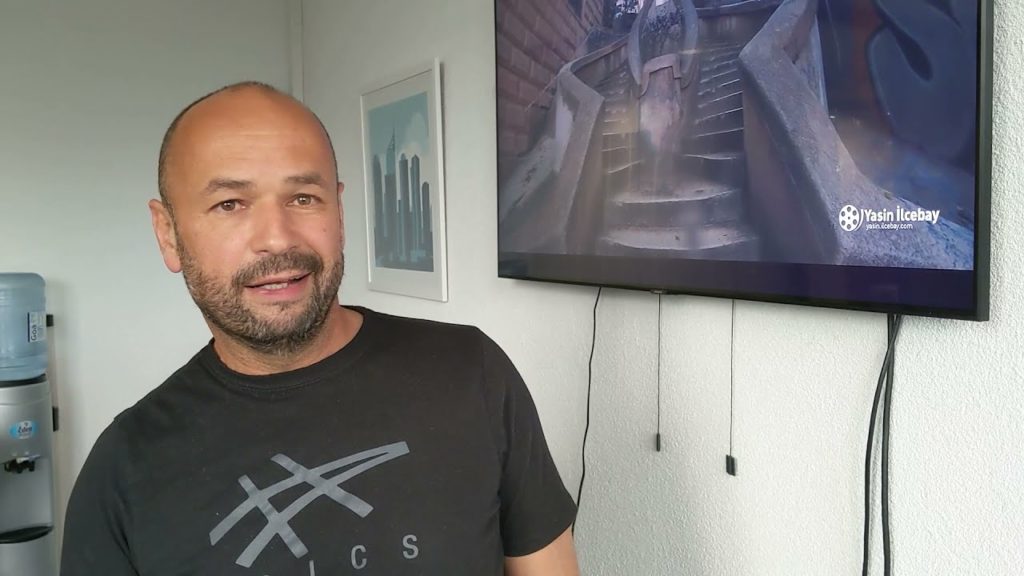

Zafer Diab, Director of Product Marketing at Synaptics, demonstrates the company’s latest edge AI and vision technologies and products at the 2021 Embedded Vision Summit. Specifically, Diab demonstrates real-time video post-processing using machine learning.

Advances in AI processing capabilities on edge devices are enabling significant improvements in video scaling and post-processing, compared to what had been possible with the traditional scalers integrated in SoCs. These advances allow scaling and post-processing to be theme-based: scaling for sports content can be tuned for high-motion scenes, for example, while scaling for video conferencing can be optimized for mostly static scenes with moving faces.

Synaptics SoCs integrate an internally developed machine learning engine called QDEO.ai, which performs super-resolution scaling that takes the theme of the video into consideration. This demonstration shows a side-by-side comparison of video scaled by the traditional hardware scaler and video scaled by QDEO.ai. The quality improvement is most pronounced on lower-bitrate input video, such as video conferencing streams.