This blog post was originally published on Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

Our fundamental AI research is fueling wireless innovation by combining the strengths of machine learning with wireless domain expertise.

Artificial intelligence (AI) for wireless is already here, with applications in areas such as mobility management, sensing and localization, smart signaling, and interference management. Recently, Qualcomm Technologies prototyped an AI-enabled air interface and announced the Snapdragon X70 5G modem-RF, the world’s first 5G modem with a dedicated AI processor. These developments are possible thanks to our expertise in both wireless and machine learning (ML), built on more than a decade of foundational research.

It turns out that wireless and ML have complementary strengths, but it takes domain knowledge in both to create optimal wireless solutions. Existing wireless technology enables scalable and interpretable solutions, while ML algorithms perform well for complex tasks and generative processes.

Many wireless capabilities can be enhanced with AI, including power saving, channel estimation, positioning, MIMO detection, environmental sensing, beam management, and optimization. In our webinar “Bringing AI research to wireless communication and sensing,” we zoom in on our fundamental research in two areas: ML for improving communications (generative modelling and neural augmentation) and ML for enabling RF sensing (self-supervised and unsupervised learning for positioning).

AI is enhancing wireless communications

Channel models are central to our wireless design, especially for building and evaluating ML solutions. Classical channel models require cumbersome field measurements, contain hard-coded assumptions about propagation characteristics, and model only generic scenarios. Neural channel models, by contrast, can accurately match complex field-data distributions, can be sampled quickly for prototyping, and can be built from simple traces. Using neural augmentation, our MIMO-GAN (generative adversarial network) solution learns the 3GPP channel models precisely from simple traces, achieving a mean absolute error of less than -18.7 dB for channel gains and less than 3.6 ns for channel delays.

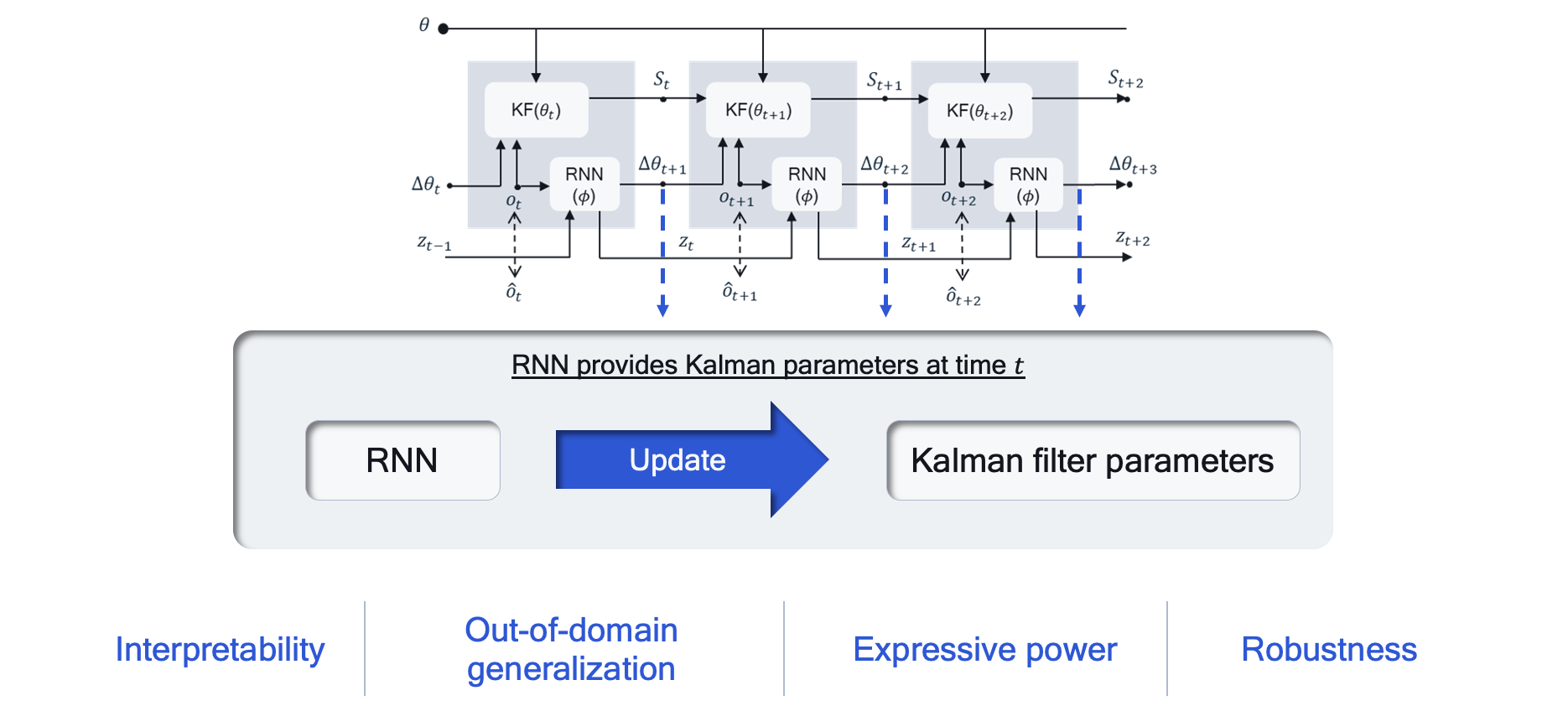

Communication channels are hard to estimate accurately given the many variations in propagation characteristics. Time-varying channels are typically tracked with classical Kalman filters, which are interpretable and perform well across arbitrary signal-to-noise ratios and pilot patterns. Their limitation, however, is that the filter parameters depend on the Doppler value, so a single Kalman filter should not be used for all Doppler values. Standalone ML solutions have limitations too: they do not generalize well and are not interpretable. We again apply neural augmentation, a concept that we believe provides many useful design guidelines, to create a neural-augmented Kalman filter. It outperforms both standalone approaches by combining their strengths, and it generalizes to unseen cases.
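To make the Doppler dependence concrete, here is a minimal sketch of a classical scalar Kalman filter tracking a channel coefficient. It assumes a real-valued, unit-power first-order autoregressive (AR(1)) channel whose coefficient follows the Jakes correlation J0(2π·f_D·T), with f_D the Doppler frequency and T the pilot spacing; a real channel is complex-valued and AR(1) is only a common approximation. All function names are hypothetical, and the `correction` argument is an illustrative hook where a trained network would refine each estimate. This is not Qualcomm's neural-augmented Kalman filter, only the classical baseline it builds on.

```python
import math
import random


def bessel_j0(x: float, terms: int = 30) -> float:
    """Zeroth-order Bessel function of the first kind, via its power series."""
    total, term = 0.0, 1.0
    for k in range(terms):
        if k > 0:
            term *= -(x / 2.0) ** 2 / (k * k)  # (-1)^k (x/2)^(2k) / (k!)^2
        total += term
    return total


def ar1_coefficient(doppler_hz: float, pilot_period_s: float) -> float:
    """Assumed Jakes-model correlation between consecutive channel samples."""
    return bessel_j0(2.0 * math.pi * doppler_hz * pilot_period_s)


def simulate_channel(a, n, noise_var, rng):
    """Unit-power AR(1) channel h_t = a*h_{t-1} + w_t, observed as y_t = h_t + v_t."""
    q = 1.0 - a * a  # process-noise variance that keeps E[h^2] = 1
    h, hs, ys = rng.gauss(0.0, 1.0), [], []
    for _ in range(n):
        h = a * h + rng.gauss(0.0, math.sqrt(q))
        hs.append(h)
        ys.append(h + rng.gauss(0.0, math.sqrt(noise_var)))
    return hs, ys


def kalman_track(ys, a, noise_var, correction=None):
    """Scalar Kalman filter tuned for AR coefficient `a` (i.e. one Doppler value).

    `correction(x, y)` is a hypothetical hook standing in for a trained network
    that adjusts each posterior estimate; None gives the purely classical filter.
    """
    q = 1.0 - a * a
    x, p = 0.0, 1.0  # prior mean and variance of the unit-power channel
    estimates = []
    for y in ys:
        x_pred, p_pred = a * x, a * a * p + q  # predict step
        k = p_pred / (p_pred + noise_var)      # Kalman gain
        x = x_pred + k * (y - x_pred)          # update step
        p = (1.0 - k) * p_pred
        if correction is not None:
            x += correction(x, y)
        estimates.append(x)
    return estimates


def mse(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b)) / len(a)


# Usage: a fast-fading channel (assumed 200 Hz Doppler, 1 ms pilot spacing).
rng = random.Random(0)
T, R = 1e-3, 0.1  # pilot period (s) and observation-noise variance
a_fast = ar1_coefficient(200.0, T)
hs, ys = simulate_channel(a_fast, 2000, R, rng)
raw = mse(hs, ys)                                                  # no filtering
matched = mse(hs, kalman_track(ys, a_fast, R))                     # tuned for 200 Hz
mismatched = mse(hs, kalman_track(ys, ar1_coefficient(10.0, T), R))  # tuned for 10 Hz
print(f"raw {raw:.3f}  matched {matched:.3f}  mismatched {mismatched:.3f}")
```

In this toy setting, the filter tuned for a 10 Hz Doppler smooths far too aggressively for a 200 Hz channel and ends up worse than using the raw pilot observations. That is the per-Doppler tuning problem described above, and the gap a learned correction would aim to close.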

Neural augmentation of Kalman filters offers the best of both worlds.

AI is enabling RF sensing

When we talk about Qualcomm AI Research’s work in enabling RF sensing, we distinguish between active positioning (with a communications device) and passive positioning (where access points alone are used to determine the position of a device-free person or object).

Active positioning is especially useful indoors and in other locations without a clear line of sight to global navigation satellite system (GNSS) satellites. Examples of applications of active positioning with RF sensing include indoor navigation, vehicular navigation, automated guided vehicle (AGV) tracking, and asset tracking. This technology has already been demonstrated in real-life scenarios, such as precise positioning for the factory of the future.

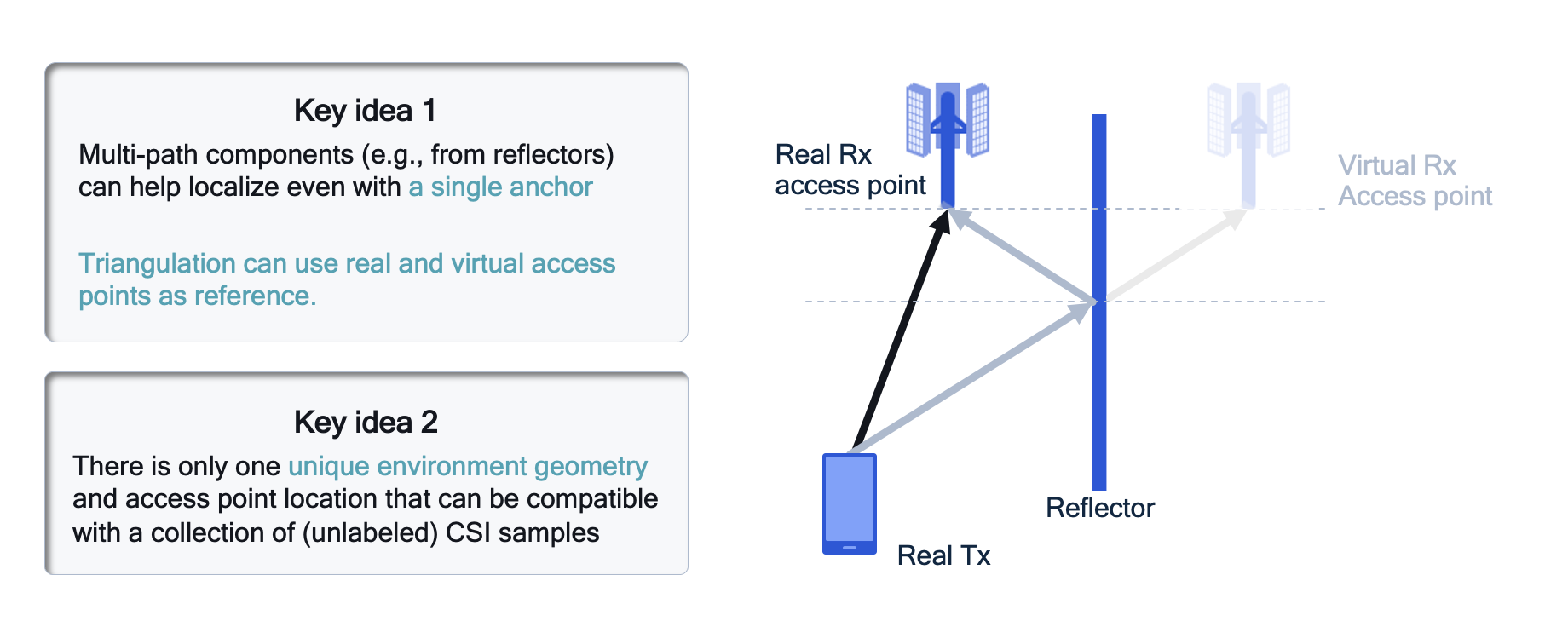

Current classical precise positioning methods, such as time difference of arrival (TDOA), do not require labels, but they are not very accurate in non-line-of-sight conditions and do not use multipath information. Current ML methods, such as RF fingerprinting (RFFP), are very accurate but require many labels and lack robustness when the environment changes. Our industrial precise positioning method, called Neural RF SLAM, combines the strengths of both: in our experiments with unlabeled channel state information (CSI), it achieves on average 43.4 cm accuracy for 90% of users. Using unlabeled data is key to making this precise positioning technology feasible at scale.
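For reference, the classical TDOA baseline mentioned above can be sketched as a small least-squares problem. Each time difference of arrival, multiplied by the speed of light, gives a range difference between the device's distance to an anchor and its distance to a reference anchor, and a Gauss-Newton solver fits a 2D position to those differences. The anchor layout, true position, and function names below are hypothetical, the measurements are noise-free, and only the direct path is modeled, which is exactly why such methods degrade in non-line-of-sight conditions and ignore multipath. This is not Neural RF SLAM.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tdoa_residuals_and_jacobian(p, anchors, range_diffs):
    """Residuals r_i = (|p - a_i| - |p - a_0|) - d_i for i >= 1, plus Jacobian rows."""
    x, y = p
    x0, y0 = anchors[0]
    dist0 = math.hypot(x - x0, y - y0)
    residuals, jac = [], []
    for (xi, yi), d in zip(anchors[1:], range_diffs):
        dist_i = math.hypot(x - xi, y - yi)
        residuals.append((dist_i - dist0) - d)
        jac.append(((x - xi) / dist_i - (x - x0) / dist0,
                    (y - yi) / dist_i - (y - y0) / dist0))
    return residuals, jac


def solve_tdoa(anchors, range_diffs, initial, iterations=20, tol=1e-9):
    """Gauss-Newton least-squares fit of a 2D position to TDOA range differences."""
    x, y = initial
    for _ in range(iterations):
        r, J = tdoa_residuals_and_jacobian((x, y), anchors, range_diffs)
        # Normal equations (J^T J) delta = -J^T r, solved in closed form for 2x2.
        a11 = sum(jx * jx for jx, _ in J)
        a12 = sum(jx * jy for jx, jy in J)
        a22 = sum(jy * jy for _, jy in J)
        b1 = -sum(jx * ri for (jx, _), ri in zip(J, r))
        b2 = -sum(jy * ri for (_, jy), ri in zip(J, r))
        det = a11 * a22 - a12 * a12  # zero only for degenerate anchor geometry
        dx = (a22 * b1 - a12 * b2) / det
        dy = (a11 * b2 - a12 * b1) / det
        x, y = x + dx, y + dy
        if math.hypot(dx, dy) < tol:
            break
    return x, y


# Usage: four anchors on a 10 m square, device at an assumed true position.
anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
true_pos = (3.0, 4.0)
dist = [math.dist(true_pos, a) for a in anchors]
tdoas = [(di - dist[0]) / SPEED_OF_LIGHT for di in dist[1:]]  # seconds, vs anchor 0
range_diffs = [t * SPEED_OF_LIGHT for t in tdoas]             # metres
estimate = solve_tdoa(anchors, range_diffs, initial=(5.0, 5.0))
print(estimate)
```

No labels are needed, only anchor positions and timing. A reflected path, however, would inflate one of the range differences and bias the fit, which is the weakness that learning from CSI aims to address.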

With enough unlabeled CSI samples, we can learn the geometry of the environment without labels.

Passive positioning with RF sensing also has a variety of use cases across industries, from touchless device control to presence detection and sleep monitoring. Existing solutions often perform poorly in real-world scenarios or are infeasible to deploy at scale. Our solution, called WiCluster, is the first weakly supervised passive positioning technique, and it addresses these deployment challenges: it works in strong non-line-of-sight conditions and across multiple floors, and it requires only a few labels. For example, in a work environment with offices and conference rooms, WiCluster achieved precise positioning with mean errors of less than 1.1 m and 2.1 m, respectively, and its test performance was generally comparable to supervised training that relies on more expensive labeling.

We’re excited to highlight our state-of-the-art research in wireless communication and RF sensing, which overcomes the challenges of current methods. Ultimately, this will lead to better user experiences, both through improved communications and through devices that better understand their environment.

Arash Behboodi

Senior Staff Engineer and Manager, Qualcomm Technologies Netherlands B.V.

Daniel Dijkman

Principal Engineer, Qualcomm Technologies Netherlands B.V.