This blog post was originally published at STMicroelectronics’ website. It is reprinted here with the permission of STMicroelectronics.

The STM32N6 is our upcoming general-purpose microcontroller with our Neural-Art Accelerator, a Neural Processing Unit. It got a lot of attention at Embedded World. Hence, we sat down with Miguel Castro, who talked to attendees about the device.

The ST Blog: Could you introduce yourself?

Miguel Castro: My name is Miguel Castro, and I am responsible for the Product and Architecture teams of ST’s AI solutions Business Line in the Microcontrollers and Digital ICs Group. I had the privilege to present the live demo of our first generation of AI hardware accelerated STM32 microcontrollers to our major customers and partners, and I am now excited to share their feedback with you.

Miguel Castro talking about the STM32N6

What did we show at Embedded World 2023, and what is outstanding in this new STM32N6?

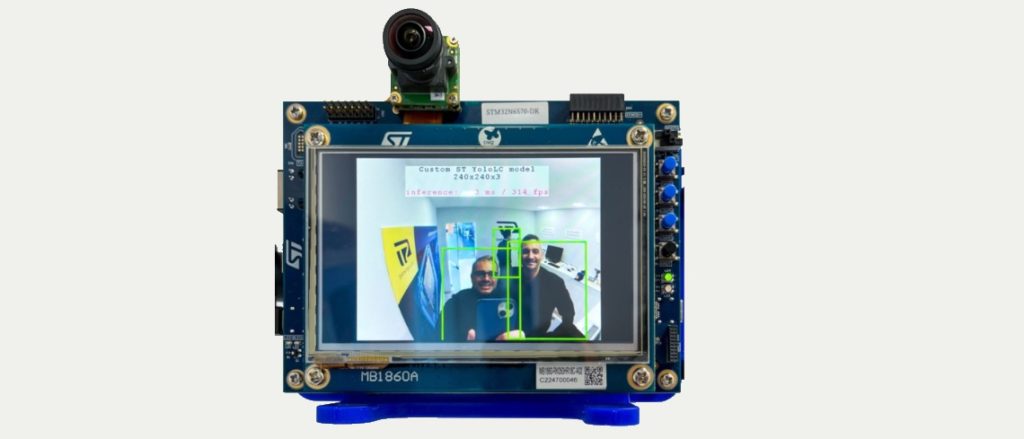

During Embedded World 2023, we privately showed a demo running an ST custom YOLO derivative neural network running on our STM32H747 (Cortex-M7 at 480MHz) discovery kit. This neural network can detect and locate multiple people in every single frame. It is a common type of Edge AI workload requested by many customers and is a commonly used reference to highlight device performance. We also ran the exact same neural network on our upcoming STM32N6 discovery board. The side-by-side demonstration showed the STM32N6 handled 75x more frames than the STM32H7 while running at a max frequency less than 2x that of the STM32H7.

The outstanding efficiency and performance of the STM32N6 are possible thanks to our Neural-Art Accelerator™, an ST-developed Neural Processing Unit. And while we didn’t show this in the demo, the STM32N6 infers 25x faster compared to our STM32MP1 microprocessor – a dual Cortex-A7 running at 800MHz. This performance creates a lot of new possibilities by bringing microprocessor-class performance to Edge AI workloads at the cost and power consumption of a general-purpose microcontroller! And that’s without taking into account the new IPs and peripherals we’ve added that have not previously been seen in a general-purpose microcontroller. These include a MIPI CSI camera, a machine-vision image signal processor (ISP), an H.264 video encoder, and a Gigabit Ethernet controller with Time-Sensitive Networking (TSN) endpoint support.

What were the most frequently asked questions at the show?

Customers’ most-asked question at the show was about the product’s mission profile. Many wondered why, in spite of the STM32N6’s GOPS capabilities, it did not need any passive cooling, as most existing products require passive cooling or a heat sink. The answer is that the STM32N6 is, first, a general-purpose STM32 product and not “limited to” operating as an Edge AI chip. As a result, its mission profile meets all the requirements of our industrial customers, including the ability to operate in high-temperature environments. The efficiency of the design, the architecture, and the ST Neural-ART NPU are ST’s secret recipe to this performance at this cost and power profile.

The second-most-common question was about the difference in response time between the displays (camera preview) of the two demos. The answer is that to perform the people detection and localization task, the STM32H7 needed almost all of its processing capabilities and had few resources left to focus on screen refresh. Moreover, there was little overhead to react when people were moving quickly in front of the camera. In contrast, the STM32N6 board display was super smooth and responsive and could perfectly follow individuals’ movements. Why? Because the STM32N6 redirects its AI compute task to the ST Neural-ART Accelerator™ and its preview functions to the STM32N6’s Machine Vision pipeline, leaving the Cortex-M with the flexibility to handle other tasks.

And how did customers approach the STM32N6, and did they inquire about working with it?

Yes! A frequent point of inquiry from partners and customers touched on the availability of an ecosystem to support the new ST Neural Processing Unit. The answer is simple: We’ve had the STM32Cube.AI available to optimally map neural networks on any STM32 since we launched it in 2019. For ST, the STM32N6 is not an exception. The tool automatically maps all supported operations and layers to the neural processing unit to maximize the efficiency of the inferences.

Moreover, for more advanced users, we can handle all customer-specific requests for optimization because the hardware, the software, and the tools are all developed by ST. As a result, we have no blind spots in how they all work together and can optimize for it.

The next milestone in the product’s release is to add the STM32N6 discovery board to the STM32Cube.AI developer cloud board farm that we introduced in January. This will allow anybody to test the result of inferences from their neural network onto the new chip without requiring that they have one on their desk and without needing to install anything specific ST environment on their computer.

STM32Cube.AI Developer Cloud

At this point in the conversation, what did we tell customers when they asked about availability?

Everyone at Embedded World wanted to know about availability. However, our priority isn’t to rush the mass market launch. Instead, we are focusing on a set of pre-selected lead customers who have committed to helping us demonstrate the wide spectrum of possibilities of this new chip. The STM32N6 brings many innovations, and because of this, we are working with more lead customers than usual. Why? The new IPs enable more advanced applications that can be more complex and require different skills. We’re bringing the best support to these lead customers to make sure they qualify our product for their applications. We will share more details in the coming months, so stay tuned!

Did customers share any concerns or things that potentially trouble them at this junction in the history of AI at the edge?

Today, customers are really interested in our long-term vision, that’s why our roadmap identifies a proliferation of Edge AI acceleration on the STM32 MCU and MPU portfolio over time. Drilling down, we also have a roadmap for our AI accelerators that shows many significant advances in performance and energy efficiency. Without sharing too much, we’ve shared papers and presentations at the most important tech conferences recently, including at the ISSCC in San Francisco in February 2023. It might give you some hints on what the future may hold. ST’s engineers and researchers are working hard to deliver the best Edge AI hardware, software, and tools to our customers. In addition, through regular communication with customers, they can influence our roadmaps and make sure that what is coming enables them to disrupt their markets.

Since we started working on Edge AI applications in 2017, we have supported, collaborated, and co-designed applications with many customers. From this effort, we have a detailed understanding of many of their applications already in production, and we can see the outlook for Edge AI market adoption will continue to grow. We are looking forward to seeing the tsunami that these AI-accelerated products will bring!

What can people do today to familiarize themselves with the ST AI ecosystem?

They can grab STM32Cube.AI, try our new Developer Cloud, or contact us at [email protected].