This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

We did the math: And here’s the cost of today’s AI Big Bang

As indicated in our whitepaper, The Future of AI is Hybrid (part 1): Unlocking the generative AI future with on-device and hybrid AI, a main motivation for on-device and hybrid artificial intelligence (AI) is cost. That cost is driven by the increasing size of AI models, the cost of running these models on cloud resources, and the increasing use of AI across devices and applications. Let’s put that into perspective.

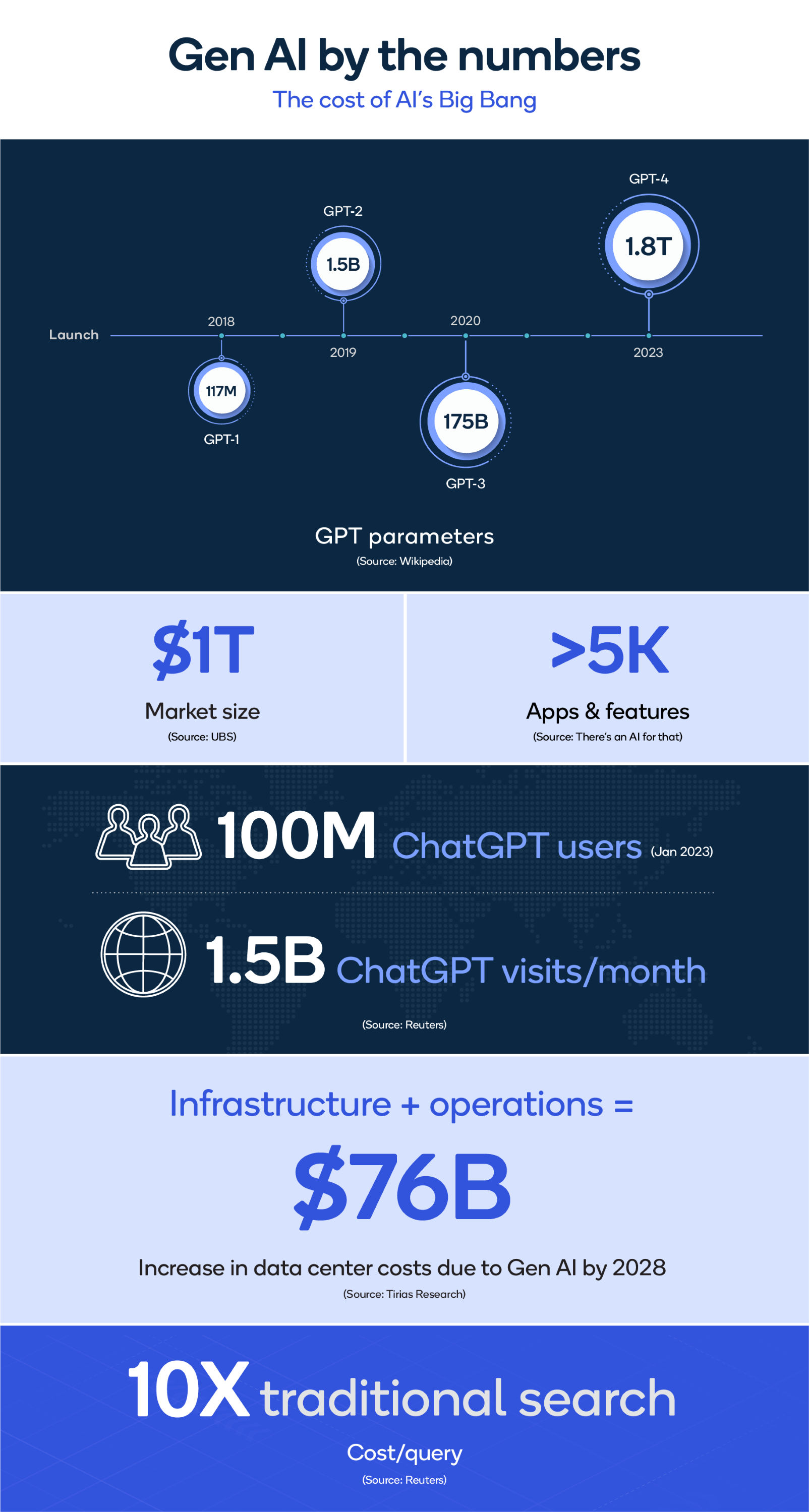

Foundation models, such as general-purpose large language models (LLMs) like GPT-4 and PaLM 2, have achieved unprecedented levels of language understanding, generation capabilities, and world knowledge. Most of these models are quite large and are growing rapidly. OpenAI launched GPT-1 in 2018, followed by GPT-2, GPT-3 and GPT-4 in 2019, 2020 and 2023, respectively.

Over that period, the number of parameters in the GPT models grew from 117 million to 1.5 billion, 175 billion and approximately 1.8 trillion (an estimate for GPT-4).(1) This is an unprecedented growth rate.
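To put that growth in numbers, the short Python sketch below computes the total and the compound annual growth in parameter count from GPT-1 to GPT-4, using only the figures quoted above. It assumes five years between the 2018 and 2023 releases and treats the 1.8 trillion GPT-4 figure as the estimate it is.

```python
# Parameter counts quoted in the text; the GPT-4 figure is an estimate, not an official number.
PARAMS = {
    "GPT-1 (2018)": 117e6,
    "GPT-2 (2019)": 1.5e9,
    "GPT-3 (2020)": 175e9,
    "GPT-4 (2023, est.)": 1.8e12,
}


def growth_factor(start: float, end: float) -> float:
    """Total multiplicative growth between two parameter counts."""
    return end / start


def annual_growth_factor(start: float, end: float, years: float) -> float:
    """Compound annual growth factor: the constant yearly multiplier
    that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years)


total = growth_factor(PARAMS["GPT-1 (2018)"], PARAMS["GPT-4 (2023, est.)"])
# Assumption: 2018 to 2023 is treated as exactly five years.
yearly = annual_growth_factor(PARAMS["GPT-1 (2018)"], PARAMS["GPT-4 (2023, est.)"], years=5)

print(f"Total growth 2018-2023: about {total:,.0f}x")
print(f"Compound annual growth: about {yearly:.1f}x per year")
```

With these inputs it reports total growth of about 15,385x, or roughly 6.9x per year, which is what "unprecedented" means here in concrete terms.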

Although state-of-the-art models continue to grow rapidly in size, another trend is toward much smaller models that still provide high-quality outputs. For example, Llama 2 with 7 or 13 billion parameters performs very well against much bigger models on generative AI benchmarks.(2) Running these smaller models in the cloud will help reduce the cloud resources required.

The cost of cloud computing to ISPs

The current cloud-based computing architecture for LLM inference leads to higher operating costs for internet search providers (ISPs), big and small. Consider a future in which internet search is augmented by generative AI LLMs such as GPT-3, with its 175 billion parameters. Generative AI searches can deliver a much better user experience and better results, but according to a report by Reuters, the cost per query is estimated to be 10 times that of a traditional search, if not more.(3)

With more than 10 billion search queries per day today, even if LLM-based searches account for only a small fraction of queries, the incremental cost could reach several billion dollars annually.(4)
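The arithmetic behind an estimate like this is simple to sketch. The Python example below multiplies daily query volume by the share answered with an LLM and by the extra cost of each LLM query over a traditional one. Only the 10 billion queries per day and the roughly 10x cost multiplier come from the text; the 10% LLM share and the $0.002 baseline cost per traditional query are hypothetical placeholders, not reported figures.

```python
DAYS_PER_YEAR = 365


def incremental_annual_cost(
    queries_per_day: float,
    llm_share: float,
    baseline_cost_per_query: float,
    llm_cost_multiplier: float,
) -> float:
    """Extra yearly spend when `llm_share` of all queries cost
    `llm_cost_multiplier` times the baseline instead of the baseline."""
    if not 0.0 <= llm_share <= 1.0:
        raise ValueError("llm_share must be between 0 and 1")
    llm_queries_per_day = queries_per_day * llm_share
    # Only the cost above the baseline is incremental.
    extra_cost_per_query = baseline_cost_per_query * (llm_cost_multiplier - 1.0)
    return llm_queries_per_day * extra_cost_per_query * DAYS_PER_YEAR


# 10 billion queries/day and the ~10x multiplier are from the text;
# the 10% share and $0.002 baseline cost are hypothetical placeholders.
cost = incremental_annual_cost(10e9, 0.10, 0.002, 10.0)
print(f"Incremental cost: about ${cost / 1e9:.1f} billion per year")
```

With these placeholder inputs it prints about $6.6 billion per year. Because the result scales linearly with each input, halving the LLM share or the baseline cost halves the total.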

That cost comes from expensive servers accelerated by graphics processing units (GPUs) and tensor processing units (TPUs), the infrastructure needed to support these high-performance servers, and the energy required to run them. According to TIRIAS Research, data center infrastructure and operating costs for generative AI could exceed $76 billion by 2028.(5) There is currently no effective business model for passing this cost on to consumers, so either new business models are required or the costs need to be reduced significantly.

The cost of increased use

Use of these models is also increasing exponentially. Generative AI models, such as GPT and Stable Diffusion, can already create new and original content like text, images, video, audio, or other data from simple prompts. Future generative AI models will be able to create complete movies, gaming environments, and even metaverses.

These models are disrupting traditional methods of search, content creation, and recommendation systems — offering significant enhancements in utility, productivity and entertainment with use cases across industries, from the commonplace to the creative.

Architects and artists can explore new ideas, while engineers can create code more efficiently. Virtually any field that works with words, images, video, and automation can benefit.

ChatGPT has captured our imagination and curiosity, becoming the fastest-growing app in history. It reached more than 100 million active users in January 2023, just two months after launch, and now draws more than 1.5 billion monthly visits, making OpenAI's site one of the 20 most-visited websites in the world, according to Reuters.(6)

Generative AI is developing so quickly that innovation is becoming difficult to keep up with. According to a major aggregator site, more than 5,000 generative AI apps and features are available.(7) AI is having a Big Bang moment, akin to the launch of television, the World Wide Web, or the smartphone. And this is just the beginning.

UBS estimates that the market ChatGPT operates in is worth $1 trillion across the entire ecosystem.(8)

The cost-efficient path to reaching AI’s full potential

The most efficient solution to reduce the tremendous costs of running generative AI in the cloud is to move models to edge devices. Transitioning models to the edge not only reduces the stress on the cloud infrastructure, but it also reduces execution latency while increasing data privacy and security.

AI models with more than 1 billion parameters are already running on phones with performance and accuracy levels similar to those of the cloud, and models with 10 billion parameters or more are slated to run on devices in the coming months. For example, at Mobile World Congress (MWC) 2023, Qualcomm Technologies demonstrated Stable Diffusion running completely on a smartphone powered by a Snapdragon 8 Gen 2 mobile platform.
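Parameter count matters on a phone largely because every weight must be stored in, and moved through, limited device memory. A rough, weights-only estimate in Python shows why model optimization such as low-bit quantization is central to on-device AI. The bit widths are illustrative assumptions rather than the configuration used in the demonstration, and the estimate ignores activations and runtime overhead.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate storage for model weights alone, in gigabytes
    (1 GB = 1e9 bytes). Ignores activations and runtime overhead."""
    bytes_per_weight = bits_per_weight / 8
    return num_params * bytes_per_weight / 1e9


# Illustrative model sizes from the text and common (assumed) precisions.
for params in (1e9, 10e9):
    for bits in (32, 16, 8, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{params / 1e9:>4.0f}B params @ {bits:>2}-bit: {gb:5.1f} GB")
```

At 4-bit precision, for example, a 1 billion parameter model needs about 0.5 GB for its weights and a 10 billion parameter model about 5 GB, compared with 4 GB and 40 GB at 32-bit.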

Over time, advances in model optimization combined with increased on-device AI processing capabilities will allow many generative AI applications to run on the edge. In a hybrid AI solution, distributing AI processing between the cloud and devices will allow generative AI to scale and reach its full potential — on-device AI processing and cloud computing will complement each other.
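The text does not spell out how work is divided between the cloud and devices, so the following Python sketch shows only one simple, hypothetical routing policy: run a request on the device when the model's weights fit an assumed device memory budget, and fall back to the cloud otherwise or when the task needs cloud-only resources. The `Request`, `DeviceProfile` and `route` names, the 4 GB budget and the `needs_fresh_web_data` flag are all illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    DEVICE = "device"
    CLOUD = "cloud"


@dataclass
class Request:
    model_params: float          # parameters in the model the request needs
    bits_per_weight: int         # precision the model is deployed at
    needs_fresh_web_data: bool   # hypothetical flag: task requires cloud-side data


@dataclass
class DeviceProfile:
    memory_budget_gb: float      # assumed memory an app may devote to model weights


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Weights-only memory estimate in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def route(request: Request, device: DeviceProfile) -> Target:
    """Hypothetical device-first policy: use the device when the model fits
    its memory budget, otherwise (or for cloud-only tasks) use the cloud."""
    if request.needs_fresh_web_data:
        return Target.CLOUD
    if weight_memory_gb(request.model_params, request.bits_per_weight) <= device.memory_budget_gb:
        return Target.DEVICE
    return Target.CLOUD


phone = DeviceProfile(memory_budget_gb=4.0)  # assumed budget, not a real device spec
print(route(Request(1e9, 4, False), phone))    # 0.5 GB of weights fits: prints Target.DEVICE
print(route(Request(175e9, 4, False), phone))  # 87.5 GB of weights: prints Target.CLOUD
```

A real hybrid system would likely also weigh latency, battery, connectivity and privacy; this sketch keeps only the memory check to make the device-first idea concrete.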

The hybrid AI approach is applicable to virtually all generative AI applications and device segments — including phones, laptops, extended reality headsets, vehicles and the internet of things. The approach is crucial for generative AI to meet enterprise and consumer needs globally.

For more information, check out previous OnQ blog posts on how on-device and hybrid AI are allowing AI to scale, and on how Qualcomm Technologies' on-device leadership is enabling the global ecosystem for hybrid AI.

References

1. GPT-4. Wikipedia. Retrieved July 25, 2023, from https://en.wikipedia.org/wiki/GPT-4.

2. Introducing Llama 2/Inside the Model/Benchmarks. Meta. Retrieved July 25, 2023, from https://ai.meta.com/llama/.

3. Dastin, L., et al. (Feb. 22, 2023). Focus: For tech giants, AI like Bing and Bard poses billion-dollar search problem. Reuters. Retrieved July 19, 2023, from https://www.reuters.com/technology/tech-giants-ai-like-bing-bard-poses-billion-dollar-search-problem-2023-02-22/.

4. Morgan Stanley. (Feb. 2023). How Large are the Incremental AI Costs.

5. McGregor, Jim. (May 12, 2023). Generative AI Breaks The Data Center: Data Center Infrastructure And Operating Costs Projected To Increase To Over $76 Billion By 2028. Forbes. Retrieved July 25, 2023, from https://www.forbes.com/sites/tiriasresearch/2023/05/12/generative-ai-breaks-the-data-center-data-center-infrastructure-and-operating-costs-projected-to-increase-to-over-76-billion-by-2028/?sh=f6d1b797c15e.

6. Hu, Krystal. (Feb. 2, 2023). ChatGPT sets record for fastest-growing user base – analyst note. Reuters. Retrieved July 23, 2023, from https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01/.

7. There’s an AI for that. Retrieved July 25, 2023, from https://theresanaiforthat.com/.

8. Garfinkle, Alexandra. (Feb. 2, 2023). ChatGPT on track to surpass 100 million users faster than TikTok or Instagram: UBS. Yahoo! News. Retrieved July 23, 2023, from https://news.yahoo.com/chatgpt-on-track-to-surpass-100-million-users-faster-than-tiktok-or-instagram-ubs-214423357.html.

Pat Lawlor

Director, Technical Marketing, Qualcomm Technologies