This blog post was originally published at Outsight’s website. It is reprinted here with the permission of Outsight.

This article explores the capabilities and limitations of cameras, radar and LiDAR, to provide a clear understanding of why LiDAR has emerged as a strong contender in the computer vision tech race. It covers the technology and the differences between these sensors in general, not for a specific market segment like automotive (as explained here, most applications of LiDAR are not in this field). If you’re seeking a concise overview specifically for people counting applications, download our comparative guide here.

Before delving into the relative strengths and weaknesses of these technologies, let’s first provide a brief overview of how cameras, radar and LiDAR systems operate and perceive the world around them.

Active vs. Passive Sensors

Generally speaking, sensors are devices that measure physical properties and transform them into signals suitable for processing, displaying, or storing. Radar and LiDAR are active sensors, unlike cameras, which are passive.

Active sensors emit energy (e.g. radio waves or laser light) and measure the reflected or scattered signal, while passive sensors detect the natural radiation or emission from the target or the environment (e.g. sunlight or artificial light for cameras).
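The emit-and-measure principle can be illustrated with a time-of-flight calculation. This is a minimal sketch that assumes a pulsed sensor timing the echo of its own signal; the article does not say which ranging method a given device uses, and some radars and LiDARs use other schemes. The function name and the 200 ns example value are hypothetical.

```python
# Speed of light in vacuum, in metres per second (exact SI value).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to a target from the round-trip time of an emitted pulse.

    The pulse travels to the target and back, so the one-way distance
    is half of the total path length.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


if __name__ == "__main__":
    # Hypothetical echo received 200 nanoseconds after emission.
    print(f"{range_from_round_trip(200e-9):.2f} m")
```

A passive sensor has no equivalent of `round_trip_s`: it never emits anything, so it has no emission time to measure against.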

Each sensing modality has its advantages and drawbacks.

- As active sensors generate their own signals, external lighting conditions do not affect them. Both radar and LiDAR function perfectly in total darkness and in direct sunlight, which is not the case for cameras.

Why is this distinction important? The Insurance Institute for Highway Safety (IIHS) found that in dark conditions, camera- and radar-based pedestrian automatic emergency braking systems failed to detect pedestrians in every instance.1

Additionally, the Governors Highway Safety Association (GHSA) in the United States found, in an evaluation of roadway fatalities in 2020, that 75% of pedestrian fatalities occur at night.2

Beyond night vision, the impact of external lighting on cameras has far-reaching consequences. For instance, computer vision algorithms may fail in areas with shadows cast by moving or static objects (e.g. trees or buildings), and even in indoor settings when lighting conditions change (e.g. a door opening and letting more light into the scene).

- Weather conditions can significantly affect passive sensors since their sensing doesn’t directly interact with physical phenomena like rain or fog. Instead, they can only work with the resulting image, making them more susceptible to weather-related limitations. However, active sensors can also be affected by adverse conditions, depending on their wavelength.

- Passive sensors are typically more energy-efficient, whereas active sensors allocate a significant portion of their energy budget to emit laser light or radar waves. The same principle applies to their size and weight.

- Passive sensors like cameras are highly stealthy and difficult to detect. In contrast, active sensors like LiDAR or radar emit signals that can be detected by other sensors, making them more conspicuous. This is of paramount importance in defense applications.

- Similarly, active sensors can encounter signal interference, known as crosstalk. Nevertheless, with the ongoing advancement of technology, this issue is progressively becoming less of a concern for LiDAR.

However, the most crucial disparities among these sensing modalities lie in two key aspects that warrant further exploration:

- The inherently different types of perception they provide, and

- The potential privacy concerns they may give rise to or help evade.

Perceiving Various Dimensions of the World

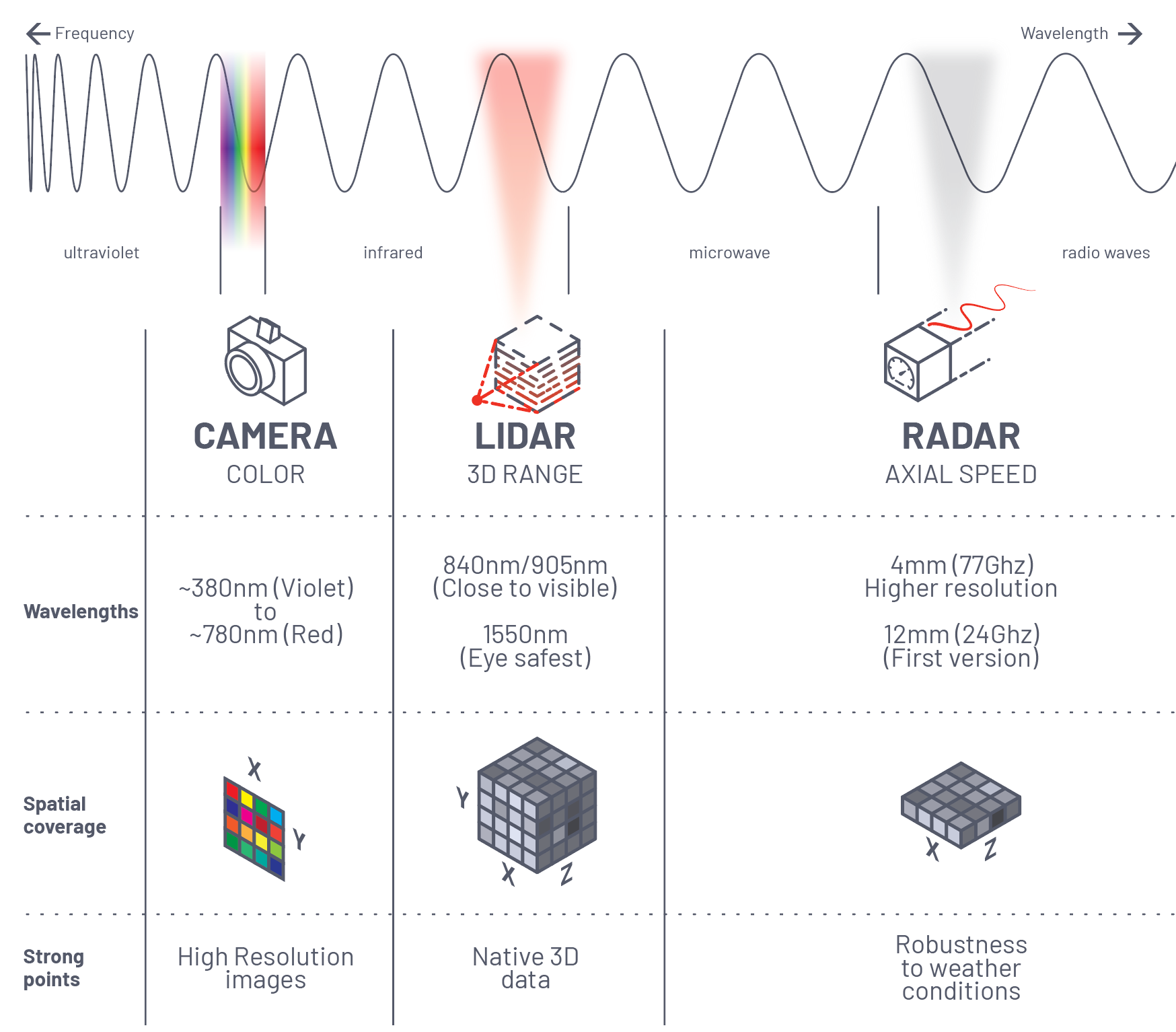

Each type of sensor operates in distinct sections of the electromagnetic spectrum, utilizing signals with varying wavelengths.

Cameras capture colors in two dimensions, lacking any notion of depth. Consequently, a large object positioned far away from the device can have the same number of pixels as a small object situated close to the camera.

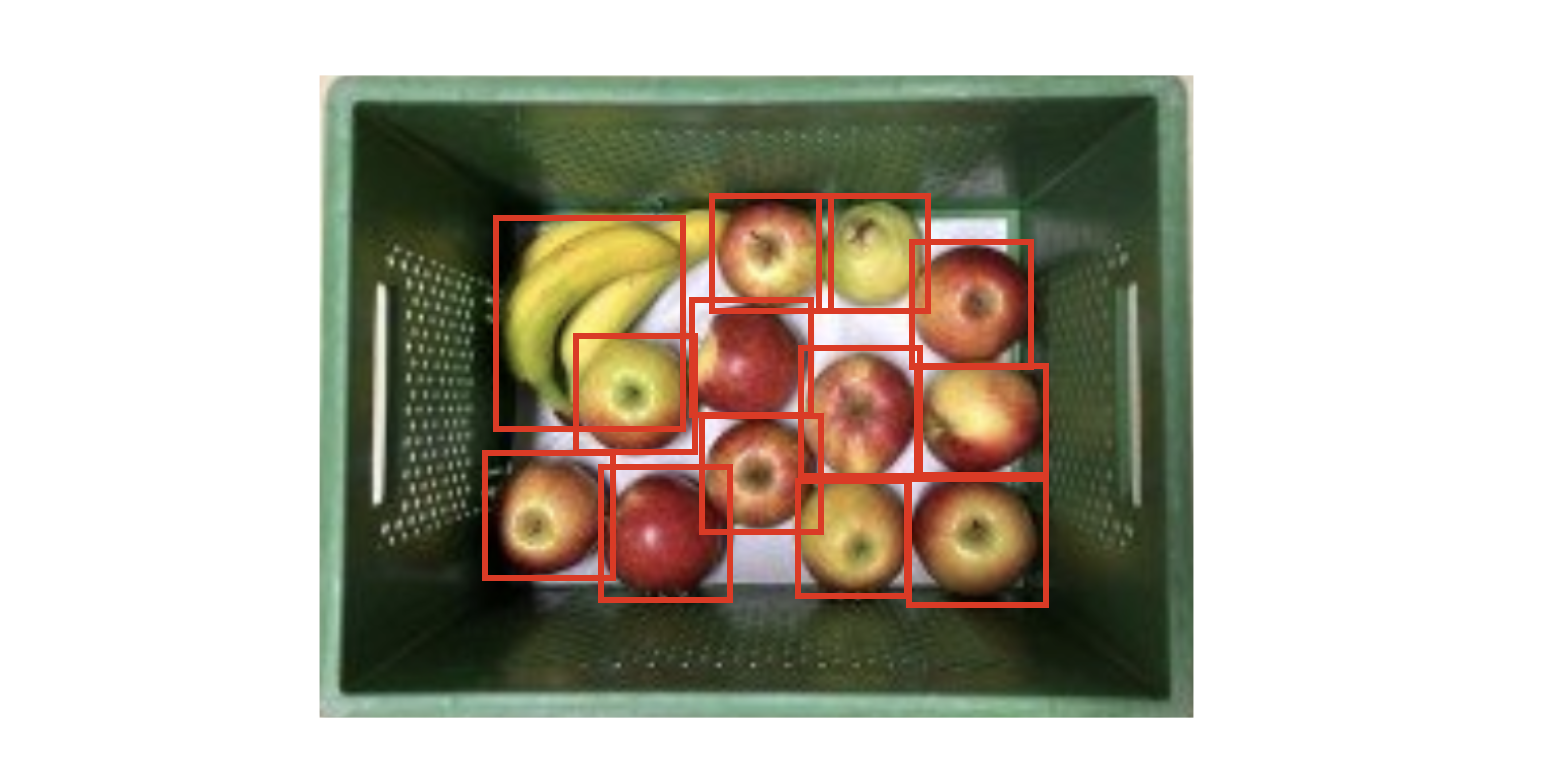
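The size ambiguity described above follows directly from the ideal pinhole camera model, where apparent size scales with the ratio of object size to distance. The sketch below is illustrative only: the function name, the focal length, and the object sizes are assumptions chosen to make the effect visible.

```python
def projected_height_px(object_height_m: float, distance_m: float,
                        focal_length_px: float) -> float:
    """Apparent height in pixels under an ideal pinhole camera model."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return focal_length_px * object_height_m / distance_m


FOCAL_LENGTH_PX = 1000.0  # hypothetical focal length, expressed in pixels

# A small object close to the camera and a large object far away.
near_small = projected_height_px(0.5, 5.0, FOCAL_LENGTH_PX)   # 0.5 m tall at 5 m
far_large = projected_height_px(5.0, 50.0, FOCAL_LENGTH_PX)   # 5 m tall at 50 m

if __name__ == "__main__":
    print(near_small, far_large)
```

Both objects cover the same number of pixels, so a single image cannot tell them apart by size alone.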

Depth perception is not required in many computer vision tasks, such as classifying objects, where cameras excel:

However, the lack of depth perception in computer vision software can pose challenges in tasks that require precise detection of object position, size, or movement. This capability is especially important for applications such as precise crowd monitoring at scale. In this example, real people and a printed representation are both displayed to the camera:

Active sensors like LiDAR and radar can measure the distance to each object. With LiDAR, these measurements are made in three dimensions: not only the depth but also the exact position of any object in space. However, this applies only to 3D LiDAR, which is frequently referred to simply as “LiDAR” due to its recent popularity, largely attributed to self-driving cars. 2D LiDAR existed for decades before that, functioning more like conventional radar, with depth information available in only two dimensions.

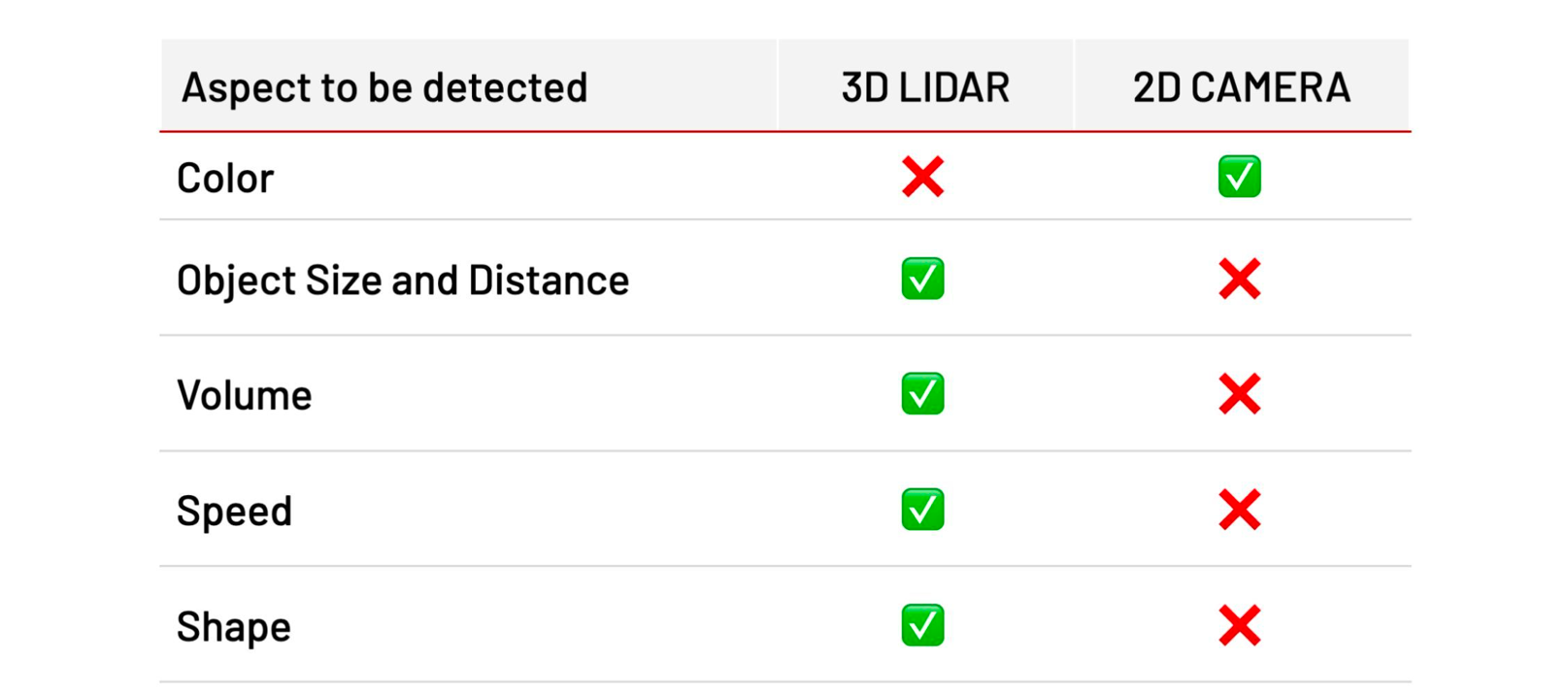
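To make the 3D measurement concrete, the sketch below converts a single return, expressed as a range plus two beam angles, into a Cartesian point. The axis convention (x forward, y left, z up, elevation measured from the horizontal plane) and the function name are assumptions made for illustration; actual sensors and drivers define their own conventions.

```python
import math


def polar_to_cartesian(range_m: float, azimuth_deg: float,
                       elevation_deg: float) -> tuple[float, float, float]:
    """Convert one return (range, azimuth, elevation) to an (x, y, z) point.

    Assumed convention: x forward, y left, z up; azimuth is measured in the
    horizontal plane from the x axis, elevation from the horizontal plane.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    x = horizontal * math.cos(az)
    y = horizontal * math.sin(az)
    z = range_m * math.sin(el)
    return x, y, z


if __name__ == "__main__":
    # Hypothetical return: 12 m away, 30 degrees to the left, 5 degrees up.
    print(polar_to_cartesian(12.0, 30.0, 5.0))
```

Setting `elevation_deg` to zero for every return reduces this to the 2D case: all points lie in a single plane.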

In a nutshell, 3D LiDAR provides spatial data but can’t detect colors:

The right sensor to use depends on which aspects you need to detect

That means that tasks such as tracking people at scale, individually and with centimeter-level accuracy, are much better suited to 3D spatial sensors like LiDAR:

Outsight’s software processes raw data from LiDAR to extract information like object position

Radar vs. LiDAR

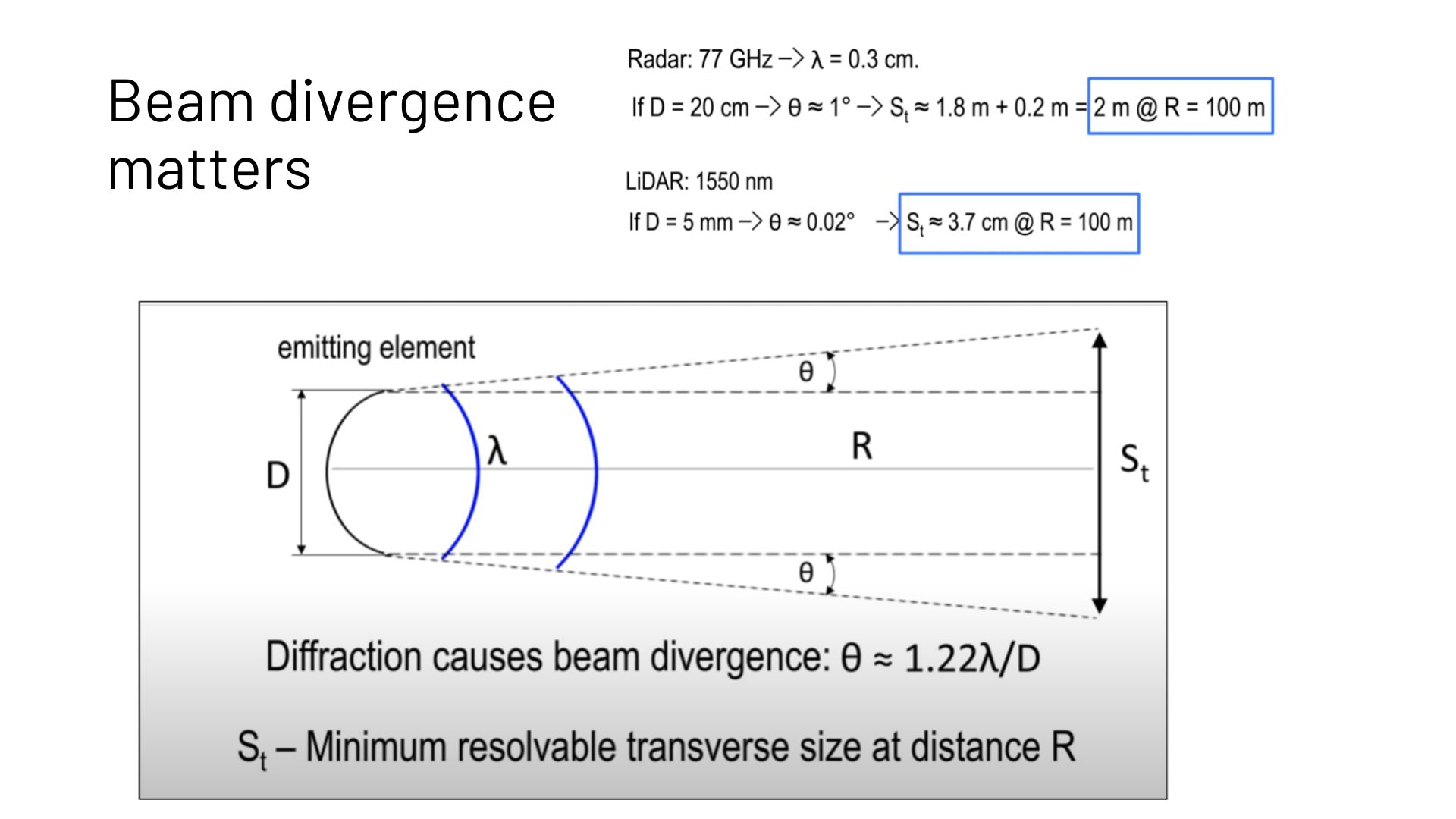

As we’ve seen, both sensors share many characteristics, chiefly being active and able to measure distance. The key difference lies in precision. LiDAR offers cm-level precision (mm-level in some 2D LiDARs), while radar’s resolution is significantly lower, which makes precise tracking and distinguishing individuals or objects in crowded environments more difficult. One of the main reasons lies in the wavelengths of radio waves compared to laser light.
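The effect of wavelength can be estimated with a rough diffraction argument: for a given aperture, the narrowest achievable beam has a divergence of about wavelength divided by aperture size. The sketch below is a back-of-the-envelope comparison only. The 77 GHz radar band, the 905 nm laser wavelength, the 5 cm aperture and the 50 m distance are illustrative assumptions, not figures from this article.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_m(frequency_hz: float) -> float:
    """Wavelength of an electromagnetic wave of the given frequency."""
    return SPEED_OF_LIGHT_M_S / frequency_hz


def beam_footprint_m(wavelength: float, aperture_m: float,
                     distance_m: float) -> float:
    """Rough diffraction-limited beam width at a given distance.

    Small-angle approximation: divergence ~ wavelength / aperture.
    """
    return distance_m * wavelength / aperture_m


# Illustrative assumptions, not values taken from the article:
RADAR_WAVELENGTH_M = wavelength_m(77e9)  # 77 GHz radar band
LIDAR_WAVELENGTH_M = 905e-9              # 905 nm near-infrared laser
APERTURE_M = 0.05                        # same hypothetical 5 cm aperture
DISTANCE_M = 50.0

if __name__ == "__main__":
    for name, lam in (("radar", RADAR_WAVELENGTH_M),
                      ("LiDAR", LIDAR_WAVELENGTH_M)):
        footprint = beam_footprint_m(lam, APERTURE_M, DISTANCE_M)
        print(f"{name}: wavelength {lam:.3e} m, "
              f"footprint at {DISTANCE_M:.0f} m ~ {footprint:.4f} m")
```

Real devices deviate from this idealization: LiDAR beams are usually wider than the diffraction limit, and radars rely on antenna arrays and signal processing. The point is only the size of the gap between the two wavelengths, which is several orders of magnitude.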

While imaging radar, the 3D version of radar, shows promising potential and is currently in development, its capabilities are still limited. Presently, radar primarily detects information in 2D, making it suitable for various automotive use cases but less applicable in many other contexts.

Respect for Privacy

Cameras were purposefully designed for human consumption of images, enabling them to capture and deliver information that closely mirrors what a person would perceive in reality (e.g. cinema, TV, portable cameras, smartphones…).

Only recently have the same images taken by cameras found applications in automated processing by computers, utilizing technologies such as computer vision and image processing AI. This capability has expanded to encompass the identification of individuals through facial recognition techniques like Face ID.

The use of cameras in facial recognition technology raises legitimate apprehensions about data security and personal privacy. Consequently, the significance of safeguarding privacy has gained considerable attention from policymakers and regulators worldwide. Regulations such as the General Data Protection Regulation (GDPR) in the European Union have been put in place to address these concerns by imposing strict guidelines and limitations on the usage of cameras and biometric data.

LiDAR stands out from cameras in this crucial aspect, thanks to its remarkable ability to uphold privacy. Unlike cameras, LiDAR devices generate point-cloud images that do not contain personally identifiable information or reveal sensitive characteristics such as gender.

Point-cloud data does not allow an individual to be identified

Other aspects to consider

The following chart presents a summary of the previous comparison points, along with some additional ones:

- Cost: Cameras have the lowest cost, while LiDAR costs are rapidly decreasing, especially considering that 3D LiDAR sensors require fewer units per square meter than cameras (refer to the low density criterion in the chart), which translates into much lower setup, wiring and networking costs.

- Flexible mounting: 3D sensors offer the advantage of versatile installation options, enabling placement in various positions and granting many of them the ability to perceive in 360°. This feature significantly reduces setup constraints, making 3D LiDAR ideal for large infrastructure projects.

- Range detection: Certain sensors, such as stereo vision cameras, suffer from diminishing performance as the detection distance increases. In contrast, LiDAR sensors maintain consistent and reliable range detection capabilities even at greater distances.
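The range degradation of stereo vision mentioned in the last point can be sketched with the standard first-order error model for a stereo pair: depth is Z = f·B/d, so a fixed disparity error Δd produces a depth error of roughly Z²·Δd/(f·B), which grows with the square of the distance. The rig parameters below (focal length, baseline, disparity error) are hypothetical values chosen for illustration.

```python
def stereo_depth_error_m(distance_m: float, focal_length_px: float,
                         baseline_m: float, disparity_error_px: float) -> float:
    """First-order depth uncertainty of a stereo pair.

    From Z = f * B / d, a disparity error dd maps to a depth error of
    about Z**2 * dd / (f * B).
    """
    return distance_m ** 2 * disparity_error_px / (focal_length_px * baseline_m)


# Hypothetical stereo rig, for illustration only.
FOCAL_LENGTH_PX = 1000.0    # focal length in pixels
BASELINE_M = 0.12           # distance between the two cameras
DISPARITY_ERROR_PX = 0.25   # assumed matching error in pixels

if __name__ == "__main__":
    for z in (5.0, 10.0, 20.0, 50.0):
        err = stereo_depth_error_m(z, FOCAL_LENGTH_PX, BASELINE_M,
                                   DISPARITY_ERROR_PX)
        print(f"distance {z:5.1f} m -> depth error ~ {err:.3f} m")
```

Under these assumptions, a tenfold increase in distance makes the depth error a hundred times larger. A sensor that measures range directly does not depend on disparity, so this particular quadratic term does not apply to it.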

Conclusion

In the world of automated processing, cameras have become a ubiquitous choice not necessarily due to their superiority as sensors for the task, but rather because of their widespread availability.

As we explored earlier in this article, the use of cameras may be suitable for certain applications like object classification, where their effectiveness is evident. However, when it comes to capturing complex spatial data of the physical world, these sensors reveal their limitations.

The intricacies of spatial data processing necessitate more specialized and sophisticated sensor technologies to overcome challenges and provide more accurate and comprehensive results.

While radar shares the benefits of being an active sensor, akin to LiDAR, and equally excels in preserving privacy, its significantly lower resolution and precision disqualify it as a viable candidate for numerous use cases, such as crowd monitoring and precise traffic management.

The orders-of-magnitude difference in resolution makes radar less capable of capturing intricate details and spatial data with the same level of accuracy as LiDAR.

In conclusion, the rapid advancements in LiDAR technology have ushered in a new era of affordability, reliability, and performance.

As we have explored the numerous benefits it offers, from its active sensing capabilities to the preservation of privacy, it becomes evident that LiDAR has emerged as the optimal choice not only for a wide array of existing use cases and applications, but also for enabling new ones that were previously unimaginable with other sensors.

As LiDAR continues to evolve and find wider integration, it holds the key to unlocking unprecedented insights and driving us into a more advanced and interconnected world.

However, while LiDAR data, in the form of point-cloud information, provides a wealth of spatial data, this raw data alone is essentially useless without effective processing and analysis.

Extracting actionable insights and valuable information from point-cloud data requires sophisticated processing software that can interpret, analyze, and transform the data into meaningful outputs.

This is precisely where Outsight comes into play.

Through advanced algorithms, cutting-edge techniques and a full set of tools, Outsight can derive valuable insights from LiDAR point-cloud data, enabling a wide range of applications across industries.

One such tool is the first Multi-Vendor LiDAR Simulator, an online platform that empowers our partners and customers to make informed decisions about which LiDAR to utilize, their optimal placement, and the projected performance and cost for any given project.

Kevin Vincent

Outsight