This blog post was originally published at Embedl’s website. It is reprinted here with the permission of Embedl.

Recently, we have all witnessed the stunning success of prompt engineering in natural language processing (NLP) applications like ChatGPT: one supplies a text prompt to a large language model (LLM) like GPT-4, and the result is a long stretch of well-crafted text that is both grammatically correct and often highly relevant to the topic of the prompt.

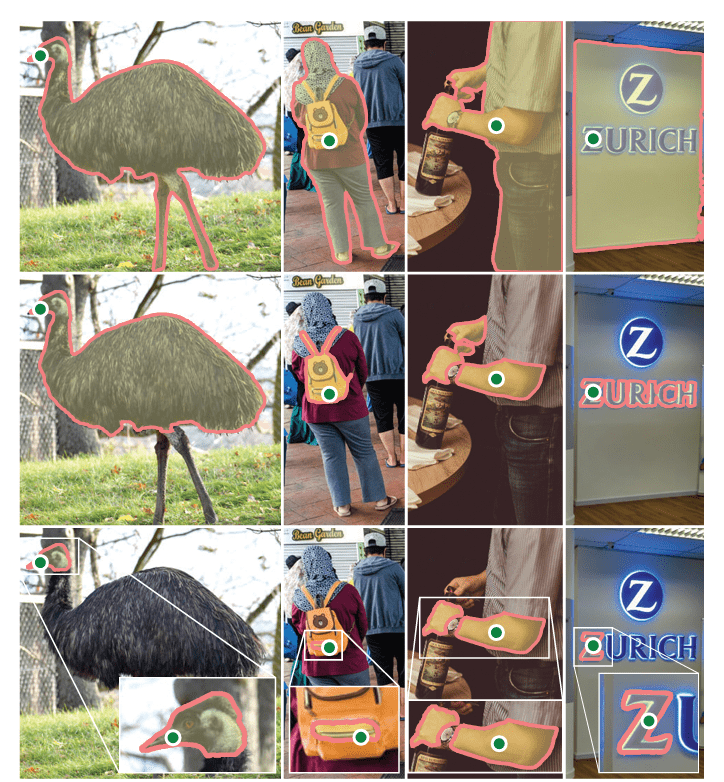

A number of recent efforts have brought the same idea to vision. Segmentation is a fundamental problem in computer vision: identifying which image pixels belong to an object. It is used in a broad array of applications, from analyzing scientific imagery to editing photos. Traditionally, the problem was addressed in one of two ways. Interactive segmentation required a person to guide the method by iteratively refining a mask. Automatic segmentation could segment specific object categories defined ahead of time (e.g., cats or cars), but required substantial amounts of manually annotated objects to train (e.g., thousands or even tens of thousands of examples of segmented cats), along with the compute resources and technical expertise to train the segmentation model. Neither approach provided a general, fully automatic solution to segmentation.

Enter Foundation Models for Image Segmentation! The idea is to train a large vision model that can then be prompted to create a segmentation. The model could be prompted in various ways: for example, one could simply click on a region of an image to indicate an object and on other parts to indicate background. And hey presto!

There are two main ingredients to make this happen: the model and the data.

The model is surprisingly simple: an image encoder produces a one-time embedding for the image, while a lightweight prompt encoder converts any prompt into an embedding vector in real time. These two information sources are then combined in a lightweight decoder that predicts segmentation masks.
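To make the "encode the image once, prompt many times" pattern concrete, here is a minimal toy sketch in plain Python. It is not the actual SAM architecture or API: the function names (`encode_image`, `encode_prompt`, `decode_mask`), the random weights, and the dot-product decoder are all hypothetical stand-ins chosen only to illustrate how the three components fit together.

```python
import random

random.seed(0)
EMBED_DIM = 4  # toy embedding width, chosen arbitrarily


def random_matrix(rows, cols):
    """Hypothetical stand-in for trained weights."""
    return [[random.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]


W_IMAGE = random_matrix(3, EMBED_DIM)   # RGB pixel -> embedding
W_PROMPT = random_matrix(3, EMBED_DIM)  # (x, y, label) -> embedding


def project(vector, weights):
    """Multiply a length-n vector by an n x m matrix."""
    return [
        sum(v * row[j] for v, row in zip(vector, weights))
        for j in range(len(weights[0]))
    ]


def encode_image(image):
    """Expensive step, run once per image: one embedding per pixel."""
    return [[project(pixel, W_IMAGE) for pixel in row] for row in image]


def encode_prompt(x, y, is_foreground, width, height):
    """Cheap step, run per click: normalized click -> one embedding."""
    label = 1.0 if is_foreground else -1.0
    return project([x / width, y / height, label], W_PROMPT)


def decode_mask(image_embedding, prompt_embedding):
    """Cheap step: combine both embeddings into a boolean mask."""
    return [
        [sum(a * b for a, b in zip(pixel, prompt_embedding)) > 0.0
         for pixel in row]
        for row in image_embedding
    ]


HEIGHT, WIDTH = 32, 48
image = [[[random.random() for _ in range(3)] for _ in range(WIDTH)]
         for _ in range(HEIGHT)]

image_embedding = encode_image(image)  # computed once, then reused

# Each click is (x, y, is_foreground); only the cheap steps rerun.
clicks = [(10, 5, True), (40, 20, False)]
masks = [
    decode_mask(image_embedding,
                encode_prompt(x, y, fg, width=WIDTH, height=HEIGHT))
    for x, y, fg in clicks
]
```

The design point is the split in cost: the image embedding is computed a single time, so every additional click only pays for the lightweight prompt encoder and decoder, which is what makes interactive prompting feasible.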

In contrast to LLMs, the data needed to train this model is not readily available on the web to be simply scraped. So Meta’s Segment Anything project created a very large dataset to pretrain such a foundation segmentation model, the Segment Anything Model (SAM). Annotators used SAM to interactively annotate images, and then the newly annotated data was used to update SAM in turn. This cycle was repeated many times to iteratively improve both the model and the dataset. The final dataset includes more than 1.1 billion segmentation masks collected on about 11 million licensed and privacy-preserving images. Human evaluation studies verified that the masks are of high quality and diversity, and in some cases even comparable in quality to masks from previous, much smaller, fully manually annotated datasets.

There are exciting possibilities for the future: composition is a powerful tool that allows a single model to be used in extensible ways, potentially accomplishing many new tasks and enabling a wider variety of applications than systems trained specifically for a fixed set of tasks. Such vision models can become a powerful component in domains such as AR/VR, content creation, scientific domains, and more general AI systems.

As with NLP foundation models, these vision foundation models would also need compressed versions to be deployed widely across cheap hardware. This is the mission of Embedl!