This blog post was originally published at TechNexion’s website. It is reprinted here with the permission of TechNexion.

In embedded vision applications, one of the most overlooked parameters in camera integration is sensor-lens matching. It is not enough for the image sensor and the lens to each have strong individual specifications. The two components must also ‘align’ with each other for the embedded vision system to perform optimally.

In this article, let us explore:

- Why is sensor and lens matching important?

- What are the consequences of not doing it properly?

- And finally, the factors you need to consider while matching a lens with a sensor.

The Significance of Sensor and Lens Matching

The lens must be chosen to suit the image sensor’s design specifications, so that the light it focuses onto the sensor can be accurately converted into an electrical signal that processors can read. Without this balance, captured images will be distorted or inaccurate.

During the camera integration process, the image sensor is chosen first since it is the most critical imaging component. Then, in most cases, the lens is carefully chosen to match the design specifications of the image sensor so that it can accurately capture the desired field of view (FOV).

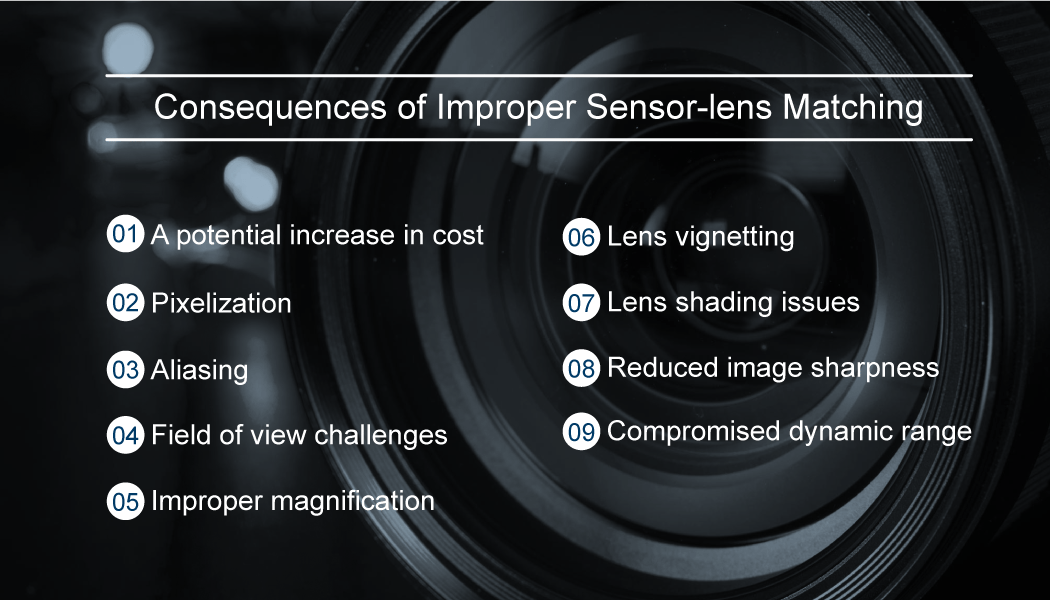

Consequences of an Improper Sensor-lens Matching

If the lens and sensor aren’t properly matched, several issues can arise that compromise the quality and functionality of the imaging system. Here are the potential problems and their implications:

- A potential increase in cost

- Pixelation

- Aliasing

- Field of view challenges

- Improper magnification

- Lens vignetting

- Lens shading issues

- Reduced image sharpness

- Compromised dynamic range

Cost

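Carefully selecting a lens that is compatible with the image sensor helps organizations avoid inflated costs, for example by not paying for a lens that resolves more detail or covers a larger sensor format than the chosen sensor can use. On production lines of thousands of embedded vision devices, even a slight cost increase per unit has a large financial impact: an extra $5 per lens across 10,000 units adds $50,000.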

Pixelation

When an image sensor’s resolution is lower than the lens’s optical resolution, the pixels are too coarse to record the detail the lens delivers, so pixelation occurs and fine details become difficult to discern. This can significantly reduce image quality and make the image look as if it were viewed through a screen door or a grid, which is why this phenomenon is also called the screen door effect.

Aliasing

Aliasing occurs when the optical image contains detail finer than the pixel grid can sample, that is, features whose period is shorter than two pixel pitches. It is most visible when the spacing between the edges of features in the optical image closely matches the spacing of the camera pixels. The result is banding, fringing, or moiré patterns, along with other distortions and artifacts; fine patterns such as a striped shirt can appear wavy or distorted.
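The folding behind these artifacts can be sketched in a few lines of Python. This is a simplified one-dimensional model, assuming ideal point sampling at the pixel pitch and ignoring the color filter mosaic, pixel aperture, and any optical low-pass filter; the function names and the 2.0 µm pitch are hypothetical. Any pattern frequency above the Nyquist limit (half the sampling frequency) reappears at a lower apparent frequency.

```python
def nyquist_lp_per_mm(pixel_pitch_um: float) -> float:
    """Nyquist limit in line pairs per mm for a pixel pitch given in micrometres."""
    sampling_freq = 1000.0 / pixel_pitch_um  # samples per mm
    return sampling_freq / 2.0


def aliased_frequency(pattern_lp_per_mm: float, pixel_pitch_um: float) -> float:
    """Apparent frequency (lp/mm) of a periodic pattern after ideal point sampling."""
    sampling_freq = 1000.0 / pixel_pitch_um
    # Fold the pattern frequency back into the band [0, Nyquist].
    nearest_multiple = round(pattern_lp_per_mm / sampling_freq) * sampling_freq
    return abs(pattern_lp_per_mm - nearest_multiple)


pitch_um = 2.0  # hypothetical sensor pixel pitch
print(nyquist_lp_per_mm(pitch_um))         # 250.0
print(aliased_frequency(100.0, pitch_um))  # 100.0: below Nyquist, recorded as-is
print(aliased_frequency(480.0, pitch_um))  # 20.0: stripe period ~2.08 um vs 2 um pixels
```

The 480 lp/mm stripes have a period of about 2.08 µm, almost exactly the pixel spacing, so the sensor records them as a coarse 20 lp/mm pattern: the striped-shirt effect in miniature.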

Incorrect Field-of-view

If the size of the image formed by the lens does not match the size of the image sensor, the field of view will be incorrect. Either parts of the intended scene are missing from the output image, or you capture more than you wanted, leaving undesired areas in the image.
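For a distortion-free (rectilinear) lens focused at infinity, the angular field of view across one sensor dimension d with focal length f is 2·atan(d / 2f). The sketch below, with hypothetical function names and an assumed ±2° tolerance, uses that relation to flag whether a lens-sensor pair delivers a field that is too narrow or too wide for the application.

```python
import math


def angular_fov_deg(sensor_dim_mm: float, focal_length_mm: float) -> float:
    """Angular field of view (degrees) across one sensor dimension.

    Assumes a rectilinear, distortion-free lens focused at infinity.
    """
    return math.degrees(2.0 * math.atan(sensor_dim_mm / (2.0 * focal_length_mm)))


def classify_fov(actual_deg: float, required_deg: float, tol_deg: float = 2.0) -> str:
    """Compare the delivered FOV with the FOV the application needs (tolerance is an assumption)."""
    if actual_deg < required_deg - tol_deg:
        return "too narrow: parts of the intended scene are cut off"
    if actual_deg > required_deg + tol_deg:
        return "too wide: the image includes unwanted areas"
    return "ok"


# Hypothetical 8.0 mm wide sensor behind a 4.0 mm lens gives a 90 degree horizontal FOV.
hfov = angular_fov_deg(8.0, 4.0)
print(f"{hfov:.1f} deg ->", classify_fov(hfov, required_deg=60.0))
```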

Improper Magnification

When considering magnification for microscopic imaging, it is important to ensure that the image captures the right level of detail. This is also critical in applications that must zoom in on distant objects or a particular ROI (Region Of Interest).

In microscopes, too little magnification can leave critical cellular structures unresolved, while too much can push them outside the field of view. Therefore, the magnification should be selected carefully for your sensor-lens configuration.
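The two failure modes can be turned into a magnification window. In this sketch (hypothetical names and values), the lower bound assumes the smallest structure of interest must span at least two pixels, a simple Nyquist-style criterion that ignores the objective’s own optical resolution limit, and the upper bound is the largest magnification at which the required object field still fits across the sensor.

```python
def magnification_window(pixel_pitch_um: float, smallest_feature_um: float,
                         sensor_width_mm: float, field_width_mm: float) -> tuple[float, float]:
    """Return (min, max) optical magnification for a microscope-style setup."""
    # Too little magnification: the feature spans fewer than two pixels and is not resolved.
    m_min = 2.0 * pixel_pitch_um / smallest_feature_um
    # Too much magnification: the required field no longer fits on the sensor.
    m_max = sensor_width_mm / field_width_mm
    return m_min, m_max


# Hypothetical: 3.45 um pixels, 2 um structures, 8.4 mm wide sensor, 1.5 mm field to observe.
m_min, m_max = magnification_window(3.45, 2.0, 8.4, 1.5)
if m_min > m_max:
    print("No single magnification satisfies both constraints; revisit the sensor or field.")
else:
    print(f"Choose a magnification between {m_min:.2f}x and {m_max:.2f}x")
```

If the window comes out empty, no magnification setting can fix the mismatch, and the sensor (pixel size or format) itself needs to change.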

Vignetting

Vignetting is a photographic effect that darkens the corners of an image. It occurs when not all light rays entering the lens reach the sensor, either because they are partially blocked by mechanical structures or because the lens’s image circle is not large enough to cover the whole sensor. This reduces the usable area of the image and causes uneven illumination across the frame. Fortunately, many modern lenses are designed to reduce or even eliminate this problem.
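One of these causes, an image circle smaller than the sensor, is easy to check before ordering samples: the image circle diameter must be at least the sensor diagonal. A minimal sketch, assuming the image circle and sensor dimensions come from the lens and sensor datasheets (the values below are hypothetical); it does not model mechanical vignetting or the natural brightness falloff toward the corners.

```python
import math


def sensor_diagonal_mm(width_mm: float, height_mm: float) -> float:
    """Diagonal of the sensor's active area."""
    return math.hypot(width_mm, height_mm)


def corner_shortfall_mm(image_circle_mm: float, width_mm: float, height_mm: float) -> float:
    """How far (radially, in mm) each sensor corner lies outside the image circle; 0 if covered."""
    return max(0.0, (sensor_diagonal_mm(width_mm, height_mm) - image_circle_mm) / 2.0)


# Hypothetical 6.4 x 4.8 mm sensor (8.0 mm diagonal).
for circle in (7.0, 8.5):
    gap = corner_shortfall_mm(circle, 6.4, 4.8)
    status = "dark corners expected" if gap > 0 else "corners covered"
    print(f"image circle {circle} mm: {status} (shortfall {gap:.2f} mm)")
```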

Color Shading Errors

Color shading is an error that can occur when the lens’s chief ray angle (CRA) does not match the image sensor’s CRA requirement. In RGB sensors, light arriving at the wrong angle can spill into adjacent pixels under a different color filter, giving parts of the image a color tint, typically toward the edges of the frame. A CRA mismatch also reduces the light collected away from the center, causing illumination errors that can hide critical details in the image.
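Sensor datasheets usually state the CRA the sensor’s microlenses are designed for, and lens datasheets often give the lens’s CRA as a function of image height, so the comparison can be automated. The sketch below assumes both curves have been sampled at the same relative image heights; the ±3° tolerance, the function name, and the sample values are assumptions for illustration, not vendor figures.

```python
def cra_mismatches(heights, lens_cra_deg, sensor_cra_deg, tol_deg=3.0):
    """Return (relative image height, CRA difference in degrees) wherever |lens - sensor| > tol_deg.

    Heights are fractions of the sensor half-diagonal (0.0 = center, 1.0 = corner).
    """
    if not (len(heights) == len(lens_cra_deg) == len(sensor_cra_deg)):
        raise ValueError("all curves must be sampled at the same image heights")
    report = []
    for h, lens, sensor in zip(heights, lens_cra_deg, sensor_cra_deg):
        diff = lens - sensor
        if abs(diff) > tol_deg:
            report.append((h, diff))
    return report


# Hypothetical curves: the lens CRA grows faster toward the corners than the sensor expects.
heights = [0.0, 0.25, 0.5, 0.75, 1.0]
lens_cra = [0.0, 6.0, 12.0, 19.0, 28.0]
sensor_cra = [0.0, 6.0, 12.0, 18.0, 24.0]
for h, diff in cra_mismatches(heights, lens_cra, sensor_cra):
    print(f"at {h:.0%} image height the lens CRA is off by {diff:+.1f} deg: expect color shading")
```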

Reduced Image Sharpness

If the lens cannot resolve detail at the scale of the sensor’s pixels, the image will appear blurry or lack detail, no matter how many pixels the sensor has. This is especially problematic for applications that require sharp images, such as medical imaging and satellite imagery.

Compromised Dynamic Range

Mismatched components can reduce the dynamic range the system actually delivers, leading to problems such as blown-out highlights or lost details in shadows. For example, stray light and flare inside a poorly suited lens can wash out dark regions and lower the contrast the sensor is able to record. Incorrect sensor and lens combinations therefore make it difficult for embedded vision systems to capture the best possible images.

Key Factors to Consider when Matching an Image Sensor and Lens

Matching an image sensor and lens must be done meticulously to ensure you don’t face any imaging-related challenges in your embedded vision system in the future.

Here are the key factors to consider when matching an image sensor and lens:

Understanding the application and use case

Before diving into technical specifications, it’s essential to understand the intended application and use case. For instance, a surveillance camera in a dimly lit area has different requirements than a camera used in bright outdoor sports broadcasting and analytics systems.

Working distance

This refers to the distance between the subject and the camera. Depending on the application, you might need a lens that can focus on objects very close (macro lenses) or far away (telephoto lenses). Whichever lens type you pick, make sure it also matches the sensor in its other design parameters, such as image circle, resolution, and mount.

Resolution requirement

The resolution of the image sensor should match the resolving power of the lens. A high-resolution sensor paired with a low-resolution lens will not utilize the sensor’s full potential. Conversely, a lens that resolves far more detail than the sensor can sample adds cost without improving the image.
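A common way to compare the two is to express both in line pairs per millimetre: the sensor’s Nyquist limit is 1 / (2 × pixel pitch), and the lens’s resolving power can be read from its MTF data at a contrast level you choose. The sketch below assumes that input; the 0.8 and 1.5 ratio thresholds and the function names are hypothetical rules of thumb for illustration.

```python
def sensor_nyquist_lp_mm(pixel_pitch_um: float) -> float:
    """Highest spatial frequency (line pairs per mm) the pixel grid can sample."""
    return 1000.0 / (2.0 * pixel_pitch_um)


def resolution_match(lens_lp_mm: float, pixel_pitch_um: float,
                     low: float = 0.8, high: float = 1.5) -> str:
    """Classify a lens-sensor pair by the ratio of lens resolving power to sensor Nyquist."""
    ratio = lens_lp_mm / sensor_nyquist_lp_mm(pixel_pitch_um)
    if ratio < low:
        return "lens-limited: the sensor's full potential is not used (soft images)"
    if ratio > high:
        return "sensor-limited: lens detail is wasted and may alias"
    return "balanced"


# Hypothetical 2.0 um pixels give a 250 lp/mm Nyquist limit.
for lens_lp_mm in (150.0, 250.0, 500.0):
    print(lens_lp_mm, "lp/mm:", resolution_match(lens_lp_mm, 2.0))
```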

Depth-of-field

Depth-of-field (DoF) determines how much of the scene (from near to far) appears sharp. Different applications require different DoFs. For instance, portrait photography often uses a shallow DoF to blur the background, while landscape photography prefers a deeper DoF to keep everything in focus.
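Depth-of-field can be estimated with the standard thin-lens hyperfocal formulas: H = f²/(N·c) + f, near limit = s(H − f)/(H + s − 2f), and far limit = s(H − f)/(H − s) while s < H. The blur an application tolerates is the circle of confusion c; for a machine vision sensor, a common assumption (used here) is two pixel pitches. The focal length, f-numbers, focus distance, and pixel pitch below are hypothetical, and results are approximate because the thin-lens model ignores a real lens’s principal planes.

```python
import math


def depth_of_field_mm(focal_mm: float, f_number: float, focus_dist_mm: float,
                      coc_mm: float) -> tuple[float, float]:
    """Near and far limits of acceptable sharpness (thin-lens approximation)."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_dist_mm * (hyperfocal - focal_mm) / (hyperfocal + focus_dist_mm - 2 * focal_mm)
    if focus_dist_mm >= hyperfocal:
        far = math.inf  # everything beyond the near limit is acceptably sharp
    else:
        far = focus_dist_mm * (hyperfocal - focal_mm) / (hyperfocal - focus_dist_mm)
    return near, far


pixel_pitch_mm = 0.00345      # hypothetical 3.45 um pixels
coc_mm = 2 * pixel_pitch_mm   # assumption: blur up to two pixels still counts as sharp

for n in (2.8, 5.6):
    near, far = depth_of_field_mm(8.0, n, 500.0, coc_mm)
    print(f"8 mm lens at f/{n}, focused at 500 mm: sharp from {near:.0f} to {far:.0f} mm")
```

Stopping down from f/2.8 to f/5.6 widens the sharp zone on both sides, which is the lever for applications that need a deep DoF, at the cost of less light reaching the sensor.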

Field-of-View or object size

This refers to the amount of the scene captured by the camera. A wide-angle lens captures a broad field of view, while a telephoto lens captures a narrow one. The lens should be chosen such that the image it projects onto the sensor matches the sensor’s size.
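When the object size is the starting point, the thin-lens equation links sensor size, focal length, working distance, and the width of the scene that fits on the sensor: the magnification is m = f / (u − f) for an object at distance u from the lens, so the imaged field is the sensor width divided by m. The sketch below assumes that idealized model (real lenses usually quote working distance from the front of the barrel, so treat results as estimates); the names and numbers are hypothetical.

```python
def field_width_mm(sensor_width_mm: float, focal_mm: float, object_dist_mm: float) -> float:
    """Width of the scene imaged across the sensor, from the thin-lens magnification f / (u - f)."""
    magnification = focal_mm / (object_dist_mm - focal_mm)
    return sensor_width_mm / magnification


def focal_for_field_mm(sensor_width_mm: float, field_mm: float, object_dist_mm: float) -> float:
    """Focal length that makes an object field of width field_mm fill the sensor width.

    Solves field = sensor * (u - f) / f for f.
    """
    return sensor_width_mm * object_dist_mm / (field_mm + sensor_width_mm)


# Hypothetical: 6.4 mm wide sensor, object 1 m away.
print(field_width_mm(6.4, 8.0, 1000.0))        # field covered by an 8 mm lens
print(focal_for_field_mm(6.4, 793.6, 1000.0))  # focal length for a 793.6 mm wide field
```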

Operating wavelength spectrum

Different sensors are sensitive to different parts of the light spectrum. Ensure that the lens coatings and materials are compatible with the sensor’s sensitivity range, especially if working outside the visible spectrum, like in infrared imaging.

Image sensor parameters

In addition to the other parameters we discussed so far, you also need to consider the following sensor parameters in the process of sensor-lens matching:

- Pixel Size: The size of individual pixels on the sensor. A lens should be able to resolve details down to the size of these pixels or smaller.

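- Sensor Format and Aspect Ratio: The sensor format describes the physical size of the active area (often quoted as an optical format in fractions of an inch), while the aspect ratio is the ratio of the sensor’s width to its height. The lens’s image circle must fully cover the sensor.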
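The active-area dimensions that feed these checks follow directly from the pixel count and pixel size. A small sketch, assuming square pixels and ignoring any non-imaging border pixels; the 1920 x 1080, 2.9 µm example is hypothetical rather than taken from a specific sensor’s datasheet.

```python
import math


def active_area(h_pixels: int, v_pixels: int, pixel_pitch_um: float):
    """Width, height, diagonal (mm) and reduced aspect ratio of a sensor's active area."""
    width_mm = h_pixels * pixel_pitch_um / 1000.0
    height_mm = v_pixels * pixel_pitch_um / 1000.0
    diagonal_mm = math.hypot(width_mm, height_mm)
    g = math.gcd(h_pixels, v_pixels)
    return width_mm, height_mm, diagonal_mm, (h_pixels // g, v_pixels // g)


w, h, d, (aw, ah) = active_area(1920, 1080, 2.9)
print(f"{w:.3f} x {h:.3f} mm, diagonal {d:.2f} mm, aspect {aw}:{ah}")
print("The lens image circle should be at least", round(d, 2), "mm")
```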

Lens parameters

The following are the lens parameters you should consider while doing sensor-lens matching:

- Focal length: Determines the lens’s angle of view and magnification. It should be chosen based on the desired field of view and the sensor’s size.

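- f-number: The ratio of the focal length to the diameter of the lens’s entrance pupil (its effective aperture). It determines the amount of light reaching the sensor and also affects depth-of-field and diffraction blur.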

- Image circle size: The lens projects an image in a circular shape. This circle should be large enough to cover the entire sensor.

- Back focal distance: The distance from the lens’s rear element to the image sensor. It’s crucial for ensuring compatibility with camera housings and mounts.
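Two of these lens parameters lend themselves to quick calculations. The focal length for a target horizontal field of view follows from inverting 2·atan(w / 2f), and the f-number sets the diffraction spot size, whose Airy disk diameter is about 2.44·λ·N. The sketch below compares that spot with the pixel pitch; the two-pixel threshold, the 550 nm wavelength, and all example values are assumptions for illustration.

```python
import math


def focal_length_for_fov_mm(sensor_width_mm: float, hfov_deg: float) -> float:
    """Focal length giving the requested horizontal FOV on a rectilinear lens focused at infinity."""
    return sensor_width_mm / (2.0 * math.tan(math.radians(hfov_deg) / 2.0))


def airy_disk_um(f_number: float, wavelength_um: float = 0.55) -> float:
    """Diameter of the Airy disk (to the first dark ring) for a diffraction-limited lens."""
    return 2.44 * wavelength_um * f_number


def diffraction_limited(f_number: float, pixel_pitch_um: float, wavelength_um: float = 0.55) -> bool:
    """Assumption: treat the lens as diffraction-limited once the Airy disk spans more than two pixels."""
    return airy_disk_um(f_number, wavelength_um) > 2.0 * pixel_pitch_um


sensor_width_mm, pitch_um = 5.568, 2.9  # hypothetical sensor
print(f"focal length for 60 deg HFOV: {focal_length_for_fov_mm(sensor_width_mm, 60.0):.2f} mm")
for n in (2.8, 8.0):
    print(f"f/{n}: Airy disk {airy_disk_um(n):.1f} um, diffraction-limited: {diffraction_limited(n, pitch_um)}")
```

Small pixels make this trade-off visible early: stopping down for depth-of-field eventually blurs detail across neighboring pixels, so the f-number should be chosen together with the sensor’s pixel size.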

Applications and Real-world Impacts

The implications of lens and sensor matching extend to various real-world applications:

- Autonomous vehicles: For self-driving cars, precision in visual input is non-negotiable. A sensor-lens mismatch can lead to misinterpretation of road signs or obstacles, endangering lives.

- Augmented reality: In AR glasses, an optimal lens-sensor match ensures that digital overlays align perfectly with the real world, enhancing user experience.

- Industrial automation: Robots, guided by visual input, need accurate images to perform tasks like assembly, welding, or painting.

Note that sensor-lens matching is critical in every embedded vision application. The applications above are simply examples where a mismatch has a particularly high impact.

Achieving the Perfect Match

Understanding the application is the first step. Is the system going to be used for close-up inspections, such as PCB examination, or for broader views, such as in agricultural drones? Parameters like working distance, resolution requirement, depth-of-field, and operating wavelength spectrum come into play.

Once the application is clear, selecting the right image sensor depends on pixel size, resolution, and sensor format. The lens choice follows, considering focal length, f-number, and image circle size.

The matching process isn’t just a technical detail; it’s the bedrock of the efficacy of embedded vision systems. As technology advances and the applications of embedded vision expand, ensuring this synergy becomes not just important, but imperative.

How can TechNexion Help in Sensor-lens Matching?

TechNexion’s portfolio consists of a wide range of cameras, from 1MP to 13MP, in varying sizes. To ensure that our customers choose the right sensor-lens combination, we guide them throughout the camera integration process. With our imaging and engineering expertise, we know how to do sensor-lens matching the right way. Learn more about our embedded vision cameras here.