In an era where reality seems increasingly malleable, the emergence of deepfake technology has catapulted us into uncharted territory. Deepfakes, synthetic digital media generated by artificial intelligence to manipulate images, audio, or video, pose a profound threat to the fabric of truth and trust in our society.

The allure of deepfake technology lies in its ability to create hyper-realistic content, often indistinguishable from authentic media. With sophisticated algorithms and ample training data, creators can seamlessly superimpose faces onto bodies, alter speech patterns, and fabricate entire scenarios, all with alarming accuracy. But while deepfakes may seem innocuous at first glance, the implications of their unchecked proliferation are far-reaching and deeply troubling.

Currently, the primary use of deepfake technology is to create media portraying public figures in situations that never actually happened, saying things they never actually said. In the future, how will you know that the person you are speaking to on a Zoom call really is your friend, relative, or business colleague? Perhaps we will need biometric technology to authenticate ourselves on phone and video calls. Looking further out, will we be creating deepfakes of family members so we can continue talking with them over a video link after they die?

One of the most pressing concerns surrounding deepfakes is the glaring inadequacy of current detection technology. Ironically, because much of the software used to detect deepfakes is similar to the software used to create them, some of the best providers of deepfake detection also provide the software to create deepfakes. Despite concerted efforts by researchers and tech giants, the arms race between creators and detectors remains decidedly lopsided. As deepfake algorithms evolve and adapt, conventional methods of detection struggle to keep pace. While strides have been made in the development of detection tools, the sheer volume and sophistication of deepfake content render these efforts largely ineffective. The result? A landscape where truth becomes increasingly elusive, and the burden of discerning fact from fiction falls squarely on the shoulders of an unsuspecting public.

The ramifications of this technological arms race extend far beyond mere entertainment or novelty. The weaponization of deepfakes for malicious purposes poses a grave threat to societal stability and democratic discourse. In an age where information spreads at the speed of light, the potential for deepfakes to sow discord, manipulate public opinion, and undermine trust in institutions is staggering. From fabricated political speeches to falsified evidence, the implications of deepfake-driven disinformation campaigns are nothing short of chilling. Moreover, the democratization of deepfake technology only exacerbates these risks. With increasingly accessible tools and tutorials available online, the barrier to entry for would-be propagandists and malicious actors has never been lower. As a result, the proliferation of deepfakes is not merely a theoretical concern but a very real and present danger that demands immediate attention.

Addressing the threat of deepfakes requires a multifaceted approach that combines technological innovation with robust regulatory frameworks and media literacy initiatives. While advancements in detection algorithms are essential, they must be complemented by policies that hold creators and disseminators of malicious deepfakes accountable. Moreover, empowering individuals with the critical thinking skills necessary to navigate an increasingly digital landscape is paramount in mitigating the impact of deepfake-driven disinformation. In the face of this looming crisis, complacency is not an option. The unchecked proliferation of deepfakes represents a ticking time bomb for society, one whose detonation could have far-reaching and irreversible consequences. Only by confronting this threat head-on, with vigilance, innovation, and a steadfast commitment to truth, can we hope to safeguard the integrity of our discourse and preserve the foundations of democracy for generations to come.

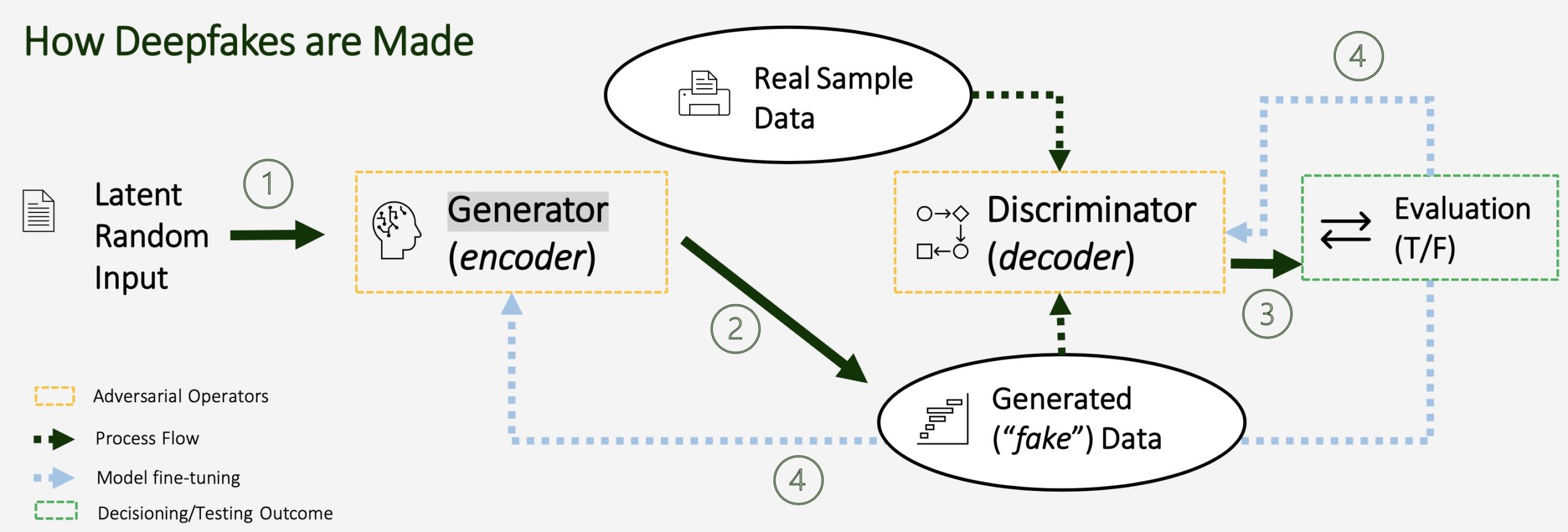

Deepfakes can take many forms, from simple face swaps between real people to generated images, expressions, and actions that modify real instances, all with potentially pernicious downstream effects. The challenge in detecting deepfakes and AI-modified images lies in the efficacy of generative adversarial networks (GANs), which are trained through three interlocking steps:

- Generator Network Creation: The generator produces images or videos resembling a target person’s face by initially generating random images and progressively learning to create more realistic ones throughout the training process.

- Discriminator Network Training: The discriminator is trained to distinguish between real and generated content using a dataset that includes both real and synthetic images. Over time, it improves its ability to differentiate between the two.

- Adversarial Training: The generator creates realistic images to fool the discriminator, while the discriminator improves its ability to distinguish between real and generated images. This ongoing adversarial process results in continuous improvement for both, making the generated images increasingly difficult to differentiate from real ones.

Put simply, as the generator’s generative capabilities get stronger, the discriminator’s discriminating capabilities get stronger too, which is why those best equipped to produce deepfakes are also the ones best equipped to detect them.
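The tug-of-war described above can be made concrete with the two loss functions commonly used to train GANs. The sketch below is illustrative only: the function names and the discriminator scores are hypothetical, and it assumes the widely used binary cross-entropy formulation rather than any particular deepfake product's training objective. It shows that more convincing fakes lower the generator's loss while raising the discriminator's.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: low when real samples score near 1 and fakes near 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Generator loss: low when the discriminator scores fakes as real (near 1)."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator scores: the estimated probability a sample is real.
d_real = [0.9, 0.8, 0.95]
weak_fakes = [0.1, 0.2, 0.05]    # the discriminator easily spots these
strong_fakes = [0.6, 0.7, 0.55]  # the discriminator is partly fooled

for label, fakes in (("weak fakes", weak_fakes), ("strong fakes", strong_fakes)):
    print(label,
          "discriminator loss:", round(discriminator_loss(d_real, fakes), 3),
          "generator loss:", round(generator_loss(fakes), 3))
```

Because each network's gain is the other's loss, improving one side creates the training signal that improves the other.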

Below is a diagram that describes this “adversarial” training process:

- Latent Random Input is a sample start value that goes into the generator to prompt it to create a piece of fake content

- Generator creates a piece of content based on the random input which is then sent to the discriminator

- Discriminator receives both the fake generated data and the real sample data from a stored set and attempts to discriminate one from the other. It then sends its output to an evaluator mechanism that determines whether the discriminator correctly distinguished the fake images from the real ones.

- Evaluation and model fine-tuning then take place based on how well the discriminator and generator performed. The generator’s performance is tuned to better generate images that will pass the discriminator’s test. The discriminator’s performance is tuned to better identify the images that the generator creates.
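The four components above can be sketched as a toy training loop. This is a minimal illustration under strong assumptions, not a deepfake system: the "content" is a single number instead of an image, the generator and discriminator are one-line linear models instead of deep neural networks, and every name and value (`train_step`, `params`, the learning rate, the Gaussian "real" data) is hypothetical. Toy setups like this can oscillate rather than settle, so the example shows the mechanics of each round and does not claim convergence.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def mean(values):
    return sum(values) / len(values)

def train_step(p, rng, batch=64, lr=0.05, real_mean=4.0, real_std=0.5):
    """One adversarial round. p holds generator (a, b) and discriminator (w, c)."""
    eps = 1e-12  # guards against log(0)

    # 1. Latent random input: noise that seeds the generator.
    z = [rng.gauss(0.0, 1.0) for _ in range(batch)]
    # 2. Generator turns noise into fake samples: G(z) = a*z + b.
    fake = [p["a"] * zi + p["b"] for zi in z]
    # Real samples stand in for the stored set (assumed here to be Gaussian).
    real = [rng.gauss(real_mean, real_std) for _ in range(batch)]

    # 3. Discriminator scores every sample: D(x) = sigmoid(w*x + c).
    d_real = [sigmoid(p["w"] * x + p["c"]) for x in real]
    d_fake = [sigmoid(p["w"] * x + p["c"]) for x in fake]

    # 4a. Evaluation and discriminator tuning (binary cross-entropy gradient step).
    d_loss = (-mean([math.log(max(d, eps)) for d in d_real])
              - mean([math.log(max(1.0 - d, eps)) for d in d_fake]))
    grad_w = (mean([(d - 1.0) * x for d, x in zip(d_real, real)])
              + mean([d * x for d, x in zip(d_fake, fake)]))
    grad_c = mean([d - 1.0 for d in d_real]) + mean(d_fake)
    p["w"] -= lr * grad_w
    p["c"] -= lr * grad_c

    # 4b. Generator tuning: re-score the fakes with the updated discriminator
    #     and move (a, b) so that the fakes look more "real" to it.
    d_fake = [sigmoid(p["w"] * x + p["c"]) for x in fake]
    g_loss = -mean([math.log(max(d, eps)) for d in d_fake])
    grad_a = mean([(d - 1.0) * p["w"] * zi for d, zi in zip(d_fake, z)])
    grad_b = mean([(d - 1.0) * p["w"] for d in d_fake])
    p["a"] -= lr * grad_a
    p["b"] -= lr * grad_b
    return d_loss, g_loss

rng = random.Random(0)  # fixed seed so runs are repeatable
params = {"a": 1.0, "b": 0.0, "w": 0.0, "c": 0.0}
for step in range(200):
    d_loss, g_loss = train_step(params, rng)
print("parameters:", params)
print("last discriminator loss:", d_loss, "last generator loss:", g_loss)
```

In the very first round the discriminator learns that larger values look more real, and the generator responds by shifting its output upward. Production systems follow the same alternating pattern with far larger models and image data.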

Rudy Burger

Founder & Managing Partner, Woodside Capital Partners

Akhilesh Shridar

Senior Analyst, Woodside Capital Partners

Access the full report “Deep Fakes – WCP Computer Vision Spotlight”.