This blog post was originally published at Expedera’s website. It is reprinted here with the permission of Expedera.

Most NPUs available today are not actually optimized for AI processing. Rather, they are variations of earlier CPU, GPU, or DSP designs. Every neural network has different processing and memory requirements and poses unique processing challenges that make those derivative NPU architectures a less-than-ideal choice. Expedera’s Origin™ is a purpose-built AI processing architecture that focuses on the primitives that make up neural network layers and features packet-based processing optimized for different networks and workloads.

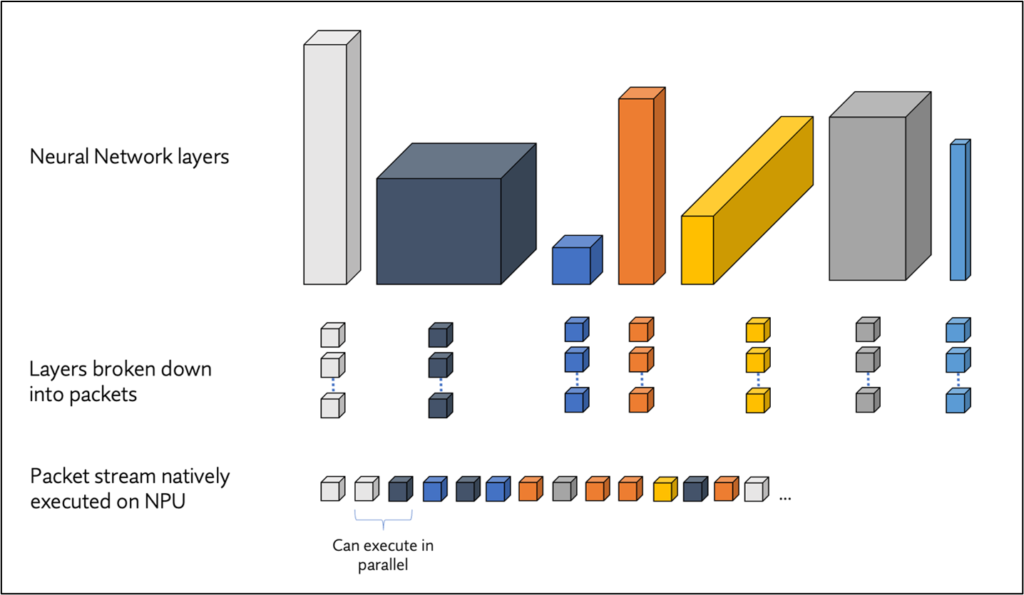

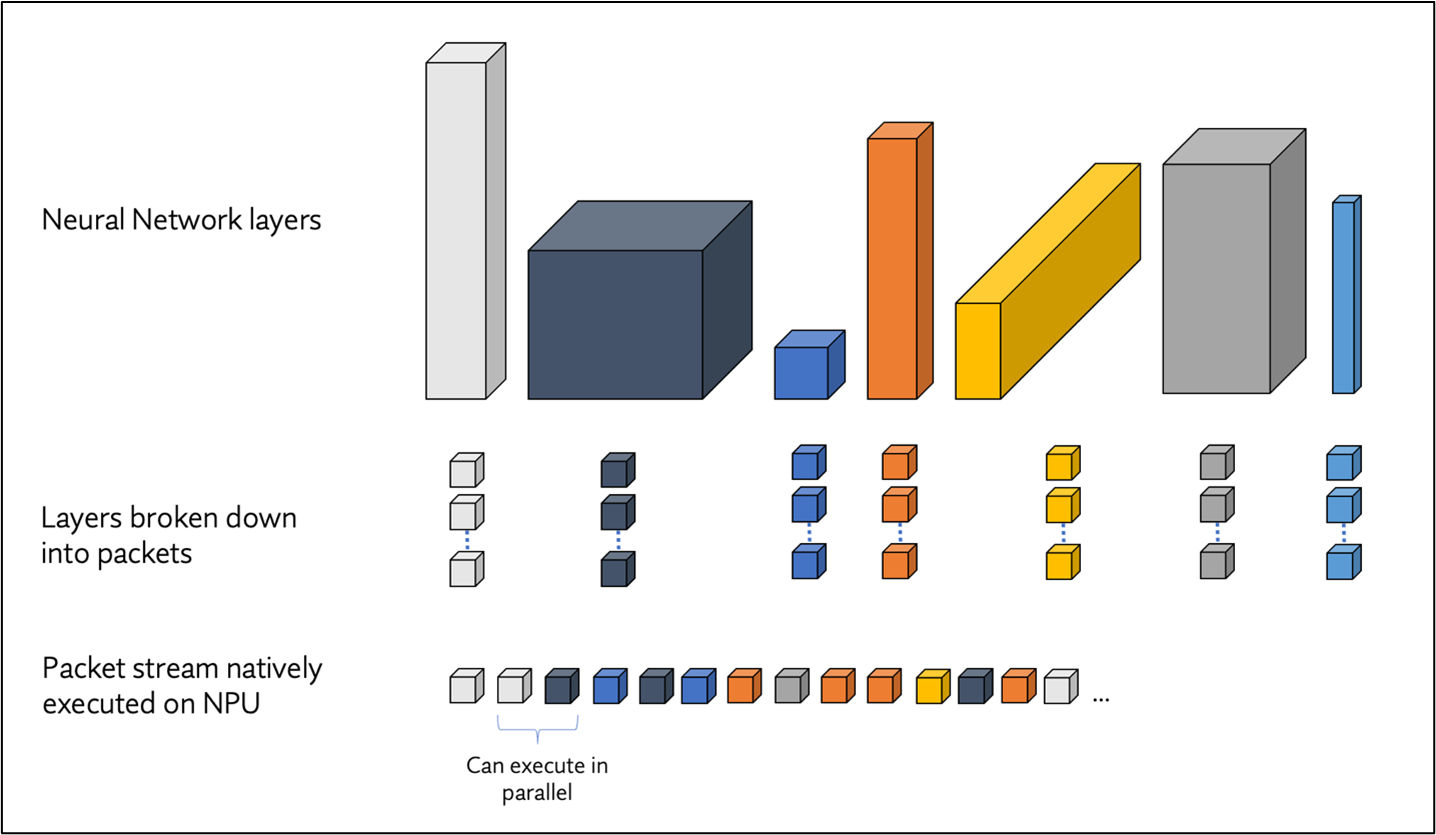

Let’s take a more relatable approach to understanding a neural network. At a very high level, a neural network is a series of layers, each with its own characteristics. These characteristics are best visualized as a series of 3D blocks, with some layers being ‘taller,’ ‘wider,’ or ‘deeper’ than others.

Typical AI processors struggle with the variation between the layers of an AI network. They are designed to handle consistent memory and processing loads, so layer-to-layer variation leads to low utilization and to inefficiencies such as excess power consumption, delayed results, and a degraded user experience. A more efficient NPU solution is needed, and that’s where Expedera’s packet-based solution comes in, offering a superior alternative to traditional processors.
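To make the utilization problem concrete, here is a minimal sketch that assumes a hypothetical fixed 16×16 MAC array onto which each layer’s input-channel by output-channel weight matrix is tiled. It is a generic textbook model of a fixed-shape accelerator, not a description of any specific NPU (Expedera’s or anyone else’s); the function name `array_utilization` and the layer shapes are illustrative assumptions.

```python
from math import ceil

def array_utilization(in_ch: int, out_ch: int, rows: int = 16, cols: int = 16) -> float:
    """Fraction of a hypothetical rows x cols MAC array doing useful work
    when a layer's (in_ch x out_ch) weight matrix is tiled onto it."""
    tiles = ceil(in_ch / rows) * ceil(out_ch / cols)  # passes needed through the array
    useful_macs = in_ch * out_ch                       # MAC slots the layer actually needs
    return useful_macs / (tiles * rows * cols)         # used slots / provisioned slots

# Hypothetical layers with different "shapes": (input channels, output channels)
layers = {"stem": (3, 32), "middle": (64, 64), "head": (100, 20)}

for name, (cin, cout) in layers.items():
    print(f"{name:>6}: {array_utilization(cin, cout):.0%} of the array busy")
```

With these made-up shapes, the square 64→64 layer keeps every MAC busy, while the 3→32 layer uses about 19% of the array and the 100→20 layer about 56%, even though the hardware is identical.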

So what is a packet, and why does it matter? Think of a packet as a very small, efficient slice of a path through a neural network. Expedera breaks down the layer computations so that a small bundle of data (the packet), which includes all of its required dependencies, can traverse an entire defined portion of the network. These packets can be reordered and dispatched to any available execution unit. This simple yet effective approach simplifies connections, optimizes memory access at any scale, and ultimately boosts efficiency and performance significantly, providing an alternative to the inefficiencies of typical NPUs.
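The idea of a packet carrying its own dependencies through several layers resembles the general technique often called layer fusion with tiling. The minimal sketch below illustrates that general idea on a chain of 1-D convolutions; it is not Expedera’s packetization algorithm, and the functions `conv1d_valid`, `layer_by_layer`, and `packetized`, as well as the tiny integer weights, are hypothetical. Each packet is a slice of the input plus the extra “halo” values the downstream layers need, so it can travel through every layer on its own and still produce exactly the same result as a layer-at-a-time schedule.

```python
def conv1d_valid(x: list[int], w: list[int]) -> list[int]:
    """1-D 'valid' convolution (correlation): the output is len(w) - 1 shorter."""
    k = len(w)
    return [sum(w[j] * x[i + j] for j in range(k)) for i in range(len(x) - k + 1)]

def layer_by_layer(x: list[int], layers: list[list[int]]) -> list[int]:
    """Conventional schedule: each layer finishes over the whole input before the next starts."""
    for w in layers:
        x = conv1d_valid(x, w)  # the full intermediate activation map is materialized here
    return x

def packetized(x: list[int], layers: list[list[int]], tile: int) -> list[int]:
    """Hypothetical packet-style schedule: each small slice of the input, plus the
    halo of extra inputs it depends on, is pushed through *all* layers at once."""
    halo = sum(len(w) - 1 for w in layers)  # extra inputs each output tile depends on
    out_len = len(x) - halo
    out: list[int] = []
    for start in range(0, out_len, tile):
        stop = min(start + tile, out_len)
        packet = x[start:stop + halo]         # the packet carries its own dependencies
        for w in layers:
            packet = conv1d_valid(packet, w)  # only about tile + halo values are live
        out.extend(packet)                    # exactly stop - start final outputs
    return out

# Illustrative three-layer network and input
layers = [[1, 2, 1], [1, -1, 0], [2, 0, 1]]
x = list(range(20))
assert packetized(x, layers, tile=4) == layer_by_layer(x, layers)
```

Because each packet is self-contained, the iterations of the loop over `start` could be reordered or spread across several execution units without changing the output, which mirrors the reorderable, dispatchable property described in the paragraph above.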

A key advantage of packets is the ability to optimize how activations are computed and stored. Typical NPUs process one layer at a time and must store all of a layer’s output activations until the next layer has consumed them. By breaking each layer into packets, Expedera enables multiple layers to be processed concurrently. In this packet-based traversal of the network, an activation is removed from memory as soon as it is no longer needed, which decreases the number of cycles required to transfer activations to and from external memory (DDR). This approach can minimize and even eliminate activation moves to external memory.
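A back-of-the-envelope calculation shows why holding a whole layer’s activations is costly. The sketch assumes a hypothetical 112×112×64 int8 activation map, a 3×3 convolution, and a 512 KiB on-chip buffer; none of these numbers come from Expedera, and `buffer_bytes` is an illustrative helper.

```python
def buffer_bytes(rows: int, width: int, channels: int, bytes_per_elem: int = 1) -> int:
    """Bytes needed to hold `rows` rows of an activation map (int8 by default)."""
    return rows * width * channels * bytes_per_elem

# Hypothetical feature map and on-chip SRAM budget (illustrative numbers only)
H, W, C = 112, 112, 64
SRAM_BYTES = 512 * 1024

full_layer = buffer_bytes(H, W, C)  # layer-at-a-time: the whole map must be held
packet = buffer_bytes(8 + 2, W, C)  # 8 output rows plus 2 halo rows for a 3x3 conv

for label, size in [("full layer", full_layer), ("packet", packet)]:
    where = "fits on chip" if size <= SRAM_BYTES else "spills to DDR"
    print(f"{label:>10}: {size:>7,} bytes -> {where}")
```

Under these assumptions the full map needs 802,816 bytes and would spill to DDR, while an 8-row packet with its 2 halo rows needs 71,680 bytes and stays on chip.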

Optimizing network traversal through packets increases MAC utilization by allowing computations and memory accesses to proceed in parallel. In other words, the Expedera NPU delivers more operations per second for a given frequency and number of MAC cores. Higher MAC utilization, in turn, increases throughput without additional bandwidth or compute.
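The link between utilization and delivered throughput is simple arithmetic: delivered operations per second equal the number of MACs times the clock frequency times the fraction of cycles the MACs do useful work, with each MAC conventionally counted as two operations (a multiply and an add). The sketch assumes a hypothetical engine with 4,096 MACs at 1 GHz; `effective_tops` is an illustrative helper, not a vendor formula.

```python
def effective_tops(num_macs: int, freq_hz: float, utilization: float) -> float:
    """Delivered tera-operations per second, counting each MAC as 2 ops (multiply + add)."""
    return 2 * num_macs * freq_hz * utilization / 1e12

# Hypothetical engine: 4,096 MACs clocked at 1 GHz
peak = effective_tops(4096, 1e9, 1.0)
for util in (0.4, 0.8):
    delivered = effective_tops(4096, 1e9, util)
    print(f"utilization {util:.0%}: {delivered:.2f} of {peak:.2f} peak TOPS")
```

Raising utilization from 40% to 80% doubles delivered throughput (about 3.28 to 6.55 TOPS here) with the same MAC count and clock, which is the sense in which higher utilization adds throughput without adding compute.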

Without changing the accuracy or the math behind the neural network, packets allow Expedera to process neural networks in a manner that is more processor-, memory-, and power-efficient. Particularly noteworthy, this extended routing of packets through the network lets Expedera minimize I/O operations, so packets directly address the data movement that typically dominates the power and latency costs of neural network inference.

Besides the inherent performance and power advantages, packets also offer the following advantages to Expedera users:

- No accuracy sacrifices — the packetization process does not degrade network accuracy at all. AI models infer with exactly the accuracy they were trained to.

- Process your trained models as-is — Expedera’s software stack analyzes your network(s) and packetizes them based on their unique characteristics. Models are processed exactly as trained, with no retraining required.

- No learning required — Packetization is handled within the Expedera hardware/software stack without the need for you to learn complicated custom compilation languages.

- No network limits — Origin supports a variety of text, video, audio, and other networks, including popular LLM, LVM, RNN, and CNN types.

Expedera’s Origin with packet technology is more power- and area-efficient, while maintaining much higher utilization, than lesser NPU alternatives on the market, and it has been the hardware optimization of choice for more than 10 million devices shipped by manufacturers worldwide.