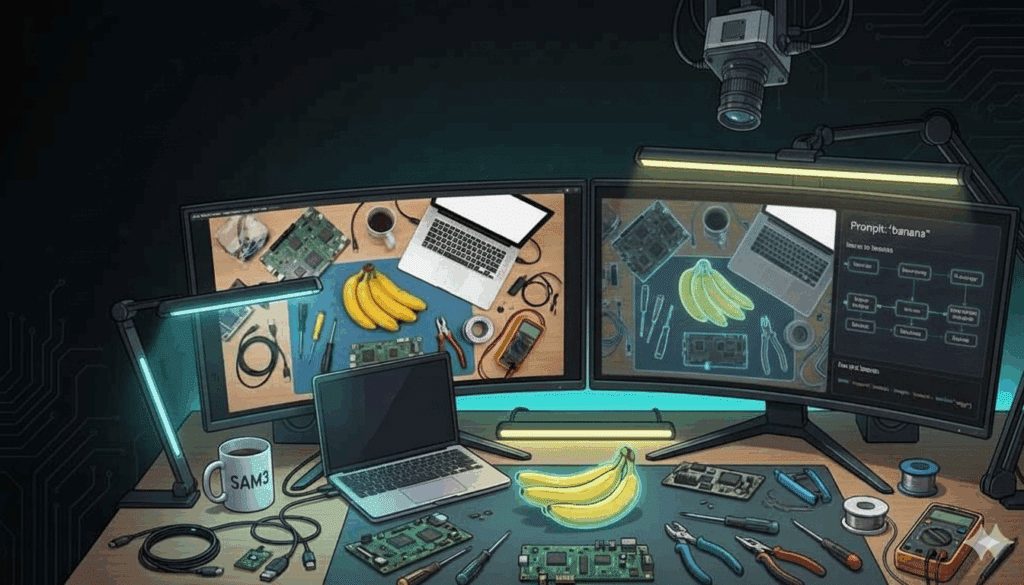

Quality training data, especially segmented visual data, is a cornerstone of building robust vision models. Meta’s recently announced Segment Anything Model 3 (SAM3) is a potential game-changer in this domain. SAM3 is a unified model that can detect, segment, and track objects in images and videos using both text and visual prompts. Instead of painstakingly clicking on and labeling objects one by one, you can now simply tell SAM3 what you want to segment (e.g., “red baseball cap”), and it will identify and mask all matching objects in an image or throughout a video. This capability represents a leap forward in open-vocabulary segmentation and promises to accelerate how we create and use vision datasets.

TL;DR:

- SAM3 can segment and track multiple objects across video frames from a simple text description.

- Meta is releasing the SAM3 model weights and dataset under an open license, enabling rapid experimentation and broad deployment.

- Using SAM3 to scale data annotation is likely to have a major impact on AI training pipelines.

From SAM1 and SAM2 to SAM3: What’s Changed?

SAM3 builds on Meta’s Segment Anything journey, which began with SAM1 in early 2023 and continued with SAM2 in 2024. The original Segment Anything Model (SAM1) introduced a powerful interactive segmentation tool that generated masks from visual prompts (such as points or boxes) on static images. SAM2 extended these capabilities to video, allowing a user to segment an object in one frame and track it throughout the video. However, both SAM1 and SAM2 were limited to visual prompts rather than textual ones: they required a human to indicate which object to segment (e.g., by clicking on it), and they typically handled one object per prompt.

SAM3’s breakthrough turns it from a geometry-focused tool into a concept-level vision foundation model. Instead of being constrained to a fixed set of labels or a single region of interest (ROI), SAM3 can take a short noun phrase (e.g., “yellow school bus”) or an image exemplar and automatically find all instances of that concept in the scene. Prior models might handle generic labels like “car” or “person” but stumble on more specific descriptions (“yellow school bus with black stripe”); SAM3 can accept such detailed text inputs and segment accordingly. Meta reports that it recognizes over 270,000 unique visual concepts and can segment them on the fly, an enormous jump in vocabulary size.

SAM3’s segmentation is exhaustive. Given a textual concept prompt, it returns all matching objects at once, each with its own mask and ID. This is a fundamental shift from earlier Segment Anything versions, which returned one mask per prompt. In practical terms, SAM3 performs open-vocabulary instance detection and segmentation simultaneously. For example, prompt it with “vehicle” on a street scene, and SAM3 should identify every car, truck, or motorbike present with separate masks, with no extra training required.
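Because SAM3 returns every matching instance with its own mask and ID, a common next step when building a dataset is to merge those masks into a single instance label map (0 for background, otherwise the instance ID). The sketch below does not call SAM3; it assumes a hypothetical output format (a list of dicts with `id`, `score`, and a binary `mask` stored as nested lists), and the `min_score` threshold is an illustrative choice. Check the facebookresearch/sam3 README for the real output structure before adapting it.

```python
from typing import Dict, List

# Hypothetical mask format: nested lists, 1 = object pixel, 0 = background.
Mask = List[List[int]]


def build_instance_map(instances: List[Dict], height: int, width: int,
                       min_score: float = 0.5) -> List[List[int]]:
    """Merge per-instance binary masks into one instance label map.

    `instances` is an assumed format: [{"id": int, "score": float, "mask": Mask}].
    Instances scoring below `min_score` are skipped.
    """
    label_map = [[0] * width for _ in range(height)]
    kept = [inst for inst in instances if inst["score"] >= min_score]
    # Paint lower-scoring instances first so higher-scoring ones win overlaps.
    for inst in sorted(kept, key=lambda d: d["score"]):
        mask: Mask = inst["mask"]
        for y in range(height):
            for x in range(width):
                if mask[y][x]:
                    label_map[y][x] = inst["id"]
    return label_map


if __name__ == "__main__":
    # Toy 3x4 "vehicle" result: two confident instances, one low-confidence one.
    detections = [
        {"id": 1, "score": 0.92, "mask": [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0]]},
        {"id": 2, "score": 0.81, "mask": [[0, 0, 0, 1], [0, 0, 1, 1], [0, 0, 0, 0]]},
        {"id": 3, "score": 0.20, "mask": [[0, 0, 0, 0], [0, 0, 0, 0], [1, 1, 1, 1]]},
    ]
    for row in build_instance_map(detections, height=3, width=4):
        print(row)
    # [1, 1, 0, 2]
    # [1, 1, 2, 2]
    # [0, 0, 0, 0]
```

Painting in ascending score order is one simple way to resolve overlapping masks; other pipelines keep overlaps by storing one mask per instance instead of a single map.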

Implications for Edge AI and Training Data Pipelines

For teams building edge AI and vision products, SAM3’s capabilities translate into tangible benefits:

- Faster, Smarter Data Annotation: SAM3 can dramatically speed up the creation of labeled datasets for new vision tasks. Since it supports zero-shot segmentation of virtually any concept, you can feed it unannotated images or video frames and simply specify the objects of interest in plain language. The model returns segmentation masks for all instances of that object, which engineers can then refine or verify. This greatly reduces manual labeling effort for segmentation tasks, and the resulting masks can bootstrap the training of a smaller model. Because Meta is releasing SAM3’s model weights and the large SA-Co dataset under an open license, practitioners have a strong foundation to build on.

- Open-Vocabulary on the Edge: While SAM3 itself may be too large to deploy on a low-power edge device, its open-vocabulary recognition enables a new workflow. You can use SAM3 as a “teacher” model to label data in any domain, even for niche or custom object categories, and then fine-tune compact models for on-device use. Roboflow (an annotation and deployment platform) has already integrated SAM3 into its ecosystem to support exactly this: you can prompt SAM3 via its cloud API or Playground, get segmentations, and then train a smaller model that suits your edge constraints. Even though SAM3 itself runs in the cloud or on a powerful local machine, it can greatly reduce the burden of annotating edge-case data for models that run on edge devices.

- Unified Solution (Detection + Segmentation + Tracking): Traditionally, a product might have relied on separate components: an object detector trained on specific classes, a per-class or generic segmentation model, and a tracking algorithm to link detections from frame to frame. SAM3 offers all of these in one foundation model. This unified approach can shorten development cycles and reduce integration complexity for computer vision pipelines. It is now feasible to prototype features like “find and blur any logo that appears in my security camera feed” or “track that car through the intersection video” largely by writing a prompt, without collecting a custom dataset or writing tracking code from scratch.
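The teacher-student workflow described in these points usually ends with exporting the teacher’s masks in a format a compact model can train on. The following is a minimal sketch that converts binary instance masks into YOLO-style detection labels (`class x_center y_center width height`, normalized to [0, 1]). The input format, the `min_score` threshold, and the single `class_id` are hypothetical choices for illustration, not part of SAM3’s API. Low-scoring instances are returned for human review instead of being exported, mirroring the refine-or-verify step.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical mask format: nested lists, 1 = object pixel, 0 = background.
Mask = List[List[int]]


def mask_to_bbox(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Return inclusive (x_min, y_min, x_max, y_max) pixel bounds, or None if empty."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not ys:
        return None
    return min(xs), min(ys), max(xs), max(ys)


def to_yolo_lines(instances: List[Dict], class_id: int, width: int, height: int,
                  min_score: float = 0.5) -> Tuple[List[str], List[int]]:
    """Convert assumed SAM3-style instances into YOLO detection label lines.

    Returns (label_lines, ids_needing_review). Instances below `min_score`
    are not exported; their IDs are returned so a human can verify them.
    """
    lines: List[str] = []
    review: List[int] = []
    for inst in instances:
        if inst["score"] < min_score:
            review.append(inst["id"])
            continue
        box = mask_to_bbox(inst["mask"])
        if box is None:  # empty mask: nothing to label
            continue
        x0, y0, x1, y1 = box
        # +1 because the bounding pixel indices are inclusive.
        w, h = x1 - x0 + 1, y1 - y0 + 1
        xc, yc = x0 + w / 2, y0 + h / 2
        lines.append(f"{class_id} {xc / width:.6f} {yc / height:.6f} "
                     f"{w / width:.6f} {h / height:.6f}")
    return lines, review


if __name__ == "__main__":
    car = {"id": 1, "score": 0.9,
           "mask": [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]}
    maybe = {"id": 2, "score": 0.3,
             "mask": [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 1, 1], [0, 0, 1, 1]]}
    labels, review = to_yolo_lines([car, maybe], class_id=0, width=4, height=4)
    print(labels)  # ['0 0.250000 0.250000 0.500000 0.500000']
    print(review)  # [2]
```

Bounding boxes are the simplest target for a compact detector; a segmentation student would instead need polygon or mask labels, which this sketch does not produce.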

Meta has also released the Segment Anything Playground, a web-based demo platform for trying SAM3 on your own images and videos with no setup, and has partnered with annotation tools to make fine-tuning easier. The aim is to let developers plug SAM3 into their workflows quickly, whether for data preparation or direct analysis.

Why SAM3 Matters for the Physical AI Revolution

Beyond traditional vision tasks, SAM3 is a stepping stone toward vision AI that you can query in natural language about real-world scenes, enabling new forms of human-AI interaction in physical environments.

Meta’s announcement also introduced SAM3D, a companion set of models for single-image 3D reconstruction. With SAM3D, the system can take a single 2D image and produce a 3D model of an object or even a human body within it. This is a notable development for physical AI because it translates visual understanding into spatial, physical understanding. For example, SAM3D can generate 3D shapes of real objects (say, a piece of furniture or a monument) from just a photo. This ability has immediate uses in AR/VR – Meta is already using it to power a “View in Room” feature on Marketplace, letting shoppers visualize how a lamp or table would look in their actual living space. It could also aid robotics and simulation, where understanding the 3D form of objects from vision is critical. Together, SAM3 and SAM3D underscore a trend: AI that perceives not just in abstract pixels, but in terms of objects, concepts, and physical structures – much like a human would when navigating the real world.

A Significant Leap Forward

SAM3 represents a significant leap forward for the computer vision community. It elevates segmentation from a manual, one-object-at-a-time chore to an intelligent, scalable service: “Segment Anything” now truly means anything you can describe. For engineers and product teams, SAM3 offers a powerful new toolbox – whether it’s speeding up dataset creation, simplifying vision model pipelines, or enabling natural language interfaces for visual tasks. Meta’s open-sourcing of the model and data, and integrations with platforms like Roboflow, mean that this technology is readily accessible for experimentation and deployment. As we build AI products that increasingly interface with the messy, unpredictable physical world, the need for adaptable vision systems will only grow. SAM3’s open-vocabulary, multi-domain segmentation is a big step in that direction, pointing toward a future where teaching an AI “what to see” is as straightforward as telling a colleague what you’re looking for – and where generating high-quality training data is faster and easier than ever before.

To Probe Further:

- Meta (Nov 2025). New Segment Anything Models Make it Easier to Detect Objects and Create 3D Reconstructions. Meta Newsroom. https://about.fb.com/news/2025/11/new-sam-models-detect-objects-create-3d-reconstructions

- Meta AI (2025). Introducing Meta Segment Anything Model 3 and Segment Anything Playground. Meta AI Blog. https://ai.meta.com/blog/segment-anything-model-3/

- Meta Research (2025). SAM 3: Segment Anything with Concepts. ICLR 2026 submission, OpenReview. https://openreview.net/forum?id=r35clVtGzw

- James Gallagher and Jacob Solawetz (Nov 2025). What is Segment Anything 3 (SAM 3)? Roboflow Blog. https://blog.roboflow.com/what-is-sam3/

- Frederik Hvilshøj (Oct 2025). Segment Anything Model 3 (SAM 3): What to Expect from the Next Generation of Foundation Segmentation Models. Encord Blog. https://encord.com/blog/segment-anything-model-3

- Trevor Lynn (Nov 2025). Launch: Use Segment Anything 3 (SAM 3) with Roboflow. Roboflow Blog. https://blog.roboflow.com/sam3/

- Harsh Vardhan (Nov 2025). Meta SAM 3: The AI That Understands ‘Find Every Red Hat’. Medium. https://medium.com/@harsh.vardhan7695/meta-sam-3-the-ai-that-understands-find-every-red-hat-b489d341977b

- facebookresearch/sam3 (Nov 2025). README and documentation for SAM3. GitHub. https://github.com/facebookresearch/sam3