AI Chips Market by AI Chip Type. For full data, refer to “AI Chips for Data Centers and Cloud 2025-2035: Technologies, Market, Forecasts”.

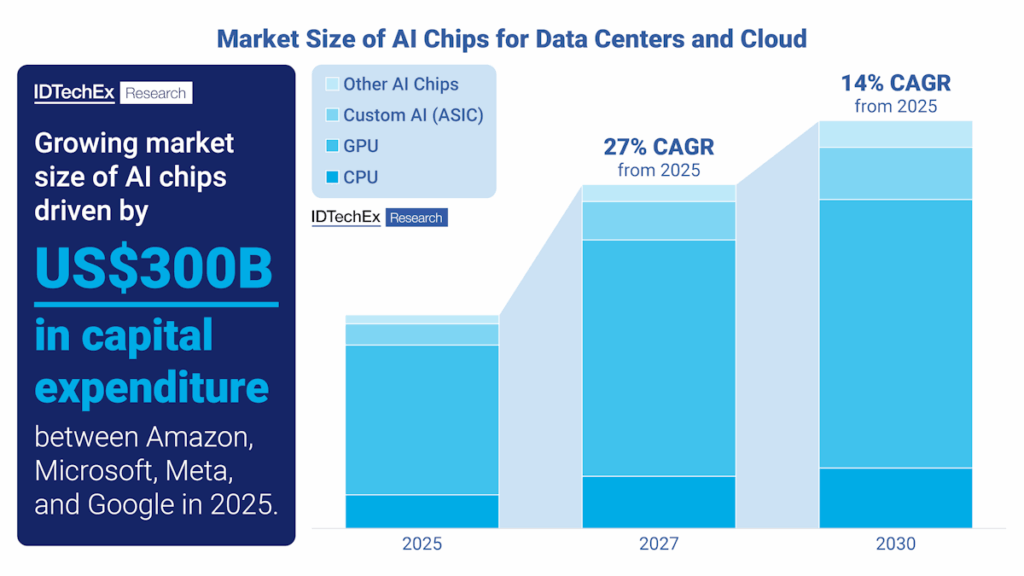

By 2030, IDTechEx forecasts that the deployment of AI data centers, the commercialization of AI, and the growing performance requirements of large AI models will push the already soaring AI chips market to over US$400 billion. However, the underlying technology must evolve to keep pace with demand for more efficient computation, lower costs, higher performance, massively scalable systems, faster inference, and domain-specific computation.

IDTechEx’s latest report, “AI Chips for Data Centers and Cloud 2025-2035: Technologies, Market, Forecasts”, characterizes data center and cloud AI chip technologies, key players, and markets. The report provides market intelligence on the AI chips space. It covers graphics processing units (GPUs), AI-capable central processing units (CPUs), custom AI application-specific integrated circuits (ASICs), and other AI chips, profiles over 50 industry players, and includes market forecasts from 2025 to 2035.

The opportunity for AI chips

Frontier artificial intelligence (AI) has attracted hundreds of billions of US dollars in global investment year after year, as governments and hyperscalers race to lead in domains such as drug discovery and autonomous infrastructure. Graphics processing units (GPUs) and other AI chips have been instrumental in the performance gains of leading AI systems, providing the compute needed for deep learning in data centers and cloud infrastructure. However, global data center capacity is expected to reach hundreds of gigawatts (GW) in the coming years, and investment is reaching hundreds of billions of US dollars. As a result, concerns about the energy efficiency and cost of current hardware have increasingly come into the spotlight.

Graphics Processing Units (GPUs) currently dominate the AI chips market

The largest AI systems are massive scale-out high-performance computing (HPC) and AI systems, and these rely heavily on GPUs. They tend to be hyperscaler AI data centers and supercomputers, both of which can deliver exaFLOPS of performance, either on-premises or over distributed networks. NVIDIA has seen remarkable success in recent years with its Hopper (H100/H200) chips and its recently released Blackwell (B200/B300) chips. AMD has also produced competitive chips with its MI300 series processors (MI300X/MI325X). Over the last few years, Chinese players have also been developing their own solutions in response to US export controls that restrict the sale of advanced US chips to China.

These high-performance GPUs continue to adopt the most advanced semiconductor technologies, which are explored in detail in the new IDTechEx report “AI Chips for Data Centers and Cloud 2025-2035: Technologies, Market, Forecasts”. The report examines these technologies in depth. Topics include high-bandwidth memory (HBM) from memory providers (e.g., Samsung, SK Hynix, and Micron Technology). They also include advanced 2.5D and 3D semiconductor packaging, transistor technologies, and chiplet technologies from foundries and integrated device manufacturers (IDMs) (e.g., TSMC, Samsung Foundry, and Intel Foundry).

Custom AI chips used by hyperscalers and Cloud Service Providers (CSPs)

High-performance GPUs have been integral to training AI models; however, they face several limitations. These include a high total cost of ownership (TCO), vendor lock-in risk, and low utilization for AI-specific operations. They can also be overkill for some inference workloads. As a result, an emerging strategy among hyperscalers is to adopt custom AI ASICs from ASIC designers such as Broadcom and Marvell.

These custom AI ASICs have cores purpose-built for AI workloads, are cheaper per operation, are specialized for particular workloads (e.g., transformers or recommender systems), and offer energy-efficient inference. They also give hyperscalers and CSPs full-stack control and room for differentiation without sacrificing performance. Evaluations of potential risks, key partnerships, player activity, benchmarking, and technology overviews are available in “AI Chips for Data Centers and Cloud 2025-2035: Technologies, Market, Forecasts”.

Emergence of other AI chips for data center and cloud

Both large vendors and AI chip startups have released alternative AI chips that offer benefits over incumbent GPU technologies. These chips use both established and novel architectures to better suit AI workloads, with the aim of lowering costs and making AI computation more efficient. Some large chip vendors, such as Intel, Huawei, and Qualcomm, have designed AI accelerators (e.g., Gaudi, Ascend 910, Cloud AI 100). Like GPUs, these use heterogeneous arrays of compute units, but they are purpose-built to accelerate AI workloads. They offer a balance of performance, power efficiency, and flexibility for specific application domains.

AI chip startups often take a different approach, deploying cutting-edge architectures and fabrication techniques. These include dataflow-controlled processors, wafer-scale packaging, spatial AI accelerators, processing-in-memory (PIM) technologies, and coarse-grained reconfigurable arrays (CGRAs). Various companies (Cerebras, Groq, Graphcore, SambaNova, Untether AI, and others) have successfully launched such systems for data centers and cloud computing. These systems can perform exceptionally well, especially in scale-up environments. However, they may struggle in massive scale-out environments compared with high-performance GPUs. IDTechEx’s report offers comprehensive benchmarking, comparisons, key trends, technology breakdowns, challenges, and player activity.

The wide range of technologies involved in designing and manufacturing AI chips leaves broad scope for future innovation across the semiconductor supply chain. Government policy and heavy investment show strong interest in pushing frontier AI to new heights, and meeting this demand will require very large volumes of AI chips in AI data centers. IDTechEx forecasts that the AI chips market will reach US$453 billion by 2030, growing at a CAGR of 14% between 2025 and 2030.
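As a rough illustration of how the two headline figures relate, the compound annual growth rate (CAGR) relation V_2030 = V_2025 × (1 + r)^5 can be inverted to estimate the 2025 market size implied by the quoted US$453 billion and 14%. The sketch below is illustrative arithmetic only. It assumes annual compounding over exactly five periods (2025 to 2030), and the function names are hypothetical. The implied base is derived from the rounded figures in the text and is not a number published in the report.

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Invert end = start * (1 + cagr) ** years to recover the starting value."""
    return end_value / (1.0 + cagr) ** years


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` periods apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the text (US$ billions). Assumption: 2025 -> 2030 is five
# annual compounding periods and the 14% rate is applied exactly.
END_2030 = 453.0
RATE = 0.14
YEARS = 2030 - 2025

base_2025 = implied_start_value(END_2030, RATE, YEARS)
print(f"Implied 2025 market: ~US${base_2025:.0f} billion")

# Round-trip check: recomputing the CAGR from the implied base recovers 14%.
print(f"Round-trip CAGR check: {cagr(base_2025, END_2030, YEARS):.2%}")
```

Running it prints an implied 2025 market of roughly US$235 billion and a round-trip CAGR of 14.00%. Because the published 14% is rounded, the report's actual 2025 figure may differ somewhat from this back-calculated value.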

IDTechEx’s latest report, “AI Chips for Data Centers and Cloud 2025-2035: Technologies, Market, Forecasts”, offers an independent analysis of the AI chip market for data centers and the cloud. The report includes benchmarking of current and emerging technologies, technology breakdowns of over 50 industry players, and key trends. It covers existing and forthcoming hardware architectures, advanced node technologies, and advanced semiconductor packaging, along with information on the supply chain, investments, and policy. Granular revenue forecasts from 2025 to 2035 for the data center and cloud AI chips market are provided, segmented by AI chip type.

To find out more about this new IDTechEx report, including downloadable sample pages, please visit www.IDTechEx.com/AIChips.

For the full portfolio of semiconductors, computing and AI market research available from IDTechEx, please see www.IDTechEx.com/Research/Semiconductors.

Jameel Rogers

Technology Analyst, IDTechEx

About IDTechEx

IDTechEx provides trusted independent research on emerging technologies and their markets. Since 1999, we have been helping our clients to understand new technologies, their supply chains, market requirements, opportunities and forecasts. For more information, visit www.IDTechEx.com.