This blog post was originally published at Qualcomm’s website. It is reprinted here with the permission of Qualcomm.

Our papers, demos, workshops and tutorial continue our leadership in generative AI and learning systems

-

At Qualcomm AI Research, we are advancing AI to make its core capabilities — perception, reasoning and action — ubiquitous across devices.

-

During the annual Computer Vision and Pattern Recognition Conference (CVPR), we showcase our leadership in cutting-edge generative AI and learning systems.

-

Through hands-on demos, workshops and tutorials, we feature our innovative AI applications and technologies, efficient vision models, low-power computer vision and power-efficient neural networks.

Qualcomm AI Research is gearing up to participate in the Computer Vision and Pattern Recognition Conference (CVPR) 2025, kicking off June 11. We’re not just attending; we’re making a splash with eight main track papers, three co-located workshop papers, three accepted demos, three co-organized workshops and one co-organized tutorial. Plus, attendees won’t want to miss visiting our booth #1201.

With CVPR boasting a 22% paper acceptance rate, it’s clear that this conference is where game-changing research happens. You get a front-row seat to groundbreaking advancements in generative AI for images and videos, XR, automotive innovations, computational photography and robotic vision.

Papers

At top-tier conferences like CVPR, meticulously peer-reviewed research defines the cutting edge and drives the field forward. We’re excited to highlight a few of our accepted papers that push the boundaries of computer vision, spanning two key areas: generative AI and learning systems.

Our papers contributing to generative AI include:

- DiMA: Distilling Multi-modal Large Language Models for Autonomous Driving

- SwiftEdit: Lightning Fast Text-guided Image Editing via One-step Diffusion

- SharpDepth: Sharpening Metric Depth Predictions Using Diffusion Distillation

- HyperNet Fields: Efficiently Training Hypernetworks without Ground Truth by Learning Weight Trajectories

- ADAPTOR: Adaptive Token Reduction for Video Diffusion Transformers

These papers introduce innovative ways to generate structured outputs using generative models and diffusion distillation techniques.

From enhancing autonomous driving systems to enabling real-time image editing and streamlining video generation, they mark significant progress in applying generative AI to complex, real-world problems.

Autonomous driving requires safe and reliable motion planning, especially in rare and complex “long-tail” scenarios. While recent end-to-end systems have started using large language models (LLMs) as planners to better handle these edge cases, running LLMs in real time can be computationally intensive. Our paper, “DiMA: Distilling Multi-modal Large Language Models for Autonomous Driving,” introduces a novel approach that combines the efficiency of a lightweight AI planner with the rich world knowledge of an LLM. The result? State-of-the-art performance on key benchmarks and an impressive 80% reduction in collision rates.

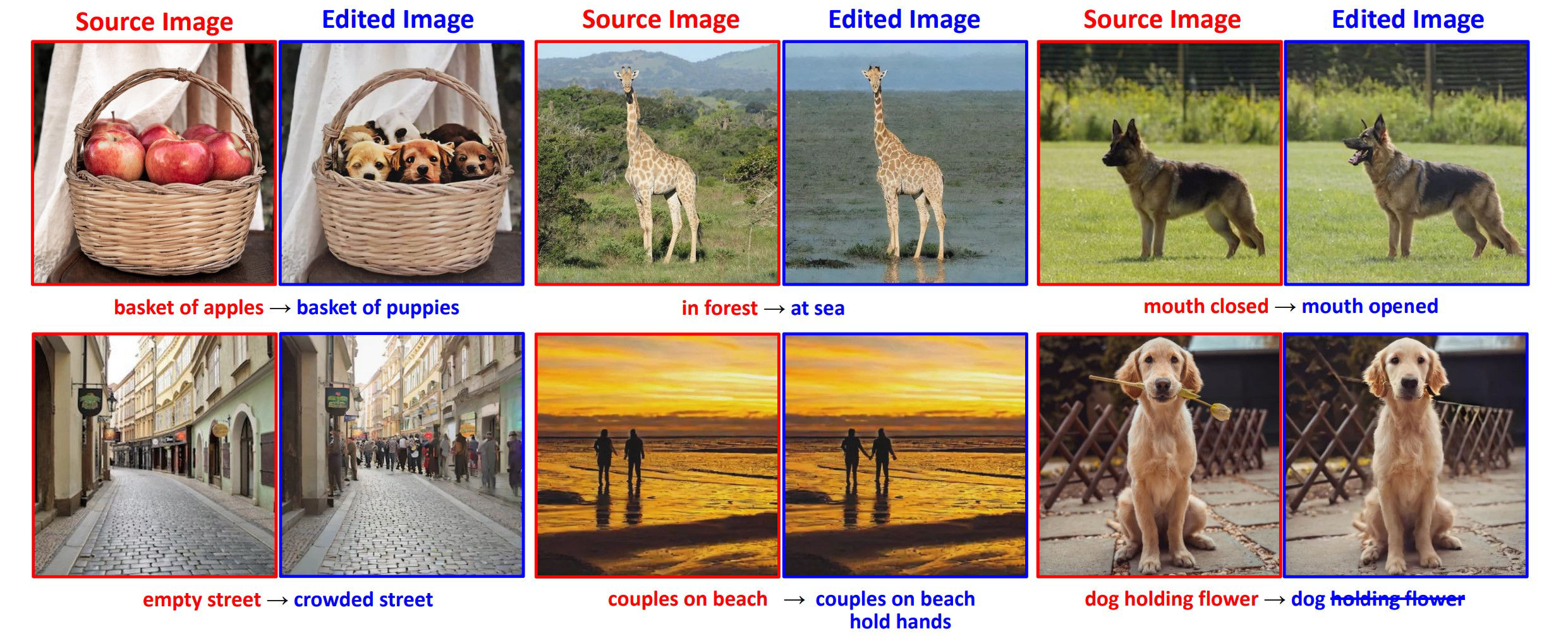

Text-guided image editing has come a long way, but most methods are too slow for real-world use. Our paper, “SwiftEdit: Lightning Fast Text-guided Image Editing via One-step Diffusion,” proposes a lightning-fast editing tool that delivers high-quality results in a fraction of a second, which is over 50 times faster than previous approaches. With a one-step inversion framework and a novel mask-guided editing technique, SwiftEdit makes real-time, on-device image editing a reality. Check out our project page.

Discriminative models offer accurate depth but often lack detail, while generative models produce sharper depth maps with lower accuracy. Our paper, “SharpDepth: Sharpening Metric Depth Predictions Using Diffusion Distillation,” bridges this gap by combining the precision of metric depth estimation with the fine-grained detail of generative methods. It delivers both high accuracy and sharp boundaries, making it ideal for real-world applications that demand high-quality depth perception.

Many generative modeling tasks require models to adapt to specific conditions or user inputs — meaning their parameters must change dynamically based on the data. Hypernetworks offer a promising solution but training them typically demands ground-truth weights for every sample, which is both time-consuming and resource-intensive. Our paper, “HyperNet Fields: Efficiently Training Hypernetworks without Ground Truth by Learning Weight Trajectories,” introduces a new approach that removes this bottleneck. By modeling the entire training trajectory of a task network, it predicts weights at any convergence state using only gradient consistency. We show its effectiveness on tasks like personalized image generation and 3D shape reconstruction, all without relying on per-sample ground truth.

Transformers are powerful and scalable, but their high computational cost, especially for video content, remains a challenge. Our paper, “ADAPTOR: Adaptive Token Reduction for Video Diffusion Transformers,” presents a lightweight token reduction method that compresses video data by leveraging temporal redundancy. Seamlessly integrating into existing video diffusion transformers, ADAPTOR cuts compute by 2.85 times while improving output quality and exceling at capturing motion.

Our contributions to learning systems include the following papers:

- Any3DIS: Class-Agnostic 3D Instance Segmentation by 2D Mask Tracking

- Low-Rank Adaptation in Multilinear Operator Networks for Security-Preserving Incremental Learning

- CustomKD: Customizing Large Vision Foundation for Edge Model Improvement via Knowledge Distillation

- Tripartite Weight-Space Ensemble for Few-Shot Class-Incremental Learning

- Learning Optical Flow Field via Neural Ordinary Differential Equation

- Improving Optical Flow and Stereo Depth Estimation by Understanding Learning Difficulties

These papers advance the field of learning systems through innovations in segmentation, tracking, incremental learning and knowledge distillation, tackling challenges such as 3D perception, secure model adaptation and robust optical flow estimation.

Many 3D instance segmentation methods struggle with over-segmentation, producing redundant proposals that hinder downstream tasks. Our paper, “Any3DIS: Class-Agnostic 3D Instance Segmentation by 2D Mask Tracking,” tackles this with a 3D-aware 2D mask tracking module to ensure consistent object masks across frames. Instead of merging all visible points, our 3D mask optimization module smartly selects the best views using dynamic programming, and then refines proposals for better accuracy. Tested on ScanNet200 and ScanNet++, our method improves performance across class-agnostic, open-vocabulary and open-ended segmentation tasks.

In security-sensitive applications, decrypting data for processing poses serious risks. While polynomial networks can operate directly on encrypted data using homomorphic encryption, they often suffer from catastrophic forgetting in continual learning. Our paper, “Low-Rank Adaptation in Multilinear Operator Networks for Security-Preserving Incremental Learning,” presents a new solution: a low-rank adaptation method paired with gradient projection memory. This approach maintains full compatibility with encrypted inference while significantly improving performance in incremental learning tasks, pushing the boundaries of secure, privacy-preserving AI.

Large vision foundation models (LVFMs) like DINOv2 and CLIP are powerful, but their potential to boost lightweight edge models has been underused, until now. Our paper, “CustomKD: Customizing Large Vision Foundation for Edge Model Improvement via Knowledge Distillation,” introduces a simple yet effective knowledge distillation method that bridges the gap between massive LVFMs and compact models. By aligning teacher and student features, CustomKD makes it easier for edge models to learn from their larger counterparts. This leads to significant performance gains in real-world scenarios like domain adaptation and low-label learning, all without labeled data.

In many cases, a few examples are available. Still, learning new classes from just a few examples is tough since models often forget old ones or overfit. Our method in “Tripartite Weight-Space Ensemble for Few-Shot Class-Incremental Learning,” Tri-WE, remedies this by blending past and current models in weight space and boosting knowledge distillation with simple data mixing. This leads to top-tier performance across benchmarks like miniImageNet, CUB200 and CIFAR100.

Optical flow estimation plays a vital role in computer vision, and our latest research brings fresh momentum to the field, as described in two complementary papers. The first paper, “Learning Optical Flow Field via Neural Ordinary Differential Equation,” introduces a novel approach that models optical flow as a dynamic equilibrium process using neural ODEs, allowing the model to adaptively refine predictions based on the data, rather than relying on a fixed number of steps. The second paper, “Improving Optical Flow and Stereo Depth Estimation by Understanding Learning Difficulties,” tackles the learning challenges in optical flow and stereo depth estimation by identifying pixel-wise difficulty and addressing it with two new loss functions: difficulty balancing and occlusion avoiding. Together, these works offer both architectural innovation and smarter training strategies, leading to consistent improvements across standard benchmarks.

Technology demonstrations

We’re bringing our cutting-edge research to life at CVPR 2025, and attendees are invited to see it in action at booth #1201. They can explore how our latest innovations in generative AI and computer vision are pushing boundaries and solving real-world challenges.

From mobile-first generative models to intelligent avatars and edge-ready vision systems, our live demos showcase the practical power of AI beyond the lab. Here’s a sneak peek at what you can experience:

Generative AI in action:

- World’s first on-device image-to-video generation on mobile (official CVPR demo)

- Video-to-video generation on mobile (official CVPR demo)

- Text-to-3D for gaming

- Agentic AI as an assistant

- Multi-modal visual Q&A assistant

- Gaussian splatting for data augmentation

- Efficient multi-modal memory storing and retrieval

- 3D talking avatar with facial expression

Advanced computer vision:

- Efficient segmentation for edge devices (official CVPR demo)

- 3D Gaussian splatting scanning on mobile

- Lightweight open-vocabulary vision on mobile

- Portable dash cam with driver monitoring

- Relightable photorealistic avatar on mobile

- Gaussian splatting for 3D avatar

Whether you’re into generative creativity, real-time perception or edge AI, there’s something here to inspire. Come see how we’re turning research into reality, live and hands-on.

Workshops

We’re not just showcasing demos, we’re also co-organizing several exciting workshops at CVPR 2025 that dive deep into the future of generative AI, efficient vision models and real-world applications. If you’re attending, don’t miss these:

- Workshop on Vision-based Assistants in the Real World

Explore how vision-powered agents can operate in dynamic, real-world environments using advances in simulation, generative methods and foundation models. - Workshop on Efficient Large Vision Models (eLVM)

Tackle the challenge of scaling vision models without the massive compute cost by exploring compact architectures, adaptive computation and efficient generative modeling. - Workshop on Efficient Deep Learning for Computer Vision (ECV)

Dive into the latest in energy-efficient vision systems, from hardware-aware design to data-efficient training and benchmarking for low-power applications. Winners from the LPCV challenge will also present their solution and receive laptops powered by Snapdragon processors sponsored by Qualcomm Technologies. - SyntaGen Workshop – Harnessing Generative Models for Synthetic Visual Datasets

Discover how generative models can be used to create high-quality synthetic data for training and evaluating vision systems, boosting performance where real data is scarce.

These workshops are a great opportunity to connect with researchers, share ideas and shape the future of computer vision.

Tutorial

As neural networks grow larger, especially in generative AI, their hunger for compute and energy becomes a real bottleneck. But what if we could shrink that cost without shrinking performance?

Join us at CVPR 2025 for our co-organized tutorial on Power-Efficient Neural Networks Using Low-Precision Data Types and Quantization. We’ll dive into how low-precision computation, when paired with smart quantization techniques and hardware support, can dramatically cut down energy use and latency — making AI more sustainable and edge ready.

Expect hands-on examples, practical guidance and a deep dive into the latest quantization methods that preserve accuracy while slashing compute. Whether you’re building for the cloud or the edge, this tutorial is your roadmap to efficient, scalable AI.

Join us in expanding the limits of generative AI and computer vision

This is a brief overview of the key highlights from our participation at CVPR 2025. If you are attending the event, we encourage you to visit the Qualcomm Technologies booth #1201 to learn more about our research initiatives, observe our demonstrations and explore career opportunities in AI with us.

Fatih Porikli

Sr. Director, Technology, Qualcomm Technologies, Inc.