A funny thing is happening in the edge AI world: some of the most important product decisions you’ll make this year won’t be about TOPS, sensor resolution, or which transformer variant to deploy. They’ll be about memory—how much you can get, how much it costs, and whether you can ship the exact part you designed around.

If that sounds abstract, here’s a very concrete, engineer-facing signal: on December 1, 2025, Raspberry Pi raised prices on several Pi 4 and Pi 5 SKUs explicitly citing an “unprecedented rise in the cost of LPDDR4 memory,” and said the increases help secure memory supply in a constrained 2026 market. For many teams, Pis aren’t “consumer gadgets”—they’re prototyping platforms, lab fixtures, vision pipeline testbeds, and quick-turn demos. When the cost of your dev fleet and internal tooling moves like this, it’s a canary.

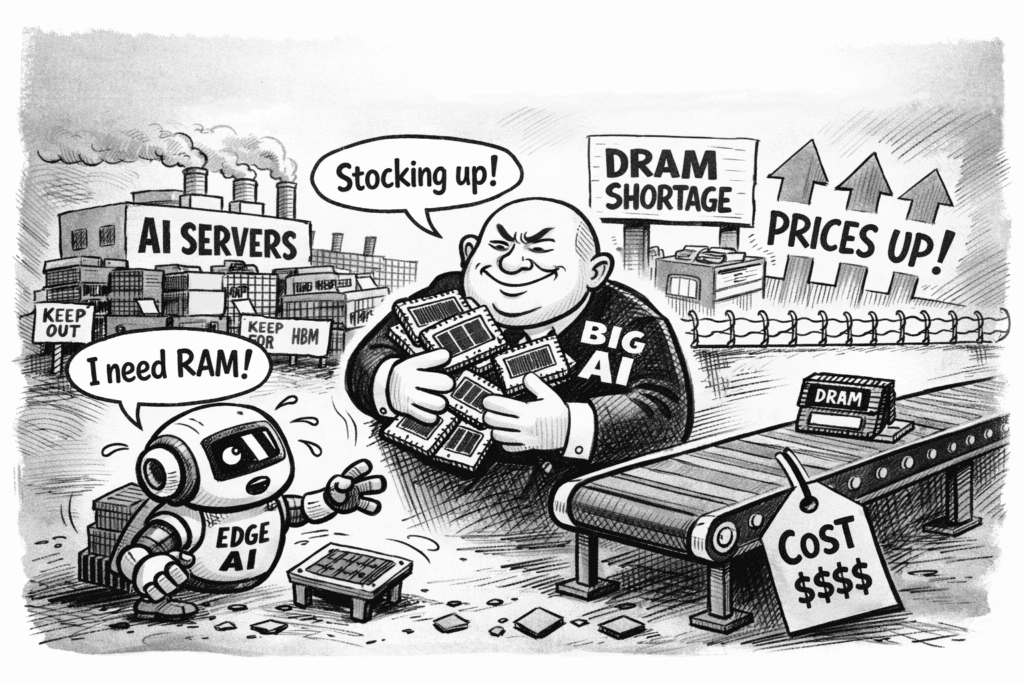

Zoom out and the picture gets sharper: the memory market is splitting into “AI infrastructure gets what it needs” and “everyone else adapts.” EE Times calls this the “Great Memory Pivot,” and—crucially—it’s being amplified by stockpiling behavior. Major OEMs are buffering memory inventory to reduce risk, which in turn worsens shortages and pushes prices higher.

For edge AI and computer vision teams, the takeaway isn’t “PCs are expensive.” It’s that we’re heading into a period where memory behaves less like a commodity and more like a capacity-allocated input—and edge products sit uncomfortably close to the blast radius.

The two forces that matter most to edge teams

1) AI infrastructure is crowding out conventional DRAM/LPDDR

The clearest near-term data point comes from TrendForce: conventional DRAM contract prices for 1Q26 are forecast to rise ~55–60% QoQ, driven by DRAM suppliers reallocating advanced nodes and capacity toward server and HBM products to support AI server demand. TrendForce also says server DRAM contract prices could surge by more than 60% QoQ.

Edge implication: even if you never touch HBM, the market dynamics around HBM and server DRAM pull the entire supply chain toward higher-margin, AI-driven segments, tightening availability and raising prices for the memory your edge designs actually use. And in practice, edge teams don’t just experience “higher price”; they experience allocation, lead-time uncertainty, and last-minute substitutions that turn into board spins and slipped launches.
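To translate TrendForce's 55–60% range into product terms, here is a back-of-the-envelope sketch. The 20% memory share of BOM is a hypothetical input, not a TrendForce figure. The model also assumes the contract-price increase passes straight through to your purchase price and that every other BOM line stays flat. Allocation terms and existing contracts often break both assumptions.

```python
def bom_increase(memory_share: float, memory_price_rise: float) -> float:
    """Fractional BOM increase when only memory cost rises.

    memory_share: fraction of total BOM spent on DRAM/LPDDR (hypothetical input).
    memory_price_rise: fractional memory price increase, e.g. 0.55 for +55%.
    Assumes every other BOM line stays flat.
    """
    return memory_share * memory_price_rise


# Hypothetical board where memory is 20% of BOM, using TrendForce's 55-60% QoQ range.
for rise in (0.55, 0.60):
    print(f"memory +{rise:.0%} -> BOM +{bom_increase(0.20, rise):.1%}")
```

With those assumptions, a single quarter's memory move adds roughly 11–12% to the whole BOM. That is the kind of surprise that forces repricing or a redesign.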

2) LPDDR is explicitly called out as staying undersupplied

TrendForce doesn’t just talk about servers. It says LPDDR4X and LPDDR5X are expected to stay undersupplied, with uneven resource distribution supporting higher prices.

That’s directly relevant to edge AI and vision because LPDDR is everywhere in the edge stack: smart cameras and NVRs, robotics compute modules, industrial gateways, in-cabin systems, drones, and many “embedded Linux + NPU” boxes. LPDDR constraints hit you in three ways:

- Capacity: can you get the density you want?

- Cost: can you afford it at scale?

- SKU fragility: can you swap without a redesign if allocation tightens?

Again, the Raspberry Pi move is the engineer-friendly example: they directly attribute price changes to LPDDR4 costs and explicitly mention AI infrastructure competition.

Why edge AI is more sensitive than typical embedded systems

Edge AI and computer vision systems are in the middle of a structural shift: workloads are getting wider and more concurrent, not just more accurate.

A 2022-ish camera pipeline might have been: ISP → detection → tracking. A 2026 product pipeline often includes some mix of: detection + tracking + re-ID + segmentation + multi-camera fusion + privacy filtering + local search/embedding + event summarization. Even when models are “small,” the system-level reality is that you’re holding more intermediate state, more queues, more buffers, and more simultaneous streams.

Three practical reasons memory becomes the choke point:

- Bandwidth limits show up before compute limits. Many edge systems are memory-traffic-bound long before the NPU saturates. “More TOPS” doesn’t help if tensors are waiting on memory.

- Concurrency drives peak usage. You can optimize average footprint and still lose to peak bursts: a model swap, two video streams, a backlog spike, a logging burst—and suddenly you’re in the danger zone (OOM resets, frame drops, tail-latency explosions).

- Soldered-memory designs reduce escape routes. If you ship soldered LPDDR, you can’t treat memory like a field-upgradable afterthought. You either got the config right—or you’re spinning hardware.
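The “bandwidth before compute” point can be checked with the roofline model. This is a standard first-order estimate and our choice of tool, not something the cited sources prescribe. The SoC figures (10 TOPS, 17 GB/s) and the per-operator arithmetic intensities below are hypothetical placeholders. Substitute measured values from your own profiler.

```python
def attainable_ops_per_s(peak_ops: float, bandwidth: float, intensity: float) -> float:
    """Roofline bound: min(compute ceiling, bandwidth * arithmetic intensity).

    peak_ops:  peak accelerator throughput in ops/s
    bandwidth: sustained DRAM bandwidth in bytes/s
    intensity: ops performed per byte moved to/from DRAM
    """
    return min(peak_ops, bandwidth * intensity)


def is_memory_bound(peak_ops: float, bandwidth: float, intensity: float) -> bool:
    # The ridge point is the intensity where the two ceilings meet;
    # below it, memory traffic (not the NPU) sets the speed limit.
    ridge = peak_ops / bandwidth
    return intensity < ridge


# Hypothetical edge SoC: 10 TOPS NPU fed by ~17 GB/s of usable LPDDR bandwidth.
PEAK_OPS = 10e12
BANDWIDTH = 17e9

# Hypothetical per-operator intensities; measure your own with a profiler.
for op, intensity in [("depthwise conv", 20.0), ("large matmul", 2000.0)]:
    tops = attainable_ops_per_s(PEAK_OPS, BANDWIDTH, intensity) / 1e12
    bound = is_memory_bound(PEAK_OPS, BANDWIDTH, intensity)
    print(f"{op}: {tops:.2f} TOPS attainable, memory-bound={bound}")
```

In this sketch the low-intensity layer reaches only 0.34 of the 10 TOPS. Doubling PEAK_OPS leaves that number unchanged. Only more bandwidth or higher intensity (fusion, fewer copies) moves it.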

Stockpiling changes the rules for edge product planning

One of the most important themes in recent reporting is that behavior, not just fundamentals, is amplifying the shortage. EE Times describes large OEMs stockpiling critical components (including memory) to buffer shortages. It explicitly notes that this stockpiling makes shortages worse and pushes prices higher.

This matters for edge companies because stockpiling is a competitive weapon:

- Big buyers secure allocation and smooth out volatility.

- Smaller and mid-sized edge OEMs/ODMs get pushed toward spot markets, last-minute substitutions, and uncomfortable BOM surprises.

- Product teams end up redesigning around what’s available rather than what’s optimal.

In other words: forecasting discipline and supplier relationships start to determine product viability, not just product-market fit.

What this changes in edge AI product decisions

1) “Memory optionality” becomes a design requirement

If you can credibly support multiple densities (or multiple qualified parts) without a full board spin, you reduce existential risk.

Practical patterns:

- PCB/layout options that support more than one density or vendor part

- Firmware that can adapt model scheduling to available RAM

- Feature flags / “degrade gracefully” modes that reduce peak memory without breaking core value
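Here is a minimal sketch of “adapt to available RAM” plus “degrade gracefully.” Core features always run. Optional features are enabled in priority order while they fit a budget. The feature names, footprints and reserve size are all hypothetical. On Linux, `available_bytes` could come from `MemAvailable` in `/proc/meminfo`; it is passed in as a parameter here so the logic stays testable.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

MiB = 1024 * 1024


@dataclass(frozen=True)
class Feature:
    name: str
    peak_bytes: int  # measured worst-case footprint (values below are hypothetical)
    core: bool       # core features must always run


# Listed in priority order; optional features are considered in this order.
FEATURES = [
    Feature("detection", 180 * MiB, core=True),
    Feature("tracking", 40 * MiB, core=True),
    Feature("re_id", 120 * MiB, core=False),
    Feature("segmentation", 260 * MiB, core=False),
    Feature("embedding_search", 200 * MiB, core=False),
]


def select_features(available_bytes: int, reserve_bytes: int,
                    features: Sequence[Feature] = FEATURES) -> Tuple[List[str], int]:
    """Enable all core features, then optional ones in priority order while they fit."""
    budget = available_bytes - reserve_bytes
    enabled = [f for f in features if f.core]
    used = sum(f.peak_bytes for f in enabled)
    if used > budget:
        raise RuntimeError("core features exceed the memory budget on this SKU")
    for f in features:
        if not f.core and used + f.peak_bytes <= budget:
            enabled.append(f)
            used += f.peak_bytes
    return [f.name for f in enabled], used


# A variant with 512 MiB available keeps re-ID but sheds segmentation and search.
names, used = select_features(available_bytes=512 * MiB, reserve_bytes=128 * MiB)
print(names, used // MiB)
```

Summing per-feature peaks is conservative because it assumes the worst cases coincide. That is the safe assumption when running out of memory means a reset. The greedy pass follows priority order rather than searching for the best-packing combination.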

2) Your AI strategy becomes a supply-chain strategy

Teams will increasingly win by shipping memory-efficient capability, not just higher accuracy.

Engineering investments that suddenly have real business leverage:

- Activation quantization and buffer reuse (not just weight compression)

- Streaming/tiled vision pipelines that avoid large live tensors

- Smarter scheduling to prevent worst-case concurrency peaks

- Bandwidth reduction techniques (operator fusion, lower-resolution intermediate features, fewer full-frame copies)
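Buffer reuse pays off because intermediates in a vision pipeline rarely all live at once. The sketch below uses liveness analysis, the general idea behind arena planning in many inference runtimes, to compare two figures. One is a dedicated buffer per tensor. The other is the peak bytes simultaneously alive. The pipeline stages and tensor shapes are hypothetical.

```python
from typing import List, NamedTuple


class Tensor(NamedTuple):
    name: str
    size: int   # bytes
    first: int  # index of the op that produces the tensor
    last: int   # index of the last op that reads it


def naive_bytes(tensors: List[Tensor]) -> int:
    """Footprint if every intermediate keeps a dedicated buffer."""
    return sum(t.size for t in tensors)


def peak_live_bytes(tensors: List[Tensor]) -> int:
    """Bytes simultaneously alive at the busiest op.

    This is a lower bound on the arena size with buffer reuse; real
    planners may need somewhat more due to fragmentation and alignment.
    """
    num_ops = max(t.last for t in tensors) + 1
    return max(
        sum(t.size for t in tensors if t.first <= step <= t.last)
        for step in range(num_ops)
    )


# Hypothetical single-camera pipeline (sizes in bytes, 8-bit tensors).
pipeline = [
    Tensor("frame_1080p_rgb", 1920 * 1080 * 3, first=0, last=1),
    Tensor("resized_640", 640 * 640 * 3, first=1, last=2),
    Tensor("backbone_feat", 80 * 80 * 512, first=2, last=3),
    Tensor("neck_feat", 40 * 40 * 1024, first=3, last=4),
    Tensor("detections", 16_384, first=4, last=5),
]

print("naive:", naive_bytes(pipeline))
print("peak live:", peak_live_bytes(pipeline))
```

Here reuse cuts the requirement from about 12.4 MB to about 7.4 MB. The peak sits at the step where the full-resolution frame and its resized copy coexist, which is exactly the kind of full-frame copy the last bullet targets.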

3) SKU strategy will simplify (whether you like it or not)

In a tight allocation market, too many SKUs become a source of self-inflicted pain. Each memory configuration increases planning complexity, qualification cost, and the probability that one SKU becomes unbuildable.

Many edge companies will converge toward:

- Fewer memory configurations

- Clear “base” and “pro” SKUs

- Longer-term pricing commitments, or else more frequent repricing

4) Prototyping and internal infrastructure costs rise

This is the “engineer tax” that’s easy to miss. If Raspberry Pi prices move because LPDDR moves, your dev boards, test rigs, and in-house tooling budgets are likely to move too. That can slow iteration velocity precisely when teams are trying to ship more complex, more AI-forward products.

The realistic timeline: don’t bet on a quick snap-back

One reason this cycle feels different is that multiple credible sources are describing tightness persisting and prices moving sharply.

Micron’s prepared remarks for its fiscal Q1 2026 earnings call make three arguments. Aggregate industry supply will remain substantially short “for the foreseeable future.” HBM demand strains supply because of a 3:1 trade ratio with DDR5: producing an HBM bit takes roughly three times the wafer capacity of a DDR5 bit. And tightness is expected to persist “through and beyond calendar 2026.” Reuters reporting similarly frames this as more than a one-quarter wobble, describing an AI-driven supply crunch and quoting major players who call the shortage “unprecedented.”

Edge takeaway: plan like this is a multi-quarter design and sourcing constraint, not a temporary annoyance you can outwait.

A pragmatic playbook for edge AI and vision teams

For engineering leads

- Instrument peak memory, not just average. Treat worst-case bursts as first-class test cases.

- Make bandwidth visible. Profile memory traffic and copy counts; optimize data movement early.

- Build a “ship mode.” Define what features can drop (or run less frequently) when memory is constrained.

- Treat memory as a product KPI. Publish memory budgets alongside latency and accuracy.
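Here is a minimal sketch of “instrument peak, not average,” using Python's standard `tracemalloc` module (`reset_peak()` needs Python 3.9+). `process_frame` and the workload are hypothetical stand-ins. Note that `tracemalloc` only sees Python-heap allocations. Native runtime buffers are invisible to it. On Linux, process-level counters such as `VmHWM` in `/proc/<pid>/status` are a coarser alternative, and accelerator or DMA memory may need vendor tools.

```python
import tracemalloc


def process_frame(n_bytes: int) -> None:
    # Stand-in for real per-frame work: allocate a temporary buffer, then drop it.
    buf = bytearray(n_bytes)
    buf[-1] = 1


def measure_peaks(workload):
    """Return (mean per-frame peak, worst per-frame peak) in bytes."""
    peaks = []
    tracemalloc.start()
    try:
        for n_bytes in workload:
            tracemalloc.reset_peak()  # start each frame's high-water mark fresh
            process_frame(n_bytes)
            _, peak = tracemalloc.get_traced_memory()
            peaks.append(peak)
    finally:
        tracemalloc.stop()
    return sum(peaks) / len(peaks), max(peaks)


MiB = 1024 * 1024
# Hypothetical workload: 99 ordinary frames plus one 8 MiB burst (e.g. a model swap).
workload = [1 * MiB] * 99 + [8 * MiB]
mean_peak, worst_peak = measure_peaks(workload)
print(f"mean peak {mean_peak / MiB:.2f} MiB, worst peak {worst_peak / MiB:.2f} MiB")
```

The mean stays near 1 MiB while the worst frame needs over 8 MiB. A memory budget sized from the average would miss the burst that actually causes out-of-memory resets.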

For product and business leads

- Tie roadmap bets to buildability. A feature that requires an unavailable memory configuration is not a feature—it’s a slip.

- Reduce SKU sprawl. Fewer configurations means fewer ways supply can break you.

- Qualify alternates on purpose. Make multi-sourcing part of the schedule, not an emergency scramble.

- Treat allocation like GTM. Your launch plan should include supply assurance milestones, not just marketing milestones.

The punchline

Edge AI is getting smarter, more multimodal, and more “always on.” But the industry is also learning—again—that the constraint that matters is often the one you don’t put on the slide.

In 2026, the teams that win won’t just have better models. They’ll have better memory discipline: designs that tolerate volatility, software that respects bandwidth, and product plans that assume supply constraints are real.

Disclosure: Micron Technology is a member of the Edge AI and Vision Alliance. The company is cited here as one of several sources for public market and supply commentary.

Further Reading:

1GB Raspberry Pi 5 now available at $45, and memory-driven price rises – Raspberry Pi press release, December 2025.

The Great Memory Stockpile – EE Times, January 2026.

Chip shortages threaten 20% rise in consumer electronics prices – Financial Times, January 2026.

Memory Makers Prioritize Server Applications, Driving Across-the-Board Price Increases in 1Q26, Says TrendForce – TrendForce, January 2026.

Micron Technology Fiscal Q1 2026 Earnings Call Prepared Remarks – Micron Technology investor filings, December 2025.

Micron HBM Designed into Leading AMD AI Platform – Micron Technology press release, June 2025.

AI Sets the Price: Why DRAM Shortages Are Rewriting Memory Market Economics – Fusion WorldWide, November 2025.

Samsung likely to flag 160% jump in Q4 profit as AI boom stokes chip prices – Reuters, January 2026.

Memory chipmakers rise as global supply shortage whets investor appetite – Reuters, January 2026.