This blog post was originally published at Intel's website. It is reprinted here with the permission of Intel.

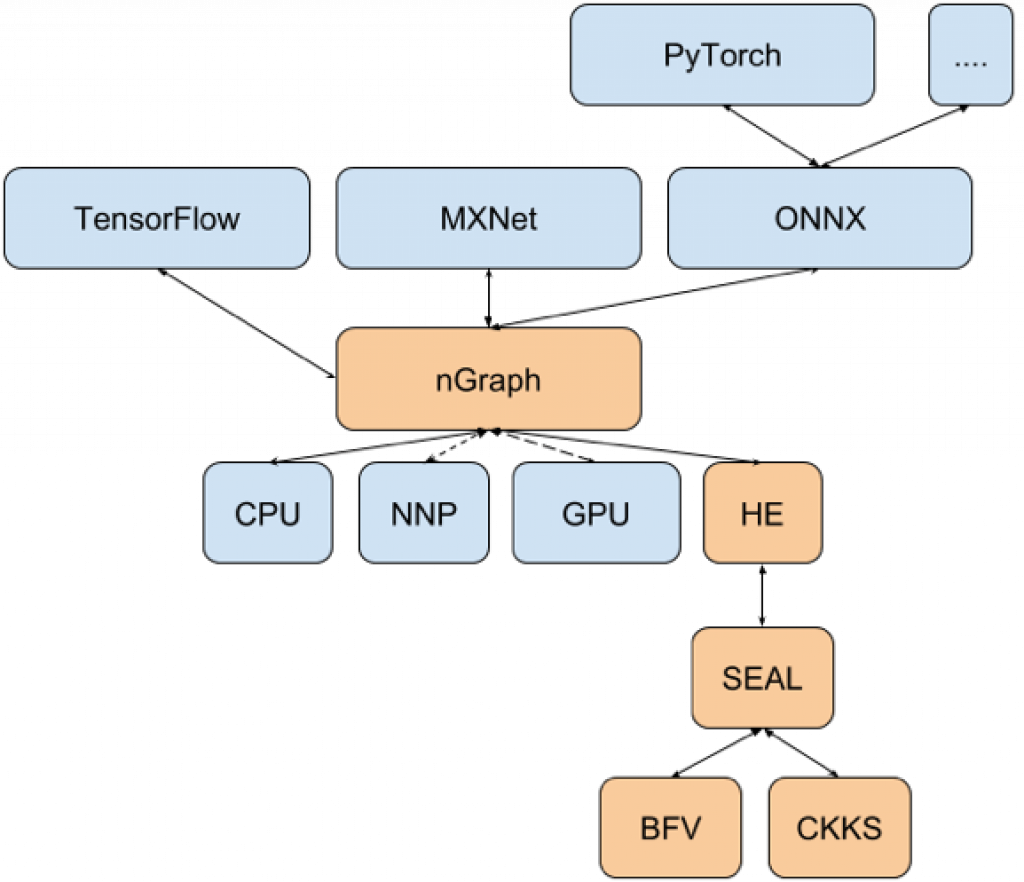

We are pleased to announce the open source release of HE-Transformer, a homomorphic encryption (HE) backend to nGraph, Intel’s neural network compiler. HE allows computation on encrypted data. This capability, when applied to machine learning, allows data owners to gain valuable insights without exposing the underlying data; alternatively, it can enable model owners to protect their models by deploying them in encrypted form. HE-transformer is a research tool that enables data scientists to develop neural networks on popular open-source frameworks, such as TensorFlow*, then easily deploy them to operate on encrypted data.

We are also pleased to announce that HE-Transformer uses the Simple Encrypted Arithmetic Library (SEAL) from Microsoft Research to implement the underlying cryptography functions. Microsoft has just released SEAL as open source, a significant contribution to the community. “We are excited to work with Intel to help bring homomorphic encryption to a wider audience of data scientists and developers of privacy-protecting machine learning systems,” said Kristin Lauter, Principal Researcher and Research Manager of the Cryptography group at Microsoft Research.

Why HE-transformer?

Growing privacy concerns make HE an attractive way to reconcile two seemingly conflicting demands: machine learning requires data, while privacy requirements tend to preclude its use. Recent advances in the field have now made HE viable for deep learning. Currently, however, designing deep learning HE models requires simultaneous expertise in deep learning, encryption, and software engineering. HE-transformer provides an abstraction layer, allowing users to benefit from independent advances in each field. With HE-transformer, data scientists can directly deploy models trained with popular frameworks like TensorFlow, MXNet* and PyTorch*, without worrying about integrating their model into HE cryptographic libraries. Researchers can leverage TensorFlow to rapidly develop new HE-friendly deep learning topologies. Meanwhile, advances in the nGraph compiler are automatically integrated, without any impact on the user.

Current Features

- We currently integrate directly with the nGraph Compiler and runtime for TensorFlow to allow users to run models on encrypted data. We also plan to integrate directly with PyTorch.

- HE-transformer leverages the compute graph representation of nGraph to provide a host of optimizations that are specific to HE, so users automatically benefit where possible. These optimizations include plaintext value bypass, SIMD packing, OpenMP parallelization, and plaintext operations. See our paper for more information.

- HE-transformer is compatible with any model serialized with nGraph and supports a variety of operations, including add, broadcast, constant, convolution, dot, multiply, negate, pad, reshape, result, slice, and subtract. Due to the constraints of HE, supported operations are currently limited to those requiring only addition and multiplication.

- For deep learning frameworks that can export their networks to ONNX, such as PyTorch, CNTK, and MXNet, users can use HE-transformer by first importing their model using the ONNX importer in nGraph, then exporting it in nGraph serialized format, which HE-transformer can then load and run.
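
To make the addition-and-multiplication constraint from the list above concrete, here is a minimal plaintext sketch of what an HE-friendly network can look like. It is not HE-transformer code and performs no encryption. The function names (`dot`, `dense`, `square`, `forward`) and the weights are made up for illustration, and the squaring activation is an assumption borrowed from the CryptoNets design, where it stands in for non-polynomial activations such as ReLU.

```python
# Plaintext illustration only: every step below uses just addition and
# multiplication, the operations an HE backend can evaluate on ciphertexts.
# All names and values here are hypothetical, not part of HE-transformer.

def dot(a, b):
    # Dot product: elementwise multiplies followed by adds.
    return sum(x * y for x, y in zip(a, b))

def dense(x, weights, bias):
    # Fully connected layer; `weights` holds one row per output unit.
    return [dot(x, row) + b for row, b in zip(weights, bias)]

def square(values):
    # Polynomial activation: one multiplication per element, no comparisons.
    return [v * v for v in values]

def forward(x, w1, b1, w2, b2):
    # dense -> square -> dense, with no ReLU, max-pooling, or division.
    return dense(square(dense(x, w1, b1)), w2, b2)

x = [1.0, 2.0]
w1 = [[1.0, 0.0], [0.5, -1.0]]
b1 = [0.0, 1.0]
w2 = [[2.0, 4.0]]
b2 = [0.5]

print(forward(x, w1, b1, w2, b2))  # [3.5]
```

A model written this way maps onto the supported operations listed above (dot, add, multiply), which is why topologies with polynomial activations are often described as HE-friendly.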

Current Performance

HE-transformer incorporates one of the latest breakthroughs in HE, the CKKS encryption scheme. This enables state-of-the-art performance on the CryptoNets neural network using a floating-point model trained in TensorFlow. Sparse or quantized models may yield additional performance benefits.

Future Work

We plan to adopt hybrid schemes that integrate multi-party computation to allow HE-transformer to support a wider variety of neural network models. We also plan to integrate directly with more deep learning frameworks, such as PyTorch. For more information, see our GitHub.

Fabian Boemer

Research Scientist, CTO Office, Artificial Intelligence Products Group, Intel

Casimir Wierzynski

Senior Director, Office of the CTO, Artificial Intelligence Products Group, Intel