Automotive Applications for Embedded Vision

Vision products in automotive applications can make us better and safer drivers

Vision products in automotive applications can serve to enhance the driving experience by making us better and safer drivers through both driver and road monitoring.

Driver monitoring applications use computer vision to ensure that the driver remains alert and awake while operating the vehicle. These systems can monitor head movement and body language for signs that the driver is drowsy and therefore poses a threat to others on the road. They can also watch for distracting behaviors such as texting or eating, responding with a friendly reminder that encourages the driver to refocus on the road.
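
The text does not say how head movement is turned into an alert. A minimal sketch, assuming a hypothetical upstream head-pose estimator that reports pitch and yaw in degrees for each frame, could flag a sustained head-down posture as possible drowsiness and a sustained head-turned-away posture as possible distraction. The names `HeadPose` and `AttentionMonitor`, the reminder wording, and every threshold are illustrative assumptions, not values from any production driver monitoring system.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class HeadPose:
    """Head orientation for one frame, in degrees, from a hypothetical
    upstream head-pose estimator (negative pitch = head tilted down)."""
    pitch: float
    yaw: float


class AttentionMonitor:
    """Flags sustained head-down posture (possible drowsiness) or sustained
    head-turned-away posture (possible distraction) over a sliding window.

    All thresholds are illustrative assumptions, not production values.
    """

    REMINDERS = {
        "drowsy": "You seem tired. Consider taking a break.",
        "distracted": "Please keep your eyes on the road.",
    }

    def __init__(self, fps: int = 30, window_s: float = 2.0,
                 pitch_limit_deg: float = -20.0, yaw_limit_deg: float = 30.0,
                 min_fraction: float = 0.8):
        self.frames = deque(maxlen=int(fps * window_s))
        self.pitch_limit_deg = pitch_limit_deg
        self.yaw_limit_deg = yaw_limit_deg
        self.min_fraction = min_fraction

    def update(self, pose: HeadPose) -> Optional[str]:
        """Add one frame; return 'drowsy', 'distracted', or None."""
        if pose.pitch < self.pitch_limit_deg:
            label = "drowsy"
        elif abs(pose.yaw) > self.yaw_limit_deg:
            label = "distracted"
        else:
            label = "ok"
        self.frames.append(label)  # oldest frame drops out automatically

        # Only decide once the window is full, so a brief glance is ignored.
        if len(self.frames) < self.frames.maxlen:
            return None
        for state in ("drowsy", "distracted"):
            if self.frames.count(state) / len(self.frames) >= self.min_fraction:
                return state
        return None


# Usage: 1 s of head-down frames at 10 fps triggers the drowsiness reminder.
monitor = AttentionMonitor(fps=10, window_s=1.0)
for _ in range(10):
    state = monitor.update(HeadPose(pitch=-30.0, yaw=0.0))
print(AttentionMonitor.REMINDERS.get(state, "no alert"))
```

Requiring most of a full window to agree trades a short detection delay for fewer false alarms from momentary glances at mirrors or instruments.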

In addition to monitoring activities inside the vehicle, exterior applications such as lane departure warning systems can use video with lane detection algorithms to recognize lane markings and road edges and to estimate the car's position within the lane. The driver can then be warned of unintentional lane departures. Solutions also exist for reading roadside warning signs and alerting the driver if they are not heeded, as well as for collision mitigation, blind spot detection, park and reverse assist, self-parking vehicles and event-data recording.
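
The step from detected lane boundaries to a warning can be sketched as follows. This is a minimal illustration, assuming a hypothetical lane-detection stage that already reports the lateral position of each boundary in metres relative to the vehicle centreline; the `LaneObservation` type, both function names, the vehicle half-width, and the warning margin are all assumptions. An active turn signal is used as a simple proxy for "intentional" departure.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LaneObservation:
    """Hypothetical per-frame output of a lane-detection stage. Boundary
    positions are lateral distances in metres in a vehicle-centred frame
    (0 = vehicle centreline, negative = left, positive = right)."""
    left_m: float
    right_m: float
    turn_signal_on: bool


def lane_offset(obs: LaneObservation) -> float:
    """Signed offset of the vehicle centre from the lane centre, in metres.
    Positive means the car sits right of the lane centre."""
    lane_centre = (obs.left_m + obs.right_m) / 2.0
    return -lane_centre


def departure_warning(obs: LaneObservation, half_width_m: float = 0.9,
                      margin_m: float = 0.2) -> Optional[str]:
    """Return 'left' or 'right' when a wheel is within margin_m of (or over)
    a lane boundary and the turn signal is off; otherwise None."""
    if obs.turn_signal_on:
        return None  # signalled lane change: treated as intentional
    left_gap = -half_width_m - obs.left_m   # left wheel to left boundary
    right_gap = obs.right_m - half_width_m  # right wheel to right boundary
    # Warn about whichever side is closest to being crossed.
    side, gap = min(("left", left_gap), ("right", right_gap), key=lambda s: s[1])
    return side if gap < margin_m else None


drifting = LaneObservation(left_m=-2.5, right_m=1.0, turn_signal_on=False)
print(lane_offset(drifting), departure_warning(drifting))  # 0.75 right
```

This sketch uses a static distance threshold for clarity; a fuller system might also use the car's lateral velocity to estimate time to line crossing and smooth detections across frames before warning.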

Eventually, this technology will lead to cars with self-driving capability; Google, for example, is already testing prototypes. However, many automotive industry experts believe that, at least in the near term, the goal of vision in vehicles is not so much to eliminate the driving experience as to make it safer.

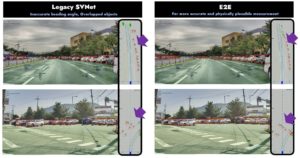

STRADVISION to Unveil Next-Gen ‘3D Perception Network’ and Showcase SVNet Portfolio at CES 2024

SEOUL, South Korea, Dec. 13, 2023 /PRNewswire/ — STRADVISION, an automotive industry pioneer in deep learning-based vision perception technology, is set to revolutionize the ADAS landscape at CES® 2024 in Las Vegas. Located at the Westgate Hotel Hospitality Suite #28115, the showcase will be open to industry professionals from January 9th to 12th. At the…

A Brief Introduction to Millimeter Wave Radar Sensing

This blog post was originally published by D3. It is reprinted here with the permission of D3. We’ve found that a lot of innovators are considering including millimeter wave radar in their solutions, but some are not familiar with the sensing capabilities of radar when compared with vision, lidar, or ultrasonics. I want to review some…

Ambarella Unveils Full Software Stack for Autonomous and Semi-autonomous Driving, Optimized for its CV3-AD Central AI Domain Controller Family

- Neural network processing-based stack for perception, sensor fusion and path planning
- Chip family and stack co-developed for superior AI performance per watt
- Accelerates OEM development; offers scalability for L2+ and higher autonomy levels
- Realtime HD map generation from perception and SD maps eliminates need for stored HD maps

SANTA CLARA, Calif., Dec. 12, 2023 — …

Regulations: Drivers for Mandating Driver Monitoring Systems

Driver monitoring systems (DMS) have gained considerable momentum, driven by rising SAE autonomous driving levels and by regulatory frameworks in key regions such as the USA, Europe, China and Japan. While DMS is not a novel concept, traditional DMS techniques typically rely on passive data sourced from advanced driver-assistance systems (ADAS), such as driving duration…

Nextchip Demonstration of Driver and Occupant Monitoring On the Apache5 SoC with emotion3D Software

Barry Fitzgerald, local representative for Nextchip, demonstrates the company’s latest edge AI and vision technologies and products at the December 2023 Edge AI and Vision Alliance Forum. Specifically, Fitzgerald demonstrates a driver and broader occupant monitoring system (DMS/OMS) running on the company’s Apache5 SoC in conjunction with algorithms from emotion3D. This demonstration leverages a 3Mpixel…

Cars On the Lookout: Image Sensors and Radars in Vehicles

Could cars with perfect night vision one day be a possibility? With heightened awareness of their surroundings in low-visibility conditions, thanks to short wave infrared (SWIR) image sensors, vehicles are on the road to visualizing beyond what the human eye can see. The powerful combination of new SWIR sensors with radar technology…

High Performance Computing for Automotive

Computers on wheels. That’s how people currently see cars. Practically everything that happens in a vehicle is being monitored and actuated by a microcontroller, from opening windows to calculating the optimal fuel-air mixture for the current torque demand. But the surface has only just been scratched in terms of how much computing power is making…

The Global In-cabin Sensing Market Will Exceed US$8.5 Billion by 2034

For more information, visit https://www.idtechex.com/en/research-report/in-cabin-sensing-2024-2034-technologies-opportunities-and-markets/972.

Driver Monitoring Systems (DMS), Occupant Monitoring Systems (OMS), Interior Monitoring, Infrared Cameras, Time-of-Flight Cameras, Radars, Capacitive Sensors, Torque Sensors, ECG, EEG, Eye Movement Tracking

As autonomous driving capabilities, specifically the SAE autonomous driving level, continue to advance, and with the imminent implementation of regional regulations such as Euro NCAP…

A Detailed Look at Using AI in Embedded Smart Cameras

This blog post was originally published at e-con Systems’ website. It is reprinted here with the permission of e-con Systems. Combining artificial intelligence (AI) with embedded cameras has paved the way for visual systems that can intelligently see and interact with their surroundings. Discover the role of AI in embedded camera applications, the benefits, and…

The Future of Automotive Radar: Miniaturizing Size and Maximizing Performance

Radar has been one of the most significant additions to vehicles in the past two decades. It provides luxury advanced driver assistance system (ADAS) features like adaptive cruise control (ACC), as well as critical safety features like automatic emergency braking and blind spot detection. It has grown from an expensive accessory feature on the most…