Automotive Applications for Embedded Vision

Vision products in automotive applications can make us better and safer drivers

Vision products in automotive applications can enhance the driving experience, making us better and safer drivers by monitoring both the driver and the road.

Driver monitoring applications use computer vision to ensure that the driver remains alert and awake while operating the vehicle. These systems can monitor head movement and body language for signs that the driver is drowsy and therefore poses a threat to others on the road. They can also watch for distracted behaviors such as texting or eating, and respond with a friendly reminder that encourages the driver to focus on the road instead.

In addition to monitoring activity inside the vehicle, exterior applications such as lane departure warning systems can apply lane detection algorithms to video in order to recognize lane markings and road edges and estimate the position of the car within the lane. The driver can then be warned in cases of unintentional lane departure. Solutions also exist that read roadside warning signs and alert the driver if they are not heeded, as do solutions for collision mitigation, blind spot detection, park and reverse assist, self-parking vehicles and event-data recording.
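The warning step of a lane departure system can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular product works: the `LaneObservation` structure, the `lateral_offset` and `should_warn` functions, the vehicle width, the warning margin, and the use of the turn signal as a stand-in for "intentional" lane changes are all hypothetical. The lane detection itself (finding the markings in video) is assumed to have already run and produced distances in meters.

```python
from dataclasses import dataclass


@dataclass
class LaneObservation:
    """Hypothetical per-frame output of a lane detector, in meters.

    Distances are measured sideways from the vehicle centerline to each
    lane marking: the left marking is negative, the right is positive.
    """
    left_marking_m: float
    right_marking_m: float


def lateral_offset(obs: LaneObservation) -> float:
    """Vehicle position relative to the lane center (positive = right of center)."""
    lane_center = (obs.left_marking_m + obs.right_marking_m) / 2.0
    return -lane_center


def should_warn(
    obs: LaneObservation,
    turn_signal_on: bool,
    vehicle_width_m: float = 1.8,  # assumed vehicle width
    margin_m: float = 0.1,         # assumed warning margin
) -> bool:
    """Return True when the vehicle is about to cross a marking unintentionally."""
    # Assumption: an active turn signal means the lane change is intentional.
    if turn_signal_on:
        return False
    half_width = vehicle_width_m / 2.0
    # Remaining gap between each side of the vehicle and its lane marking.
    left_gap = -obs.left_marking_m - half_width
    right_gap = obs.right_marking_m - half_width
    return min(left_gap, right_gap) < margin_m


if __name__ == "__main__":
    centered = LaneObservation(left_marking_m=-1.75, right_marking_m=1.75)
    drifting = LaneObservation(left_marking_m=-2.55, right_marking_m=0.95)
    print(should_warn(centered, turn_signal_on=False))
    print(should_warn(drifting, turn_signal_on=False))
    print(should_warn(drifting, turn_signal_on=True))
```

A production system would also have to handle missing or low-confidence markings and would typically consider how quickly the vehicle is approaching the marking, neither of which this sketch attempts.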

Eventually, this technology will lead to cars with self-driving capability; Google, for example, is already testing prototypes. However, many automotive industry experts believe that the goal of vision in vehicles is not so much to eliminate the driving experience as to make it safer, at least in the near term.

VeriSilicon’s 2nd-generation Automotive ISP Series IP Has Passed ISO 26262 ASIL B and ASIL D Certifications

ISP8200-FS series IP meets the evolving demands of the rapidly expanding automotive market

Las Vegas, USA, January 8, 2024 – VeriSilicon (688521.SH) today announced its Image Signal Processor (ISP) IP ISP8200-FS and ISP8200L-FS, designed for high-performance automotive applications, have been certified compliant with the ISO 26262 automotive functional safety standard, achieving ASIL B certification for random failures

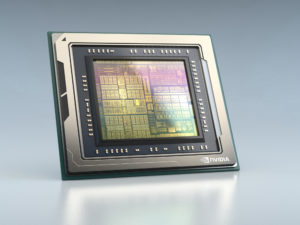

Wave of EV Makers Choose NVIDIA DRIVE for Automated Driving

Li Auto Selects DRIVE Thor for Next-Gen EVs; GWM, ZEEKR and Xiaomi Develop AI-Driven Cars Powered by NVIDIA DRIVE Orin January 8, 2024 — CES — NVIDIA today announced that Li Auto, a pioneer in extended-range electric vehicles (EVs), has selected the NVIDIA DRIVE Thor™ centralized car computer to power its next-generation fleets. NVIDIA also announced

TI Debuts New Automotive Chips at CES, Enabling Automakers to Create Smarter, Safer Vehicles

News highlights: The industry’s first single-chip radar sensor designed for satellite architectures can increase vehicle sensing ranges beyond 200 meters and enable more accurate advanced driver assistance systems (ADAS) decision-making. New driver chips support safe and efficient control of power flow in battery management or other powertrain systems with functional safety compliance and built-in diagnostics

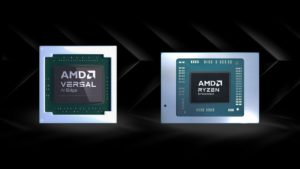

AMD Reshapes Automotive Industry with Advanced AI Engines and Elevated In-Vehicle Experiences at CES 2024

New Versal AI Edge XA adaptive SoCs and Ryzen Embedded V2000A Series processors underscore AMD leadership for powering next-generation automotive systems Participating auto ecosystem partners at CES include: BlackBerry, Cognata, ECARX, Hesai, Luxoft, QNX, QT, Robosense, SEYOND, Tanway, Visteon and XYLON SANTA CLARA, Calif., Jan. 04, 2024 (GLOBE NEWSWIRE) — Today, AMD (NASDAQ: AMD) announced

Imagination and MulticoreWare Collaboration Accelerates Automotive Compute Workloads

San Jose, California – 4 January 2024 – MulticoreWare Inc and Imagination Technologies announce that they have enabled GPU compute on the Texas Instruments TDA4VM processor, unleashing around 50 GFLOPS of extra compute and demonstrating a massive improvement in the performance of common workloads used for autonomous driving and advanced driver assistance systems (ADAS). The

Kodiak Robotics Selects Ambarella AI Domain Controller SoC For Next-Generation Autonomous Trucks

Kodiak Moves AI Perception and Machine Learning Systems to Ambarella’s CV3-AD685 AI SoC for Higher AI Efficiency and Performance in Long-Haul Trucking Fleets SANTA CLARA, Calif. and MOUNTAIN VIEW, Calif., Jan. 2, 2024 — Ambarella, Inc. (NASDAQ: AMBA), an edge AI semiconductor company, and Kodiak Robotics, Inc., a leading autonomous vehicle company focused on trucking

ADAS in 2024: Don’t Expect Clarity on Autonomy and Safety

Will OEMs continue to treat the world’s most valuable car maker, Tesla, as a role model – beyond electrification and over-the-air (OTA) updates? What’s at stake: If 2023 marked the public’s disillusionment with robotaxis, 2024 augurs a big shift toward advanced driver assistance systems (ADAS) crammed with automated features. Expect the auto industry to play

Future Automotive Technologies Represent a US$1.6 Trillion Opportunity in 2034

For more information, visit https://www.idtechex.com/en/research-report/future-automotive-technologies-2024-2034-applications-megatrends-forecasts/979. The automotive megatrends that will define the future of vehicles: autonomous driving, mobility as a service, electric vehicles, connected cars, software-defined vehicles, everything-as-a-service, and more. Autonomous driving technologies and vehicle electrification are megatrends that are reshaping the automotive industry. In addition to these, connected and software defined vehicles have recently

What’s In the Box? In-cabin Monitoring Systems At a Glance

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. Twice a month, Yole SystemPlus analysts share noteworthy points from their automotive Teardown Tracks. Today, they take us to the heart of imaging-based occupant and driver monitoring systems (OMS and DMS). Already

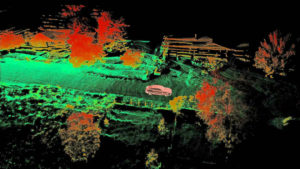

What’s In the Box? Automotive LiDARs At a Glance

This market research report was originally published at the Yole Group’s website. It is reprinted here with the permission of the Yole Group. Twice a month, Yole SystemPlus analysts share the noteworthy points from their automotive Teardown Tracks. Today, Benjamin Pussat, Technology & Cost Analyst at Yole SystemPlus, takes us to the heart of LiDAR